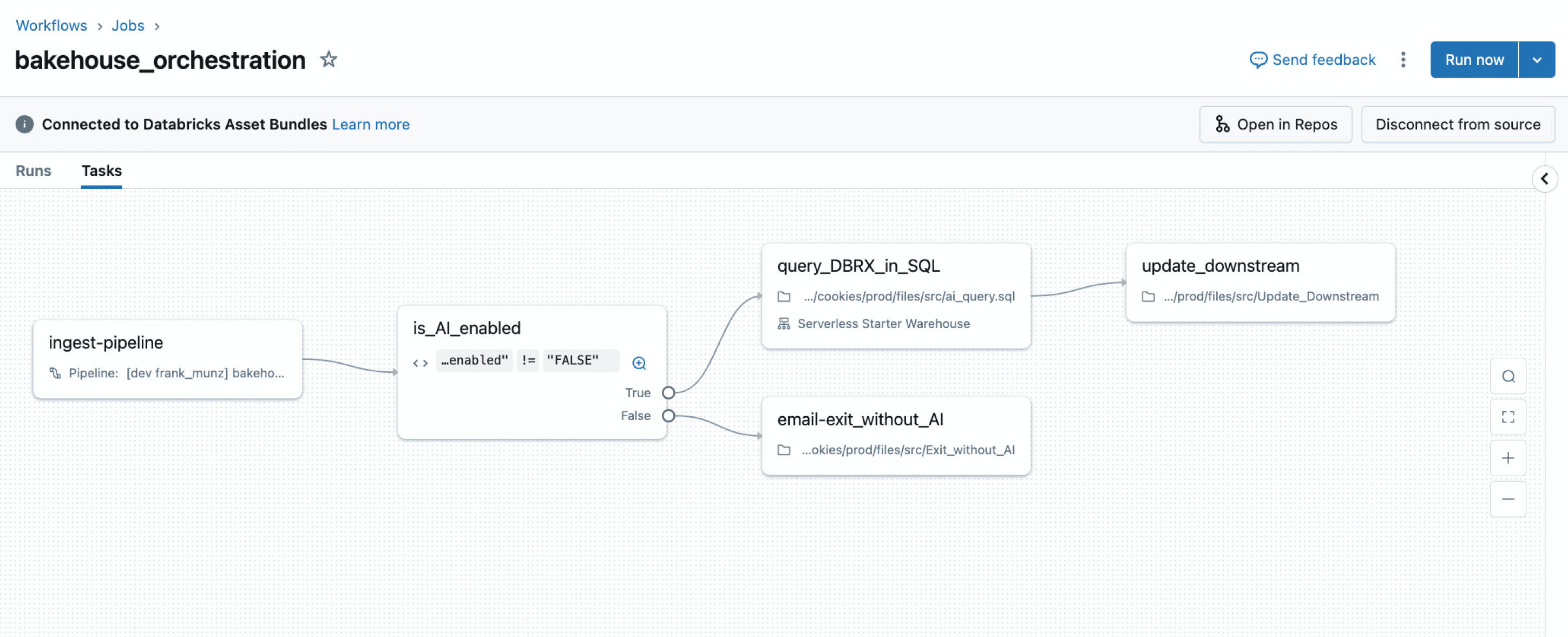

Natively managed orchestration for any workload

Unified orchestration of data, analytics, and AI on the Data Intelligence Platform

CUSTOMERS USING LAKEFLOW JOBS

Orchestrate any data, analytics, or AI workflow

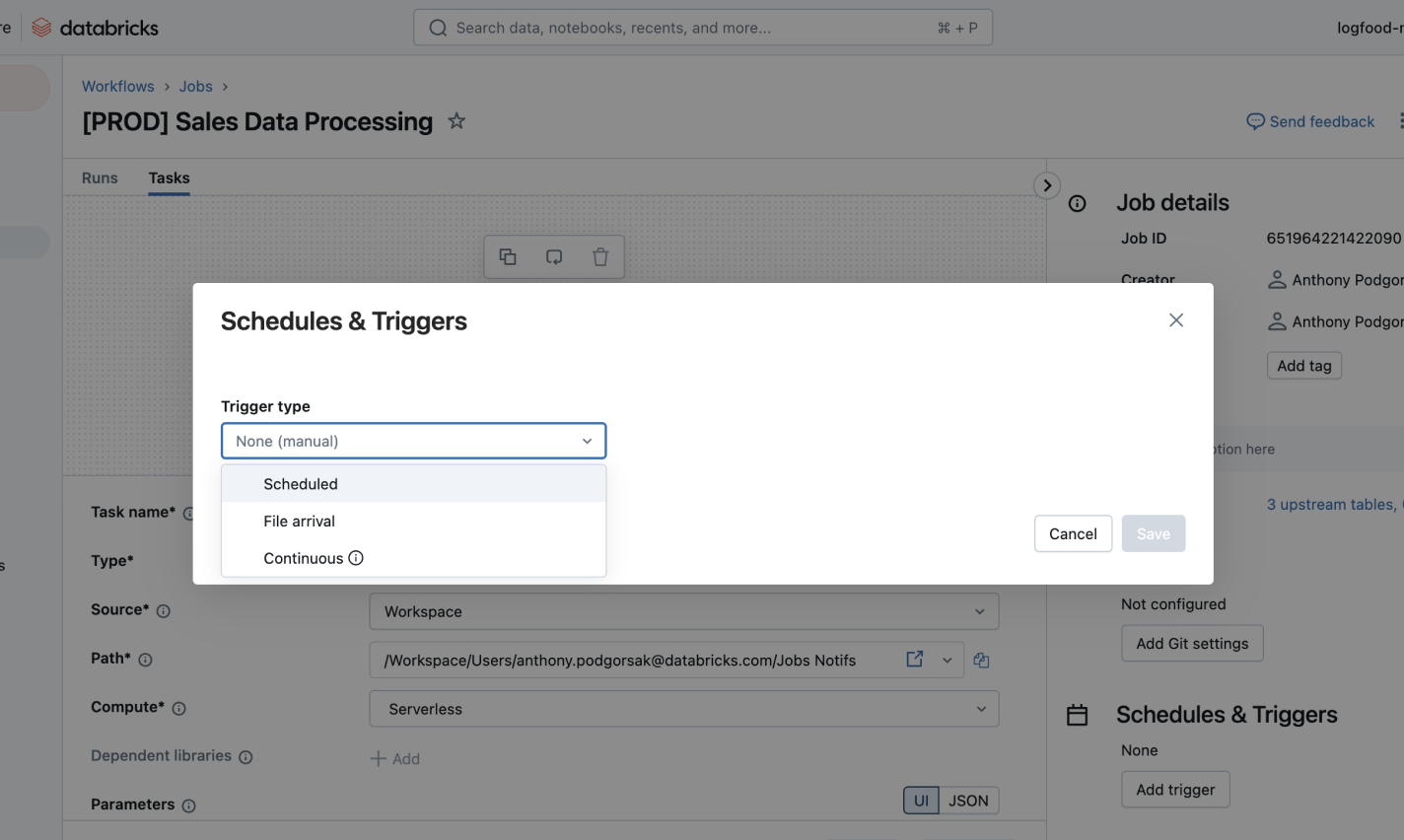

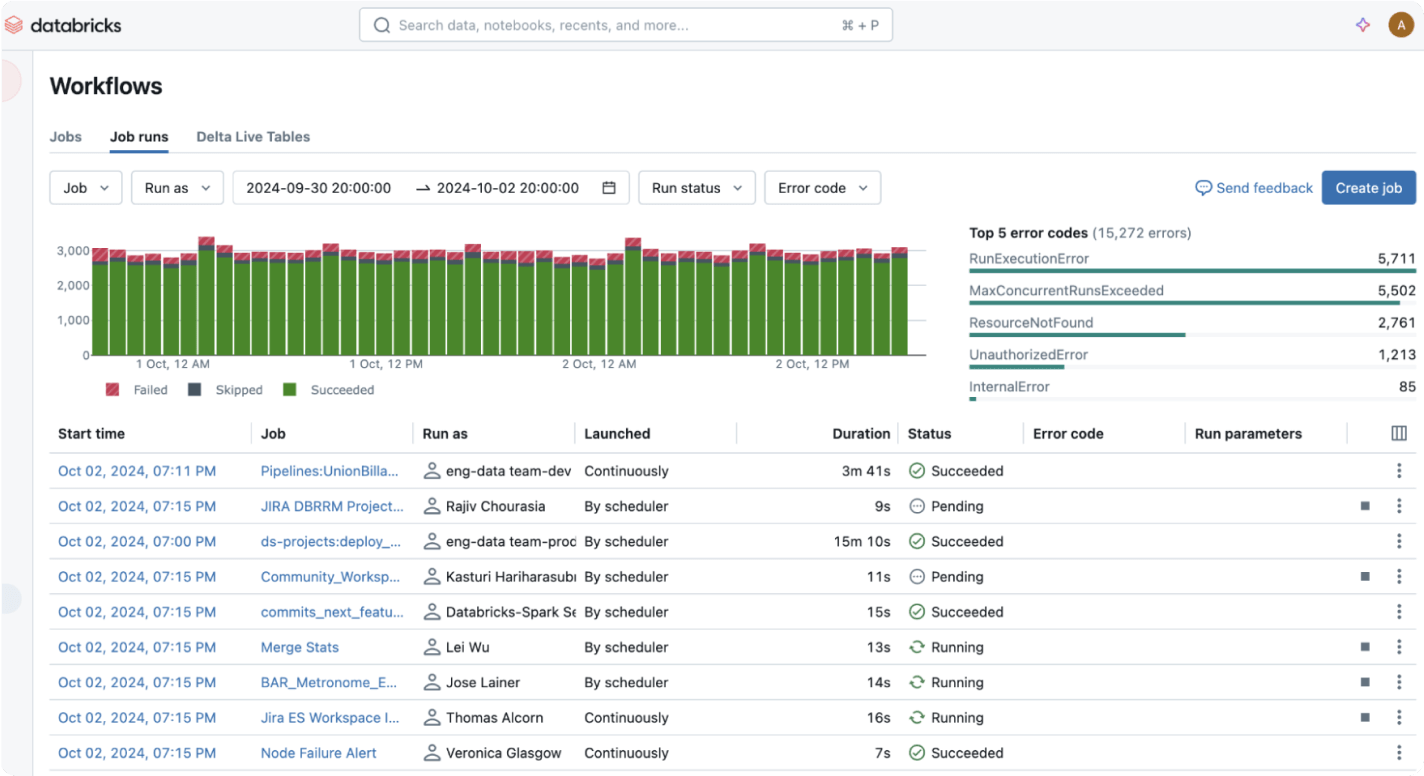

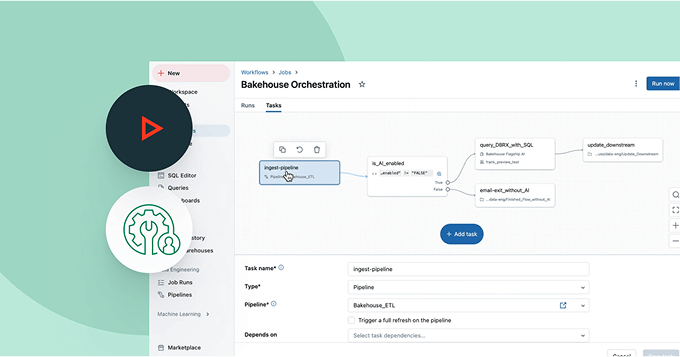

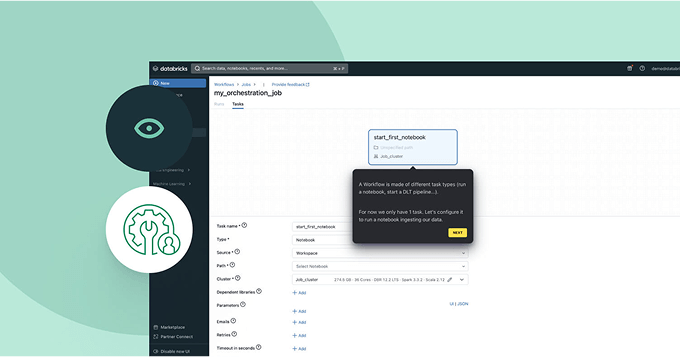

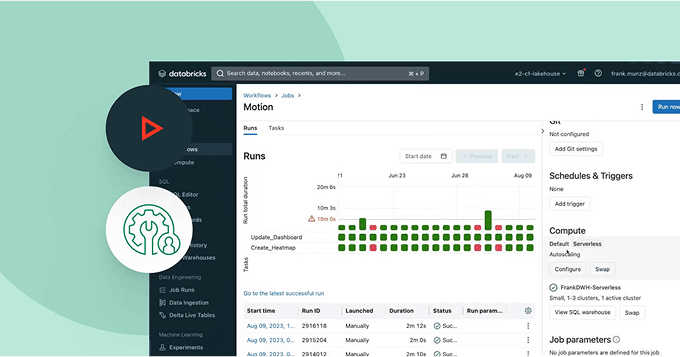

Streamline your pipelines with a managed orchestration service fully integrated with your data platform. Easily define, monitor, and automate ETL, analytics, and ML workflows with reliability and deep observability.

Simplify and orchestrate your data pipelines

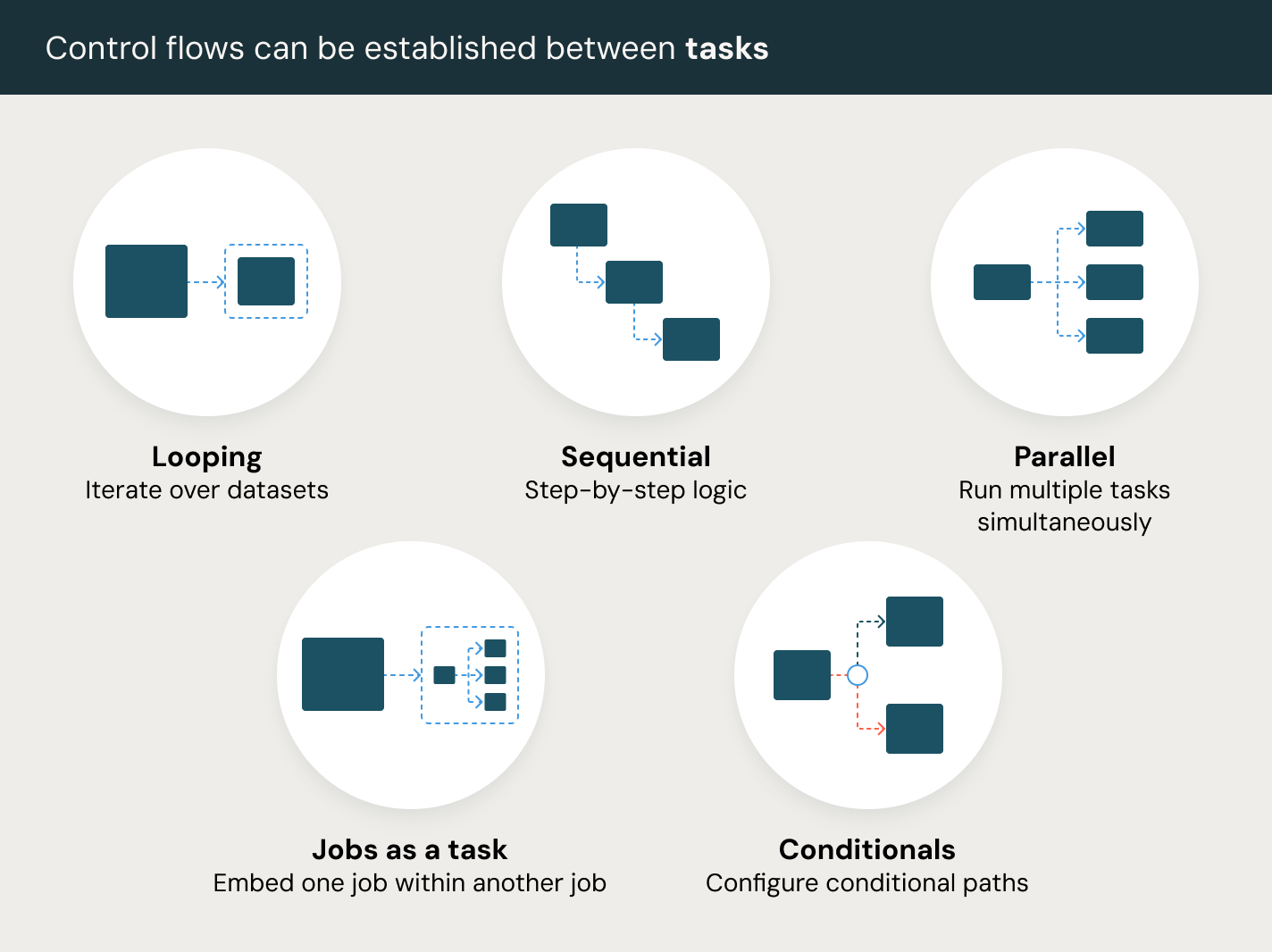

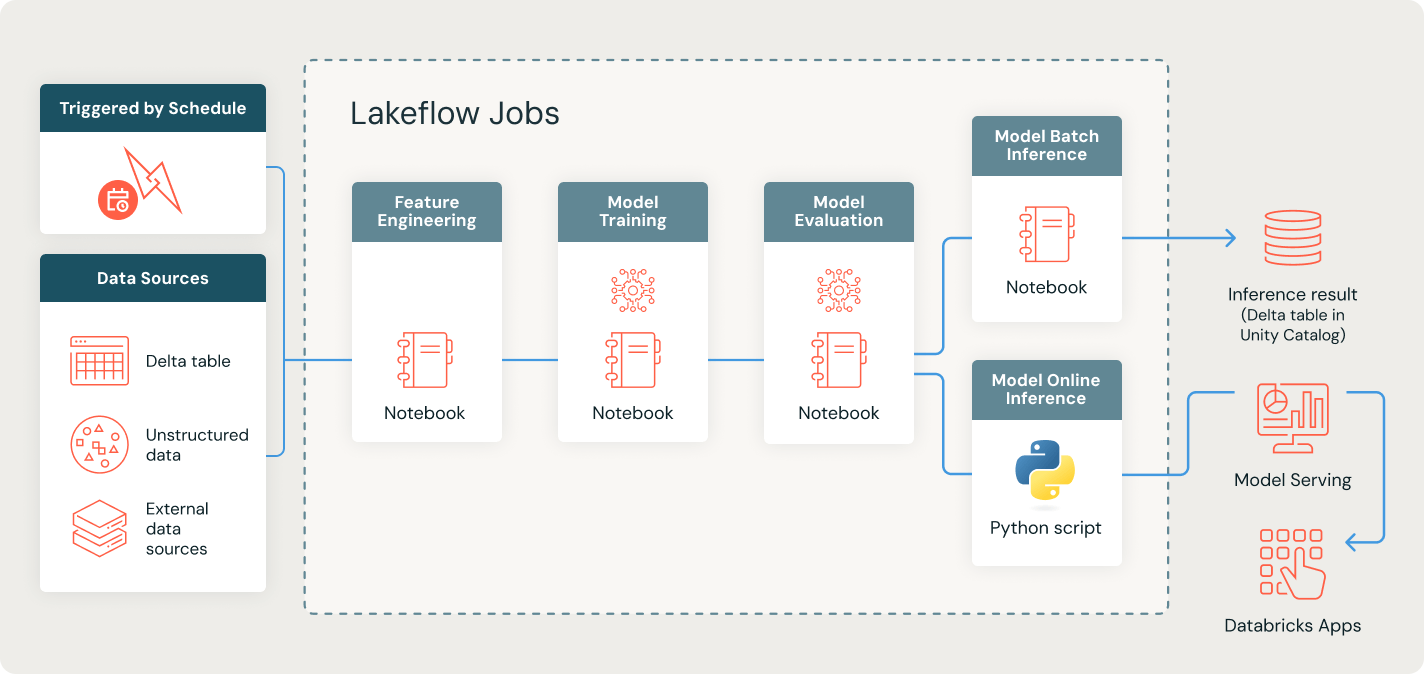

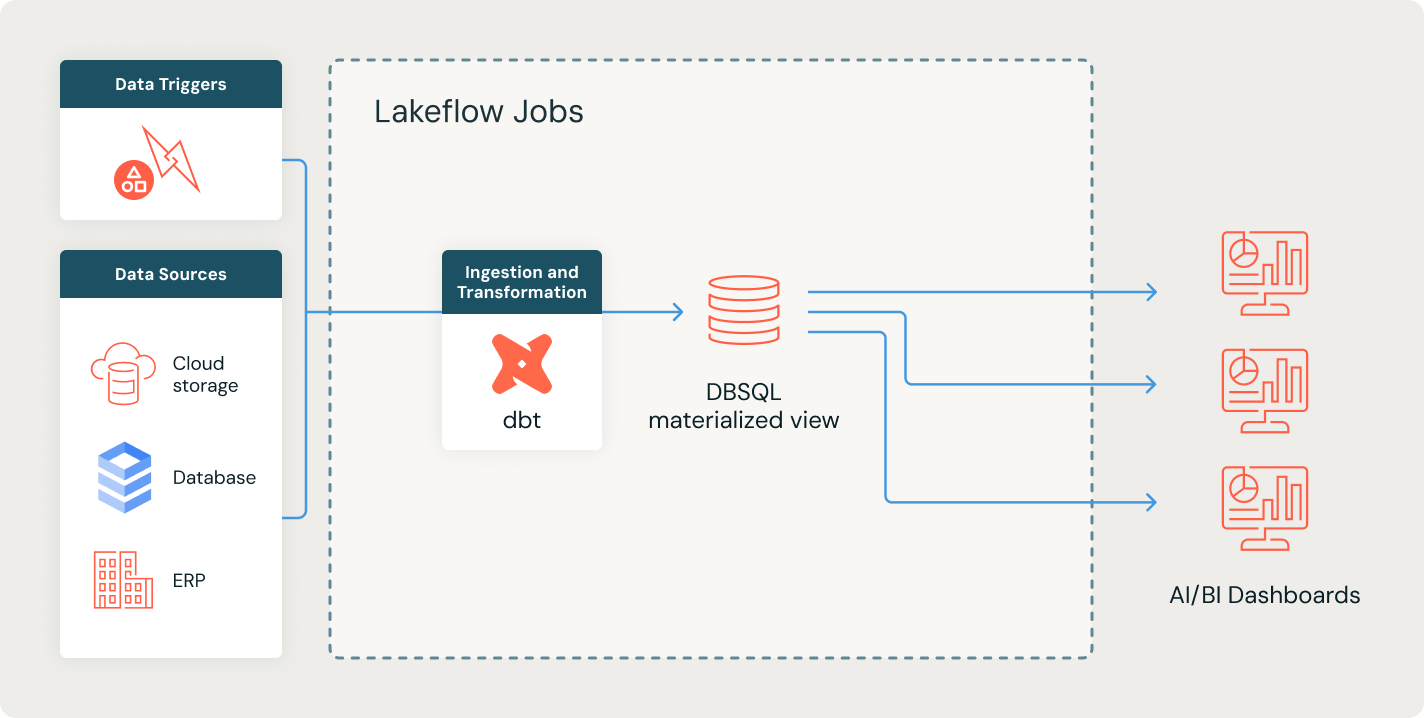

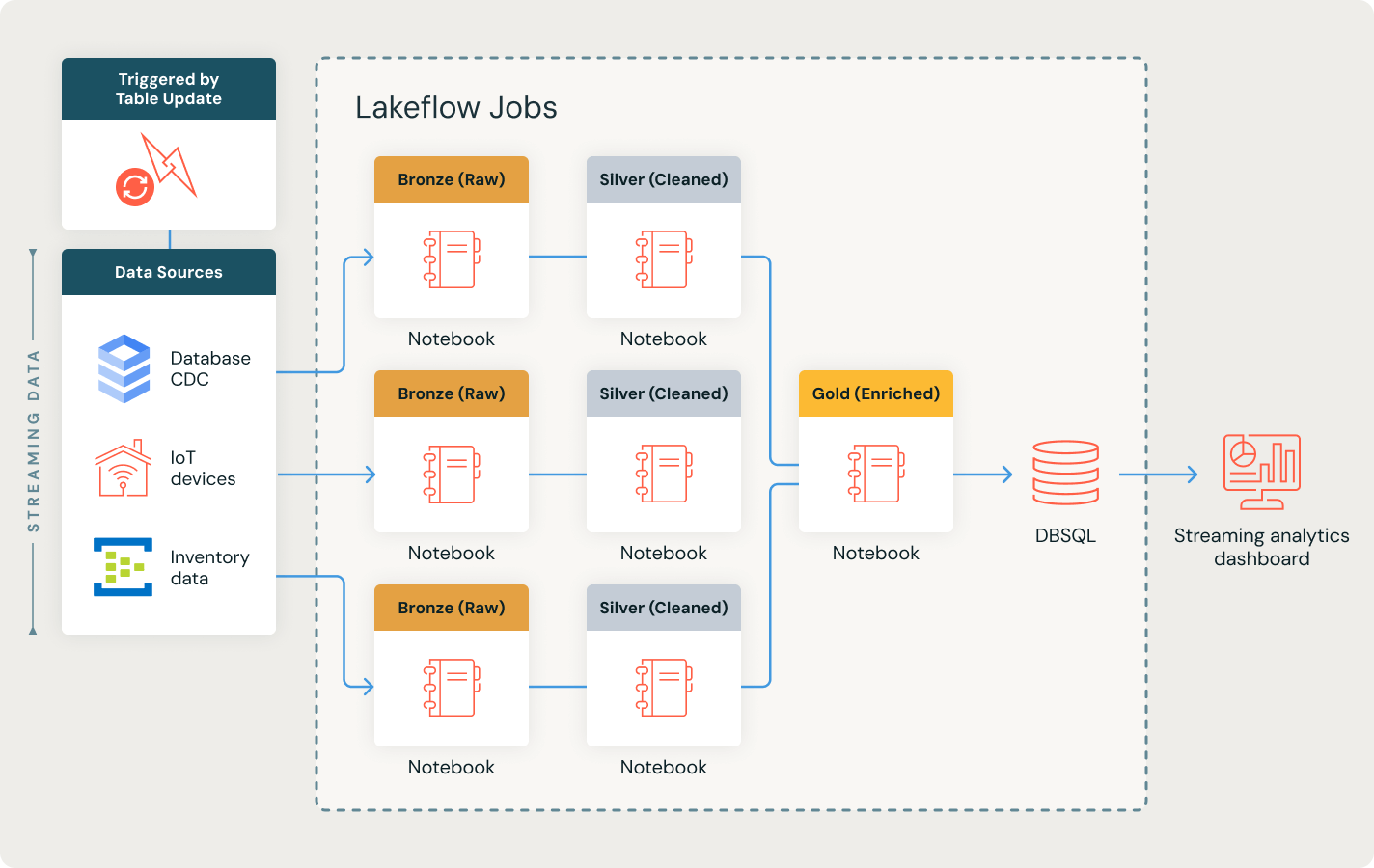

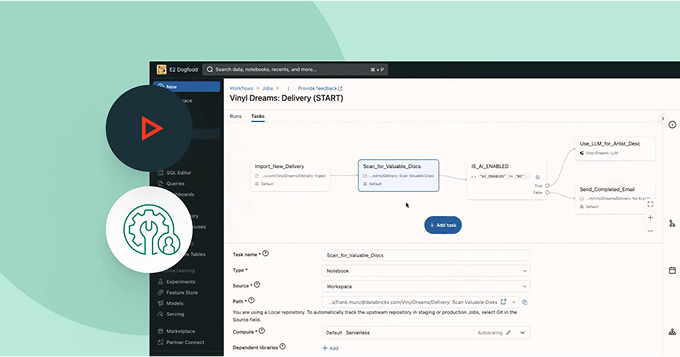

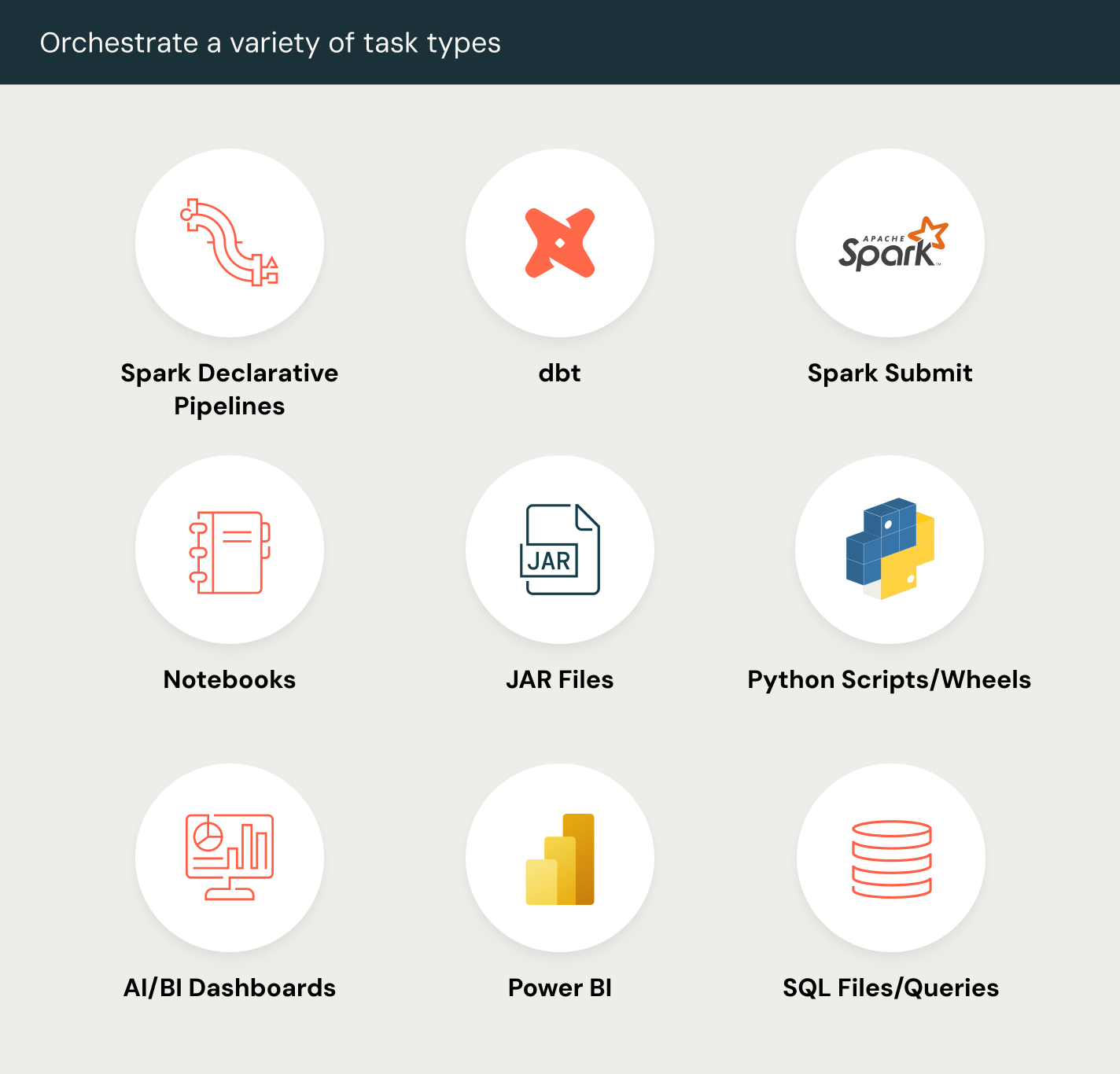

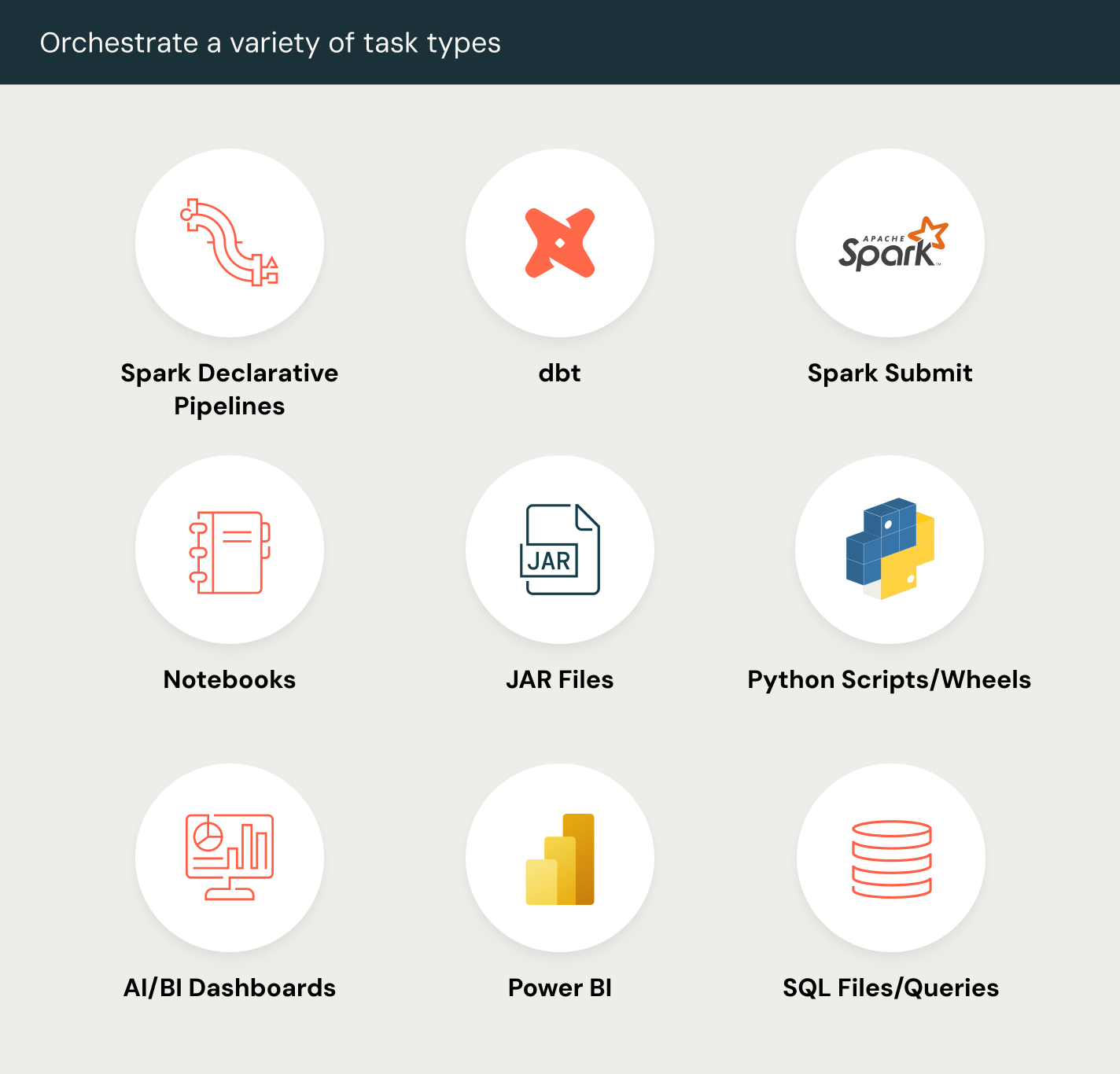

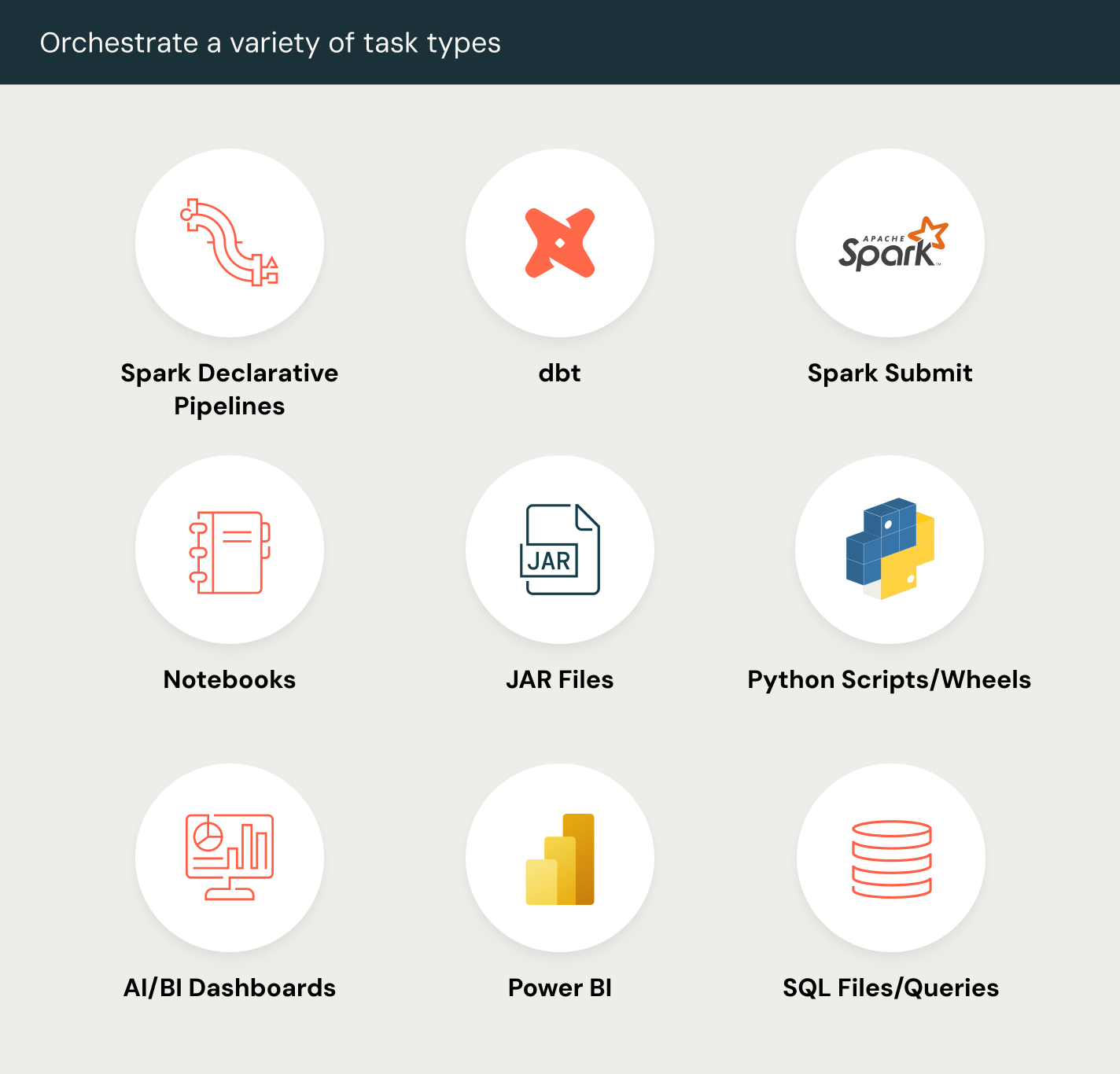

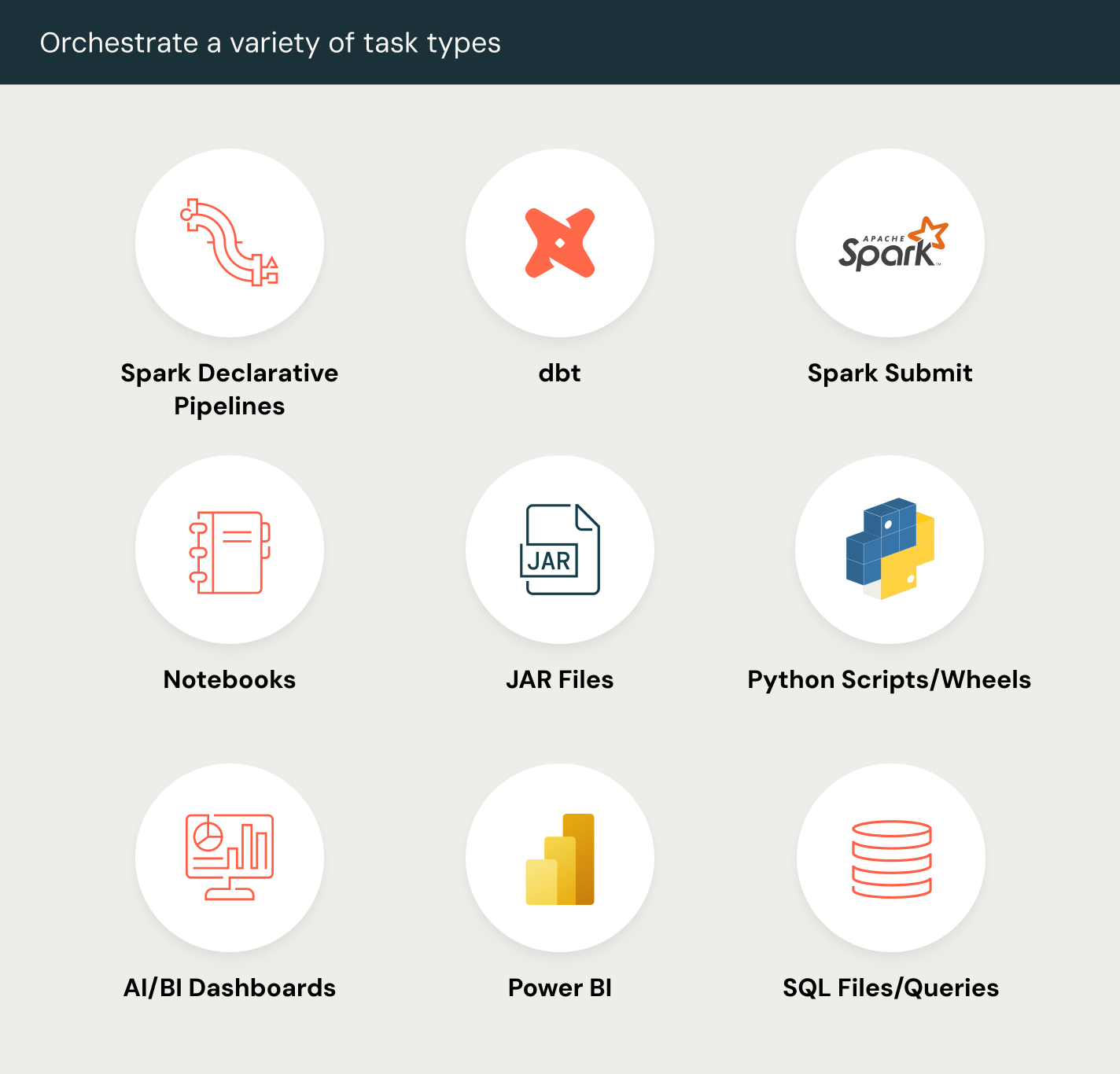

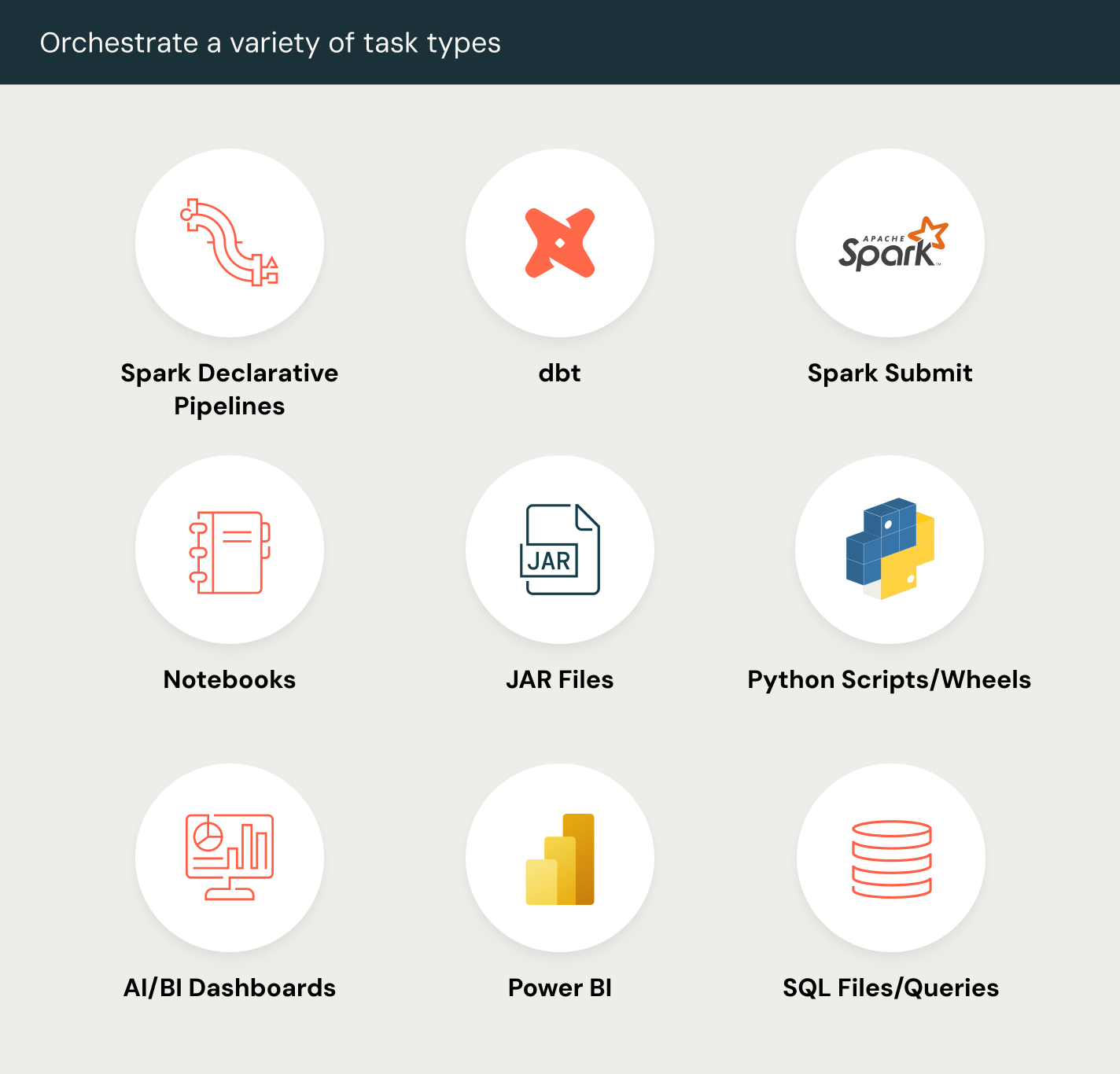

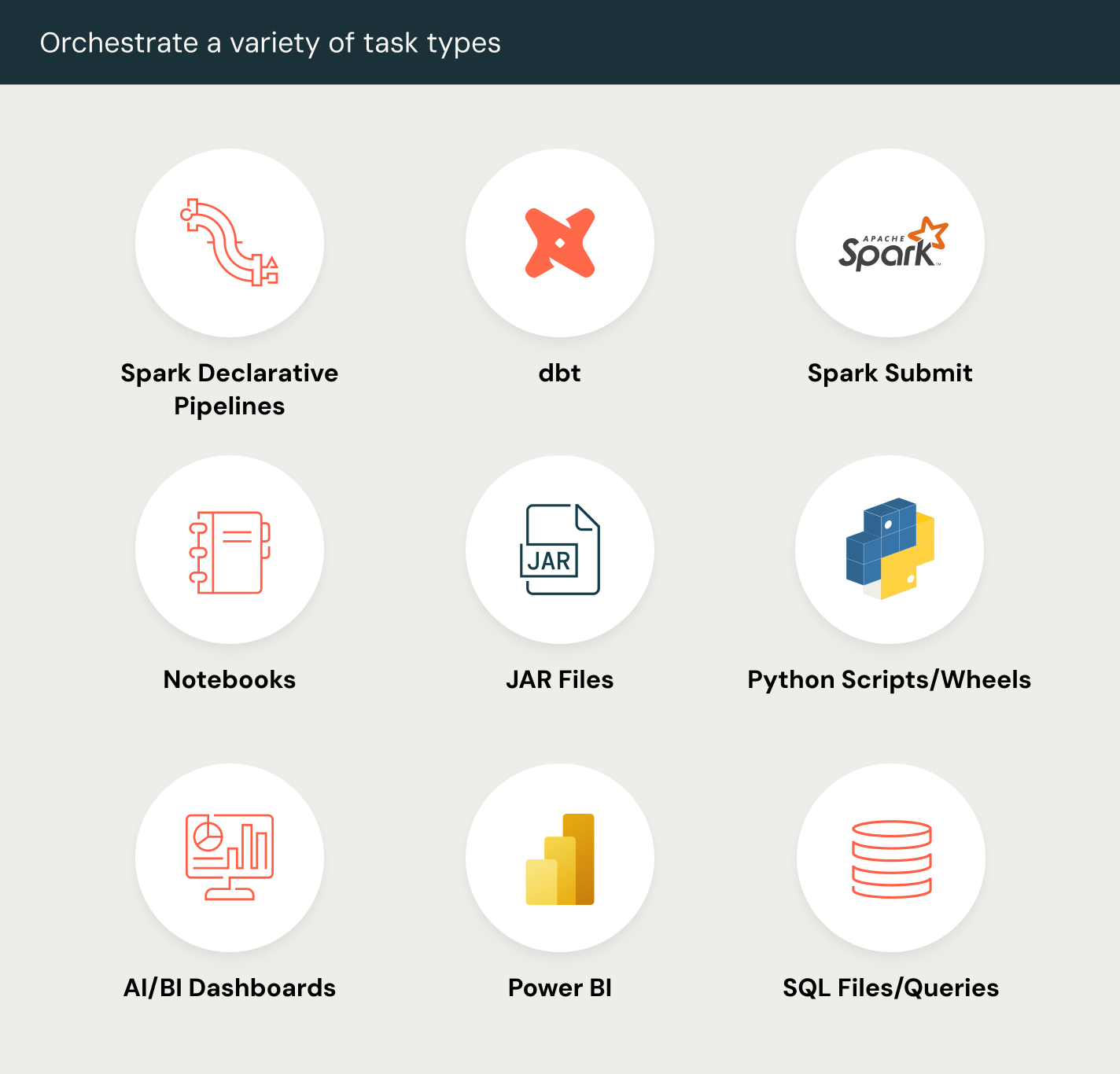

Easily define, manage, and monitor jobs across ETL, data analytics, and AI pipelines. Unlock seamless orchestration with versatile task types, including notebooks, SQL, Python, and more, to power any data workflow.
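To make "versatile task types" concrete, here is a minimal sketch. It assumes the Databricks Jobs REST API 2.1 (`POST /api/2.1/jobs/create`) and only builds a request body for a three-task job: a notebook task, then a Python script task, then a SQL file task, chained with `depends_on`. It also checks that the dependency graph has a valid run order. The code sends nothing. The job name, cluster ID, warehouse ID, and workspace paths are hypothetical placeholders. `run_order` is an illustrative helper (Kahn's topological sort), not part of any Databricks SDK, and it does not model how the service actually schedules tasks.

```python
import json
from collections import deque

# Hypothetical placeholders: substitute real IDs and paths from your workspace.
CLUSTER_ID = "0000-000000-example"     # hypothetical existing cluster ID
WAREHOUSE_ID = "example-warehouse-id"  # hypothetical SQL warehouse ID


def build_job_payload() -> dict:
    """Assumed Jobs API 2.1 create body: notebook -> Python file -> SQL file."""
    return {
        "name": "daily-etl-example",  # hypothetical job name
        "tasks": [
            {
                "task_key": "ingest",
                "notebook_task": {"notebook_path": "/Workspace/etl/ingest"},
                "existing_cluster_id": CLUSTER_ID,
            },
            {
                "task_key": "transform",
                "depends_on": [{"task_key": "ingest"}],
                "spark_python_task": {"python_file": "/Workspace/etl/transform.py"},
                "existing_cluster_id": CLUSTER_ID,
            },
            {
                "task_key": "report",
                "depends_on": [{"task_key": "transform"}],
                # SQL tasks run on a SQL warehouse rather than a cluster.
                "sql_task": {
                    "warehouse_id": WAREHOUSE_ID,
                    "file": {"path": "/Workspace/etl/report.sql"},
                },
            },
        ],
        "schedule": {
            "quartz_cron_expression": "0 0 2 * * ?",  # every day at 02:00
            "timezone_id": "UTC",
        },
    }


def run_order(payload: dict) -> list:
    """Topologically sort task keys by depends_on (Kahn's algorithm).

    Raises ValueError on an unknown dependency or a dependency cycle.
    """
    deps = {
        t["task_key"]: [d["task_key"] for d in t.get("depends_on", [])]
        for t in payload["tasks"]
    }
    indegree = {key: len(parents) for key, parents in deps.items()}
    children = {key: [] for key in deps}
    for key, parents in deps.items():
        for parent in parents:
            if parent not in children:
                raise ValueError(f"unknown dependency: {parent}")
            children[parent].append(key)

    ready = deque(key for key, n in indegree.items() if n == 0)
    order = []
    while ready:
        key = ready.popleft()
        order.append(key)
        for child in children[key]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    if len(order) != len(deps):
        raise ValueError("cycle detected in depends_on")
    return order


if __name__ == "__main__":
    payload = build_job_payload()
    print(json.dumps(payload, indent=2))
    print(run_order(payload))  # ['ingest', 'transform', 'report']
```

To create the job for real, you would send this body to your workspace with an access token, or use the Databricks SDK or CLI. Check the field names against the current Jobs API reference before relying on them.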

More features

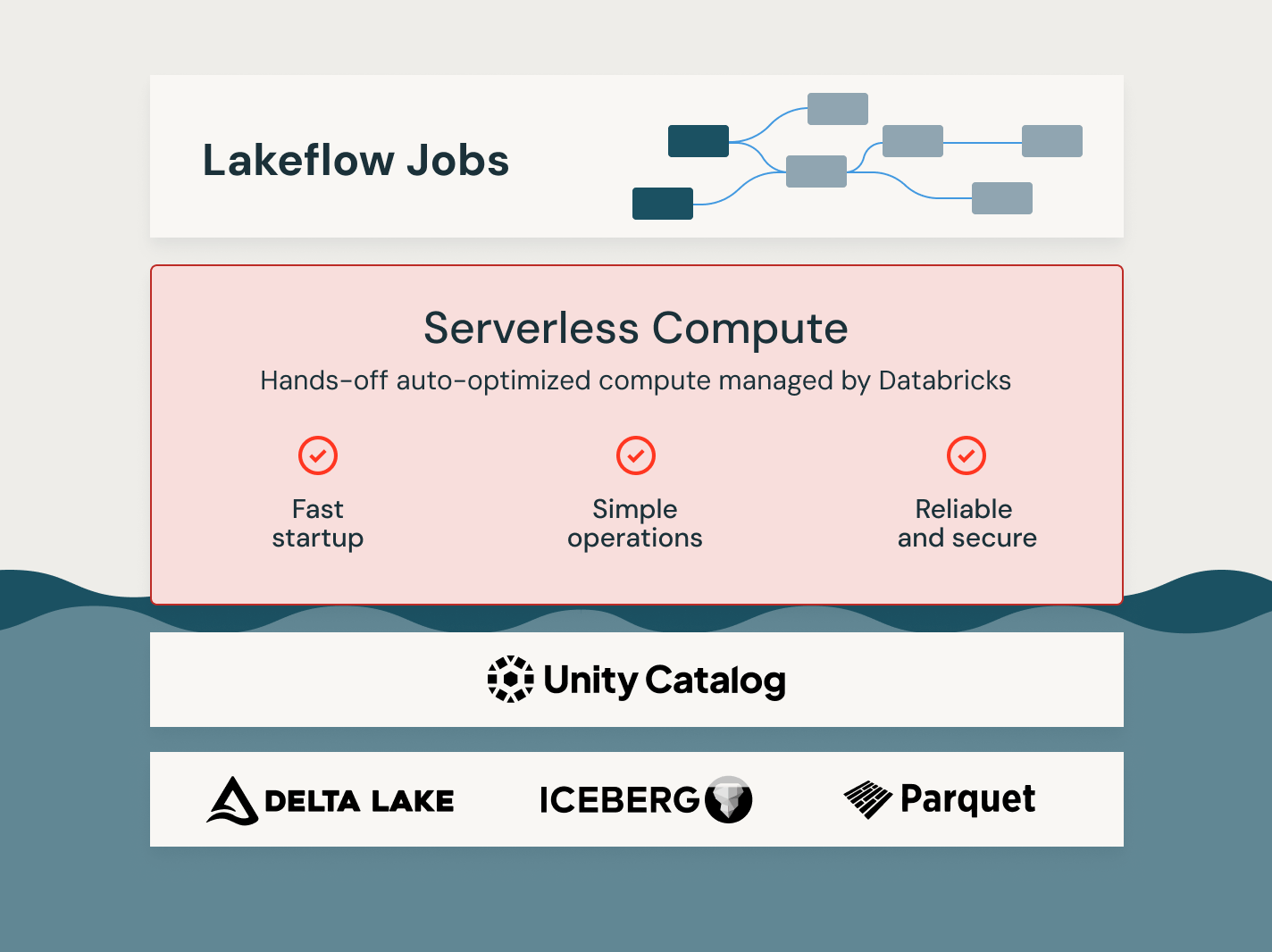

Unlock the power of Lakeflow Jobs

Automate data orchestration for extract, transform, and load (ETL) processes

Automate the ingestion and transformation of data from multiple sources into Databricks, ensuring accurate, consistent data preparation for any workload.

Explore Lakeflow Jobs demos

Discover more

Learn more about how the Databricks Data Intelligence Platform empowers your data teams across all of your data and AI workloads.

LakeFlow Connect

Efficient data ingestion connectors for any source, combined with native integration with the Data Intelligence Platform, provide easy access to analytics and AI with unified governance.

Spark Declarative Pipelines

Provides simplified, declarative pipelines for batch and real-time data processing, delivering automated, reliable transformations for analytics and AI/ML tasks.

Databricks Assistant

Use AI-powered assistance to simplify coding, debug workflows, and optimize queries in natural language, for faster and more efficient data engineering and analytics.

Lakehouse storage

Unify the data in your lakehouse, across all formats and types, for all of your analytics and AI workloads.

Unity Catalog

Effortlessly govern all of your data assets with the industry's only unified and open governance solution for data and AI, integrated with the Databricks Data Intelligence Platform.

Data Intelligence Platform

Discover how the Databricks Data Intelligence Platform powers your data and AI workloads.

Take the next step

Lakeflow Jobs FAQ

Ready to become a data + AI company?

Take the first steps in transforming your data