Customers using Lakeflow Jobs

Orchestrate any data, analytics, or AI workflow

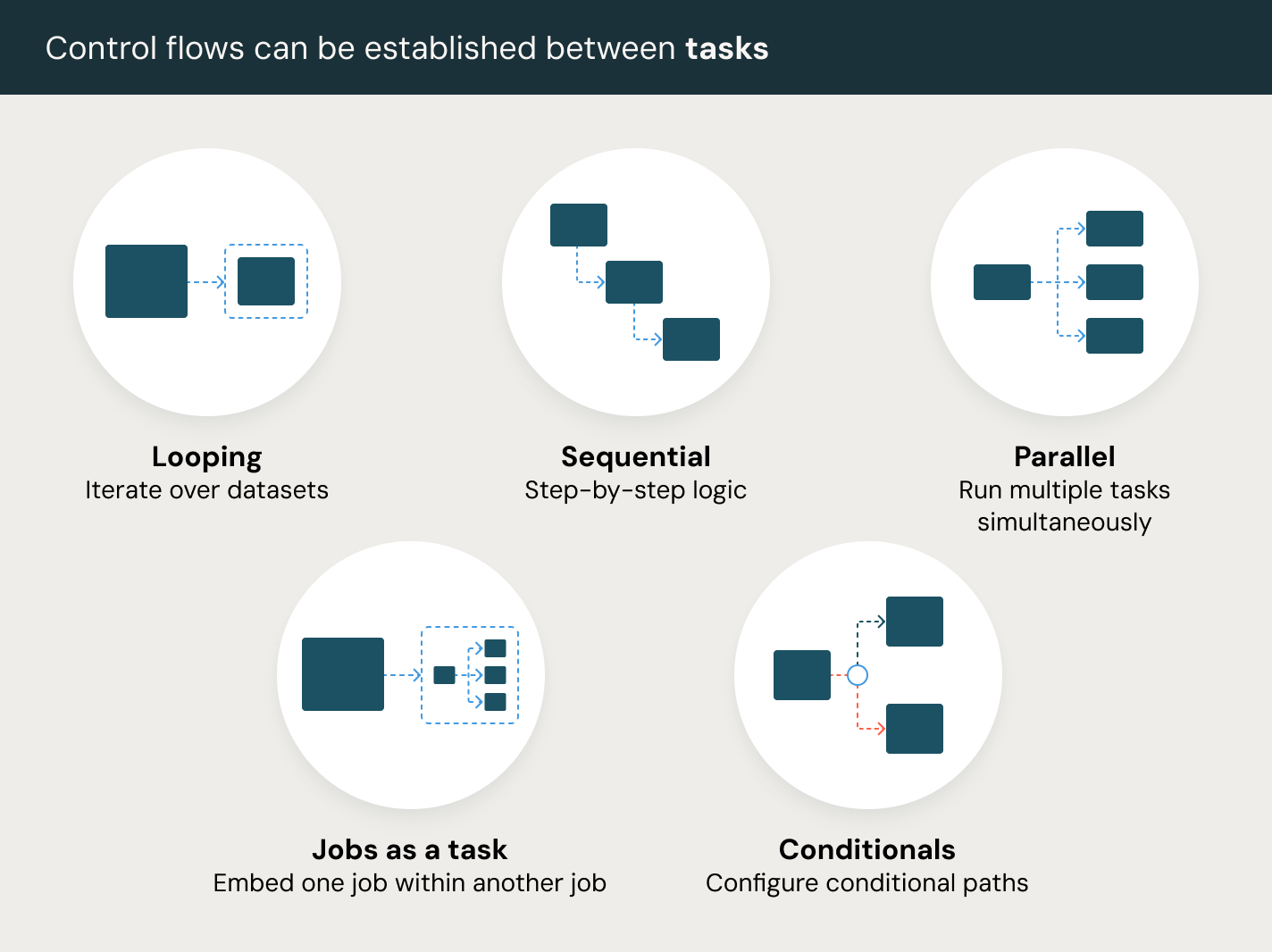

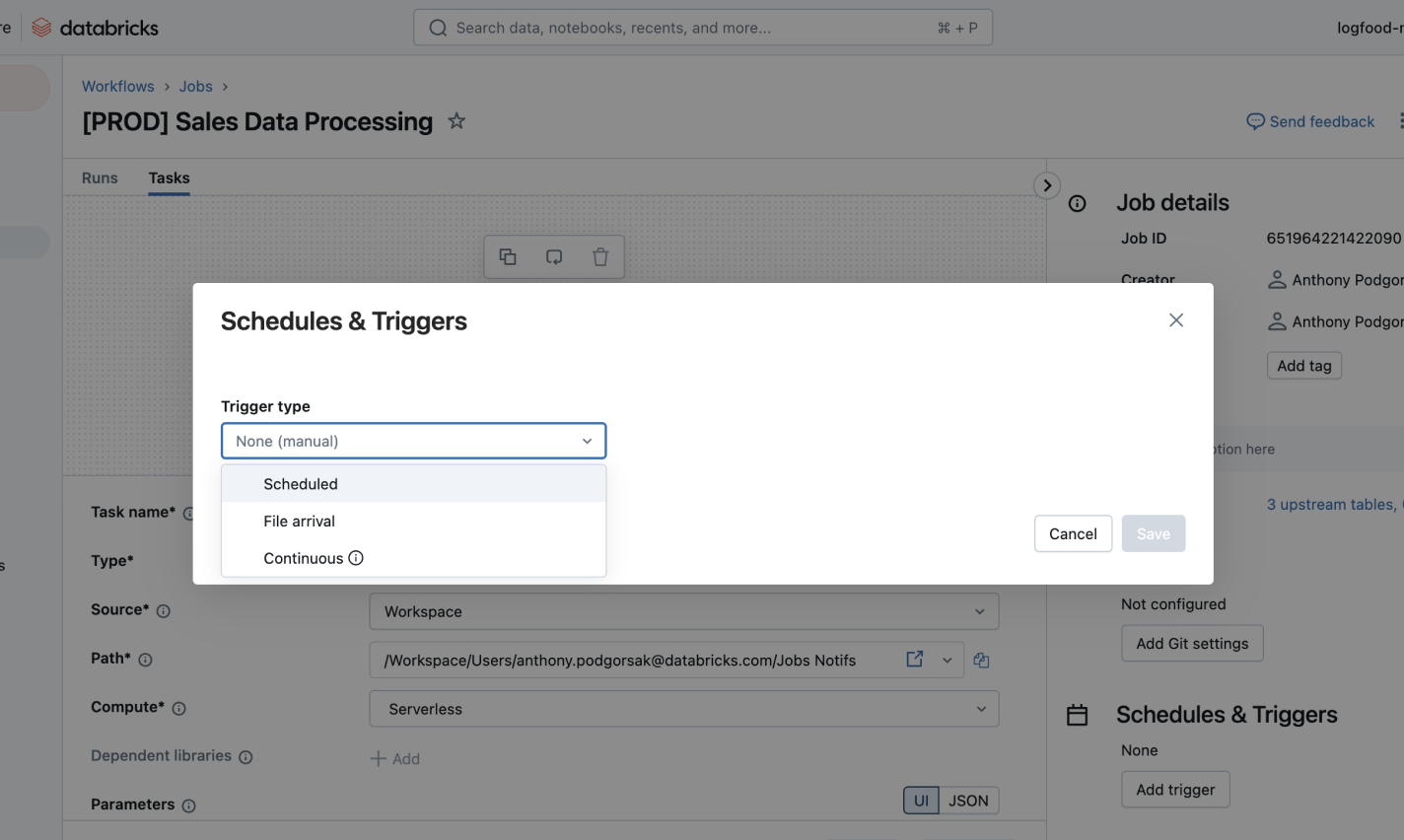

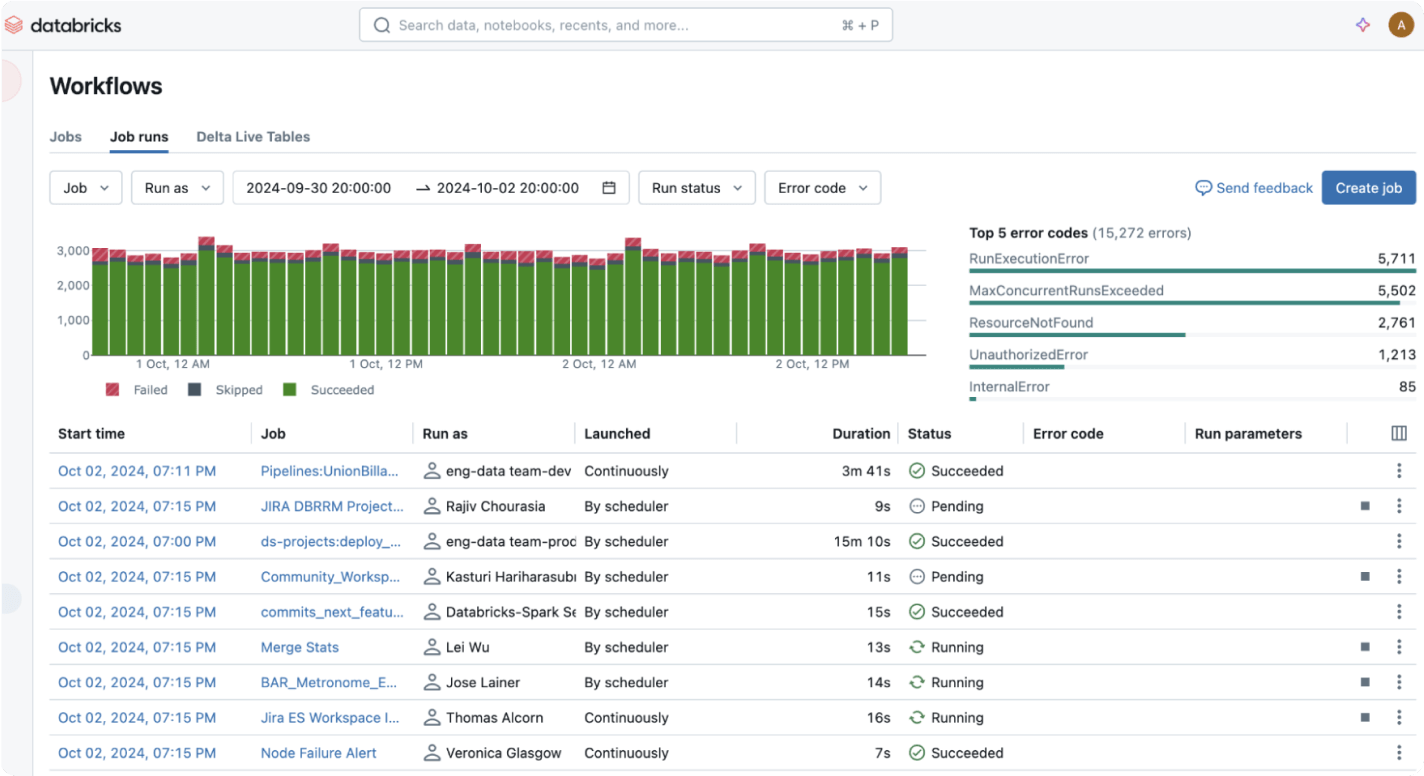

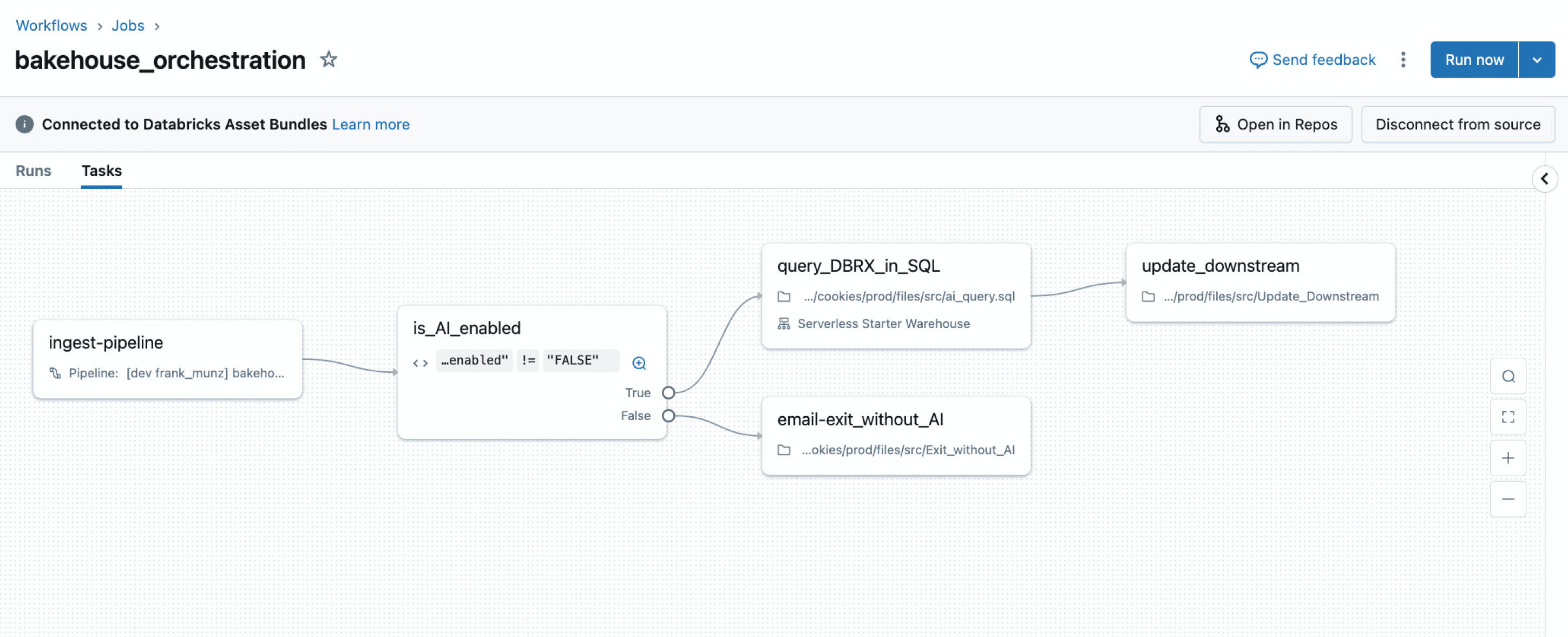

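Streamline your pipelines with a managed orchestration service that is fully integrated with your data platform. Easily define, monitor, and automate ETL, analytics, and ML workflows with reliability and deep observability.

Simplify and orchestrate your data pipelines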

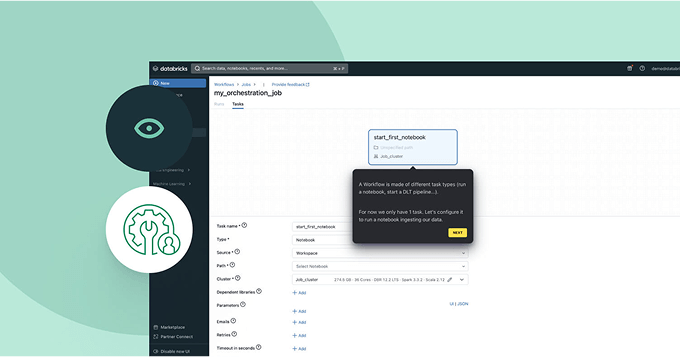

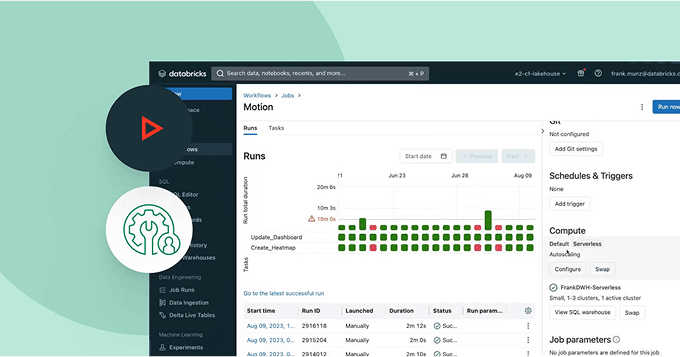

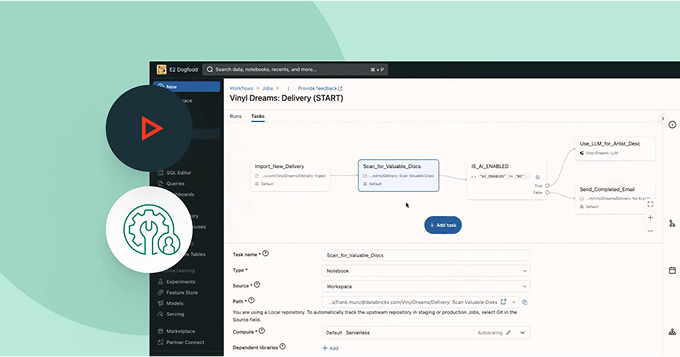

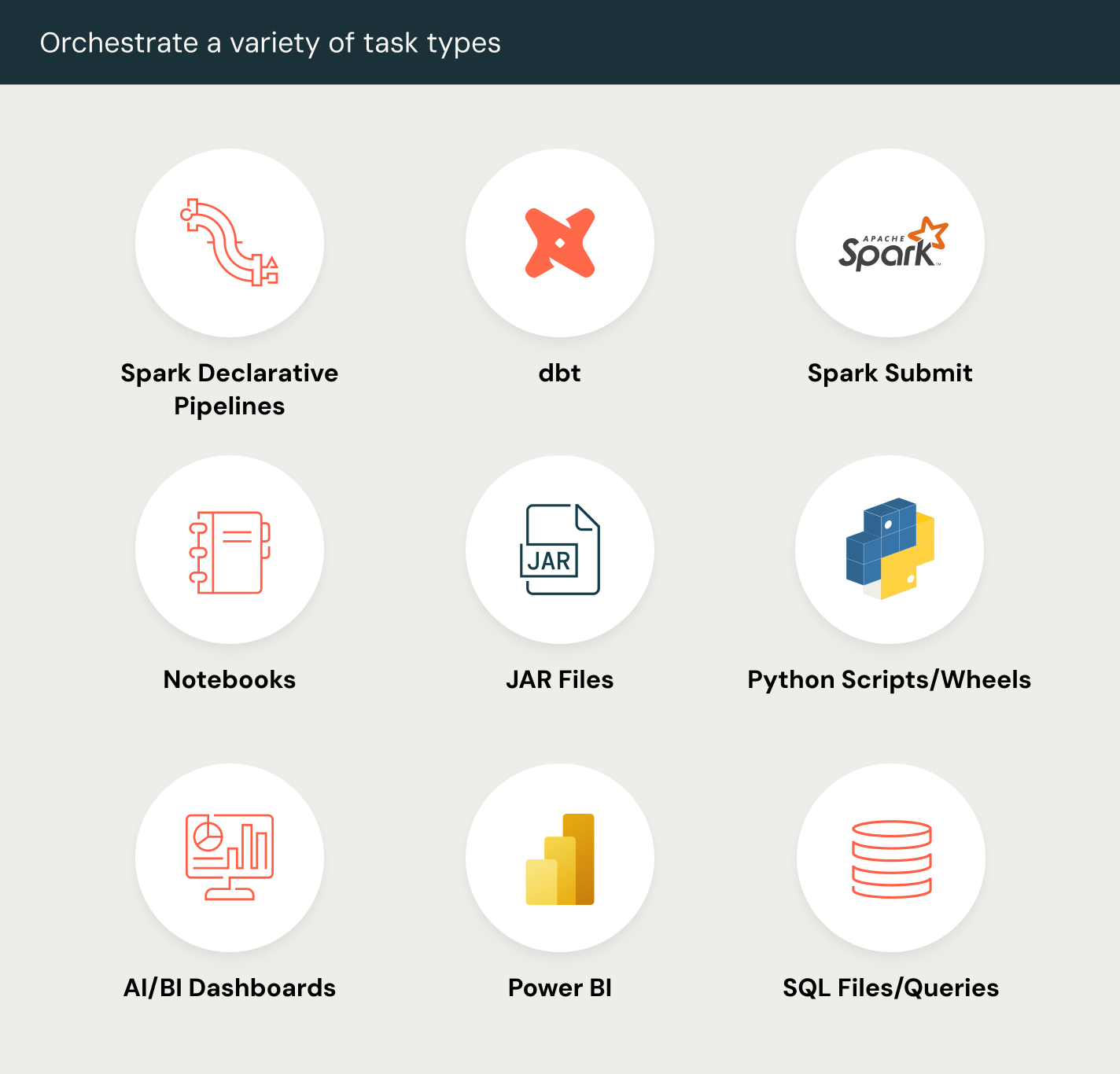

Easily define, manage, and monitor jobs across ETL, analytics, and AI pipelines.

More features

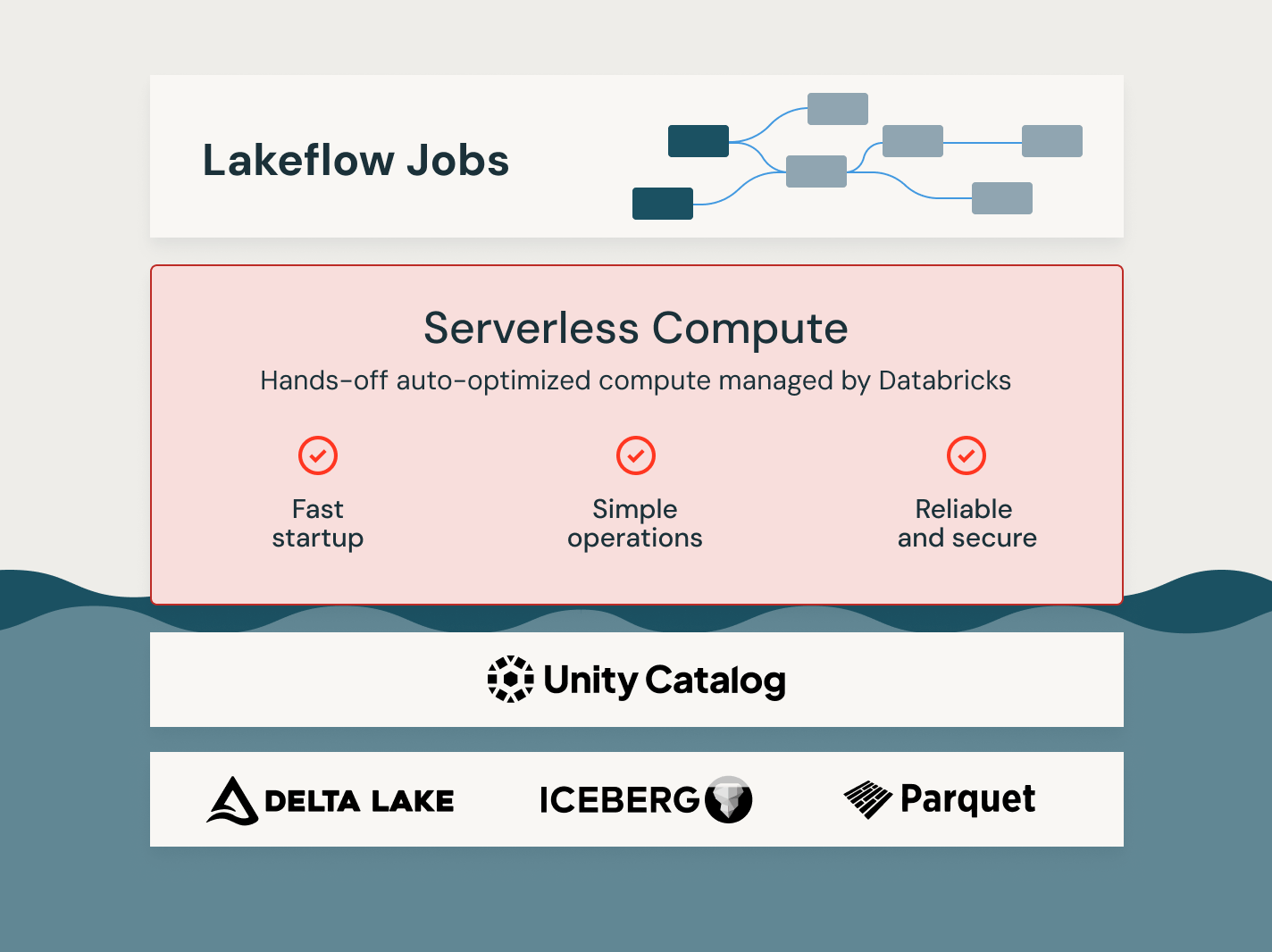

Unlock the power of Lakeflow Jobs

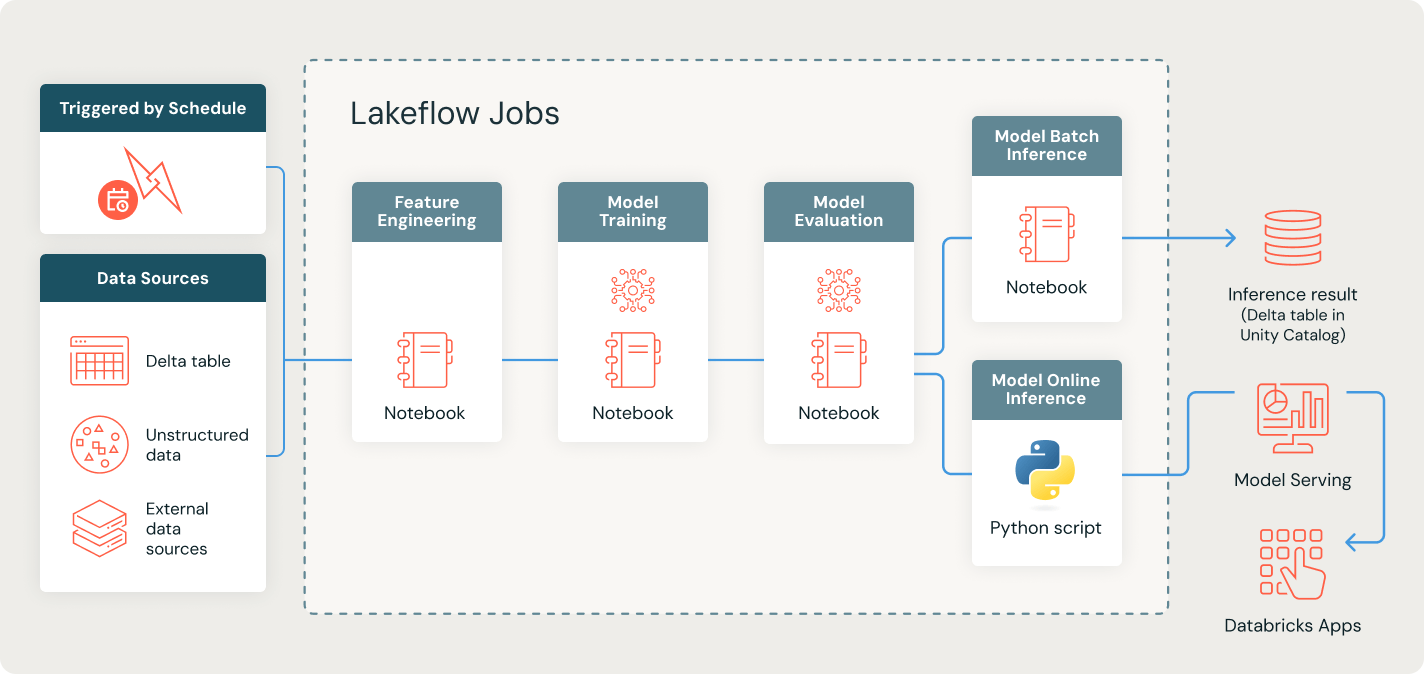

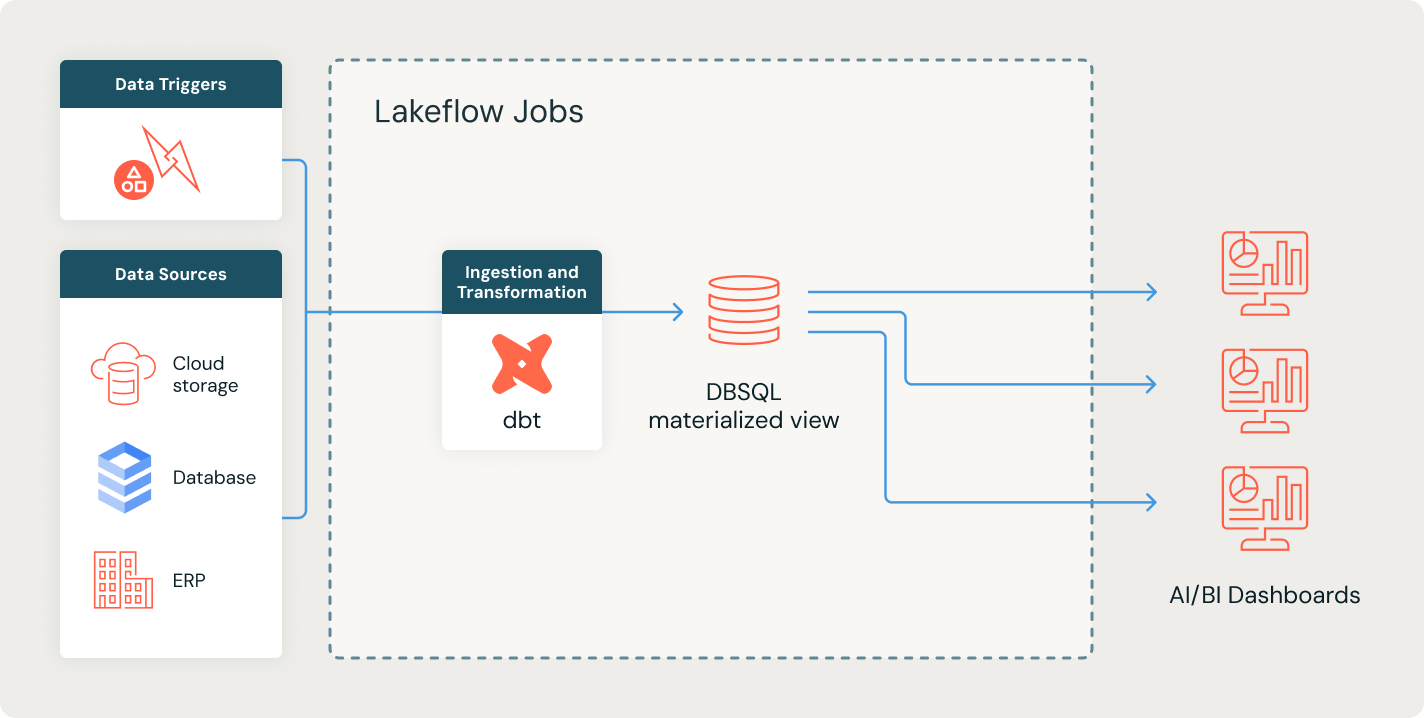

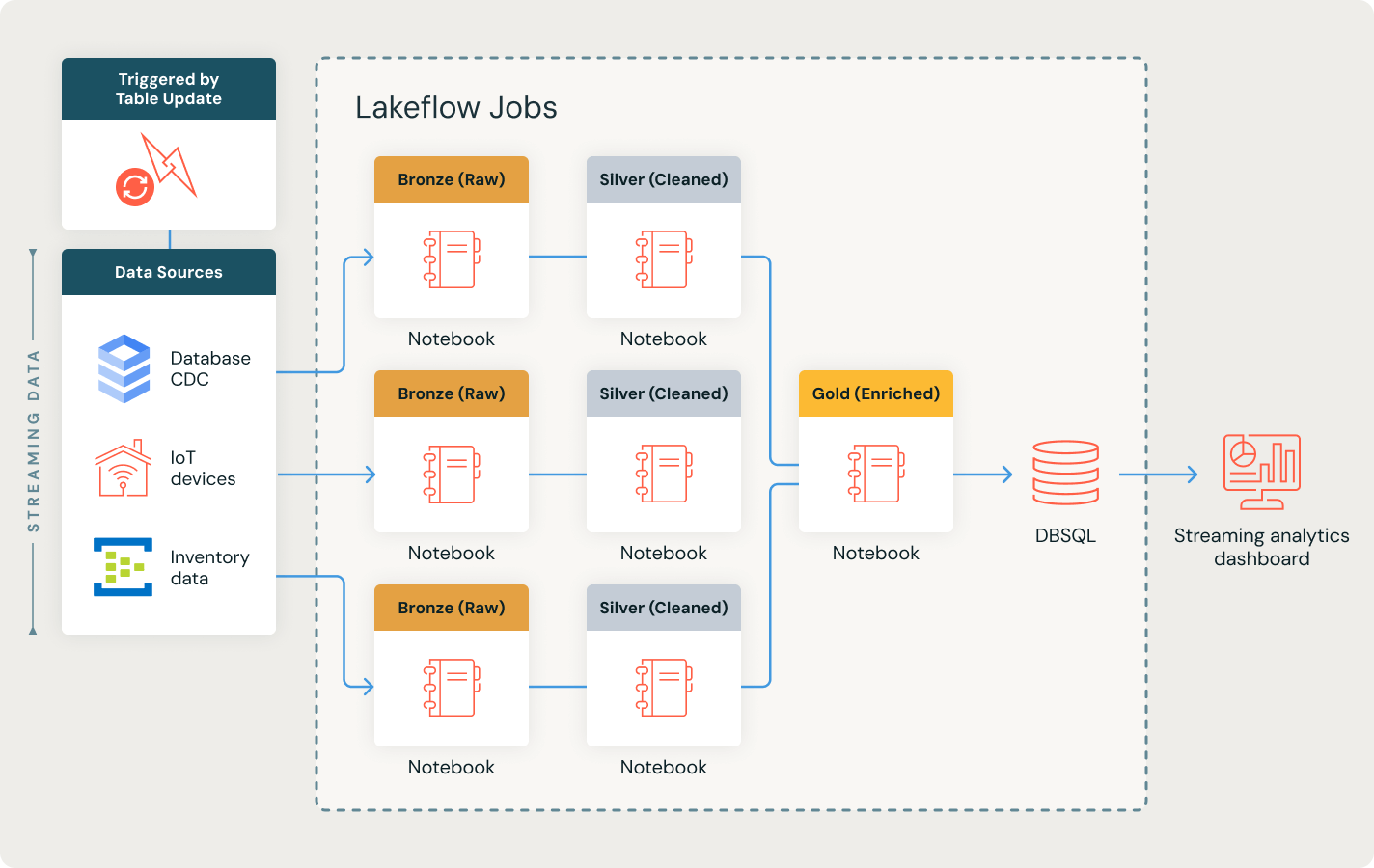

Automate data orchestration for extract, transform, load (ETL) processes

Automate data ingestion and transformation from a variety of sources into Databricks, ensuring accurate and consistent data preparation for any workload.

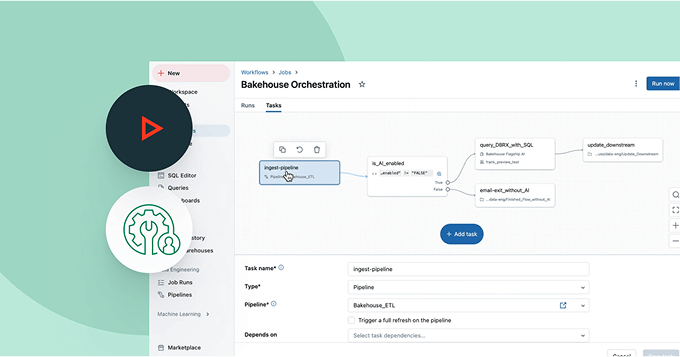

Explore Lakeflow Jobs demos

Learn more

Learn more about how the Databricks Data Intelligence Platform empowers data teams across all data and AI workloads.

Lakeflow Connect

Efficient data ingestion connectors for any source, together with native integration with the Data Intelligence Platform, give you easy access to analytics and AI with unified governance.

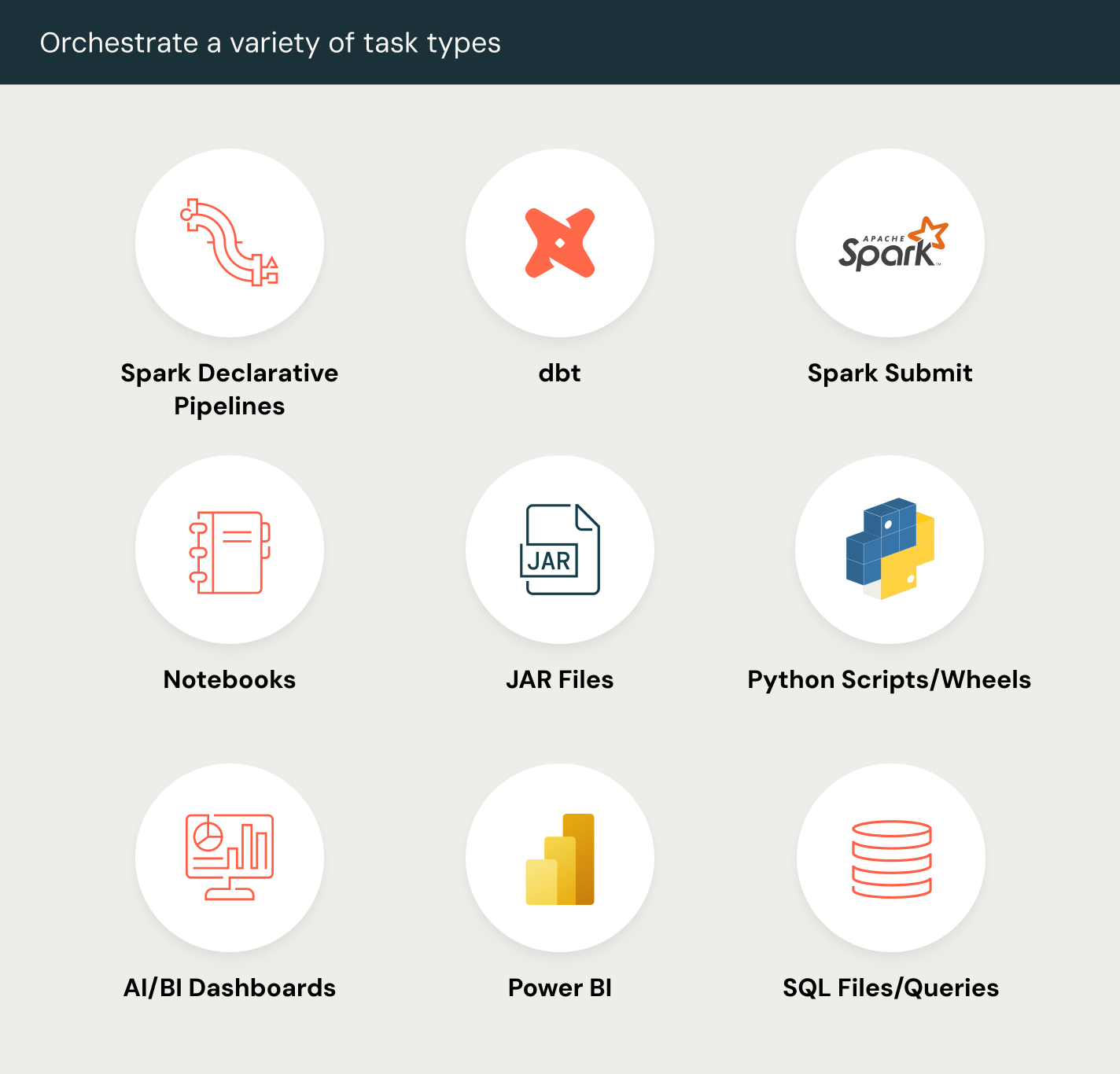

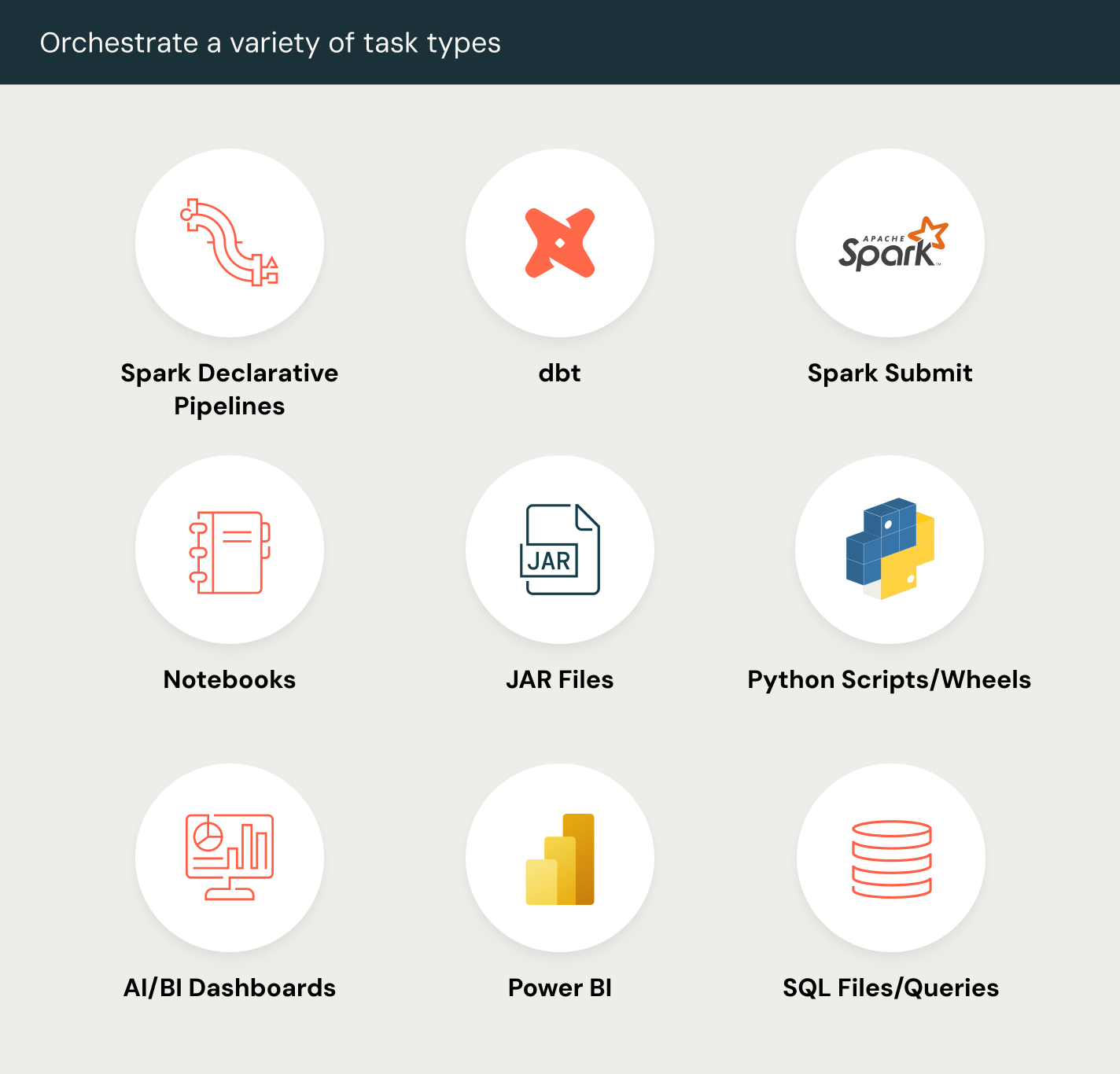

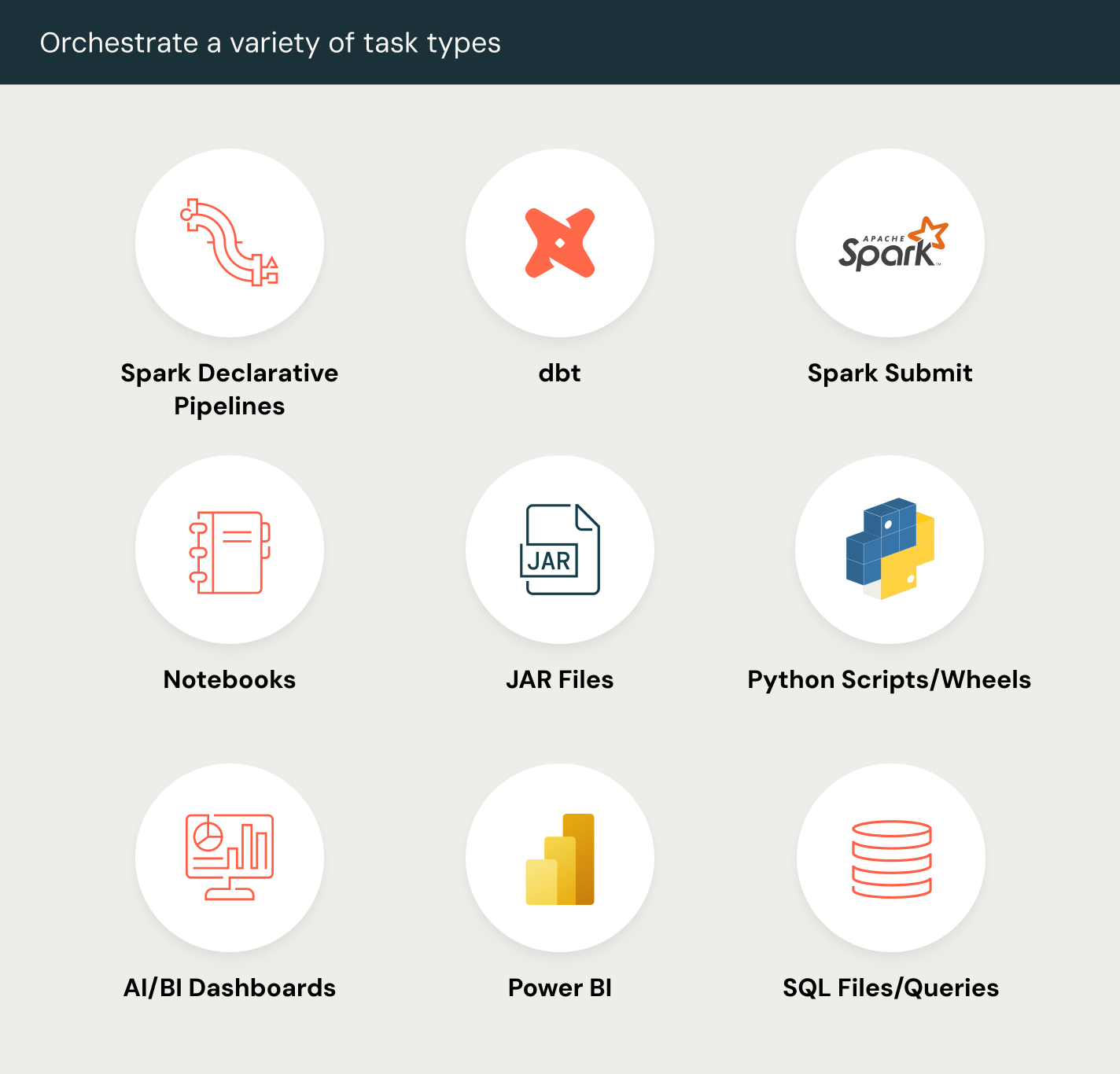

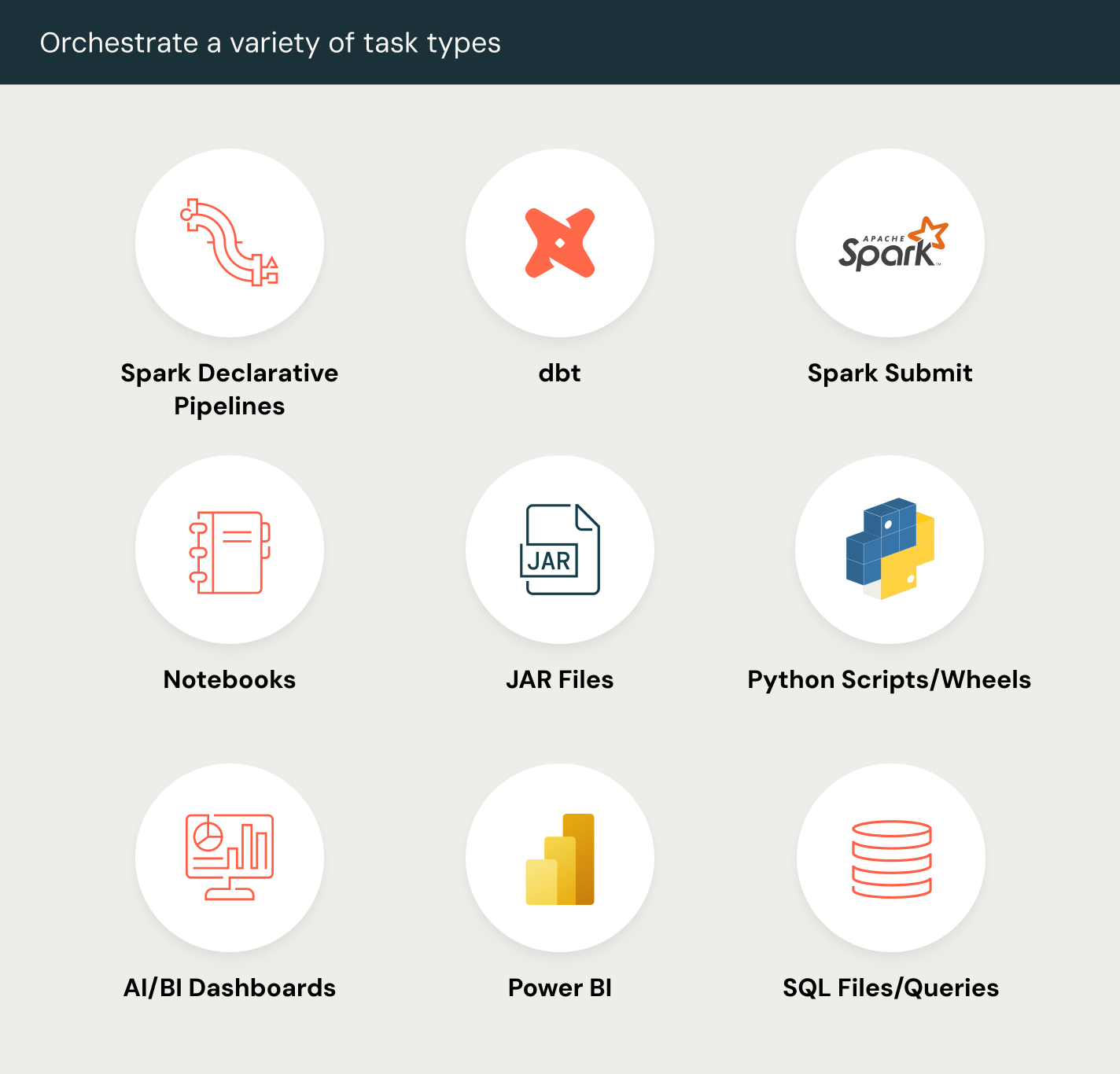

Spark Declarative Pipelines

Provides simplified declarative pipelines for batch and streaming data processing, ensuring automated, reliable transformations for analytics and AI/ML tasks.

Databricks Assistant

Use AI-powered assistance to simplify coding, debug workflows, and optimize queries in natural language, making data engineering and analytics faster and more efficient.

Lakehouse Storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Unity Catalog

Seamlessly govern all your data assets with the industry's only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

Data Intelligence Platform

Learn more about how the Databricks Data Intelligence Platform supports your data and AI workloads.