What Is the Model Context Protocol (MCP)? A Practical Guide to AI Integration

Introduction: Understanding the Model Context Protocol

The Model Context Protocol (MCP) is an open standard that lets AI applications connect to external data sources, tools, and systems in a uniform way. Think of it as a USB-C port for AI systems: just as USB-C standardizes how devices connect to computers, MCP standardizes how AI agents access external resources such as databases, APIs, file systems, and knowledge bases.

MCP addresses a central challenge in building AI agents: the "N×M integration problem". Without a standardized protocol, every AI application has to integrate directly with every external service, which produces N×M separate integrations, where N is the number of tools and M is the number of clients. That approach quickly stops scaling. With MCP, each client and each MCP server implements the protocol only once, which reduces the total number of integrations from N×M to N+M.
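The arithmetic behind this claim can be checked with a few lines of Python. This is only an illustrative sketch; the function name `integration_counts` and the sample sizes are chosen for the example and do not come from the protocol.

```python
from typing import Tuple


def integration_counts(num_tools: int, num_clients: int) -> Tuple[int, int]:
    """Return (point-to-point integrations, protocol implementations)."""
    point_to_point = num_tools * num_clients  # every client wired to every tool
    via_protocol = num_tools + num_clients    # each side implements the protocol once
    return point_to_point, via_protocol


# Illustrative sizes only: the gap widens as either side grows.
for n, m in [(3, 2), (10, 5), (50, 20)]:
    direct, shared = integration_counts(n, m)
    print(f"N={n} tools, M={m} clients: {direct} direct vs {shared} via protocol")
```

With 10 tools and 5 clients, for instance, 50 point-to-point integrations shrink to 15 protocol implementations.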

By giving AI systems access to real-time data beyond what the underlying LLM saw during training, MCP helps models give accurate, up-to-date answers instead of relying only on static training data.

What is the Model Context Protocol?

The Model Context Protocol is an open-source interoperability standard that lets developers build context-aware AI applications. MCP complements LLMOps by providing runtime integration, observability, and governance controls that simplify the deployment, monitoring, and lifecycle management of LLM applications.

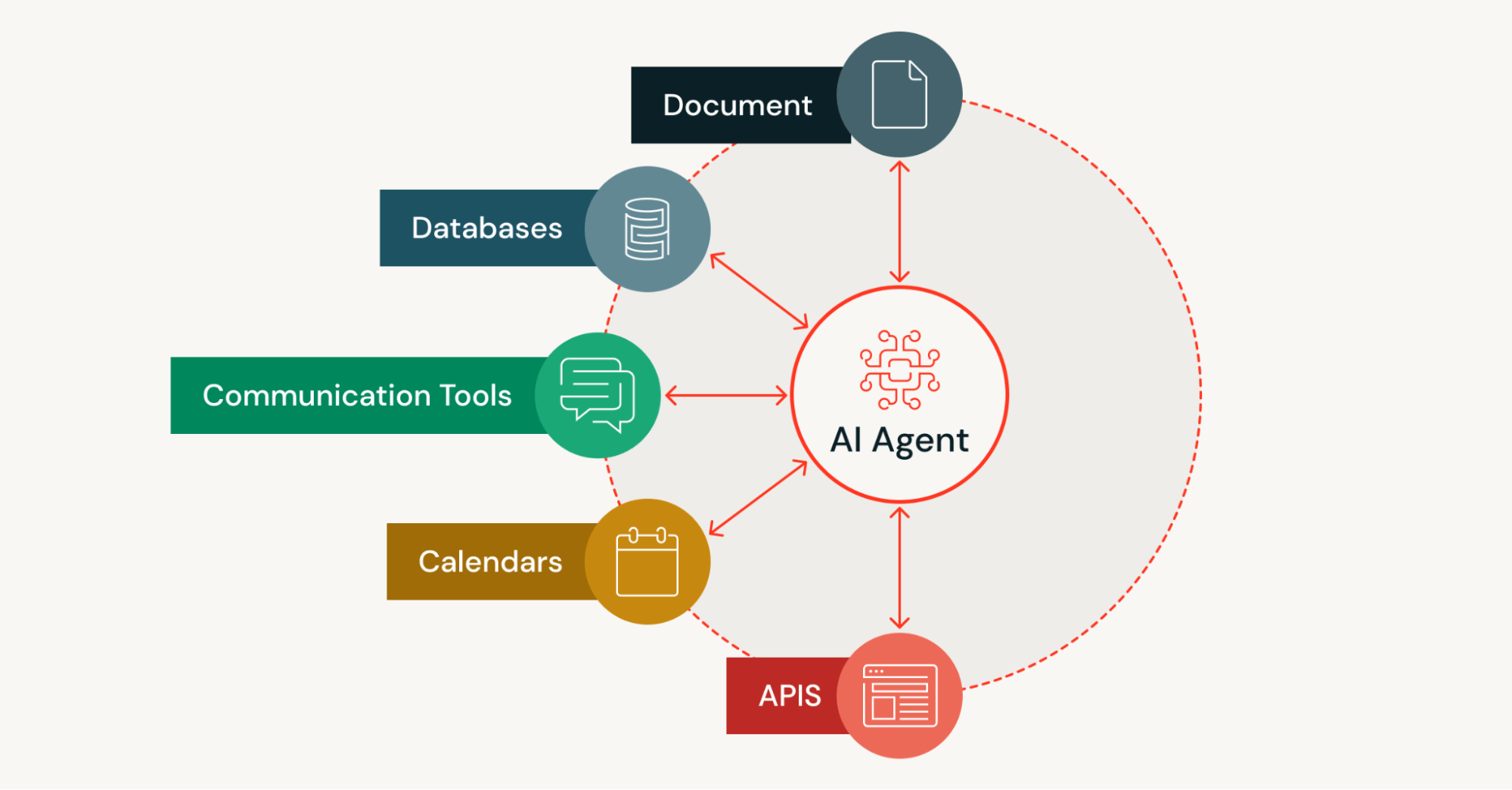

AI applications need access to assets such as local resources, databases, data pipelines (streaming and batch), search engines, calculators, and workflows in order to condition prompts and ground generated output. MCP standardizes how applications connect to these assets in a structured way, which reduces boilerplate integration code.

A related limitation is that AI models, and large language models in particular, are usually trained on pre-existing, static data. This can lead to inaccurate or outdated answers, because a model trained on a static dataset has to be updated before it can reflect new information. By making live sources reachable at scale, MCP lets AI applications stay context-aware and produce current output that is not bound by the limits of static training data.

What is MCP and what is it used for?

MCP gives AI applications a standardized way to discover external tools and data sources at runtime and to interact with them. Instead of hard-coding a connection to each external service, AI agents that use MCP can discover the available tools dynamically, learn their capabilities from structured descriptions, and invoke them with the appropriate tool permissions.
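The discover-then-invoke flow can be sketched as a pair of JSON-RPC messages. The method names `tools/list` and `tools/call` follow the MCP specification; the tool `query_orders`, its schema, the sample reply, and the helper `build_call` are hypothetical and exist only for this illustration.

```python
import json

# Request a client sends to ask a server which tools it offers.
list_request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# Hypothetical server reply; the tool name and schema are made up for illustration.
list_response = json.loads("""
{
  "jsonrpc": "2.0",
  "id": 1,
  "result": {
    "tools": [
      {
        "name": "query_orders",
        "description": "Look up orders by customer ID",
        "inputSchema": {
          "type": "object",
          "properties": {"customer_id": {"type": "string"}},
          "required": ["customer_id"]
        }
      }
    ]
  }
}
""")


def build_call(tool: dict, arguments: dict, request_id: int) -> dict:
    """Build a tools/call request after checking that required arguments are present."""
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }


# Index the discovered tools by name, then call one of them.
tools = {tool["name"]: tool for tool in list_response["result"]["tools"]}
call_request = build_call(tools["query_orders"], {"customer_id": "C-42"}, request_id=2)
print(json.dumps(call_request, indent=2))
```

Nothing about `query_orders` is compiled into the client; everything it needs to build the call comes from the discovery reply.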

MCP is used because it changes how AI-powered tools access information. Conventional AI systems are limited by their training data, which goes out of date quickly. MCP lets developers build AI agents that carry out tasks using live data from common enterprise systems, development environments, and other external sources, all through a single standardized protocol.

The open protocol also reduces boilerplate integration code. Instead of writing a custom connector for every new integration, developers implement MCP once on the client side and once on the server side. This is especially valuable for agentic AI systems that have to discover and use multiple tools autonomously in different contexts.

How does MCP differ from an API?

Limitations of traditional APIs

Traditional APIs expose endpoints with typed parameters that clients hard-code and have to update whenever the API changes. Stitching context together becomes the client's job, because APIs offer little semantic guidance on how to use the data they return. An API request typically follows a simple request-response pattern and keeps no state or context between calls.

How the Model Context Protocol differs

MCP takes a different approach. Instead of hard-coded endpoints, MCP servers expose a machine-readable capability interface that can be discovered at runtime. AI systems can query the available tools, resources, and prompts rather than relying on predefined connections. MCP also standardizes how resources such as documents, database rows, and files are structured, which reduces serialization complexity and gives AI models relevant context in a form suited to reasoning.

MCP implementations support bidirectional, stateful communication with streaming semantics. MCP servers can therefore push updates and progress notifications directly into an AI agent's context loop, which supports multi-step workflows and partial results that traditional request-response APIs do not provide natively. This client-server architecture enables more sophisticated tool-use patterns in agentic systems.

MCP compared with RAG: complementary approaches

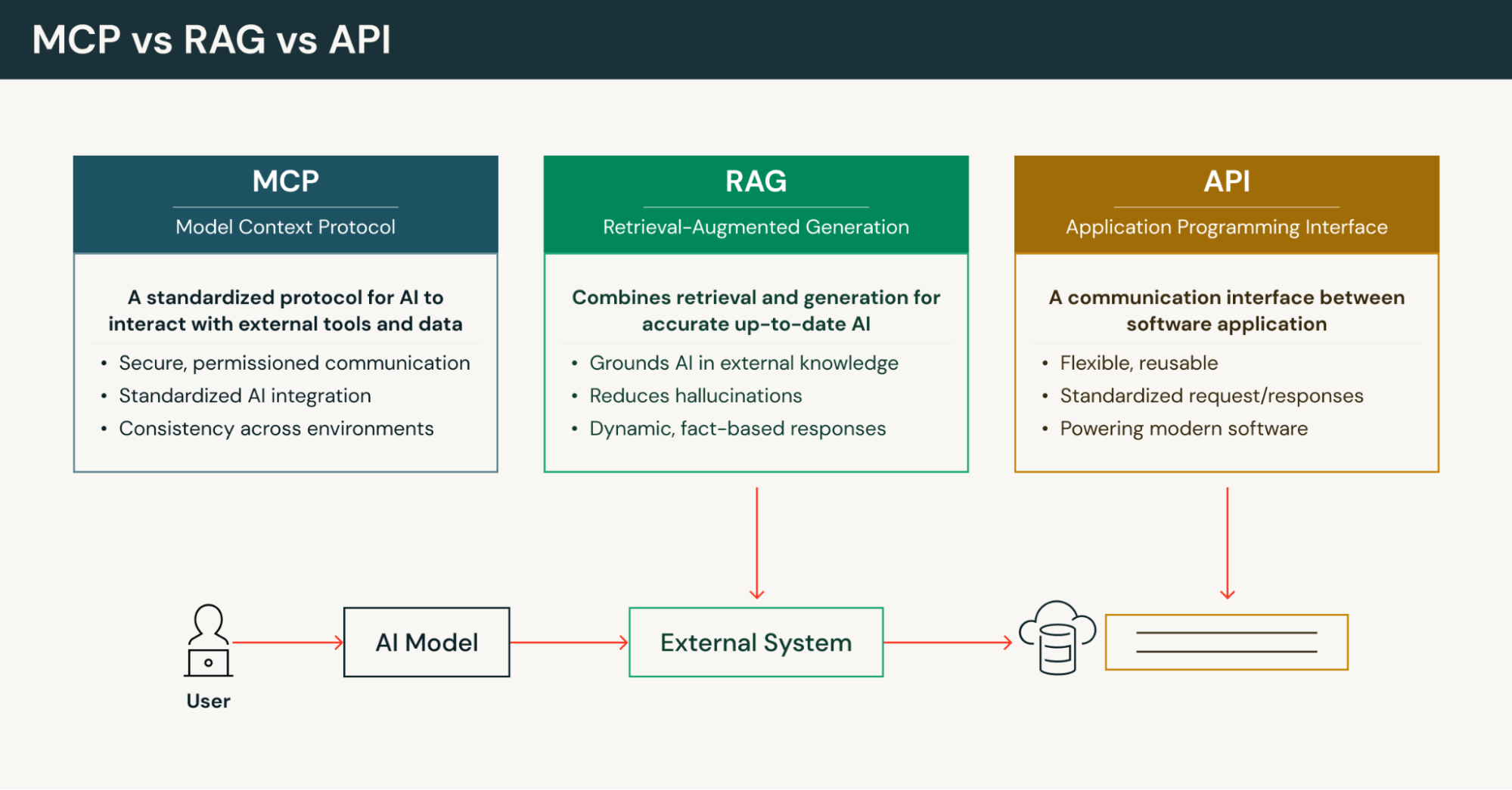

Retrieval-Augmented Generation (RAG) improves AI accuracy by converting documents into embeddings, storing them in vector databases, and retrieving relevant information at generation time. However, RAG typically relies on indexed, static sources from content repositories. MCP provides on-demand access to live APIs, databases, and streams, and delivers authoritative, current context when freshness matters.

Unlike RAG, which mainly returns read-only context, MCP separates resources from tools, so AI agents can both retrieve data and perform actions on external systems through controlled schemas. MCP also covers broader integration needs that RAG does not provide natively: agentic workflows, multi-turn orchestration, runtime capability discovery, and multi-tenant governance.

MCP can complement RAG implementations. Organizations can use RAG to index evergreen content for fast retrieval, and use MCP for transactional queries, SQL execution, and actions that need current context from live systems. This hybrid approach offers both speed and accuracy.
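One way to picture the hybrid approach is a small routing function that sends each request down one of the two paths. This is a deliberately simplified sketch: the `Query` fields and the rule in `choose_path` are assumptions made for illustration, and a real system would derive them from the request itself.

```python
from dataclasses import dataclass


@dataclass
class Query:
    text: str
    needs_fresh_data: bool = False  # e.g. balances, inventory, ticket status
    has_side_effects: bool = False  # e.g. creating or updating a record


def choose_path(query: Query) -> str:
    """Return 'mcp' for live or state-changing requests, otherwise 'rag'."""
    if query.has_side_effects or query.needs_fresh_data:
        return "mcp"
    return "rag"


print(choose_path(Query("What is our travel policy?")))
print(choose_path(Query("How many units are in stock?", needs_fresh_data=True)))
print(choose_path(Query("Open a ticket for this bug", has_side_effects=True)))
```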

The value of standardization in the MCP ecosystem

As a bidirectional protocol with runtime discovery, MCP turns disparate external tools and data into addressable resources and callable actions. A single MCP client can discover files, database rows, vector snippets, live streams, and API endpoints in a uniform way. Alongside indexed RAG caches, MCP provides authoritative just-in-time lookups and action semantics.

The practical result is fewer bespoke connectors, less custom code, faster integrations, and more reliable agentic systems with proper error handling and audit trails. This standardized way of connecting AI assistants to remote resources speeds up development cycles while keeping enterprise-wide security controls in force. The MCP ecosystem benefits from this standardization as more MCP server implementations become available for common enterprise systems.

Core architecture of MCP: understanding the client-server model

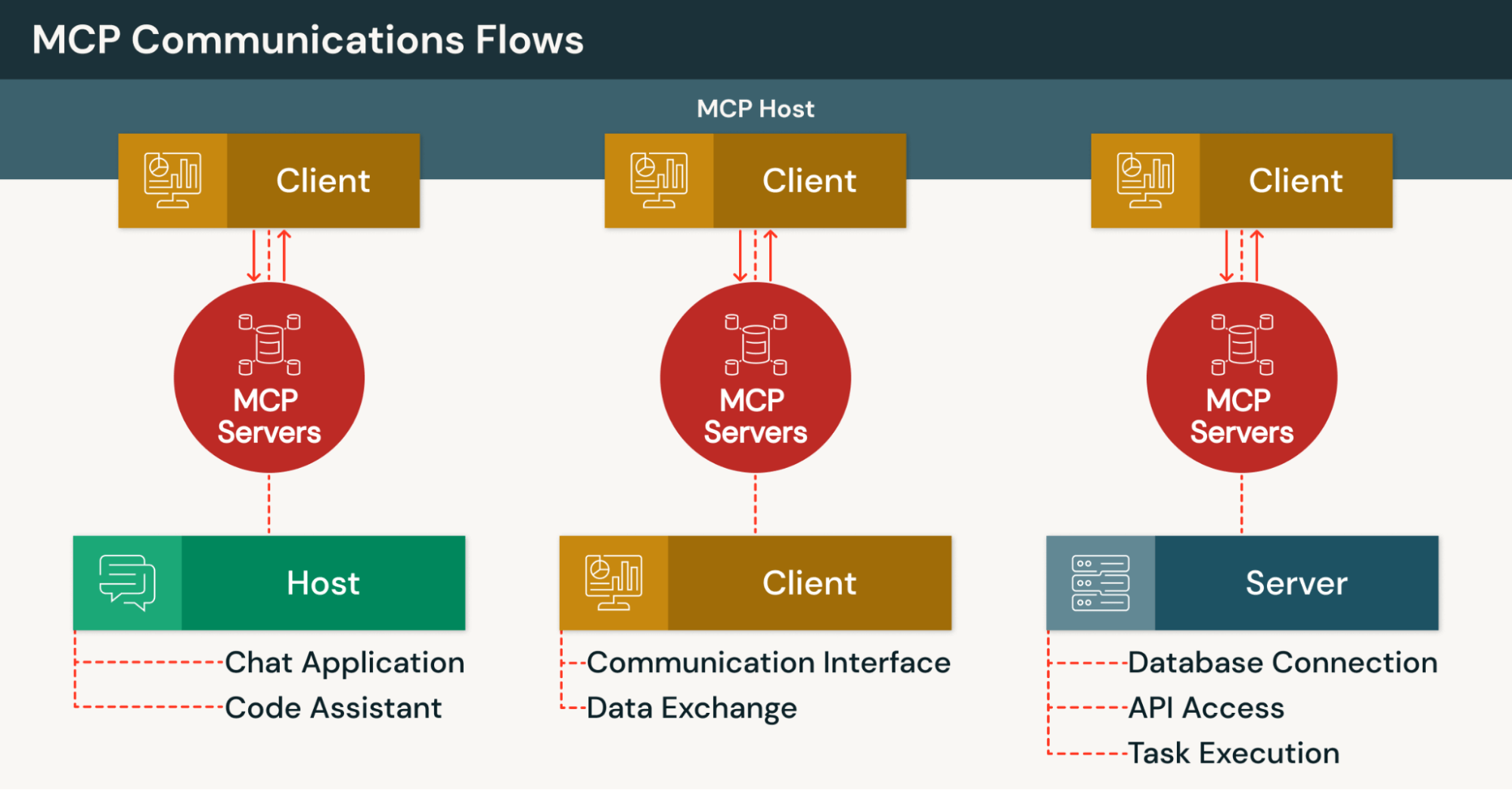

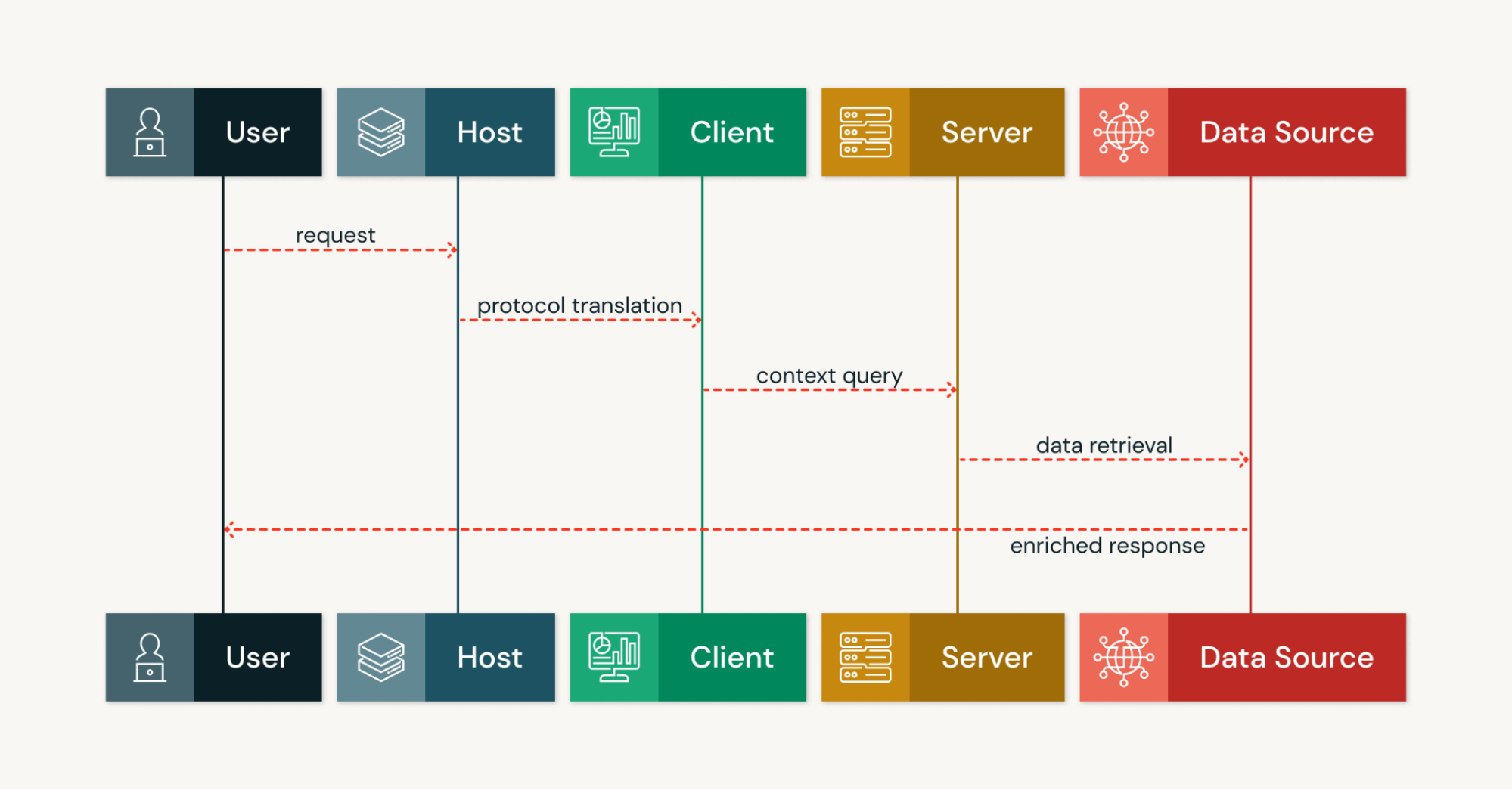

The MCP architecture organizes integrations around three key roles (MCP servers, MCP clients, and MCP hosts) that are connected over persistent communication channels. This client-server architecture lets AI tools run multi-step, stateful workflows instead of isolated request-response interactions.

What MCP servers do

MCP servers expose data and tools through standardized interfaces and can run in cloud, on-premises, or hybrid environments. Each server publishes a capability surface made up of named resources, callable tools, prompts, and notification hooks. Resources can include documents, database rows, files, and pipeline outputs.

MCP server implementations use JSON-RPC 2.0 methods and notifications, support streaming for long-running operations, and offer machine-readable discovery over the transport layer. This lets MCP hosts and AI models query capabilities at runtime without prior knowledge of the available tools.
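The difference between a JSON-RPC 2.0 method call and a notification comes down to the `id` field: a request carries one and expects a reply, a notification does not. The sketch below builds both kinds of message; the helper names and the example URI are hypothetical, while `resources/read` and `notifications/progress` are method names from the MCP specification.

```python
import itertools
import json

_ids = itertools.count(1)  # simple per-process request ID generator


def make_request(method, params=None):
    """A request has an id, so the receiver must answer with a matching id."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method, params=None):
    """A notification has no id, so no response is expected."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def is_notification(message):
    return "method" in message and "id" not in message


# The URI and progress token are placeholders for illustration.
request = make_request("resources/read", {"uri": "file:///reports/q3.md"})
note = make_notification(
    "notifications/progress",
    {"progressToken": "job-1", "progress": 40, "total": 100},
)

for message in (request, note):
    kind = "notification" if is_notification(message) else "request"
    print(kind, json.dumps(message))
```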

Popular MCP server implementations connect AI systems to external services such as Google Drive, Slack, GitHub, and PostgreSQL databases. These servers handle authentication, data retrieval, and tool execution while presenting a consistent interface through the standardized protocol. A server in the MCP ecosystem can serve multiple clients at the same time.

How MCP clients work

MCP clients are components inside host applications that translate user or model intent into protocol messages. Each client typically maintains a one-to-one connection with a single MCP server and manages lifecycle, authentication, and transport details in a structured way.

MCP clients serialize requests as structured JSON-RPC calls, process asynchronous notifications and partial streams, and expose a uniform local API that reduces integration complexity. A single MCP host can run several clients at once, each connected to a different MCP server.
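The one-client-per-server relationship can be sketched with in-memory stand-ins. The classes `InMemoryServer`, `Client`, and `Host` are hypothetical and skip the transport entirely (the client calls the server object directly); only the message shapes loosely follow JSON-RPC and the `tools/list` / `tools/call` methods.

```python
from typing import Callable, Dict


class InMemoryServer:
    """Hypothetical stand-in for an MCP server; no real transport involved."""

    def __init__(self, tools: Dict[str, Callable[..., object]]):
        self.tools = tools

    def handle(self, request: dict) -> dict:
        reply = {"jsonrpc": "2.0", "id": request["id"]}
        if request["method"] == "tools/list":
            reply["result"] = {"tools": [{"name": name} for name in self.tools]}
        elif request["method"] == "tools/call":
            params = request["params"]
            value = self.tools[params["name"]](**params["arguments"])
            reply["result"] = {"content": [{"type": "text", "text": str(value)}]}
        else:
            reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply


class Client:
    """One client per server connection; it owns request IDs and error mapping."""

    def __init__(self, server: InMemoryServer):
        self._server = server
        self._next_id = 1

    def request(self, method: str, params: dict = None) -> dict:
        message = {"jsonrpc": "2.0", "id": self._next_id, "method": method}
        if params is not None:
            message["params"] = params
        self._next_id += 1
        reply = self._server.handle(message)
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


class Host:
    """Holds several clients and presents one combined tool catalog."""

    def __init__(self):
        self.clients: Dict[str, Client] = {}

    def connect(self, name: str, server: InMemoryServer) -> None:
        self.clients[name] = Client(server)

    def catalog(self) -> Dict[str, list]:
        return {
            name: [tool["name"] for tool in client.request("tools/list")["tools"]]
            for name, client in self.clients.items()
        }


host = Host()
host.connect("files", InMemoryServer({"read_file": lambda path: f"contents of {path}"}))
host.connect("db", InMemoryServer({"count_rows": lambda table: 3}))
print(host.catalog())
```

The host never talks to a server directly; it only sees the uniform `request` interface of its clients.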

These clients let AI agents interact with external data sources without having to understand the implementation details of each external service. The client takes care of the communication protocol, error handling, and retry logic.

The role of MCP hosts

MCP hosts provide the AI application layer that coordinates the capabilities of MCP clients and servers. Examples include Claude Desktop, Claude Code, AI-powered IDEs, and other platforms on which AI agents operate. The host aggregates prompts, conversation state, and client responses in order to orchestrate multi-tool workflows.

The MCP host decides when to invoke tools, request additional input, or surface notifications. This centralized orchestration lets AI models work across heterogeneous MCP servers without bespoke, service-specific code, which supports the MCP ecosystem's goal of universal interoperability between AI assistants and other systems.

Context flow and bidirectional communication

Client-server communication in MCP is bidirectional and message-driven, and uses JSON-RPC 2.0 over the transport layer. MCP clients call methods to fetch resources or start tools, while MCP servers return results, stream partial output, and send notifications with relevant information.

MCP servers can also initiate requests, for example asking the MCP host to review options or to request user input through function-calling mechanisms. This bidirectional capability sets MCP apart from conventional one-way API patterns. MCP's authoritative live lookups complement RAG by supplying just-in-time records with provenance metadata for traceability.
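A server-initiated request can be pictured with two peers that each register handlers and call each other. This sketch replaces the transport with direct function calls, and the method names `example/confirm` and `example/delete_rows` are hypothetical placeholders for the protocol's real server-to-client requests.

```python
class Peer:
    """Either end of a connection: it can both send requests and answer them."""

    def __init__(self, name):
        self.name = name
        self.handlers = {}
        self.remote = None

    def on(self, method, handler):
        self.handlers[method] = handler

    def call(self, method, params):
        """Send a request to the connected peer and return its result."""
        return self.remote.handlers[method](params)


def connect(a, b):
    a.remote, b.remote = b, a


client, server = Peer("client"), Peer("server")
connect(client, server)

# Client side: answer a confirmation request that the server initiates.
# The 100-row threshold is an arbitrary policy chosen for the example.
client.on("example/confirm", lambda params: {"approved": params["rows"] <= 100})


def delete_rows(params):
    # Server side: before acting, ask the other end for confirmation.
    answer = server.call("example/confirm", {"rows": params["rows"]})
    if not answer["approved"]:
        return {"status": "cancelled"}
    return {"status": "deleted", "rows": params["rows"]}


server.on("example/delete_rows", delete_rows)

print(client.call("example/delete_rows", {"rows": 10}))
print(client.call("example/delete_rows", {"rows": 5000}))
```

The point of the sketch is the direction of the inner call: while handling a client request, the server sends its own request back over the same connection.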

Persistent transports preserve message order and allow real-time updates, so AI systems can iterate over intermediate results and run the agentic loops on which autonomous AI agents depend.

What are the requirements for the Model Context Protocol?

Security requirements and protection against threats

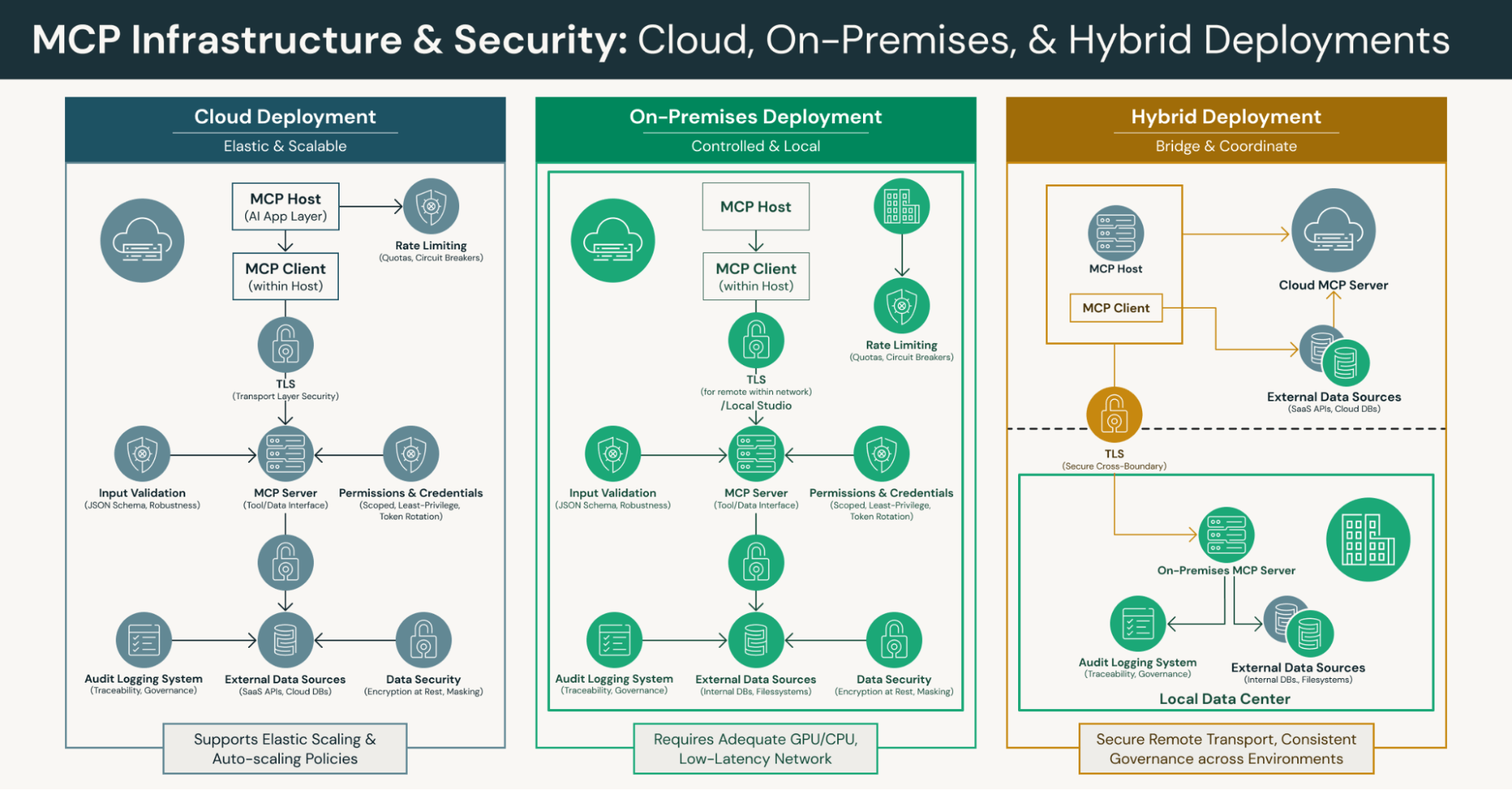

MCP implementations should enforce Transport Layer Security (TLS) for remote transports, strict tool permissions, and scoped credentials to protect against security threats. Production deployments also call for rate limiting and robust input validation through JSON Schema enforcement on both MCP clients and servers, to prevent injection attacks and malformed requests.
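Schema enforcement on tool arguments can be illustrated with a deliberately small validator. It covers only `required`, basic `type` checks, `maxLength`, and `additionalProperties: false`, which is a tiny subset of JSON Schema; a production system would more likely rely on a full validator library. The schema and field names are hypothetical.

```python
from typing import List

_TYPES = {
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate_arguments(schema: dict, arguments: dict) -> List[str]:
    """Return a list of problems; an empty list means the arguments passed."""
    errors = []
    properties = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in arguments:
            errors.append(f"missing required field: {name}")
    for name, value in arguments.items():
        if name not in properties:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected field: {name}")
            continue
        expected = properties[name].get("type")
        python_type = _TYPES.get(expected)
        if python_type is not None:
            # bool is a subclass of int in Python, so reject it for numeric types.
            bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
            if not isinstance(value, python_type) or bool_as_number:
                errors.append(f"{name}: expected {expected}")
        max_length = properties[name].get("maxLength")
        if isinstance(value, str) and max_length is not None and len(value) > max_length:
            errors.append(f"{name}: longer than {max_length} characters")
    return errors


# Hypothetical tool input schema for illustration.
schema = {
    "type": "object",
    "properties": {"customer_id": {"type": "string", "maxLength": 16}},
    "required": ["customer_id"],
    "additionalProperties": False,
}

print(validate_arguments(schema, {"customer_id": "C-42"}))
print(validate_arguments(schema, {"customer_id": 42, "sql": "DROP TABLE orders"}))
```

Rejecting unexpected fields and over-long strings before a tool runs is one inexpensive guard against malformed or hostile input.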

Audit logging, token rotation, and least-privilege access are essential for managing long-lived channels. These measures protect against unauthorized access while preserving the discoverable integrations that MCP makes possible. For long-lived MCP channels, organizations should implement encryption in transit and at rest, data masking, and scoped permissions to keep data secure.

Infrastructure and system requirements

Organizations that deploy MCP need compute and network infrastructure that can host large language models, MCP servers, and the connected data sources. This includes sufficient GPU/CPU capacity, memory, disk I/O, and low-latency network paths between the components of the client-server architecture.

Cloud platforms should support elastic scaling of model instances and MCP servers. Teams need to define autoscaling policies for concurrent streams and long-running operations. The transport layer should support both local STDIO for embedded components and remote streaming channels such as HTTP/SSE or WebSocket for distributed deployments.

Implementation requirements for working with MCP

Working with MCP requires implementing JSON-RPC 2.0 messaging, discovery endpoints, and resource and tool schemas. MCP servers must publish their capabilities in a machine-readable format through the standard protocol. This lets developers build discovery-based integrations that find tools without hard-coded connections.

Error handling, reconnection strategies, and backpressure management are critical for reliability in production. Organizations should implement observability for persistent streams, method latencies, and resource usage using metrics, traces, and logs. Rate limiters, circuit breakers, and quotas protect downstream systems from overload.
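As one example of these protective patterns, a minimal circuit breaker can wrap calls to a downstream system. This is a generic sketch, not part of MCP: the class name, the thresholds, and the injectable `clock` parameter (used to make the behavior testable) are all assumptions.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self._clock = clock
        self._failures = 0
        self._opened_at = None

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; skipping call")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single further failure reopens the circuit immediately.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success closes the circuit fully
        return result
```

A client would typically route every tool call to a given server through one breaker instance, for example `breaker.call(send_request, message)` with a hypothetical `send_request` function, so that repeated failures stop traffic to that server for the cool-down period.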

Practical benefits: real-time data access and fewer hallucinations

With MCP, AI models fetch live records, pipeline outputs, API responses, and files on demand instead of relying solely on cached embeddings or the LLM's static training data. Answers are therefore grounded in current, authoritative data sources, which reduces hallucinations, that is, cases in which an AI system generates false information.

Resources returned at query time can carry provenance metadata such as source IDs and timestamps. This lets MCP hosts log where data came from and makes outputs traceable. That transparency is critical when AI agents perform tasks that require auditability in regulated industries. MCP makes relevant context from authoritative external systems available whenever the agent needs it.
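A host could keep such provenance alongside each retrieved resource and turn it into an audit record. The sketch below is an assumption about how that might look; the class `ResourceResult`, its field names, and the sample values are hypothetical and not prescribed by the protocol.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ResourceResult:
    uri: str
    text: str
    source_id: str
    # Defaults to the moment of retrieval, in UTC.
    retrieved_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def audit_record(result: ResourceResult, answer_id: str) -> dict:
    """Link a generated answer to the source it was grounded on."""
    return {
        "answer_id": answer_id,
        "source_id": result.source_id,
        "uri": result.uri,
        "retrieved_at": result.retrieved_at.isoformat(),
    }


# Hypothetical values for illustration.
row = ResourceResult(
    uri="db://crm/accounts/1001",
    text="Account status: active",
    source_id="crm-prod",
)
print(audit_record(row, answer_id="ans-7"))
```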

Support for agentic AI workflows

Because MCP servers publish resources, tools, and prompts in a standardized way, AI models can discover and invoke services without hard-coded endpoints. The open standard supports server-initiated requests and streaming responses from MCP servers, which enables multi-step reasoning, clarification of inputs, and iteration over partial results.

Tools expose inputs and outputs defined with JSON Schema, together with scoped tool permissions, so AI agents can perform controlled actions such as creating tickets, running SQL queries, or executing workflows. Autonomous tool discovery, bidirectional interaction, and built-in safety mechanisms together form the basis for reliable agentic AI across external systems.
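Scoped tool permissions can be sketched as a registry that checks a caller's scopes before running a tool. Everything here is hypothetical: the scope strings, the tool names, and the `ToolRegistry` class illustrate the idea and do not reflect a specific MCP API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Tool:
    name: str
    required_scope: str
    run: Callable[..., object]


class PermissionDenied(Exception):
    pass


class ToolRegistry:
    def __init__(self):
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def visible_to(self, scopes: Set[str]) -> List[str]:
        """Only advertise tools the caller is allowed to use."""
        return sorted(t.name for t in self._tools.values() if t.required_scope in scopes)

    def call(self, name: str, scopes: Set[str], **arguments):
        tool = self._tools[name]
        if tool.required_scope not in scopes:
            raise PermissionDenied(f"{name} requires scope {tool.required_scope!r}")
        return tool.run(**arguments)


registry = ToolRegistry()
registry.register(Tool("create_ticket", "tickets:write", lambda title: f"ticket: {title}"))
registry.register(Tool("run_sql", "db:read", lambda query: f"ran: {query}"))

analyst_scopes = {"db:read"}
print(registry.visible_to(analyst_scopes))
print(registry.call("run_sql", analyst_scopes, query="SELECT 1"))
```

Checking the scope again at call time, and not only when listing tools, matters because a caller may try to invoke a tool it was never shown.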

MCP explicitly enables agentic workflows that build on dynamic tool discovery and action primitives to perceive, decide, and act across systems. This makes it possible to build AI agents that act autonomously while keeping proper governance controls in place.

Simplified development through standardized integrations

MCP lets developers implement a single server surface that MCP hosts and AI models can reuse with consistent discovery and invocation semantics. This removes the need for separate connectors for common services and reduces the engineering effort of connecting AI assistants to new data sources.

Typed resources and JSON Schema reduce the custom serialization, validation, and error-handling code that would otherwise be needed. Local STDIO and remote streaming transports let teams choose between on-premises, cloud, or hybrid deployments without changing the MCP host logic. This flexibility speeds up agent development for teams working in different development environments.

MCP offers a practical way to standardize integrations once instead of building a custom adapter for each new one. This benefits the whole MCP ecosystem as more organizations adopt the standard.

Greater automation potential for complex workflows

MCP's persistent, stateful channels let AI systems combine lookups, transformations, and side effects across multiple external services in a single continuous loop. During long-running operations, MCP servers can stream partial results, so AI agents can make intermediate decisions, branch workflows, or ask for human input as needed.
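Streaming partial results, and deciding mid-stream whether to continue, can be modeled with a Python generator. The function names and the update shape are hypothetical; a real server would deliver these updates as protocol messages over a transport.

```python
from typing import Iterator, List


def long_running_export(total_rows: int, batch: int) -> Iterator[dict]:
    """Hypothetical server-side operation that yields progress plus partial rows."""
    sent = 0
    while sent < total_rows:
        size = min(batch, total_rows - sent)
        sent += size
        yield {
            "progress": sent,
            "total": total_rows,
            "rows": list(range(sent - size, sent)),
        }


def consume(stream: Iterator[dict], row_limit: int) -> List[int]:
    """Collect rows and stop early once enough data has arrived."""
    collected: List[int] = []
    for update in stream:
        collected.extend(update["rows"])
        if len(collected) >= row_limit:
            break  # intermediate decision: no need to wait for the rest
    return collected


print(consume(long_running_export(total_rows=10, batch=4), row_limit=5))
```

Because the consumer sees each batch as it arrives, it can stop, branch, or ask a human for input without waiting for the whole operation to finish.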

Combining indexed retrieval for evergreen content repositories with MCP's authoritative on-demand lookups supports fast and accurate answers. This hybrid approach keeps governance controls in place and gives AI-powered tools access to both static knowledge bases and dynamic external data sources.

MCP's support for multi-turn orchestration lets agentic systems handle complex workflows that require coordination across multiple tools and data sources. This automation potential changes how organizations deploy AI applications in production environments.

Implementation best practices: system preparation

Verify that your infrastructure supports LLM hosting, MCP servers, and the connected data sources. Provision sufficient GPU/CPU resources, memory, and network bandwidth for the client-server architecture. Choose cloud platforms that support elastic scaling for concurrent users, and define autoscaling policies.

Standardize on secure transports using TLS for all remote connections between MCP clients and servers. Document connection lifecycle management, including reconnection strategies and observable stream-health metrics. Implement rate limits, circuit breakers, and quotas to protect downstream external systems from overload.

Organizations should standardize on streaming channels (HTTP/SSE, WebSocket) for remote connections and on local STDIO for embedded components. Validate JSON payloads and schemas on both the server and the client to prevent injection attacks and to ensure proper error handling across the system.

Using open-source resources across programming languages

The MCP ecosystem includes community SDKs in several programming languages that speed up client and server development. These SDKs provide established patterns for JSON-RPC messaging, streaming, and schema validation, so the protocol basics do not have to be reimplemented.

Developers can reuse existing MCP server implementations for common enterprise systems and extend them for domain-specific use cases through the open standard. Building simulators that mimic notifications, long-lived streams, and error conditions helps teams test agentic systems before they go to production.
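Such a simulator can be as small as a scripted message source. In this sketch, `ScriptedServer` replays a fixed list of messages and can raise an exception mid-stream to imitate a dropped connection, while `run_client` is a toy client loop under test. Both names are hypothetical; the `notifications/progress` method name and the JSON-RPC error code `-32603` (internal error) are standard.

```python
class ScriptedServer:
    """Test double that replays scripted messages; exceptions simulate failures."""

    def __init__(self, script):
        self._script = list(script)

    def messages(self):
        for item in self._script:
            if isinstance(item, Exception):
                raise item  # e.g. the connection drops mid-stream
            yield item


def run_client(server):
    """Toy client loop: track progress and capture the result or the failure."""
    state = {"progress": [], "result": None, "error": None}
    try:
        for message in server.messages():
            if message.get("method") == "notifications/progress":
                state["progress"].append(message["params"]["progress"])
            elif "error" in message:
                state["error"] = message["error"]["message"]
            elif "result" in message:
                state["result"] = message["result"]
    except ConnectionError as exc:
        state["error"] = f"connection lost: {exc}"
    return state


def progress(value):
    return {
        "jsonrpc": "2.0",
        "method": "notifications/progress",
        "params": {"progressToken": "t1", "progress": value},
    }


# Scenario 1: two progress updates, then the connection drops.
dropped = run_client(ScriptedServer([progress(1), progress(2), ConnectionError("reset")]))
print(dropped)

# Scenario 2: the server answers with a JSON-RPC error response.
failed = run_client(ScriptedServer([
    {"jsonrpc": "2.0", "id": 1, "error": {"code": -32603, "message": "Internal error"}},
]))
print(failed)
```

Scripts like these make failure paths repeatable in tests, which is hard to achieve against a live server.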

Use community resources to speed up work with MCP and avoid rebuilding common functionality. These open-source tools let developers focus on business logic rather than on the details of the protocol implementation.

Integration strategy for production deployment

Start with high-impact use cases that have a measurable ROI, such as context-aware AI assistants or automated workflows driven by AI agents. Limit the initial tool scope and tool permissions, collect telemetry and user feedback, and expand capabilities once the core functionality is stable.

Balance latency and freshness by combining MCP's live lookups with RAG for large static corpora from content repositories. Define SLAs, audit trails, and escalation procedures before a broad production rollout. This phased approach reduces risk while building organizational trust in agentic AI deployments.

MCP meets the need for structured integration plans that scale as more MCP servers and clients join the ecosystem. Organizations should document their integration architecture and governance policies early in the deployment process.

Common misconceptions: MCP is not just another API framework

Reality: MCP standardizes integration at the protocol level, with persistent context management and dynamic capability discovery. Unlike plain REST or RPC calls, MCP defines how AI agents discover capabilities, subscribe to streams, and maintain contextual state across interactions.

Because the protocol is standardized, you can build a tool once and expose it uniformly to multiple AI agents and model providers through the MCP ecosystem. Rather than treating model context as a throwaway payload, MCP treats it as a first-class, versioned resource with proper lifecycle management.

Tools and agents are distinct components

Reality: Tools are discrete capabilities exposed through MCP servers, such as database access, file operations, or API integrations. AI agents are decision-making programs that discover, orchestrate, and invoke those tools to carry out tasks autonomously.

MCP lets AI agents discover tool metadata dynamically, invoke tool interfaces safely with function-calling semantics, and integrate the outputs into conversations through MCP clients. This separation allows different agentic systems to share the same tool catalog, while tool owners update interfaces independently of the agent logic.

MCP manages comprehensive data connectivity

Reality: MCP manages connectivity to external data sources that goes beyond simple tool use. It supports streaming notifications, authenticated access to content repositories and vector stores, and consistent semantics for long-running operations and error handling.

MCP offers a practical way to unify access to local resources, remote resources, live data queries, and operational actions in a structured way. This unified approach helps governance, observability, and access control scale along with AI capabilities in enterprise environments. MCP exposes other tools and external services through one consistent interface.

Future research directions and development

As the open standard evolves, future research directions include stronger security frameworks for multi-tenant deployments, richer streaming semantics for complex agentic workflows, and standardized patterns for integrating with additional programming languages and development environments.

The MCP ecosystem keeps growing through MCP server implementations for new external tools and platforms. Community contributions to SDKs, adapters, and reference architectures speed up adoption while preserving the protocol's main goal: letting any AI application connect to any external service in a standardized way.

Organizations exploring MCP should follow developments in the ecosystem, contribute to MCP implementations, and take part in working groups that shape how AI assistants connect to external systems. This collaborative approach keeps MCP implementations interoperable as AI systems and external services evolve. Future work will likely focus on extending the protocol's capabilities while preserving its basic simplicity.

Conclusion: the Model Context Protocol as a foundation for modern AI

The Model Context Protocol represents a fundamental shift in how AI applications access external data sources and tools. By providing an open protocol for discovery-based integration, MCP lets developers build context-aware AI agents that carry out tasks using live data from common enterprise systems without extensive boilerplate integration code.

The standardized protocol reduces complexity through its client-server architecture, speeds up development cycles, and lets AI systems move beyond the limits of an LLM's static training data. With bidirectional communication between MCP clients and MCP servers and support for agentic AI workflows, MCP lays the groundwork for more capable, autonomous AI tools in a range of environments.

As the MCP ecosystem grows with new MCP server implementations and integrations, organizations can build sophisticated AI agents that discover and orchestrate multiple external services while maintaining the appropriate tool permissions, security controls, and audit trails. This standardized approach to connecting AI systems with external tools and data sources will continue to shape how organizations deploy production AI applications. MCP provides the essential infrastructure developers need to build the next generation of AI applications reliably.