AI Governance Best Practices: How to Build Responsible and Effective AI Programs

Summary

- Enterprise AI governance manages risk, compliance, and trust as AI systems move into high-stakes production use, grounding decisions in principles like fairness, transparency, accountability, privacy, security, and built‑in safeguards.

- Effective governance frameworks align oversight with business risk, define clear cross-functional roles and policies, embed checkpoints across the AI lifecycle, and rely on structured risk assessments, monitoring, incident response, and standardized documentation.

- Scalable programs use centralized standards with federated execution, human‑in‑the‑loop for high‑risk decisions, proactive tracking of evolving regulations, and strong C‑suite sponsorship so governance becomes an enabler for responsible AI innovation rather than a bottleneck.

Why Enterprise AI Governance Matters Now

Enterprise AI adoption is accelerating, driven by advances in generative AI. These models learn from data; their behavior shifts as inputs change, and their capabilities improve as training techniques and datasets advance.

But even as models become more sophisticated and accurate, state-of-the-art LLMs and AI projects still pose enterprise risks, especially when deployed in production. As a result, AI governance matters more than ever, and companies need a robust, multifaceted strategy.

Key pillars of governance include:

- Data access controls and permissions

- Data lineage and full observability

- Built-in AI safeguards to protect PII and block unsafe content

- Compliance and regulatory requirements

What are the goals of an AI governance framework?

AI governance best practices provide a structured way to ensure AI systems are developed, deployed and operated responsibly. They also keep AI work aligned with business objectives, manage risk across the AI lifecycle and build trust with users and stakeholders.

Regulatory pressure on AI use is also increasing. Governments and standards bodies are introducing new requirements and guidance on transparency, accountability and oversight of AI systems, giving organizations theoretical and practical frameworks on which to build their governance. For example, the OECD AI Principles provide a values-based foundation for an AI governance framework, while the European Union’s AI Act establishes a risk-based framework with heightened requirements for high-risk AI use cases.

Beyond compliance, AI governance has direct business value. Organizations with clear governance structures are better positioned to:

- Earn stakeholder trust in AI-driven decisions

- Reduce operational and legal risk

- Scale AI systems more efficiently across teams and use cases

- Demonstrate accountability and control as AI programs mature

Common Challenges Driving the Need for Governance

Many enterprises encounter similar challenges as AI adoption grows:

- Unclear ownership: Responsibility for AI outcomes is often fragmented across data, engineering, legal and business teams. Teams may ship models, but no one team owns the outcomes.

- Rapidly evolving tools: New AI and GenAI tools are improving so quickly that teams often adopt them before proper controls and processes are in place.

- Fragmented data and processes: Data, training, deployment and monitoring can live in separate systems, making oversight and consistent documentation difficult.

- Limited auditability: Proving how an AI system was trained, evaluated and deployed can be time-consuming or impossible without governance artifacts.

These challenges underscore why governance must be intentional and embedded in core processes early, rather than retrofitted after issues arise.

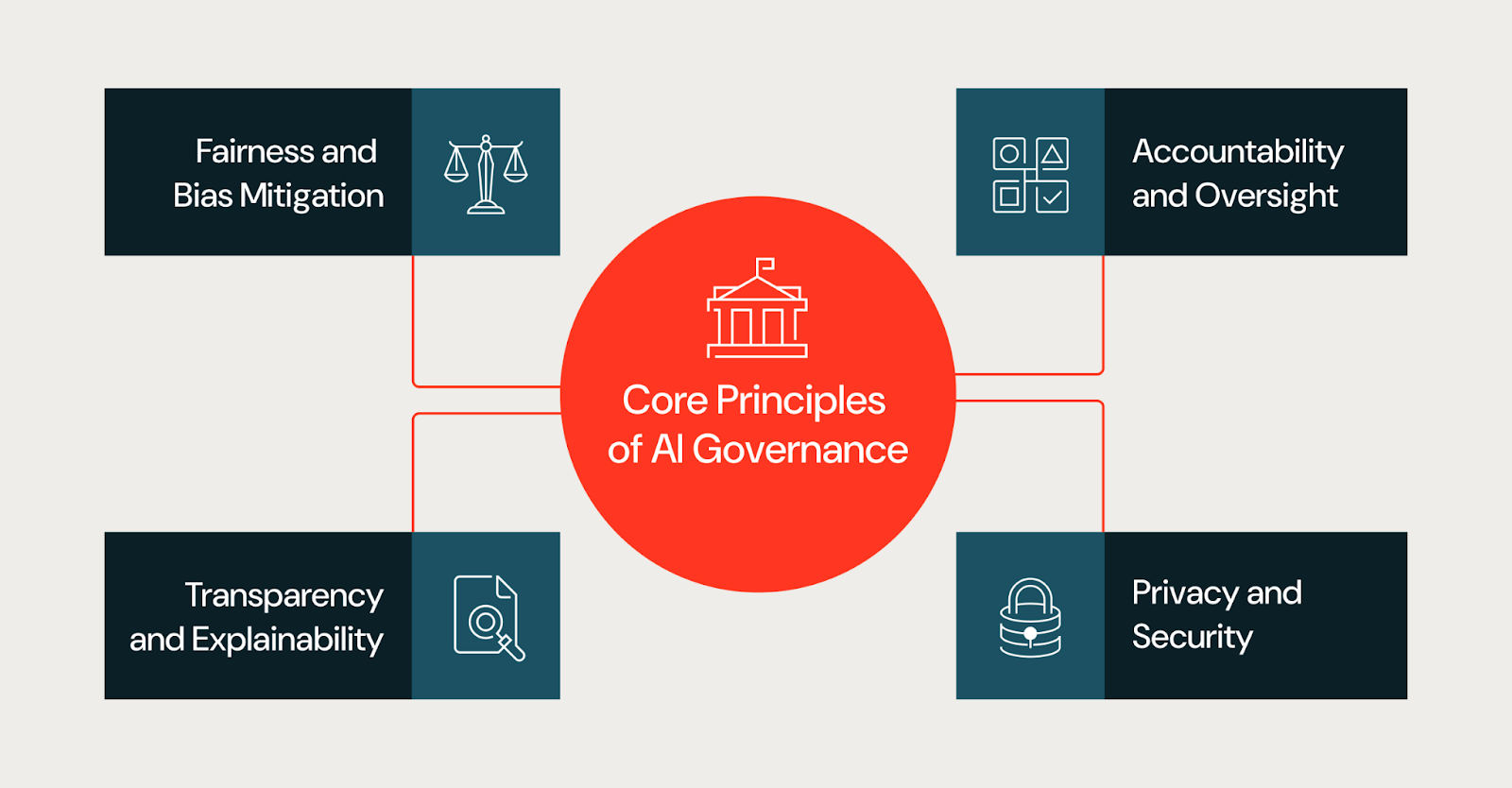

Core Principles Behind Effective AI Governance

Governance applies to AI systems as a whole, including data, prompts, workflows, human decision points and downstream applications, not just to individual models. Many enterprise risks emerge from how these components interact, rather than from the model itself. AI governance best practices are grounded in a consistent set of foundational principles. These principles guide decisions across the AI lifecycle and provide a shared framework for teams with different responsibilities.

Fairness and Bias Mitigation

Bias can be introduced through training data, feature selection or deployment context and lead to disparate outcomes across populations. Governance programs should require teams to assess fairness risks early, document known limitations and monitor for unintended bias as models evolve in production.

In practice, teams assess fairness by examining training data for representation gaps, testing model outputs across demographic groups and defining fairness metrics before deployment. Common approaches include disaggregated evaluation, bias audits during development and ongoing monitoring for drift in production. Teams should also record edge cases where the model may underperform.
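To make disaggregated evaluation concrete, here is a minimal sketch that assumes labels, predictions and a group attribute are available as parallel lists. The function names and the 0.05 gap threshold are hypothetical, chosen only for illustration; real fairness reviews usually track several metrics, not accuracy alone.

```python
from collections import defaultdict


def disaggregated_accuracy(y_true, y_pred, groups):
    """Compute accuracy separately for each group so gaps are visible."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = per_group.values()
    return max(values) - min(values)


# Hypothetical threshold, agreed on before deployment.
MAX_ALLOWED_GAP = 0.05

y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "b", "b", "b"]

per_group = disaggregated_accuracy(y_true, y_pred, groups)
gap = accuracy_gap(per_group)
needs_review = gap > MAX_ALLOWED_GAP
```

A single aggregate accuracy here would look acceptable; only the per-group view shows that group "b" fares worse, which is the point of disaggregating.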

Transparency and Explainability

Transparency helps stakeholders understand how AI systems are built and how they influence outcomes. This doesn’t mean demanding full visibility into a vendor’s proprietary model architecture or training data, as closed model providers typically don’t disclose those details. Instead, transparency focuses on what an organization can control and document.

This includes clarity on which models and versions a team is using, what data it passes to them, how it prompts or fine-tunes them and what evaluation criteria it applies before deployment. Teams should also document decision logic at the application layer to explain how model outputs are used, filtered or overridden in downstream workflows. For stakeholders who need explanations, such as regulators, executives or affected users, the goal is to provide appropriate context for how the system reaches its outputs, even when the underlying model is a black box.

Accountability and Oversight

Effective governance defines clear ownership for AI systems. Every model or AI application should have accountable individuals or teams responsible for outcomes, risk management and compliance with internal policies. Oversight mechanisms ensure that responsibility persists after deployment instead of disappearing once a model ships.

Privacy and Security

AI systems often process sensitive or regulated data, so governance must ensure that privacy protections and security controls are applied consistently, including role-based access management, PII filtering and filters for unsafe outputs. Privacy and security considerations should be integrated throughout the AI lifecycle, not addressed only at deployment time.

Built-In Safeguards

AI systems need guardrails that prevent harmful or unintended outputs. Built-in safeguards include input validation to catch malformed or adversarial queries, output filters that block unsafe or inappropriate content, PII detection to prevent data exposure and content moderation for user-facing applications. These controls should be configurable by risk tier; a low-risk internal tool may need lighter safeguards than a customer-facing agent.
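A minimal sketch of tier-configurable safeguards follows, assuming a simple dictionary of per-tier settings. The regular expressions, tier names and the single blocked phrase are illustrative assumptions only; production PII detection and prompt-injection defenses need far broader coverage than a couple of patterns.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical per-tier settings: the high tier gets a stricter input limit
# and a (deliberately crude) check for a known prompt-injection phrase.
TIER_SAFEGUARDS = {
    "low": {"max_input_chars": 20_000, "blocked_phrases": ()},
    "high": {"max_input_chars": 4_000,
             "blocked_phrases": ("ignore previous instructions",)},
}


def check_input(text, tier):
    """Input validation: return (allowed, reason) for this risk tier."""
    config = TIER_SAFEGUARDS[tier]
    if len(text) > config["max_input_chars"]:
        return False, "input exceeds length limit for this tier"
    lowered = text.lower()
    for phrase in config["blocked_phrases"]:
        if phrase in lowered:
            return False, f"blocked phrase: {phrase!r}"
    return True, "ok"


def redact_pii(text):
    """Output filter: replace detected PII with a placeholder label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

Keeping the settings in one table, rather than hard-coding checks per application, is what lets a low-risk internal tool and a customer-facing agent share the same code path with different strictness.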

Unified Access for Models and AI Projects

As organizations scale AI adoption, access to models and AI projects should be governed through a centralized framework. Unified access controls ensure consistent permissions across development, staging and production environments. This includes role-based access for who can view, modify or deploy models; audit trails that track changes and usage; and integration with identity management systems. Centralized access also makes compliance easier to demonstrate and reduces the risk of ‘shadow AI’ (models and applications deployed outside formal oversight), which is one of the biggest barriers to consistent governance at scale.
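The sketch below shows the core of unified access control: one role-to-permission mapping applied in every environment, with every request written to an audit trail whether it succeeds or not. The roles, the `AccessController` class and the user-to-role dictionary (standing in for an identity provider) are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles; the same mapping applies in every environment,
# which keeps permissions consistent from development to production.
ROLE_PERMISSIONS = {
    "viewer": {"view"},
    "developer": {"view", "modify"},
    "release_manager": {"view", "modify", "deploy"},
}


@dataclass
class AccessController:
    user_roles: dict  # user -> role, e.g. synced from an identity provider
    audit_log: list = field(default_factory=list)

    def authorize(self, user, action, resource, environment):
        """Check a request against the user's role and record it either way."""
        role = self.user_roles.get(user)
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "resource": resource,
            "environment": environment,
            "allowed": allowed,
        })
        return allowed
```

Users without a known role get no permissions, and denied requests are logged too, which is often where signs of shadow AI first show up.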

Building a Practical AI Governance Framework

While governance principles define what good governance looks like, frameworks define how organizations put those principles into practice. In other words, a practical AI governance framework translates high-level goals into specific roles, policies and controls that fit an organization’s structure and risk tolerance.

See the Databricks AI Governance Framework for an example of a structured approach to defining governance pillars and key considerations.

Align Governance With Business Objectives

Organizations achieve better results when governance aligns with business impact and risk. Not every AI system needs the same level of oversight. A chatbot that summarizes external documents carries a different risk than a model that approves loans or prioritizes medical cases.

Establish Governance Roles and Structures

To be effective, AI governance must be cross-functional. This requires ongoing, intentional collaboration between data and AI teams, legal and compliance, privacy and security and business stakeholders. Common structures include:

- Cross-functional governance committees

- Clearly defined RACI models

- Human-in-the-loop requirements for high-risk decisions

- Role-based access controls

These structures clarify decision rights and reduce ambiguity as AI programs scale.

Define AI Policies, Standards and Controls

Clear standards reduce friction. Effective governance frameworks specify:

- Required documentation and artifacts

- AI risk classification criteria

- Approval thresholds by risk tier

- Monitoring, incident response and audit expectations

When standards stay vague, teams invent local interpretations. When standards stay concrete, teams move faster with fewer surprises.

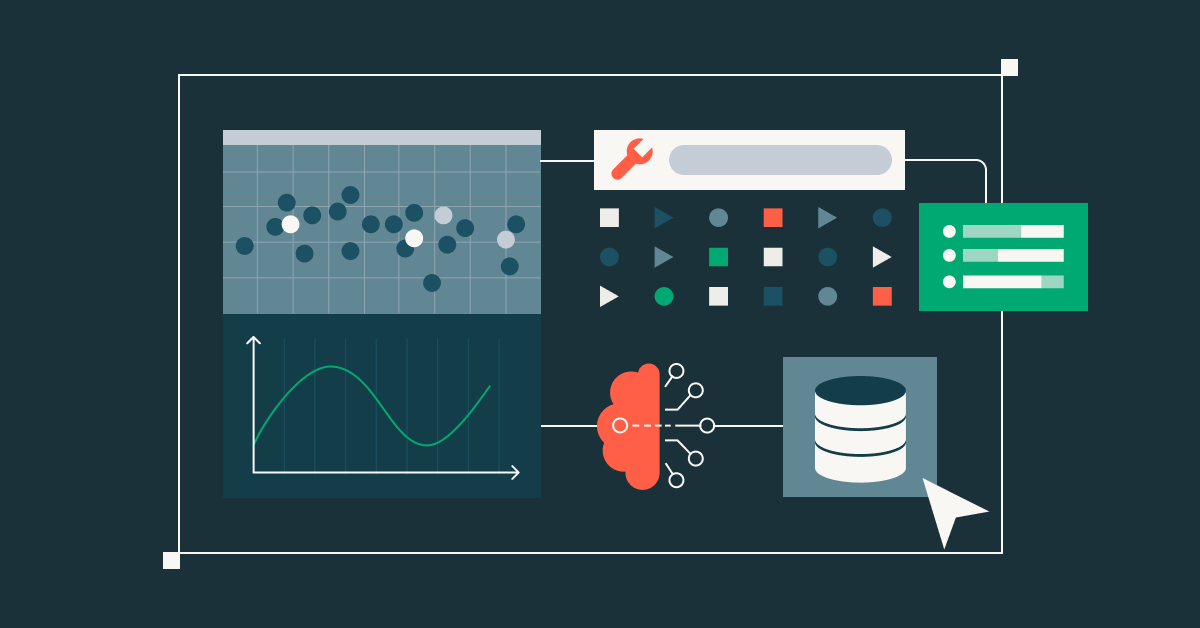

Operationalizing AI Governance

AI governance must be built into how teams design, ship and operate AI systems. To work in practice and not just in theory, operational governance must answer concrete questions: who decides, what evidence teams must produce and how systems stay compliant over time. The answers give teams an explicit process for integrating governance into their workflows.

Integrate Governance Into the AI Development Lifecycle

Most organizations aren’t building foundation models from scratch. They’re combining existing models with proprietary data to create AI projects, agents and applications. Governance should reflect this reality by embedding checkpoints directly into the development lifecycle. For example, an internal AI assistant that summarizes external documents may initially be classified as low risk. If that same system is later exposed to customers or used to inform regulated decisions, its risk profile changes, requiring new approvals, safeguards and monitoring.

- Define scope and intent. Before development begins, document the system’s intended use, prohibited uses and decision context. This prevents scope creep, where systems get reused in higher-risk scenarios without review.

- Document data sources. Record data ownership, consent constraints and known limitations. If teams can’t explain where data came from and why it’s appropriate, they shouldn’t use it to build or fine-tune a system.

- Establish evaluation criteria. Agree on metrics, thresholds and acceptable trade-offs before testing. Teams should document why they chose specific metrics and what failure modes they observed. This turns evaluation into a decision record for future reference.

- Enforce release gates. Require named owners, completed documentation and signoff aligned to the system’s risk tier. Define rollback criteria so teams know when to pull a system out of production.

- Monitor and review. After deployment, review production behavior, validate assumptions against real usage and document changes over time.
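A release gate like the one described above can be expressed as a simple check. This is a minimal sketch: the artifact names, the tier requirements and the dictionary shape of `system` are assumptions for illustration, not a prescribed schema.

```python
# Hypothetical artifact requirements per risk tier.
REQUIRED_ARTIFACTS = {
    "low": {"system_summary"},
    "medium": {"system_summary", "data_documentation", "evaluation_summary"},
    "high": {"system_summary", "data_documentation", "evaluation_summary",
             "monitoring_plan", "risk_signoff"},
}


def release_gate(system):
    """Return a list of blocking problems; an empty list means the gate passes."""
    problems = []
    if not system.get("owner"):
        problems.append("no named owner")
    if not system.get("rollback_criteria"):
        problems.append("no rollback criteria defined")
    present = set(system.get("artifacts", []))
    missing = REQUIRED_ARTIFACTS[system["risk_tier"]] - present
    problems.extend(f"missing artifact: {name}" for name in sorted(missing))
    return problems
```

Returning every problem at once, instead of failing on the first, saves teams a round trip per missing item.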

Conduct AI Risk Assessments

Risk assessment is the heart of practical governance. It determines how much control a system needs and where teams should focus attention. Effective assessments focus on a small set of questions:

- Who does this system affect?

- What decisions does it influence or automate?

- What happens when it fails?

- How easily can humans intervene?

- What data sensitivity does it involve?

Once teams gather answers to these questions, they can then assign a risk tier, which is a way of translating judgment into action. For example, a low-risk internal tool may require lightweight documentation and periodic review, whereas a high-risk system may require frequent human oversight, formal approval and continuous monitoring.

Risk assessments should happen early and be subject to continuous updates over time. As systems expand to new users or use cases, their risk profile often changes. Governance processes should be designed to account for that evolution.
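One way to turn the five questions into a tier is a small scoring function. The weights and cut-offs below are hypothetical and would need calibration against an organization's own risk tolerance; the example also re-assesses the internal summarizer from earlier once it becomes customer-facing.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RiskAnswers:
    affects_external_users: bool  # Who does this system affect?
    automates_decisions: bool     # What decisions does it influence or automate?
    failure_severity: int         # What happens when it fails? 1 (minor) to 3 (severe)
    human_can_intervene: bool     # How easily can humans intervene?
    sensitive_data: bool          # What data sensitivity does it involve?


def assign_risk_tier(answers: RiskAnswers) -> str:
    """Translate assessment answers into a tier using hypothetical weights."""
    score = answers.failure_severity
    score += 2 if answers.affects_external_users else 0
    score += 2 if answers.automates_decisions else 0
    score += 0 if answers.human_can_intervene else 1
    score += 1 if answers.sensitive_data else 0
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"


# An internal document summarizer, assessed at launch.
internal = RiskAnswers(False, False, 1, True, False)
# The same system later exposed to customers: re-assess instead of reusing the old tier.
customer_facing = replace(internal, affects_external_users=True,
                          failure_severity=2, sensitive_data=True)
```

Under these assumed weights the internal tool scores low, the customer-facing version moves to medium, and a loan-approval system with no human intervention lands in the high tier.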

Define Approval and Escalation Paths

Operational governance depends on clear decision paths. Teams need to know who can approve a system, when escalation is required and how to resolve disagreements. Organizations typically define:

- approval authorities by risk tier

- escalation triggers for unresolved issues

- timelines for review and response

- criteria for halting or rolling back systems

Without defined paths, decisions stall, responsibility diffuses and teams may bypass controls just to keep moving. Clear paths reduce ambiguity and increase compliance because teams understand how to move forward.
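Approval authorities, review timelines and escalation triggers can live in one policy table. The roles and day counts below are hypothetical placeholders; the sketch only shows how an overdue review becomes an explicit escalation instead of a silent stall.

```python
from datetime import date, timedelta

# Hypothetical approval authorities, review timelines and escalation targets.
APPROVAL_POLICY = {
    "low": {"approver": "team_lead", "review_days": 5,
            "escalate_to": "domain_governance_lead"},
    "medium": {"approver": "domain_governance_lead", "review_days": 10,
               "escalate_to": "governance_committee"},
    "high": {"approver": "governance_committee", "review_days": 15,
             "escalate_to": "executive_sponsor"},
}


def route_approval(risk_tier, submitted_on, today):
    """Who approves, when review is due, and whether it should escalate."""
    policy = APPROVAL_POLICY[risk_tier]
    due = submitted_on + timedelta(days=policy["review_days"])
    overdue = today > due
    return {
        "approver": policy["approver"],
        "due": due,
        "escalate_to": policy["escalate_to"] if overdue else None,
    }
```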

Implement Monitoring and Compliance Controls

Given how quickly AI systems develop, AI governance cannot be static. Over time, data changes, usage patterns shift and performance can degrade. Teams should monitor AI behavior in production, focusing on:

- performance against defined metrics

- data drift and distribution changes

- unexpected inputs or outputs

- system usage outside the intended scope

Governance defines what teams must monitor, how often they review results and what actions they take when thresholds are breached. These actions may include retraining, restricting usage, escalating to review bodies or shutting systems down. Treating monitoring as a feedback loop lets organizations catch problems early and feed what they learn back into their systems and policies.
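For data drift, one widely used measure is the population stability index (PSI), which compares a baseline distribution with production data over the same bins. PSI is swapped in here as an example technique, not something the text prescribes, and the 0.1 and 0.25 thresholds are common rules of thumb rather than a standard. The sketch assumes the histograms are already binned.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline histogram and a production histogram.

    Both inputs are counts over the same bins. eps guards against log(0)
    when a bin is empty.
    """
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / expected_total, eps)
        a_pct = max(a / actual_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_action(psi, review_at=0.1, escalate_at=0.25):
    """Map a drift score to a governance action (thresholds are assumptions)."""
    if psi >= escalate_at:
        return "escalate"
    if psi >= review_at:
        return "review"
    return "ok"
```

Mapping the score directly to an action is what turns a monitoring metric into the governance feedback loop described above.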

Establish Incident Response and Remediation Processes

In addition to creating a culture of feedback and accountability, strong governance frameworks must account for failure. Even well-designed systems break or degrade over time.

Governance must also define how teams respond to AI incidents such as biased outcomes, unsafe behavior, data exposure or regulatory concerns. Teams need predefined playbooks that specify:

- How to identify and classify incidents

- Who owns response and communication

- How to contain harm

- How to document root causes and remediation

Post-incident reviews feed lessons back into governance by updating risk assessments, improving controls and refining policies. This loop ensures governance evolves with real-world experience.
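A playbook can be encoded so that classification, ownership, containment and documentation follow a predictable path. This is a minimal sketch: the incident types mirror the examples in the text, while the owners, containment steps and the 1-to-3 severity scale are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class IncidentType(Enum):
    BIASED_OUTCOME = "biased_outcome"
    UNSAFE_BEHAVIOR = "unsafe_behavior"
    DATA_EXPOSURE = "data_exposure"
    REGULATORY_CONCERN = "regulatory_concern"


# Hypothetical owners and first containment steps for each incident type.
PLAYBOOK = {
    IncidentType.BIASED_OUTCOME: ("system_owner", "restrict the affected use case"),
    IncidentType.UNSAFE_BEHAVIOR: ("system_owner", "tighten output filters or disable the feature"),
    IncidentType.DATA_EXPOSURE: ("security_team", "revoke access and disable the endpoint"),
    IncidentType.REGULATORY_CONCERN: ("legal_compliance", "pause processing pending review"),
}


@dataclass
class Incident:
    system: str
    kind: IncidentType
    severity: int  # 1 (low) to 3 (critical), an assumed scale
    root_cause: str = ""
    remediation: list = field(default_factory=list)
    closed: bool = False

    def response_plan(self):
        """Look up who owns the response and how to contain harm."""
        owner, containment = PLAYBOOK[self.kind]
        return {"owner": owner, "contain": containment,
                "notify_leadership": self.severity >= 3}

    def close(self, root_cause, remediation):
        """Closing requires a documented root cause and remediation steps."""
        if not root_cause or not remediation:
            raise ValueError("document root cause and remediation before closing")
        self.root_cause = root_cause
        self.remediation = list(remediation)
        self.closed = True
```

Refusing to close an incident without a root cause is a small design choice that guarantees post-incident reviews have something to feed back into governance.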

Standardize Governance Artifacts

Standardized documentation provides evidence and keeps governance consistent across AI systems. It also simplifies audits and avoids duplicated effort recreating documents for each review. Organizations typically prioritize the following standardized documents:

- System summaries that define purpose and scope

- Data documentation that records sources and constraints

- Evaluation summaries that capture performance and limitations

- Monitoring plans that define ongoing oversight
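The four document types above can share a single schema, so every system is described the same way. The class name and field breakdown below are hypothetical, a sketch of one possible template rather than a standard format.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class GovernanceArtifacts:
    system_name: str
    purpose: str = ""             # system summary: what the system is for
    scope: str = ""               # system summary: intended and prohibited uses
    data_sources: str = ""        # data documentation: where data comes from
    data_constraints: str = ""    # data documentation: ownership, consent, limits
    evaluation_summary: str = ""  # performance and known limitations
    monitoring_plan: str = ""     # what is monitored, how often, who reviews

    def missing(self):
        """Names of sections that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def to_json(self):
        """Serialize in one consistent shape for audits and reviews."""
        return json.dumps(asdict(self), indent=2)
```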

Scale Governance Across Teams and Domains

As AI adoption continues to grow, an organization’s governance must scale without introducing bottlenecks. Many teams use a centralized–federated model, in which a central group defines standards, risk frameworks and policies, while domain teams apply them locally and remain accountable for outcomes. This model helps strike a balance between consistency and speed.

Scaling also requires training. As governance evolves, teams need to understand what it requires and how best to comply.


Ensuring Transparency, Trust and Explainability

Trust is a central outcome of effective AI governance. Stakeholders are more likely to adopt and rely on AI systems when they understand how decisions are made and how risks are managed throughout the AI lifecycle.

Human-in-the-Loop Oversight

For high-risk or sensitive use cases, humans should retain final authority over AI-driven decisions. Governance frameworks should define when human review is required, how interventions occur and how decisions are documented.
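Below is a minimal sketch of how such a rule might be enforced, assuming high-risk decisions always require a reviewer and other decisions require one only below a confidence threshold. The 0.9 threshold, the `Decision` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    case_id: str
    model_output: str
    model_confidence: float
    final_output: str = ""
    decided_by: str = ""
    override_reason: Optional[str] = None


def needs_human_review(risk_tier: str, confidence: float,
                       threshold: float = 0.9) -> bool:
    # High-risk decisions always go to a person; others only when the model is unsure.
    return risk_tier == "high" or confidence < threshold


def finalize(decision: Decision, risk_tier: str, reviewer: Optional[str] = None,
             reviewer_output: Optional[str] = None,
             reason: Optional[str] = None) -> Decision:
    """Record who made the final call and why any override happened."""
    if not needs_human_review(risk_tier, decision.model_confidence):
        decision.decided_by = "model"
        decision.final_output = decision.model_output
        return decision
    if reviewer is None:
        raise ValueError("human review is required before this decision is final")
    decision.decided_by = reviewer
    decision.final_output = reviewer_output or decision.model_output
    if decision.final_output != decision.model_output:
        if not reason:
            raise ValueError("overrides must be documented with a reason")
        decision.override_reason = reason
    return decision
```

The human reviewer's output always wins, and any override must carry a reason, which covers the "how interventions occur" and "how decisions are documented" parts of the policy.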

Communicating AI Decisions to Stakeholders

Different stakeholders require different levels of explanation. Technical teams may need detailed evaluation metrics, while executives and regulators may rely on summaries, model cards, or decision rationales. Clear communication builds confidence and supports accountability.

Keeping Pace With Regulatory and Industry Change

AI governance is never finished. Laws and regulations evolve, standards mature and AI capabilities advance faster than policy cycles.

Staying Current With Standards and Updates

Organizations stay current by assigning clear ownership for monitoring regulatory and standards changes. Effective programs create a small cross-functional group that includes legal, compliance, privacy, security and AI practitioners, rather than relying on ad hoc updates.

Monitoring works best on a fixed cadence, such as quarterly, rather than through reactive updates. To stay proactive, teams should assess each change by asking:

- Does this affect existing AI systems or only new ones?

- Does it introduce new documentation or oversight expectations?

- Does it apply globally or only in specific regions?

These impact checks help teams use and refine existing governance processes instead of creating parallel controls.
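The three questions can be applied mechanically against an AI system inventory. This sketch assumes each regulatory change is summarized with whether it covers existing systems, its new obligations and the regions where it applies; the class names and example data are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RegulatoryChange:
    name: str
    applies_to_existing: bool  # Does this affect existing AI systems or only new ones?
    new_obligations: list      # New documentation or oversight expectations
    regions: set = field(default_factory=set)  # Empty set means it applies globally


@dataclass
class AISystem:
    name: str
    deployed: bool
    regions: set


def impact_check(change, inventory):
    """Map each affected system in the inventory to the change's new obligations."""
    affected = {}
    for system in inventory:
        if system.deployed and not change.applies_to_existing:
            continue  # change only covers new systems
        if change.regions and not (change.regions & system.regions):
            continue  # change does not apply where this system runs
        affected[system.name] = list(change.new_obligations)
    return affected
```

The output is a list of obligations per existing system, which is what lets teams extend their current governance records instead of building parallel controls.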

Preparing for Future AI Governance Requirements

Future AI governance requirements will likely expand expectations around explainability, auditability and documentation. Organizations can prepare their processes now without waiting for detailed mandates.

Traceability and Explainability: Teams should define what explanations they require by risk tier and audience, from technical reviews to executive and regulatory summaries.

Auditability: Teams should maintain decision records that show what a system was designed to do, how it was evaluated, who approved it and how it changed after deployment.

Documentation: Teams should standardize their documentation, keeping system summaries, risk assessments, evaluation records and monitoring plans consistent and up to date.
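For auditability, one option is to make decision records tamper-evident by hash-chaining them: each entry stores the SHA-256 hash of the previous one, so editing any past record breaks verification. Hash chaining is a swapped-in technique, not something the text requires, and the `DecisionLog` class and event fields are hypothetical.

```python
import hashlib
import json


class DecisionLog:
    """Append-only log; each entry stores the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(event, prev_hash):
        body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(event, prev_hash)
        self.entries.append({"event": dict(event), "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry fails the check."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(entry["event"], prev_hash) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

This does not stop someone from rewriting the whole log, so in practice the latest hash would also be stored somewhere the system owners cannot modify.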

Overcoming Common Barriers to AI Governance Adoption

Despite its benefits, AI governance presents its own set of challenges within organizations. Common barriers include:

Misaligned incentives. When organizational incentives reward shipping models as quickly as possible, teams may view governance as a blocker. Data and AI teams focus on deploying models quickly, while governance requirements appear later as unexpected review cycles or documentation work.

Fragmented ownership. When responsibility for AI outcomes is spread across multiple teams, governance responsibilities can be unclear. No single team owns the outcome, so no one owns the controls.

Legacy technical debt. Older pipelines and models often lack the metadata, monitoring or documentation that governance expects. Retrofitting these systems requires effort that competes with new development priorities.

Strategies to Increase Adoption

Successful governance programs are proactive rather than reactive. C-suite leaders should consistently communicate why governance matters. Clear communication and training help practitioners understand how governance fits into existing workflows instead of adding parallel processes. Meanwhile, pilot initiatives play a critical role by demonstrating that governance reduces rework, prevents production incidents and accelerates approvals once standards are in place. Together, these strategies shift governance from a perceived obstacle into a practical foundation for scaling AI responsibly.

How to get started building an AI governance strategy

Strong AI governance helps organizations scale AI adoption with confidence. Attributes such as clear ownership, risk-based controls and continuous oversight reduce surprises and keep systems aligned with business and regulatory expectations.

Teams should start with a practical foundation: inventory current AI use cases, classify them by risk and assign accountable owners. Pilot governance controls on a small set of high-impact systems to establish standards and refine processes. As teams gain experience, they can extend governance across additional use cases and domains, strengthening oversight while preserving the speed and flexibility needed to sustain AI innovation.
