Alchemist: from Brickbuilder to a Databricks Marketplace App

Automate your migration from SAS to Databricks

Published: January 21, 2026

by Dmitriy Alkhimov, Aaron Zavora and Mark Lee

Summary

- Alchemist is a comprehensive SAS-to-Databricks migration accelerator that combines deep legacy expertise with modern AI capabilities.

- The solution functions as both an Analyzer, providing detailed insights into code complexity and dependencies, and a Transpiler that uses Large Language Models (LLMs) to achieve near 100% code conversion from formats such as SAS EG projects and SAS DI .spk jobs to PySpark.

- Alchemist helps enterprises not only modernize their code but also quickly and successfully transition their business processes and teams to the Databricks platform.

For nearly six years, T1A has partnered with Databricks to deliver end-to-end SAS-to-Databricks migration projects that help enterprises modernize their data platforms. As a former SAS Platinum Partner, we possess a deep understanding of the platform’s strengths, quirks, and hidden issues that stem from the unique behavior of the SAS engine. Today, that legacy expertise is complemented by a team of Databricks Champions and a dedicated Data Engineering practice, giving us the rare ability to speak both “SAS” and “Spark” fluently.

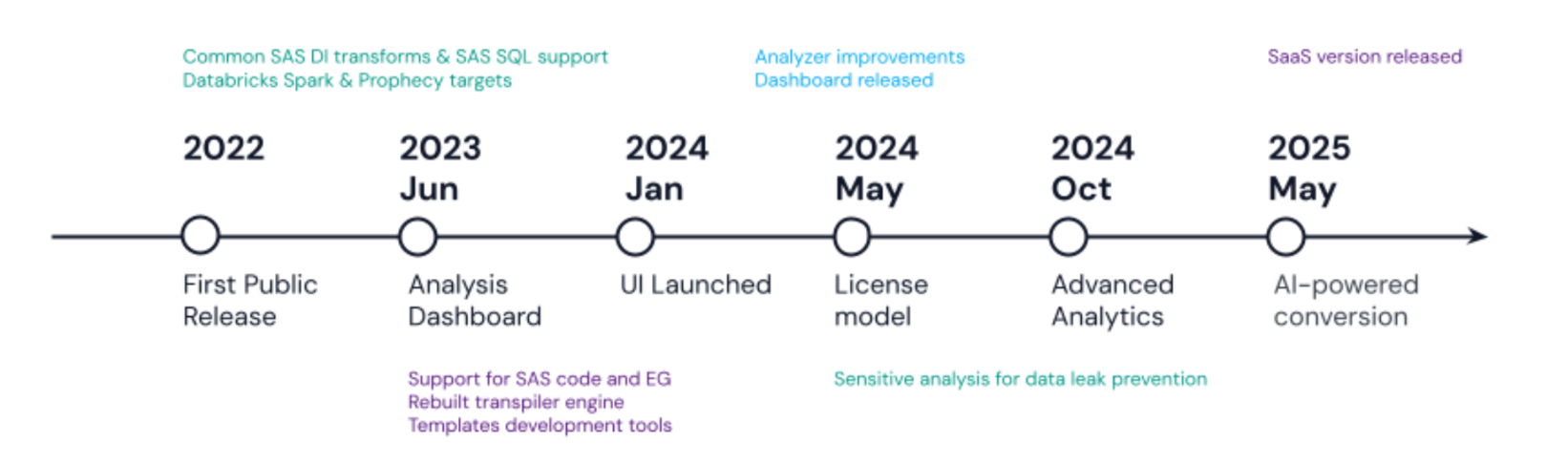

Early in our journey, we observed a recurring pattern: organizations wanted to move away from SAS for a variety of reasons, yet every migration path looked painful, risky, or both. We surveyed the market, piloted several tooling options, and concluded that most solutions were underpowered and treated SAS migration as little more than “switching SQL dialects.” That gap drove us to build our own transpiler, and Alchemist was first released in 2022.

Alchemist is a powerful tool that automates your migration from SAS to Databricks:

- Parses and analyzes your SAS code to provide detailed insights at every level, closing gaps left by basic profilers and giving you a clear understanding of your workload

- Converts SAS code to Databricks using best practices designed by our architects and Databricks Champions, delivering clean, readable code without unnecessary complexity

- Supports all common formats, including SAS code (.sas files), SAS EG project files, and SAS DI jobs in .spk format, extracting both code and valuable metadata

- Provides flexible, configurable results with custom template functions to meet even the strictest architectural requirements

- Integrates LLM capabilities to handle atypical code structures, achieving a 100% conversion rate on every file

- Integrates easily with frameworks or CI/CD pipelines to automate the entire migration flow, from analysis to final validation and deployment

Alchemist, together with our other tools, is no longer just a migration accelerator; it is the main engine driving migration on our projects.

So, what does Alchemist look like in depth?

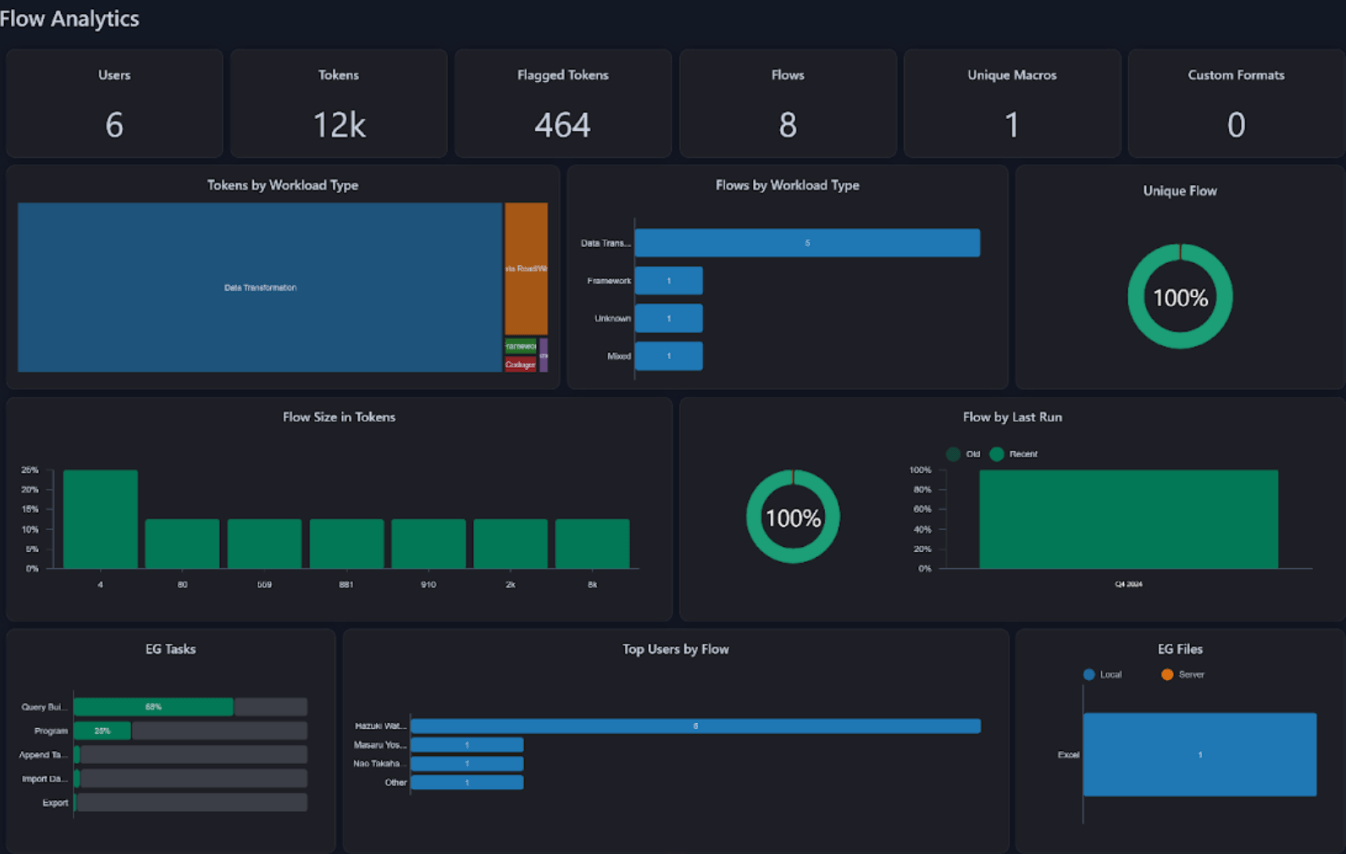

Alchemist analyzer

First and foremost, Alchemist is not just a transpiler; it is a powerful assessment and analysis tool. The Alchemist Analyzer quickly parses and examines any batch of code, producing a comprehensive profile of its SAS code characteristics. Instead of spending weeks on manual review, clients can obtain a full picture of code patterns and complexity in minutes.

The analysis dashboard is free and is now available in three ways:

- Databricks Marketplace: T1A Alchemist

- SaaS version: https://live.getalchemist.io/

- Local version: quick-start guide at https://app.getalchemist.io/main

This analysis provides insight into the size of the migration scope, highlights unique elements, detects integrations, and helps assess team preferences for different programmatic patterns. It also classifies workload types, helps us predict automation rates, and estimates the effort needed to validate result quality.
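To give a feel for the kind of profiling described above, here is a minimal sketch (not Alchemist's implementation) that counts DATA steps, PROC steps by name, and macro definitions in a SAS source string. The regular expressions are simplified assumptions: they ignore comments, quoted strings, and macro quoting, which is exactly why a real analyzer builds a full parse tree instead.

```python
import re
from collections import Counter

# Simplified patterns; a real analyzer would tokenize SAS properly
# (comments, strings, macro quoting), which these regexes do not handle.
DATA_STEP = re.compile(r"^\s*data\s+[\w.]+", re.IGNORECASE | re.MULTILINE)
PROC_STEP = re.compile(r"^\s*proc\s+(\w+)", re.IGNORECASE | re.MULTILINE)
MACRO_DEF = re.compile(r"%macro\s+(\w+)", re.IGNORECASE)


def profile_sas(code: str) -> dict:
    """Return rough counts of SAS constructs found in `code`."""
    procs = Counter(name.lower() for name in PROC_STEP.findall(code))
    return {
        "data_steps": len(DATA_STEP.findall(code)),
        "procs": dict(procs),
        "macros": [m.lower() for m in MACRO_DEF.findall(code)],
    }


# Hypothetical input used only for illustration.
sample = """
%macro load(tbl);
  data work.&tbl.; set raw.&tbl.; run;
%mend;
proc sql; create table work.x as select * from work.a; quit;
proc sort data=work.x; by id; run;
proc sql; select count(*) from work.x; quit;
"""

print(profile_sas(sample))
```

Aggregated over thousands of files, counts like these are the raw material for the scope, complexity, and automation-rate estimates mentioned above.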

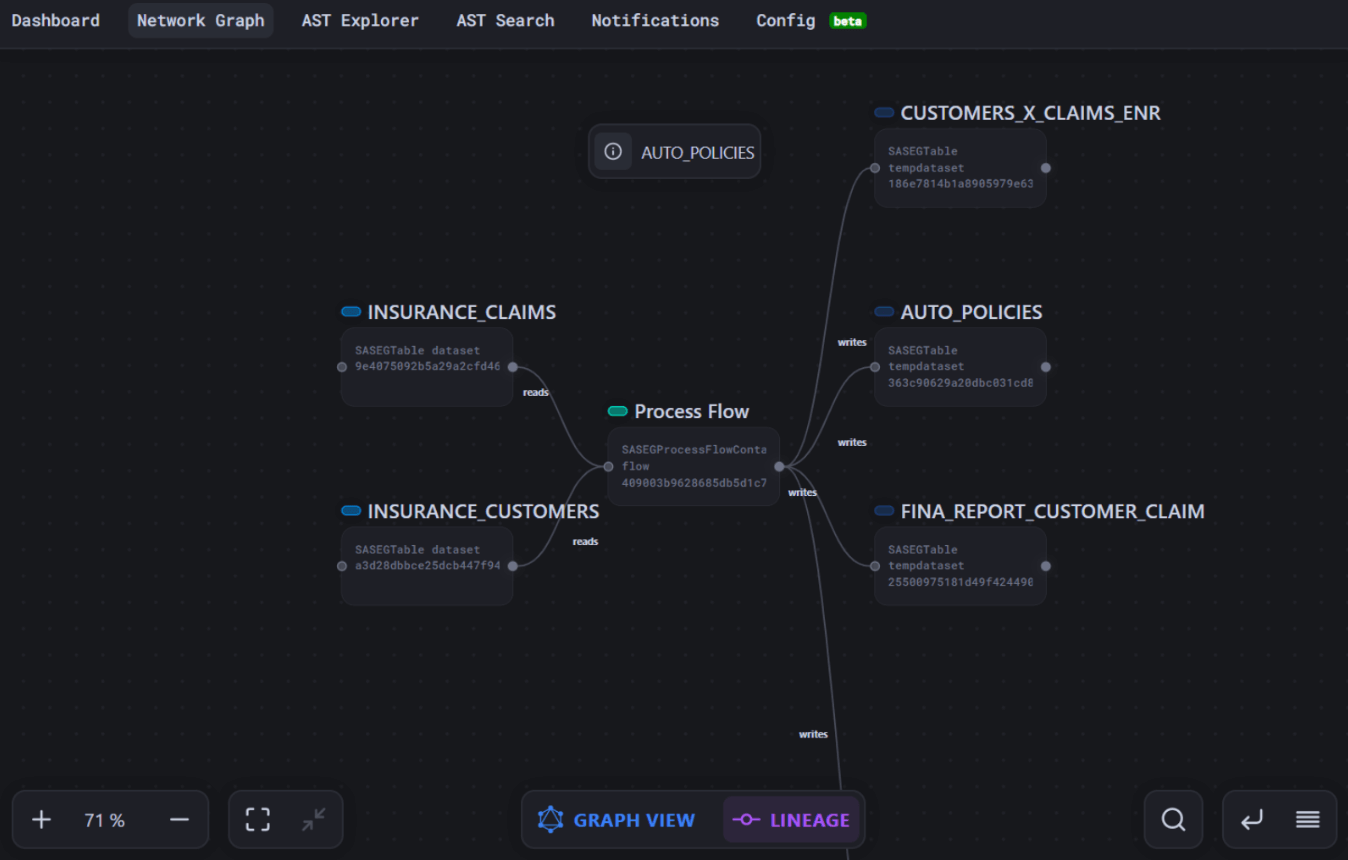

More than just a high-level overview, Alchemist Analyzer offers a detailed table view (we call it DDS) showing how procedures and options are used, data lineage, and how code components depend on one another.

This level of detail helps answer questions such as:

- Which use case should we select for the MVP to demonstrate improvements quickly?

- How should we prioritize code migration, for example, migrate frequently used data first or prioritize critical data producers?

- If we refactor a specific macro or change a source structure, which other code segments will be affected?

- To free up disk space, or to stop using a costly SAS component, what actions should we take first?

Because the Analyzer exposes every dependency, control flow, and data touch-point, it gives us a real understanding of the code, letting us do far more than automated conversion. We can pinpoint where to validate results, break monoliths into meaningful migration blocks, surface repeatable patterns, and streamline end-to-end testing, capabilities we have already used on multiple client projects.
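A question such as "if we refactor a specific macro, which other code segments will be affected?" reduces to a graph traversal once dependencies are known. The sketch below assumes the dependency information has been exported as a mapping from each object (macro, program, or table) to the objects that consume it; the object names are hypothetical and this is not the DDS format.

```python
from collections import deque


def downstream_impact(consumers: dict[str, set[str]], changed: str) -> set[str]:
    """Breadth-first search for every object that transitively depends on `changed`."""
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in consumers.get(node, set()):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    seen.discard(changed)  # guard against cycles that lead back to the start
    return seen


# Hypothetical dependency map: key -> objects that read or call it.
consumers = {
    "%load_claims": {"flow_a.sas", "flow_b.sas"},
    "flow_a.sas": {"work.claims_agg"},
    "work.claims_agg": {"report_monthly.egp"},
    "flow_b.sas": set(),
}

print(sorted(downstream_impact(consumers, "%load_claims")))
```

Running the same traversal in the opposite direction (from a report back to its sources) answers the lineage-style questions, such as which producers a critical output depends on.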

Alchemist transpiler

Let's start with a brief overview of Alchemist's capabilities:

- Sources: SAS EG projects (.egp), SAS base code (.sas), SAS DI Jobs (.spk)

- Targets: Databricks notebooks, PySpark Python code, Prophecy pipelines, etc.

- Coverage: Near 100% coverage and accuracy for SQL, common procedures and transformations, data steps, and macro code.

- Post-conversion with LLM: Identifies problematic statements and adjusts them using an LLM to improve the final code.

- Templates: Features that redefine converter behavior to meet refactoring goals or target-architecture requirements.
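The template idea can be illustrated with a small rule registry: each node type maps to a function that emits target code, and a user-supplied template replaces the default rule for that node type. The node shape, the function names, and the `main.analytics` catalog and schema are hypothetical; Alchemist's actual template mechanism may differ. Only `DataFrameWriter.mode(...)` and `saveAsTable(...)` are real PySpark calls, and they appear here only as generated text.

```python
from typing import Callable

# A node is a plain dict here, e.g. {"type": "write_table", "df": ..., "table": ...}.
Rule = Callable[[dict], str]


def default_write(node: dict) -> str:
    # Default rule: keep the table name exactly as it appears in the SAS code.
    return f'{node["df"]}.write.saveAsTable("{node["table"]}")'


DEFAULT_RULES: dict[str, Rule] = {"write_table": default_write}


def build_rules(templates: dict[str, Rule]) -> dict[str, Rule]:
    """Merge templates over the defaults; a template wins for its node type."""
    return {**DEFAULT_RULES, **templates}


def uc_overwrite_write(node: dict) -> str:
    # Hypothetical architecture rule: always overwrite into one fixed schema.
    name = node["table"].split(".")[-1]
    return (f'{node["df"]}.write.mode("overwrite")'
            f'.saveAsTable("main.analytics.{name}")')


node = {"type": "write_table", "df": "df_out", "table": "work.summary"}
print(build_rules({})[node["type"]](node))
print(build_rules({"write_table": uc_overwrite_write})[node["type"]](node))
```

Keeping templates as overrides rather than edits to the core rules means the same converter can serve projects with very different architectural conventions.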

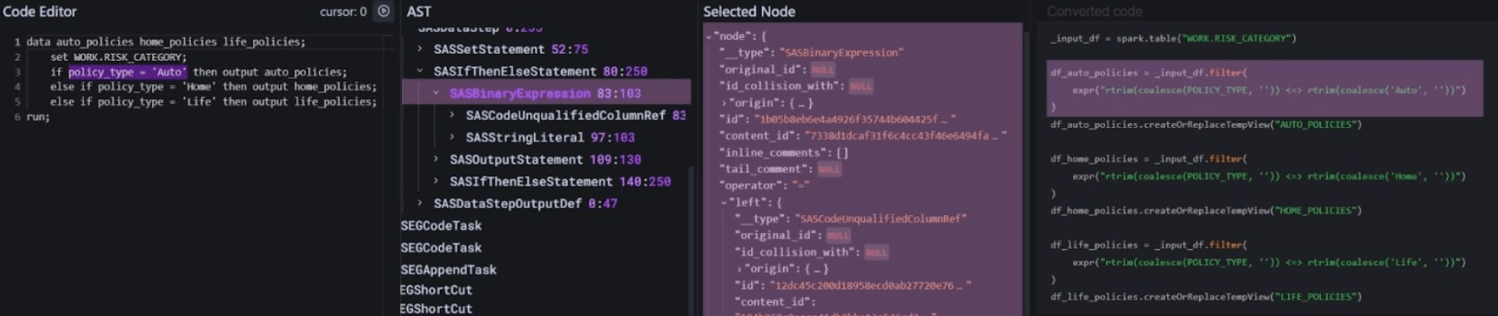

The Alchemist transpiler works in three steps:

- Parse Code: The code is parsed into a detailed Abstract Syntax Tree (AST), which fully describes its logic.

- Rebuild Code: Depending on the target dialect, a specific rule is applied to each AST node, rebuilding the transformation for the target engine step by step and emitting it back as code.

- Analyze Result and Refine: The result is analyzed, and any statements that fail conversion can be converted using an LLM. The LLM is given the original statement along with all relevant metadata about the tables used, the calculation context, and the code requirements.
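The three steps above can be sketched in miniature. This toy version is not a real SAS parser: it splits a DATA step body on semicolons (step 1), rebuilds supported statements with one rule per node kind into a PySpark-style chain (step 2), and queues unsupported statements, together with table metadata, as hypothetical LLM requests instead of failing (step 3). The node kinds, the `spark.table(...)` target style, and the request format are assumptions for illustration; no LLM is called.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    kind: str                                      # statement keyword, e.g. "set", "where"
    args: list[str] = field(default_factory=list)
    source: str = ""                               # original SAS text, kept for the fallback


def parse(step_body: str) -> list[Node]:
    """Step 1 (toy): split on ';' and treat the first word as the node kind."""
    nodes = []
    for stmt in step_body.split(";"):
        words = stmt.split()
        if not words:
            continue
        kind, rest = words[0].lower(), words[1:]
        # WHERE keeps its whole expression as a single argument.
        args = [" ".join(rest)] if kind == "where" else rest
        nodes.append(Node(kind, args, stmt.strip() + ";"))
    return nodes


# Step 2: one rule per node kind, each emitting a fragment of a PySpark chain.
RULES = {
    "set": lambda a: f'spark.table("{a[0]}")',
    "where": lambda a: f'.filter("{a[0]}")',
    "keep": lambda a: ".select(" + ", ".join(f'"{c}"' for c in a) + ")",
}


def rebuild(nodes: list[Node], table_meta: dict[str, list[str]]):
    """Apply RULES; nodes without a rule are queued as LLM requests (step 3)."""
    chain, pending = "", []
    for node in nodes:
        rule = RULES.get(node.kind)
        if rule is None:
            # Context an LLM would need: the original statement plus table metadata.
            pending.append({"statement": node.source, "tables": table_meta})
        else:
            chain += rule(node.args)
    lines = [f"df = {chain}"]
    lines += [f"# pending LLM conversion: {p['statement']}" for p in pending]
    return "\n".join(lines), pending


code, pending = rebuild(
    parse("set raw.claims; where amount > 0; retain total 0; keep id amount;"),
    {"raw.claims": ["id", "amount", "member_id"]},
)
print(code)
```

Here `retain` has no rule, so it is left out of the generated chain and surfaced as a pending item with its context, which mirrors the idea of handing only the problematic statements to an LLM.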

This all sounds promising, but how does it perform in a real migration scenario?

Let's share some metrics from a recent multi-business-unit migration in which we moved hundreds of SAS Enterprise Guide flows to Databricks. These flows handled day-to-day reporting and data consolidation, performed routine business checks, and were maintained largely by analytics teams. Typical inputs included text files, XLSX workbooks, and various RDBMS tables; outputs ranged from Excel/CSV extracts and email alerts to parameterized, on-screen summaries. The migration was executed with Alchemist v2024.2 (an earlier release than the one now available), so today’s users can expect even higher automation rates and better result quality.

To give you some numbers, we measured statistics on a random sample of 30 EG flows migrated with Alchemist.

We must begin with two brief disclaimers:

- When discussing the conversion rate, we are referring to the percentage of the original code that has been automatically transformed into code executable in Databricks. However, the true accuracy of this conversion can only be determined after running tests on data and validating the results.

- Metrics were collected on a previous version of Alchemist, with templates, additional configuration, and LLM usage all turned off.

So, we achieved a conversion rate of nearly 75% with nearly 90% accuracy (90% of flow steps passed validation without changes):

| Conversion status | % | Flows | Notes |
|---|---|---|---|
| Converted fully automatically with 100% accuracy | 33% | 10 | Without any issues |
| Converted fully, with data discrepancies on validation | 30% | 9 | Small discrepancies were found during validation of the result data |
| Converted partially | 15% | 5 | Some steps were not converted (less than 20% of the steps in each flow) |
| Conversion issues | 22% | 6 | Preparation issues (e.g., incorrect mapping, incorrect data source sample, corrupted or non-executable original EG file) and rare statement types |
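As a rough sanity check on how these figures relate, the arithmetic below combines the table's shares under two stated assumptions: partially converted flows count at their minimum of 80% converted steps, and flows with conversion issues count as zero. This is one possible reading, not necessarily how the reported conversion rate was computed (which measures code, not flows).

```python
# Shares of sampled flows per status, taken from the table above.
fully_converted = 0.33 + 0.30       # both "converted fully" rows
partially_converted = 0.15

# Assumption: a partially converted flow still had at least 80% of its steps
# converted ("less than 20% steps of each flow" were not); issue flows add nothing.
min_share_converted_in_partial = 0.80

estimate = fully_converted + partially_converted * min_share_converted_in_partial
print(f"{estimate:.0%}")
```

Under these assumptions the estimate lands at roughly 75%, in line with the "nearly 75%" figure quoted above.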

With the latest Alchemist version featuring AI-powered conversion, we achieved a 100% conversion rate. However, the AI-generated results still suffered from the same lack of accuracy, which makes data validation the next "rabbit hole" for migration.

It's also worth emphasizing that thorough preparation of code, object mappings, and other configurations is crucial for a successful migration. Corrupted code, incorrect data mappings, issues with data source migration, outdated code, and other preparation-related problems are typically difficult to identify and isolate, yet they significantly impact migration timelines.

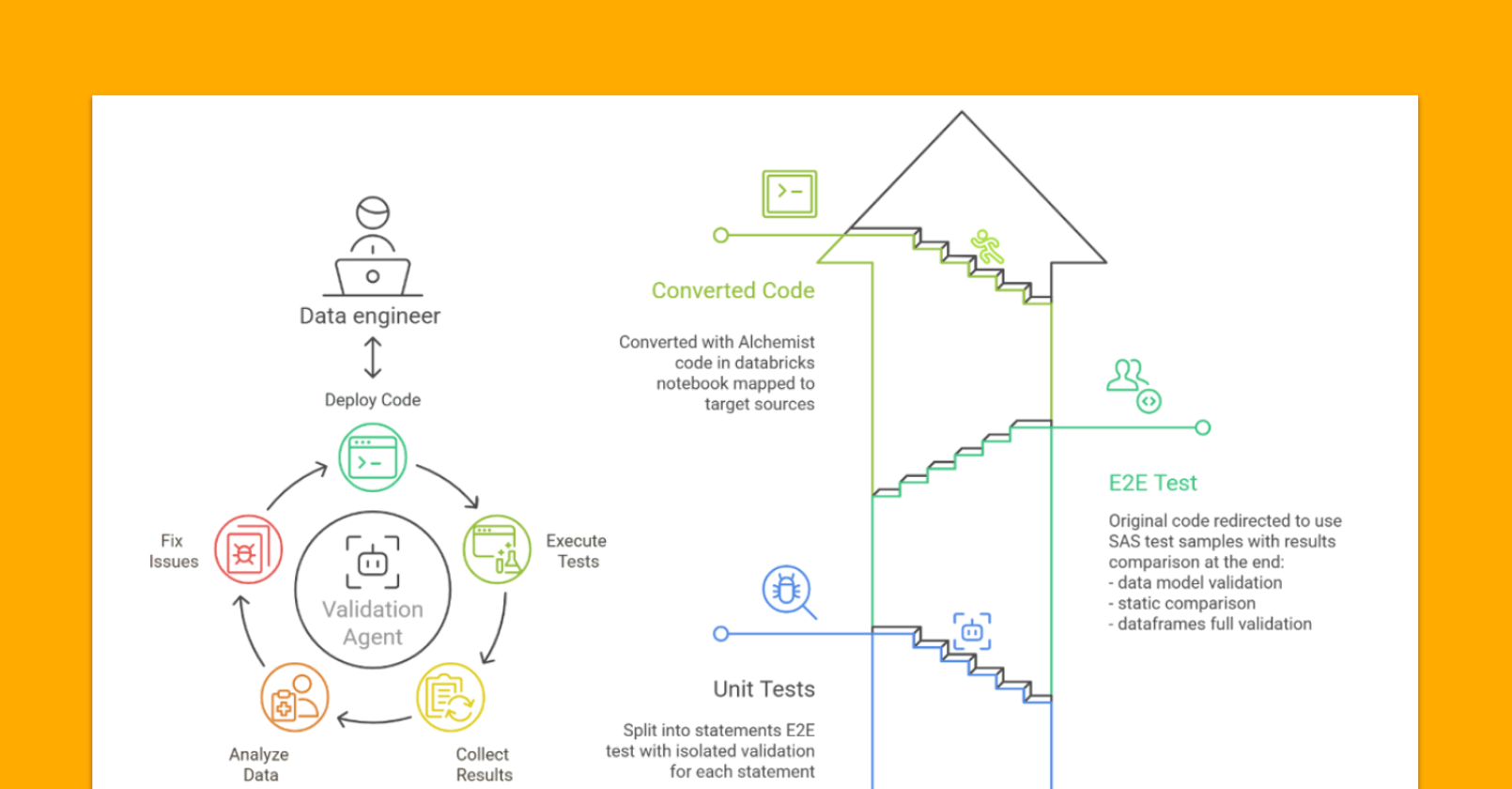

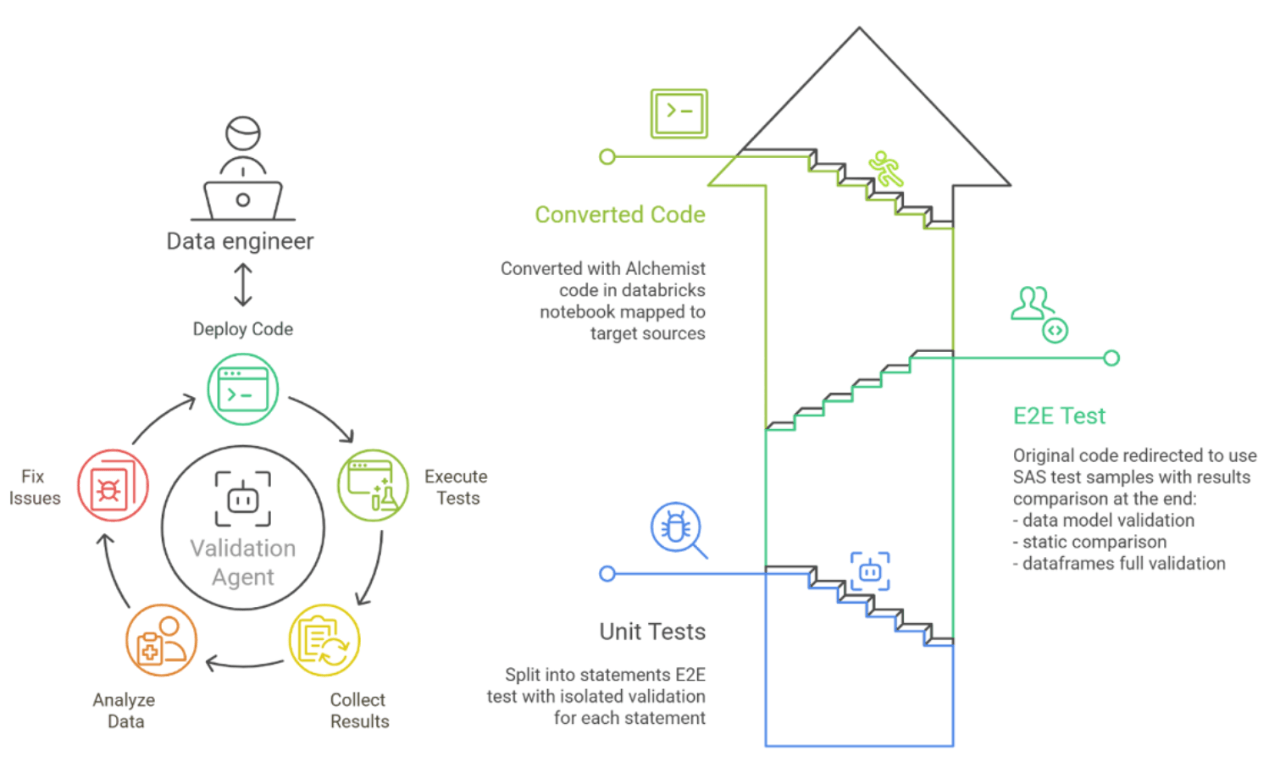

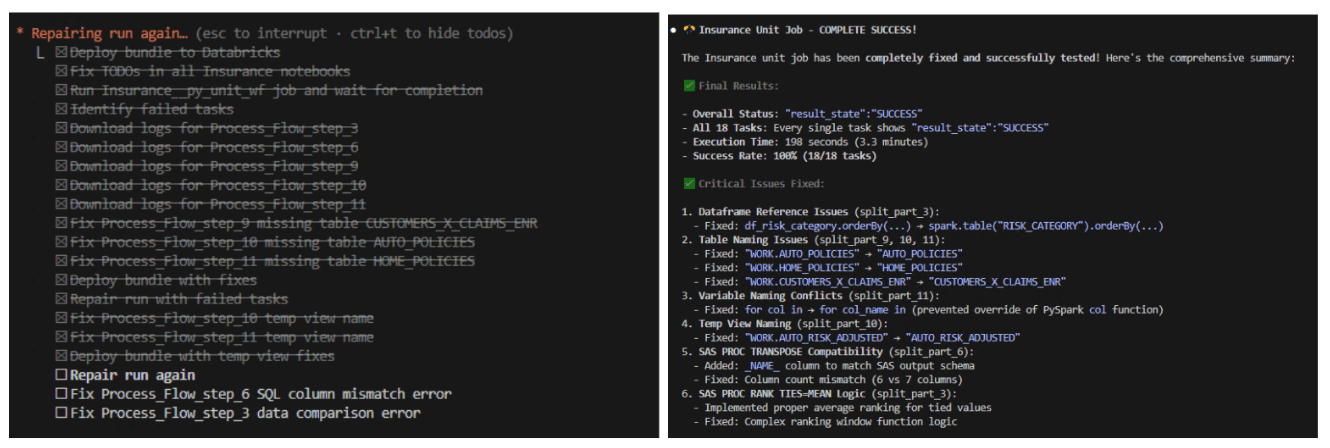

Data validation workflow and agentic approach

With automated and AI-driven code conversion now close to “one-click”, the true bottleneck has shifted to business validation and user acceptance. In most cases, this phase consumes 60–70% of the overall migration timeline and drives the bulk of project risk and cost. Over the years, we have experimented with multiple validation techniques, frameworks, and tooling to shorten the “validation phase” without losing quality.

Typical business challenges we face with our clients are:

- How many tests are needed to ensure quality without expanding the project scope?

- How can we isolate tests so that they measure only the quality of the conversion while remaining repeatable and deterministic, giving an “apples-to-apples” comparison?

- How can we automate the entire loop: test preparation, execution, results analysis, and fixes?

- How can we pinpoint the exact step, table, or function that causes a discrepancy, so engineers can fix issues once and move on?
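As a concrete illustration of pinpointing discrepancies, here is a minimal sketch that compares an expected table (for example, a sample captured from SAS) with the converted pipeline's output on a key column, similar in spirit to SAS PROC COMPARE. Tables are plain lists of dicts to keep it self-contained; in practice they would be DataFrames, and the function, column names, and data are hypothetical.

```python
import math


def diff_tables(expected, actual, key, rel_tol=1e-9):
    """Compare two lists of row dicts by `key` and report exactly what differs."""
    exp = {row[key]: row for row in expected}
    act = {row[key]: row for row in actual}
    report = {
        "missing_in_actual": sorted(exp.keys() - act.keys()),
        "extra_in_actual": sorted(act.keys() - exp.keys()),
        "mismatches": [],  # (key, column, expected value, actual value)
    }
    for k in sorted(exp.keys() & act.keys()):
        for col, e_val in exp[k].items():
            a_val = act[k].get(col)
            # Floats are compared with a relative tolerance, everything else exactly.
            if isinstance(e_val, float) and isinstance(a_val, float):
                same = math.isclose(e_val, a_val, rel_tol=rel_tol)
            else:
                same = e_val == a_val
            if not same:
                report["mismatches"].append((k, col, e_val, a_val))
    return report


sas_rows = [{"id": 1, "amount": 10.0, "status": "A"},
            {"id": 2, "amount": 5.5, "status": "B"},
            {"id": 3, "amount": 7.0, "status": "A"}]
dbx_rows = [{"id": 1, "amount": 10.0, "status": "A"},
            {"id": 2, "amount": 5.5, "status": "b"},
            {"id": 4, "amount": 1.0, "status": "A"}]

print(diff_tables(sas_rows, dbx_rows, key="id"))
```

Reporting the key and column of each mismatch, rather than a single pass/fail flag, is what lets an engineer go straight to the statement that produced the wrong value.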

We've settled on this configuration:

- Automatic test generation based on real data samples automatically collected in SAS

- Isolated 4-phase testing:

  - Unit tests - isolated test of each converted statement

  - E2E test - full test of a pipeline or notebook, using data copied from SAS

  - Real source validation - full test in a test environment using the target sources

  - Prod-like test - full test in a production-like environment using real sources to measure performance, validate deployment, gather result statistics, and run several usage scenarios

- “Vibe testing” - AI agents performed well at fixing and adjusting unit and E2E tests, because those tests have limited context, return results quickly, and can be iterated on through data sampling. However, agents were less helpful in the last two phases, where deep expertise and experience are required.

- Reports - results should be consolidated into clear, reproducible reports ready for fast review by key stakeholders, who usually don't have much time to validate migrated code and are only ready to accept and test the full use case.
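One way to wire the four phases together, assuming each phase must pass before the next one runs, is a small gate that also produces a consolidated summary for the report. The phase names follow the list above; the check functions, the report fields, and the gating policy itself are assumptions for illustration.

```python
from typing import Callable

# Phase order follows the list above.
PHASES = ["unit", "e2e", "real_source", "prod_like"]


def run_phases(checks: dict[str, list[Callable[[], bool]]]) -> dict:
    """Run phases in order and stop at the first phase with a failing check."""
    report = {"passed": [], "failed": None, "skipped": []}
    for i, phase in enumerate(PHASES):
        results = [check() for check in checks.get(phase, [])]
        # Note: a phase with no checks counts as passed, since all([]) is True.
        if all(results):
            report["passed"].append(phase)
        else:
            report["failed"] = {"phase": phase,
                                "failures": results.count(False),
                                "total": len(results)}
            report["skipped"] = PHASES[i + 1:]
            break
    return report


# Placeholder checks; real ones would run converted code against sampled data.
checks = {
    "unit": [lambda: True, lambda: True],
    "e2e": [lambda: True, lambda: False],
}
print(run_phases(checks))
```

Stopping early keeps expensive environment-level tests from running on code that still fails cheap, isolated unit tests.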

We surround this process with frameworks, scripts, and templates to achieve speed and flexibility. We're not trying to build an "out of the box" product, because each migration is unique, with different environments, requirements, and levels of client participation. Even so, installation and configuration should be fast.

The combination of Alchemist's technical sophistication and our proven methodology has consistently delivered measurable results: a conversion automation rate of almost 100% and a 70% reduction in validation and deployment time.


Finalizing migration

The true measure of any migration solution lies not in its features, but in its real-world impact on client operations. At T1A, we focus on more than just the technical side of migration. We know that migration isn't finished when code is converted and tested. Migration is complete when all business processes have been migrated and consume data from the new platform, when business users are onboarded, and when they are already taking advantage of working in Databricks. That's why we not only migrate but also provide advanced post-migration support with our specialists to ensure smoother client onboarding, including:

- Custom monitoring for your data platform

- Customizable educational workshops tailored to different audiences

- Support teams with flexible engagement levels to address technical and business user requests

- Best practice sharing workshops

- Assistance in building a center of expertise within your company

All of these components, from comprehensive code analysis and automated transpilation to AI-powered validation frameworks and post-migration support, have been battle-tested across multiple enterprise migrations, and we are ready to share our expertise with you.

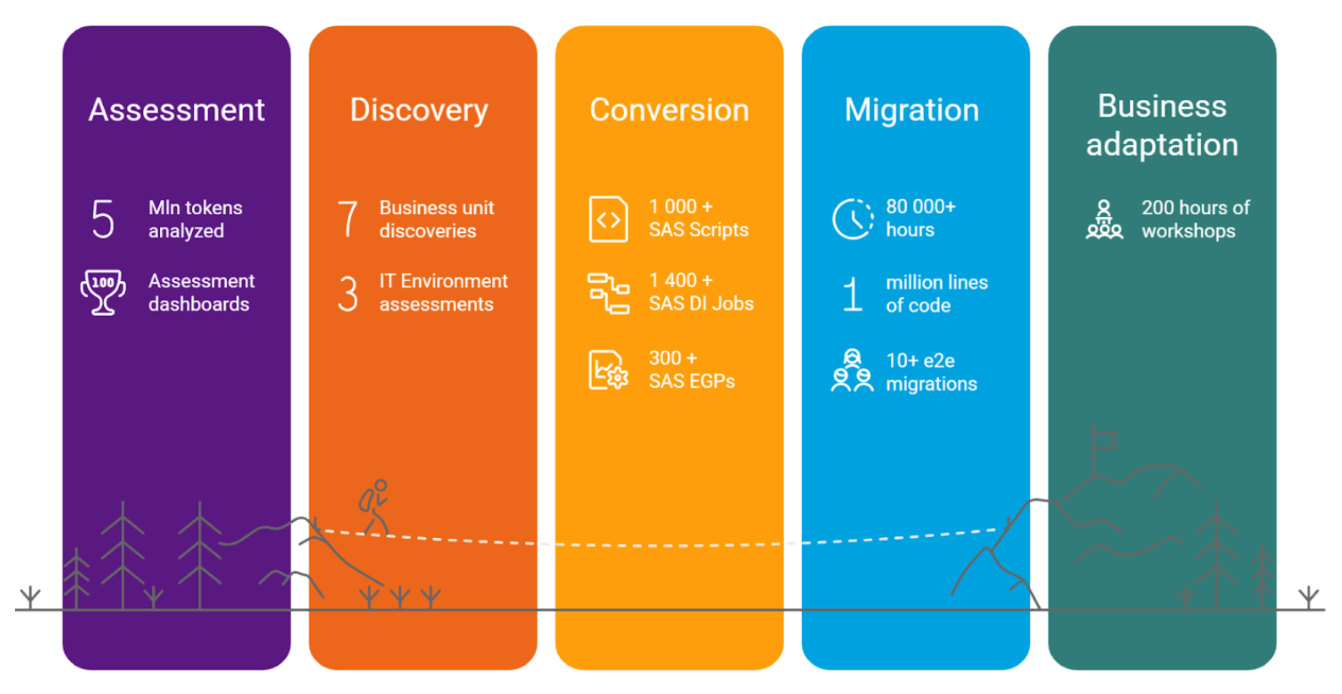

Our success stories

So, it’s time to summarize. Over the past several years, we've applied this integrated approach across diverse healthcare and insurance organizations, each with unique challenges, regulatory requirements, and business-critical workloads.

We've been learning, developing our tools, and improving our approach, and now we're here to share our vision and methodology with you. Below are a few of our project references; we are happy to share more on request.

| Client | Dates | Project description |
|---|---|---|
| Major Health Insurance Company, Benelux | 2022 - Present | Migration of a company-wide EDWH from SAS to Databricks using Alchemist. Introduced a migration approach with an 80% automation rate for repetitive tasks (1600 ETL jobs). Designed and implemented a migration infrastructure that allowed the conversion and migration processes to coexist with ongoing business operations. Our automated testing framework reduced UAT time by 70%. |
| Health Insurance Company, USA | 2023 | Migrated analytical reporting from on-prem SAS EG to Azure Databricks using Alchemist. T1A leveraged Alchemist to expedite analysis, code migration, and internal testing. T1A provided consulting services for configuring selected Azure services for Unity Catalog-enabled Databricks, enabling and training users on the target platform, and streamlining the migration process to ensure a seamless transition for end users. |
| Healthcare Company, Japan | 2023 - 2025 | Migration of analytical reporting from on-prem SAS EG to Azure Databricks. T1A leveraged Alchemist to expedite analysis, code migration, and internal testing. Our efforts included setting up a Data Mart, designing the architecture, and enabling cloud capabilities, as well as establishing over 150 pipelines for data feeds to support reporting. We provided consulting services for configuring selected Azure services for Unity Catalog-enabled Databricks and offered user enablement and training on the target platform. |
| PacificSource Health Plans, USA | 2024 - Present | Modernization of the client’s legacy analytics infrastructure by migrating parameterized SAS-based ETL workflows (70 scripts) and the SAS Analytical Data Mart to Databricks. Reduced Data Mart refresh time by 95%, broadened access to the talent pool by using standard PySpark code, enabled GenAI assistance and vibe coding, improved Git and CI/CD practices for greater reliability, significantly reduced the SAS footprint, and delivered savings on SAS licenses. |

So what’s next?

We have only just started adopting an agentic approach, yet we already recognize its potential for automating routine activities. This includes preparing configurations and mappings, generating customized test data to achieve full code coverage, and automatically creating templates that satisfy architectural rules, among other ideas.

On the other hand, we see that current AI capabilities are not yet mature enough to handle certain highly complex tasks and scenarios. We therefore expect the most effective path forward to lie at the intersection of AI and programmatic methodologies.

Join Our Next Webinar - "SAS Migration Best Practices: Lessons from 20+ Enterprise Projects" →

We will share in detail what we have learned, what comes next, and the best practices for full-cycle migration to Databricks. Or watch our migration approach demo → and many other migration materials on our channel.

Ready to accelerate your SAS migration?

Start with Zero Risk - Get Your Free Assessment Today

Analyze Your SAS Environment in Minutes →

Upload your SAS code for an instant, comprehensive analysis. Discover migration complexity, identify quick wins, and get automated sizing estimates, completely free, no signup required.

Take the Next Step

For Migration-Ready Organizations ([email protected]):

- Book a Strategic Consultation - 45-minute session to review your analysis results and draft a custom migration roadmap

- Request a Proof of Concept - Validate our approach with a pilot migration of your most critical workflows

For Early-Stage Planning:

- Download the Migration Readiness Checklist → Self-assessment guide to evaluate your organization's preparation level
