Databricks Spatial Joins Now 17x Faster Out-of-the-Box

Native Spatial SQL now delivers much faster spatial joins with no tuning or code changes

Summary

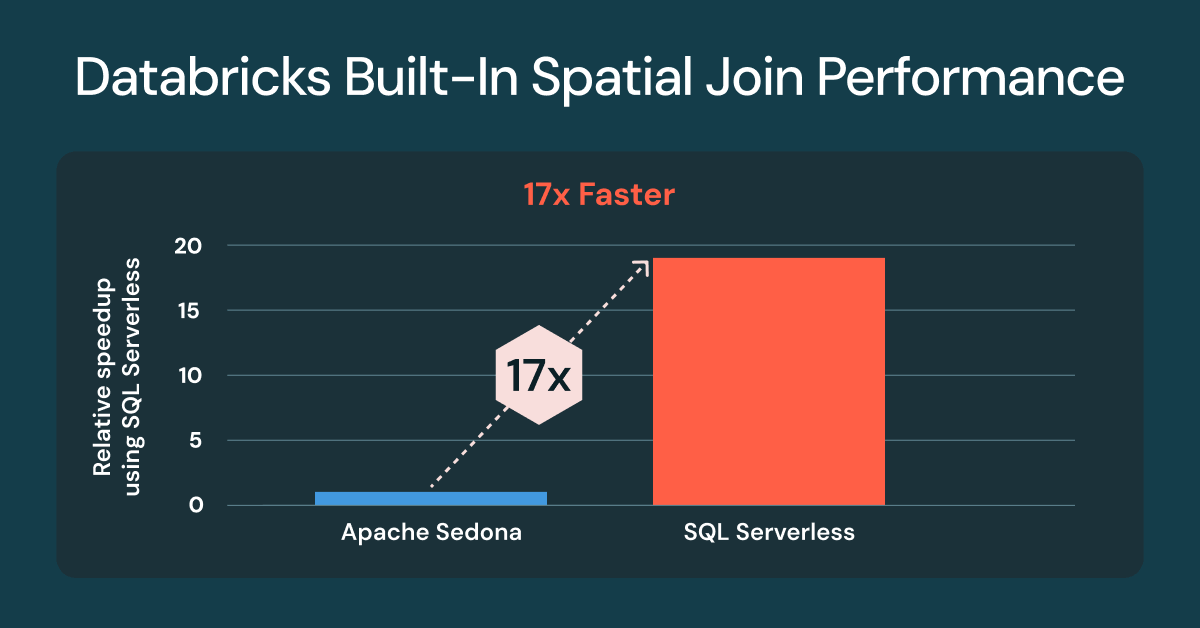

- Spatial joins on Databricks are now up to 17x faster out of the box

- Benchmarks use customer-inspired workloads and Overture Maps data

- GEOMETRY types deliver the best performance

Spatial data processing and analysis are business critical for geospatial workloads on Databricks. Many teams rely on external libraries or Spark extensions, such as Apache Sedona, GeoPandas, and the Databricks Labs project Mosaic, to handle these workloads. While customers have been successful, these approaches add operational overhead and often require tuning to reach acceptable performance.

Earlier this year, Databricks released support for Spatial SQL, which now includes 90 spatial functions and support for storing data in GEOMETRY or GEOGRAPHY columns. Databricks built-in Spatial SQL is the best approach for storing and processing vector data because it addresses the primary challenges of using add-on libraries: it is highly stable, it performs fast, and, with Databricks SQL Serverless, it removes the need to manage classic clusters, library compatibility, and runtime versions.

One of the most common spatial processing tasks is to determine whether two geometries overlap, whether one geometry contains the other, or how close they are to each other. This analysis requires spatial joins, for which great out-of-the-box performance is essential to accelerate time to spatial insight.

Spatial joins up to 17x faster with Databricks SQL Serverless

We are excited to announce that every customer using built-in Spatial SQL for spatial joins will see up to 17x faster performance compared to classic clusters with Apache Sedona [1] installed. The performance improvements are available to all customers using Databricks SQL Serverless, classic clusters with Databricks Runtime (DBR) 17.3, and Lakeflow Spark Declarative Pipelines (Preview channel). If you’re already using Databricks built-in spatial predicates, like ST_Intersects or ST_Contains, no code change is required.

[1] Apache Sedona 1.7 was not compatible with DBR 17.x at the time of the benchmarks, so DBR 16.4 was used.

Running spatial joins presents unique challenges, with performance influenced by multiple factors. Geospatial datasets are often highly skewed, with dense urban regions and sparse rural areas, and they vary widely in geometric complexity: compare the intricate Norwegian coastline with Colorado’s simple borders. Even after efficient file pruning, the remaining join candidates still demand compute-intensive geometric operations. This is where Databricks shines.

The spatial join improvement comes from using R-tree indexing, optimized spatial joins in Photon, and intelligent range join optimization, all applied automatically. You write standard SQL with spatial functions, and the engine handles the complexity.
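To make the benefit of spatial indexing concrete, here is a minimal Python sketch of the "filter" half of a filter-and-refine join. It is an illustration only and not how Photon or Databricks implements R-tree indexing: it swaps in a much simpler uniform grid index, and the names `build_grid_index`, `candidates`, and `cell_size` are hypothetical. The idea it shows is the same, though: index one side by bounding box so that each geometry on the other side is compared against a handful of candidates instead of every row.

```python
from collections import defaultdict
from math import floor


def bbox(polygon):
    """Return (min_x, min_y, max_x, max_y) for a list of (x, y) vertices."""
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return min(xs), min(ys), max(xs), max(ys)


def build_grid_index(polygons, cell_size):
    """Map each grid cell to the ids of polygons whose bounding box touches it.

    Assumption: a uniform grid stands in for the R-tree named in the text.
    """
    index = defaultdict(list)
    for poly_id, polygon in polygons.items():
        min_x, min_y, max_x, max_y = bbox(polygon)
        for cx in range(floor(min_x / cell_size), floor(max_x / cell_size) + 1):
            for cy in range(floor(min_y / cell_size), floor(max_y / cell_size) + 1):
                index[(cx, cy)].append(poly_id)
    return index


def candidates(index, point, cell_size):
    """Return ids of polygons that might contain the point (cheap filter step)."""
    x, y = point
    return index.get((floor(x / cell_size), floor(y / cell_size)), [])


# Two small squares far apart; a point only needs to be checked against
# the polygons registered in its own grid cell.
polygons = {
    "a": [(0, 0), (1, 0), (1, 1), (0, 1)],
    "b": [(10, 10), (12, 10), (12, 12), (10, 12)],
}
index = build_grid_index(polygons, cell_size=5.0)
near_a = candidates(index, (0.5, 0.5), cell_size=5.0)
near_b = candidates(index, (11, 11), cell_size=5.0)
nowhere = candidates(index, (30, 30), cell_size=5.0)
```

The exact geometric predicate then only has to run on the candidates that survive this filter, which is where most of the savings come from on large, skewed datasets.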

The business importance of spatial joins

A spatial join is similar to a database join, but instead of matching IDs, it uses a spatial predicate to match data based on location. Spatial predicates evaluate the relative physical relationship, such as overlap, containment, or proximity, to connect two datasets. Spatial joins are a powerful tool for spatial aggregation, helping analysts uncover trends, patterns, and location-based insights across different places, from shopping centers and farms to cities and the entire planet.
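As a rough illustration of what "joining on a spatial predicate" means, the following Python sketch pairs polygons with the points that fall inside them. It is a conceptual, brute-force example with made-up data, not Databricks code: `point_in_polygon` and `spatial_join` are hypothetical helper names, the containment test is a standard ray-casting check, and points lying exactly on a polygon boundary are not handled in any way that is meant to match a specific ST_ predicate.

```python
def point_in_polygon(point, polygon):
    """Ray casting: toggle 'inside' each time a ray going right crosses an edge."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def spatial_join(polygons, points):
    """Return (polygon_id, point_id) pairs where the polygon contains the point."""
    return [
        (poly_id, point_id)
        for poly_id, polygon in polygons.items()
        for point_id, point in points.items()
        if point_in_polygon(point, polygon)
    ]


# Hypothetical data: two store footprints and three visit locations.
stores = {
    "store_1": [(0, 0), (4, 0), (4, 4), (0, 4)],
    "store_2": [(10, 0), (14, 0), (14, 4), (10, 4)],
}
visits = {"v1": (1, 1), "v2": (12, 2), "v3": (7, 7)}

matches = spatial_join(stores, visits)
print(matches)
```

The nested loop compares every polygon with every point, which is exactly the cost that makes large spatial joins expensive without indexing and pruning.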

Spatial joins answer business-critical questions across every industry. For example:

- Coastal authorities monitor vessel traffic within a port or nautical boundaries

- Retailers analyze vehicle traffic and visitation patterns across store locations

- Modern agriculture companies perform crop yield analysis and forecasting by combining weather, field, and seed data

- Public safety agencies and insurance companies identify which homes are at risk from flooding or fire

- Energy and utilities operations teams build service and infrastructure plans based on analysis of energy sources, residential and commercial land use, and existing assets

Spatial join benchmark prep

For the data, we selected four worldwide large-scale datasets from Overture Maps Foundation: Addresses, Buildings, Landuse, and Roads. You can test the queries yourself using the methods described below.

The datasets were initially downloaded as GeoParquet and prepared for the Sedona benchmark, with every dataset following the same pattern.

We also processed the data into Lakehouse tables, converting the parquet WKB into native GEOMETRY data types for Databricks benchmarking.
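For readers unfamiliar with WKB (well-known binary), the sketch below shows what the raw bytes of a 2D point look like and how they decode into coordinates. It is a stand-alone Python illustration using only the standard library, not the conversion path used in the benchmark; the helper names `encode_wkb_point` and `decode_wkb_point` are hypothetical, and only the plain Point type is handled.

```python
import struct

WKB_POINT = 1  # geometry type code for Point in the WKB encoding


def encode_wkb_point(x, y):
    """Encode a 2D point as little-endian WKB: byte order, type, x, y."""
    return struct.pack("<BIdd", 1, WKB_POINT, x, y)


def decode_wkb_point(wkb):
    """Decode a 2D WKB point into an (x, y) tuple."""
    # First byte is the byte-order flag: 1 = little-endian, 0 = big-endian.
    endian = "<" if wkb[0] == 1 else ">"
    (geom_type,) = struct.unpack_from(endian + "I", wkb, 1)
    if geom_type != WKB_POINT:
        raise ValueError(f"expected a WKB Point, got geometry type {geom_type}")
    # Two 8-byte doubles follow the 5-byte header.
    return struct.unpack_from(endian + "dd", wkb, 5)


raw = encode_wkb_point(-122.4, 37.8)
point = decode_wkb_point(raw)
```

Storing geometries in a native type means this parsing does not have to be repeated from raw bytes every time a query evaluates a spatial predicate.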

Comparison queries

The chart above is based on the same set of three queries, each tested against every compute option.

Query #1 - ST_Contains(buildings, addresses)

This query evaluates the 2.5B building polygons that contain the 450M address points (point-in-polygon join). The result is 200M+ matches. For Sedona, we reversed this to ST_Within(a.geom, b.geom) to support default left build-side optimization. On Databricks, there is no material difference between using ST_Contains or ST_Within.

Query #2 - ST_Covers(landuse, buildings)

This query evaluates the 1.3M worldwide `industrial` landuse polygons that cover the 2.5B building polygons. The result is 25M+ matches.

Query #3 - ST_Intersects(roads, landuse)

This query evaluates the 300M roads that intersect with the 10M worldwide `residential` landuse polygons. The result is 100M+ matches. For Sedona, we reversed this to ST_Intersects(l.geom, trans.geom) to support default left build-side optimization.

What’s next for Spatial SQL and native types

Databricks continues to add new spatial expressions based on customer requests. The following spatial functions have been added since Public Preview: ST_AsEWKB, ST_Dump, ST_ExteriorRing, ST_InteriorRingN, and ST_NumInteriorRings. Available now in DBR 18.0 Beta: ST_Azimuth, ST_Boundary, ST_ClosestPoint, support for ingesting EWKT (including two new expressions, ST_GeogFromEWKT and ST_GeomFromEWKT), and performance and robustness improvements for ST_IsValid, ST_MakeLine, and ST_MakePolygon.

Provide your feedback to the Product team

If you would like to share your requests for additional ST expressions or geospatial features, please fill out this short survey.

Update: Open sourcing geo types in Apache Spark™

The contribution of GEOMETRY and GEOGRAPHY data types to Apache Spark™ has made great progress and is on track to be committed to Spark 4.2 in 2026.

Try Spatial SQL out for free

Run your next spatial query on Databricks SQL today and see how fast your spatial joins can be. To learn more about Spatial SQL functions, see the SQL and PySpark documentation. For more information on Databricks SQL, check out the website, product tour, and Databricks Free Edition. If you want to migrate your existing warehouse to a high-performance, serverless data warehouse with a great user experience and lower total cost, Databricks SQL is the solution. Try it for free.