How FedEx built a scalable, enterprise-grade data platform with IT automation and Databricks Unity Catalog

Summary

• FedEx adopted Databricks and Unity Catalog to unify analytics, governance, and metadata at enterprise scale, enabling secure self-service access for 2,800+ users across 220+ automated workspaces and 1,300+ governed data assets.

• By standardizing architecture and automating infrastructure, metadata, and environment provisioning, FedEx reduced IT complexity, accelerated delivery, and scaled analytics reliably across a highly distributed global organization.

• Unity Catalog now serves as the foundation for secure, observable, and cost-efficient analytics, helping FedEx balance broad data democratization with strong governance while driving smarter, data-driven decisions across the business.

This blog is authored by Patrick Brown, Lead Product Manager, Data Platform, FedEx

In today’s fast-paced, data-driven world, organizations must harness the full potential of their data and technology to remain competitive. For us at FedEx, this meant reimagining how we manage, govern, and deliver data and analytics across the enterprise - not just for IT, but for the business. Our goal was ambitious: to make supply chains smarter by building a unified analytics platform that grows with our business and empowers decision-makers at every level.

By leveraging the Databricks Data Intelligence Platform and Unity Catalog, we’ve built a robust, enterprise-grade analytics foundation that supports:

- 2,800+ enterprise users empowered with self-service analytics

- 77,000+ queries executed across a unified platform

- 220+ Databricks workspaces provisioned through automation

- 1,300+ Unity Catalog tables available for secure, governed access

This transformation has enabled us to reduce IT complexity, accelerate delivery, and support a globally distributed network of business partners, including teams in logistics, sales, marketing, finance, pricing, and customer service. These users, many of whom are not technical, rely on insights generated by a federated team of engineers and data scientists to make informed, data-driven decisions that drive operational excellence and business growth.

Managing risk and unlocking value-driven analytics across the enterprise

As our business grew, so did the complexity of our data landscape, creating significant operational and technical hurdles. We faced four key challenges:

- Fragmented analytics ecosystem: With over 600 independently managed environments, our data landscape reflected the scale and diversity of our operations. However, this decentralized structure presented opportunities to improve cross-functional collaboration, streamline maintenance, and reduce complexity.

- Manual IT provisioning: Supporting thousands of analytics users with a lean IT team highlighted the need for scalable solutions. Manual setup processes, while effective initially, presented opportunities to accelerate time-to-insight and reduce operational risk through automation.

- Unlocking metadata and data discoverability: With thousands of valuable data assets across the enterprise, we identified opportunities to improve metadata consistency and enhance discoverability - ensuring that teams could more easily access and leverage the insights available to them.

- Balancing democratization with governance: As stewards of critical enterprise data, we needed to ensure secure, governed access while enabling broad, self-service analytics across the organization.

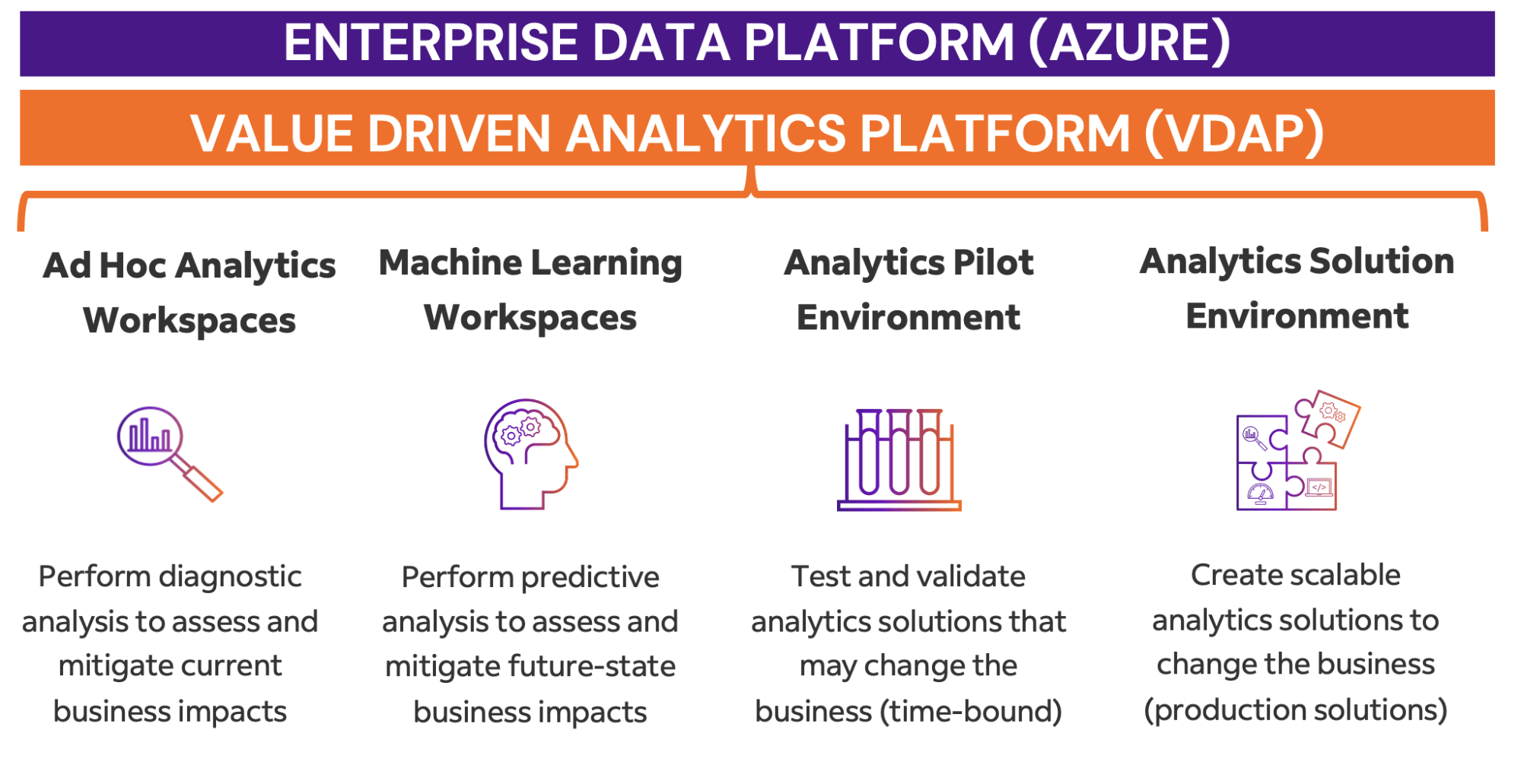

Our transformation at FedEx was guided by a clear vision: to operate at the intersection of physical and digital by combining our global network, talented people, and data-driven solutions. Central to this vision is the Value Driven Analytics Platform (VDAP) - a unified, cloud-native platform designed to abstract IT complexity and empower global analytics teams. VDAP enables us to support both ad hoc problem-solving and operationalized analytics solutions. The platform does this by providing a standardized, extensible foundation that enables our analytics teams to build, test, and deploy insights that drive operational excellence and innovation.

A platform-centric architecture design for modern analytics

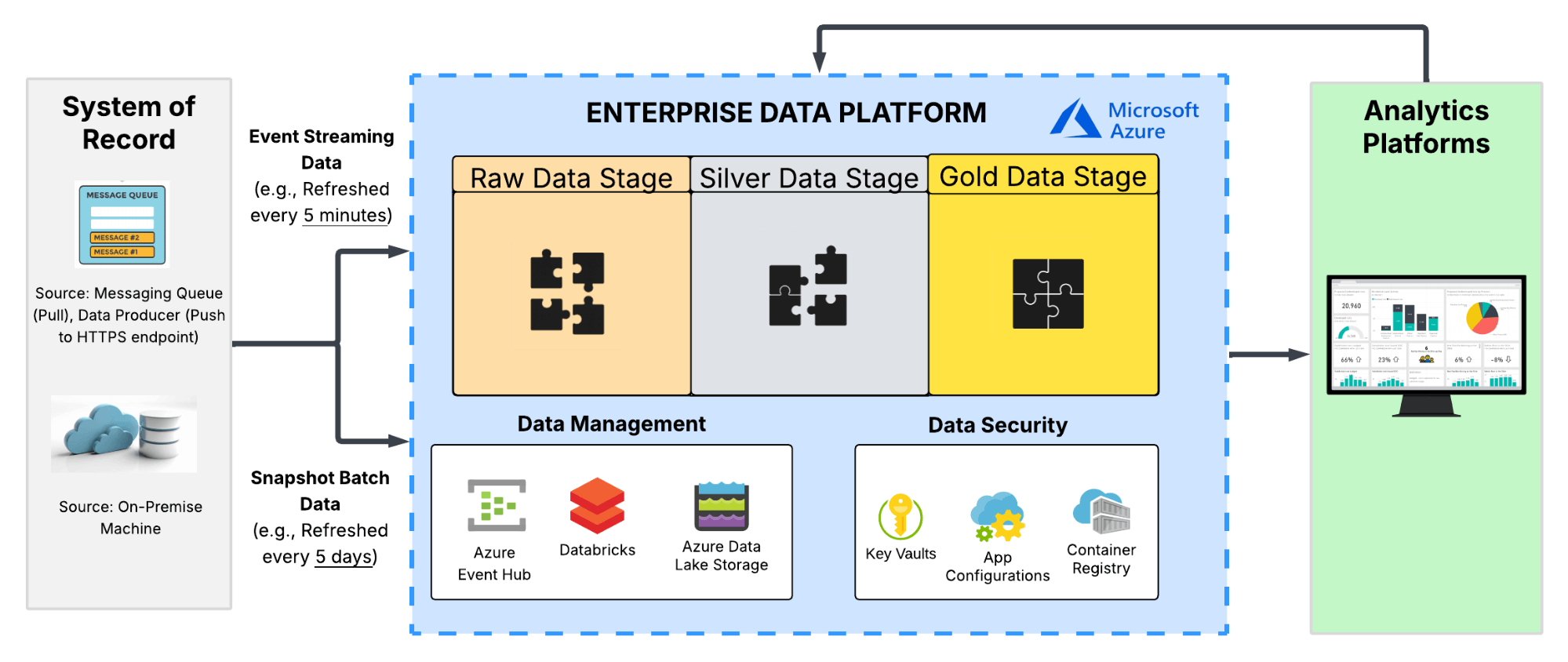

Our platform is built around two integrated layers: the Enterprise Data Platform and the Analytics Platform (VDAP).

The Enterprise Data Platform is a centralized, governed repository of trusted data, serving as the foundation for data ingestion, storage, and processing.

At its core is the Medallion Architecture, which defines how data is staged, standardized, and curated across three layers:

- Bronze: Raw, immutable data from source systems. The bronze data layer serves as the immutable system of record, preserving data lineage and enabling traceability for auditing and debugging purposes.

- Silver: Transformed, structured data for high-performance analytics. The silver data layer also supports semi-structured data (e.g., JSON), enabling high-performance analytics for custom applications that rely on flexible data formats.

- Gold: Business-ready data curated for diagnostic, predictive, and prescriptive use cases.
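As a rough illustration of how records might move through the three layers, here is a minimal sketch in plain Python. The record fields (`tracking_id`, `weight_kg`, `region`) and the function names are hypothetical, and in practice this logic would run as pipeline code over tables rather than on in-memory lists.

```python
from collections import defaultdict

# Bronze: hypothetical raw records, kept exactly as received from the source.
bronze = [
    {"tracking_id": "A1", "weight_kg": "2.5", "region": "emea"},
    {"tracking_id": "A2", "weight_kg": "bad", "region": "EMEA"},
    {"tracking_id": "A3", "weight_kg": "4.0", "region": "apac"},
]


def to_silver(rows):
    """Type and normalize raw rows; unparseable rows are skipped, bronze is untouched."""
    silver = []
    for row in rows:
        try:
            weight = float(row["weight_kg"])
        except ValueError:
            continue  # the raw row remains available in bronze for debugging
        silver.append(
            {
                "tracking_id": row["tracking_id"],
                "weight_kg": weight,
                "region": row["region"].upper(),
            }
        )
    return silver


def to_gold(rows):
    """Aggregate cleaned rows into a business-ready summary: total weight per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["weight_kg"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
```

Because bronze is never modified, a row rejected on the way to silver can still be traced back to its original form.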

The Analytics Platform (VDAP) provides self-service, purpose-built environments tailored to a broad range of use cases - from machine learning to operational dashboards.

The four VDAP products address specific analytics focus areas for the FedEx analytics user base. Within these purpose-driven analytics products, we use core Databricks services to support both technical users (e.g., SQL editor, Notebooks) and non-technical users (e.g., Genie, AI/BI dashboards), enabling seamless cross-functional collaboration.

Automating analytics infrastructure with Azure DevOps, Databricks Lakeflow Spark Declarative Pipelines and Unity Catalog

We adopted a configuration and pipeline-based approach to automate every aspect of our data and analytics infrastructure ecosystem. All IT and data governance processes are now controlled through configuration files and deployed via automated pipelines. This approach ensures:

- Standardization across teams and solutions

- Repeatability of deployments, reducing errors and inconsistencies

- Elastic scalability to support a growing number of users and analytics use cases

- Compliance with enterprise policies and governance standards

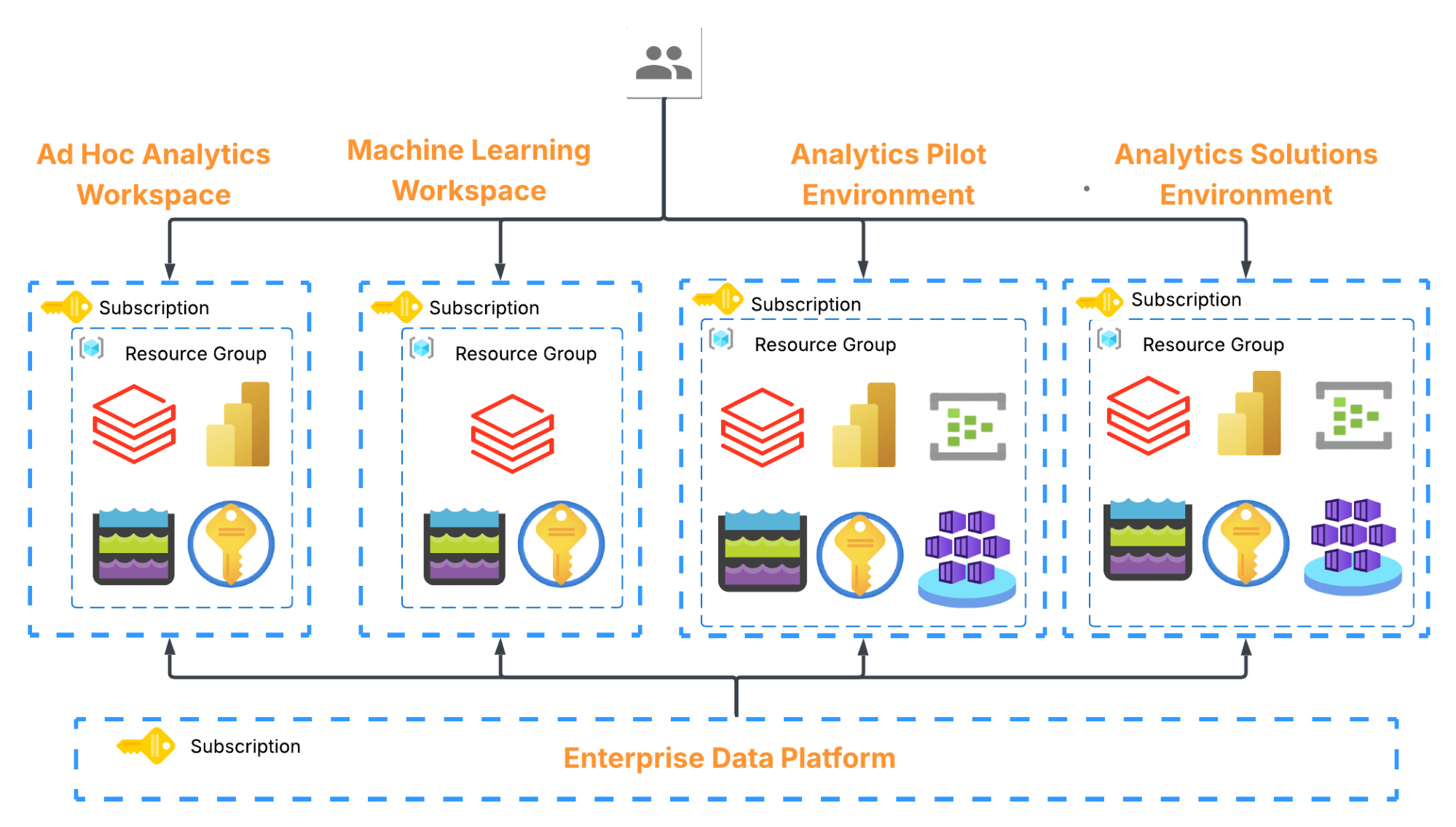

To support automation at scale, we developed three core configuration templates that standardize the deployment of resources across the IT lifecycle:

- Infrastructure Configuration Files: Define and provision cloud resources for data ingestion, processing, and storage in a consistent, repeatable manner.

- Metadata Configuration Files: Automate the exposure of metadata - such as table descriptions, data domains, and classifications - ensuring discoverability and governance compliance.

- Analytics Environment Templates: Enable rapid, standardized creation of purpose-driven analytics environments (e.g., for ML training or SQL tasks), including serverless compute, Unity Catalog installation, and security controls.
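To make the configuration-driven idea concrete, the following is a minimal sketch of how an analytics environment template could be validated and turned into an ordered provisioning plan. Everything here is an assumption for illustration: the `EnvironmentConfig` fields, the allowed purposes, and the step wording are hypothetical and do not describe the actual FedEx templates.

```python
from dataclasses import dataclass

# Hypothetical set of environment purposes a platform team might allow.
ALLOWED_PURPOSES = {"ml_training", "sql_analytics"}


@dataclass(frozen=True)
class EnvironmentConfig:
    """Hypothetical declarative description of one analytics environment."""
    name: str
    purpose: str
    serverless: bool = True
    reader_groups: tuple = ()


def validate(config):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    if config.purpose not in ALLOWED_PURPOSES:
        errors.append(f"unknown purpose: {config.purpose}")
    if not config.reader_groups:
        errors.append("at least one reader group is required")
    return errors


def plan(config):
    """Translate a valid config into the ordered steps a pipeline would run."""
    errors = validate(config)
    if errors:
        raise ValueError("; ".join(errors))
    compute = "serverless" if config.serverless else "classic"
    steps = [
        f"create workspace {config.name}",
        f"attach {compute} compute for {config.purpose}",
    ]
    steps += [f"grant read access to {group}" for group in config.reader_groups]
    return steps


example = EnvironmentConfig(
    name="pricing-ml", purpose="ml_training", reader_groups=("pricing-analysts",)
)
steps = plan(example)
```

Validating before planning means a malformed configuration file fails in review or in the pipeline, not halfway through provisioning.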

Using Microsoft Azure and Databricks services, we’ve fully automated the ingestion of data from upstream systems into our centralized data platform. Lakeflow Spark Declarative Pipelines expose this data to Unity Catalog tables, making it immediately available for analytics users across the enterprise.

Metadata is automatically published to Unity Catalog, enabling enterprise-wide data discovery. Governance policies - such as least-privilege access - are enforced through configuration, ensuring that data is both accessible and secure.
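One way such a metadata configuration could be applied is by rendering it into SQL statements that set a table comment, tags, and read grants. The sketch below is illustrative only: the table name `main.logistics.shipments`, the group name, and the config layout are hypothetical, and the helper rejects values containing quotes or backslashes rather than guessing at escaping rules.

```python
def sql_literal(text):
    """Wrap a plain string in single quotes, refusing characters that need escaping."""
    if "'" in text or "\\" in text:
        raise ValueError(f"unsupported character in value: {text!r}")
    return "'" + text + "'"


def metadata_statements(table, config):
    """Render a hypothetical metadata config into comment, tag, and grant statements."""
    statements = [
        f"COMMENT ON TABLE {table} IS {sql_literal(config['description'])}"
    ]
    tags = ", ".join(
        f"{sql_literal(key)} = {sql_literal(value)}"
        for key, value in sorted(config["tags"].items())
    )
    statements.append(f"ALTER TABLE {table} SET TAGS ({tags})")
    # Least privilege: only the listed groups receive read access.
    for group in config["reader_groups"]:
        statements.append(f"GRANT SELECT ON TABLE {table} TO `{group}`")
    return statements


example_config = {
    "description": "Shipment scan events",
    "tags": {"domain": "logistics", "classification": "internal"},
    "reader_groups": ["logistics-analysts"],
}
statements = metadata_statements("main.logistics.shipments", example_config)
```

In a deployment pipeline, each rendered statement would then be executed against the workspace. Keeping the rendering step separate makes the output easy to review and test.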

The result is a fully automated system for provisioning data pipelines, collecting and exposing metadata, and creating analytics environments - enabling faster, more secure, and more scalable analytics across the organization.

Unity Catalog as our foundation for governance, efficiency and secure data access

Unity Catalog plays a foundational role in our architecture by unifying data access, governance, and discoverability across platforms. It enables us to deliver secure, trusted, and cost-efficient analytics at enterprise scale.

Centralized data discovery

Unity Catalog acts as a single source of truth for metadata, making it easier for users across the organization to discover and understand the data available for analytics. Column-level metadata, data classifications, and domain context accelerate access to the right data while reducing confusion.

Granular, policy-driven access control

Analytics users can browse enterprise data assets under a unified data access policy, which helps analytics teams find the data that fits their use case. For running analytics tasks and building solutions, access is managed at the table and column level based on data sensitivity and data domain, ensuring that users only see what they’re authorized to analyze. This gives our governance and security teams confidence that compliance requirements are met, even as data access expands across functions and business units.

Observability and monitoring at scale

Unity Catalog provides observability into how data is accessed and used. System tables and custom dashboards allow us to monitor:

- Query efficiency at the user level, helping teams tune workloads and optimize performance

- Compute usage and cost trends, enabling smarter capacity optimization by our users and planning by our IT administrators

- User activity monitoring, helping detect risky or non-compliant behavior before it becomes an issue
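As an example of the kind of query a cost dashboard might sit on, the sketch below builds a SQL statement over the `system.billing.usage` system table. The choice of columns and the 30-day default window are assumptions for illustration, not the queries behind the dashboards described here.

```python
def usage_by_workspace_query(days=30):
    """Build a query summing billed usage per workspace and SKU over recent days."""
    if not isinstance(days, int) or days <= 0:
        raise ValueError("days must be a positive integer")
    return (
        "SELECT workspace_id, sku_name, SUM(usage_quantity) AS total_usage\n"
        "FROM system.billing.usage\n"
        f"WHERE usage_date >= date_sub(current_date(), {days})\n"
        "GROUP BY workspace_id, sku_name\n"
        "ORDER BY total_usage DESC"
    )


query = usage_by_workspace_query(7)
```

In a notebook, the resulting string could be passed to `spark.sql(query)`, assuming the caller has been granted access to the system schema.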

Driving cost-efficient analytics

We’ve also implemented a series of platform-wide optimizations, made possible by Unity Catalog’s central governance model:

- Serverless compute that auto-scales based on demand

- Automatic cluster termination to prevent idle resource consumption

- Default retention policies that limit data storage to what's truly needed

- Runaway query timeout to control costs during open-ended exploration
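Automatic termination of idle clusters is the sort of control that can be enforced through a cluster policy. The sketch below builds a policy definition that pins `autotermination_minutes` to a fixed value. The 30-minute default and the function name are hypothetical, and the snippet only produces the JSON document without submitting it anywhere.

```python
import json


def idle_termination_policy(idle_minutes=30):
    """Return a policy definition fixing the idle timeout users cannot override."""
    if idle_minutes <= 0:
        raise ValueError("idle_minutes must be positive")
    return {
        "autotermination_minutes": {
            "type": "fixed",        # users cannot change the value
            "value": idle_minutes,  # minutes of inactivity before termination
            "hidden": True,         # hide the field in the cluster creation UI
        }
    }


policy = idle_termination_policy(20)
policy_json = json.dumps(policy, indent=2)
```

Generating the definition from code keeps the timeout in version control alongside the other configuration files.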

Together, these capabilities help us to operate a more efficient and secure analytics platform, while giving users the freedom to explore and innovate with trusted data.

Looking ahead and conclusion

Our journey is far from over. We’re continuing to evolve our platform in the following areas:

- Streamlined analytics environment creation: Create a simple GUI for self-service provisioning of analytics environments with the required IT, governance, and site reliability resources

- Enhanced diagnostic analytics: Use Databricks Notebooks and Unity Catalog for data transformation and discovery, paired with Azure Data Lake Storage and Event Hubs for ingestion and Power BI for reporting, to proactively surface and mitigate operational performance issues across the global FedEx enterprise

- Expanded predictive analytics: Leverage Databricks Notebooks, AutoML, and MLflow for model development and management, along with Unity Catalog for model metadata management, to improve forecasting as regional and global conditions evolve

- Evolving prescriptive analytics: As we continue to fine-tune and revamp the FedEx operations ecosystem, this capability is expected to incorporate a combination of Databricks and Azure services from the diagnostic and predictive capabilities above.

- Metadata augmentation with GenAI: Utilize generative AI programs to enrich data asset metadata and improve discoverability for analytics users

By automating IT processes and standardizing analytics environments with Databricks and Unity Catalog, we’ve built a platform that is not only scalable and secure but also deeply aligned with our business goals. This transformation has helped accelerate our time to insight and helped position FedEx to deliver smarter, more responsive supply chain solutions for the future.

Check out our session from the Data + AI Summit last summer to learn more.