Introducing the new Databricks Partner Program and Well-Architected Framework for ISVs and Data Providers

Raising the Bar for ISVs and Data Providers Partnering with Databricks

Summary

- Introducing a unified Databricks Partner Tiering Program that rewards impact across Connected Products, Delta Sharing, and Built-On architectures.

- Launching the Partner Well-Architected Framework (PWAF) with AI-ready, prescriptive guidance to help partners build secure, measurable, and scalable integrations faster.

- Providing clearer incentives, standards, and tools that connect architecture choices to tiering, GTM benefits, and joint customer success.

More than 20k customers - including over 60% of the Fortune 500 - rely on Databricks to detect fraud, discover new drugs, optimize their supply chains, personalize customer experiences, and more. As customers ask us to expand our product, they’re also asking us to broaden who we partner with to help them democratize data and AI across their enterprise. No matter how fast we innovate, we’ll never be able to meet every customer's needs alone.

This is where you - our ISV and Data Provider partners - come in. Together, we aspire to offer customers any tool, model, dataset, or agent they need, and help grow your business along the way.

If you want to go fast, go alone. If you want to go far, go together. —African Proverb

In the 10 months since joining Databricks to look after our Product Ecosystem, I've had the privilege of meeting hundreds of ISVs, Data Providers, and startups. You told me what's working well and where we need to improve, and we've got a lot in store to address your feedback.

Today at our Partner Kickoff in Vegas, I'm excited to announce the first two (of many) improvements to our ISV and Data Provider partner program: a new Partner Tiering Program to better reward partners for their customer impact across multiple partner architectures, and a Partner Well-Architected Framework that gives clear, AI-ready technical guidance for building world-class integrations with Databricks.

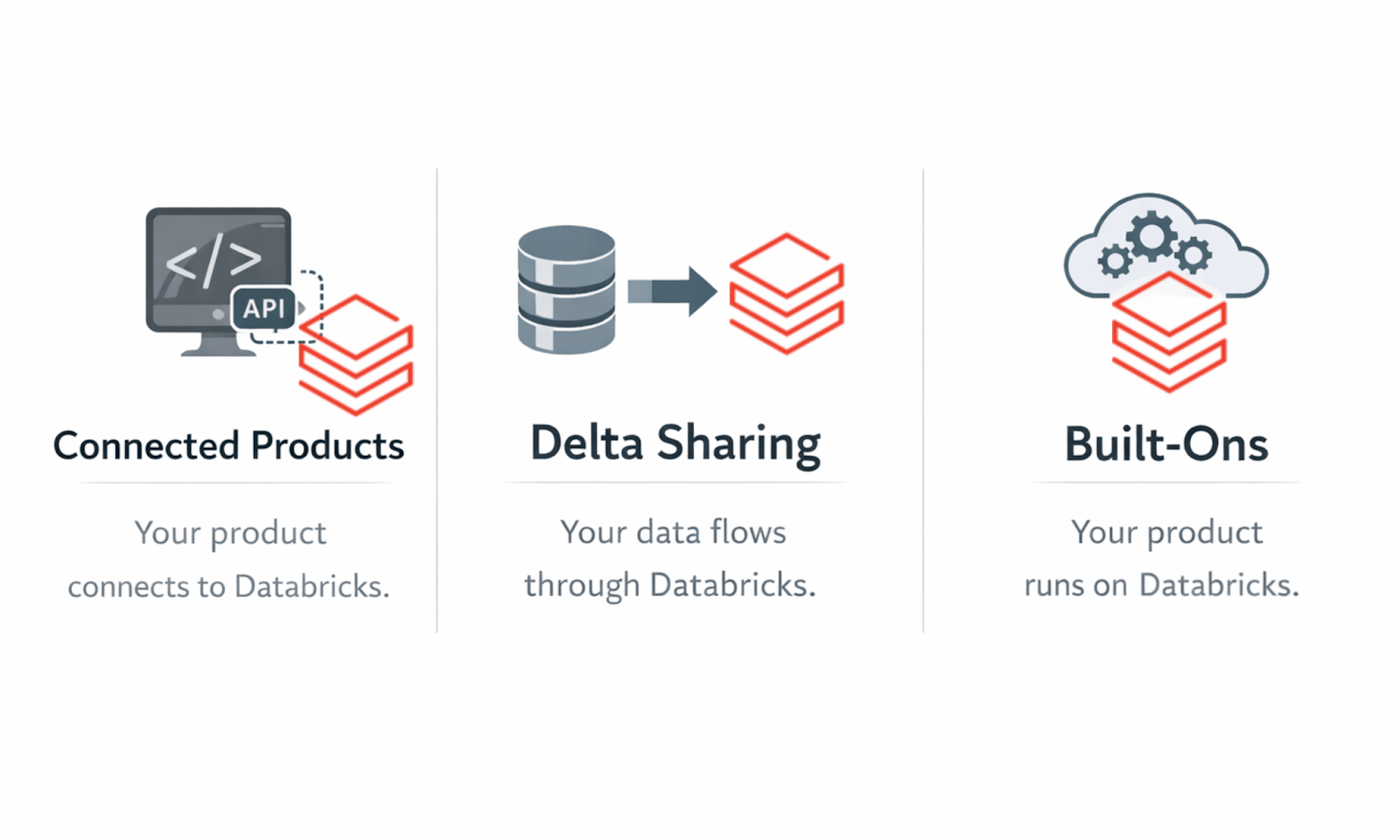

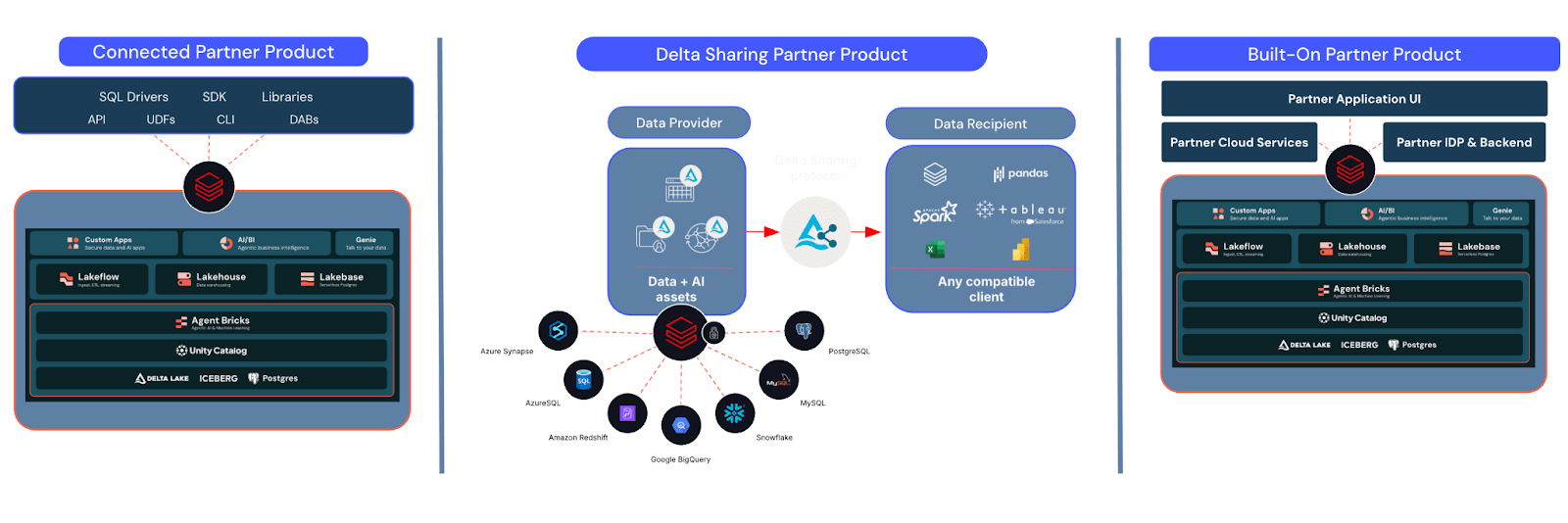

Three Partner Architectures for ISVs and Data Providers on Databricks

At the foundation of these announcements are the three architectures you co-build with us.

1. Connected Products are partner-built products that integrate with the customer’s Databricks platform to analyze, process, transform, and generate insights from their data. These integrations rely on drivers (e.g., JDBC, ODBC, Python) and APIs, are most commonly built by vendors of AI, BI, data governance, data transformation, and other data-intensive tools, and now also include MCP servers that can be published to our MCP Marketplace.

2. Delta Sharing is the only “one share to anywhere” protocol, allowing Data Providers to share their data with customers on any platform, using any tool, to power any model. It’s zero-copy, so you don’t have to replicate your data, and we manage all the cross-cloud networking and security complexity. Unlike the "walled garden" approaches offered by other data platforms, we'll continue to make Delta Shares work with any tool customers want. If the recipient accepts the share in Databricks, it simply appears in their Unity Catalog, ready to use, join, and query alongside the rest of their data, and it also works in Excel, any major BI tool, Snowflake, and others. This is likely why Delta Shares have grown more than 140% across the ecosystem this year.

3. Built-Ons are solutions built on top of Databricks, with Databricks serving as the foundational implementation behind the partner's own front-end/API/intellectual property. This is the newest motion for us, and the one that we're seeing the most demand for, particularly as ISVs build agents and applications with Agent Bricks, Lakebase, and Databricks Apps.
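For the Delta Sharing architecture described above, here is a minimal sketch of how a recipient outside Databricks might read a shared table with the open-source `delta-sharing` Python connector. The connector addresses a table with a URL of the form `<profile-file>#<share>.<schema>.<table>`, where the profile file is the credentials file issued by the Data Provider. The share, schema, table, and file names below are hypothetical, and the helper names (`SharedTable`, `table_url`, `load_preview`) are illustrative rather than part of any Databricks API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SharedTable:
    """Coordinates of one table inside a Delta Share (values are hypothetical)."""
    share: str
    schema: str
    table: str


def table_url(profile_path: str, ref: SharedTable) -> str:
    """Build the '<profile>#<share>.<schema>.<table>' URL the connector expects."""
    for part in (ref.share, ref.schema, ref.table):
        # Assumption: reject empty names and the two URL separator characters.
        if not part or "." in part or "#" in part:
            raise ValueError(f"invalid name component: {part!r}")
    return f"{profile_path}#{ref.share}.{ref.schema}.{ref.table}"


def load_preview(profile_path: str, ref: SharedTable, rows: int = 10):
    """Read the first rows of a shared table into a pandas DataFrame.

    Requires `pip install delta-sharing`; the import is lazy so that
    table_url() can be used without the connector installed.
    """
    import delta_sharing  # open-source Delta Sharing Python connector

    return delta_sharing.load_as_pandas(table_url(profile_path, ref), limit=rows)
```

A recipient would call something like `load_preview("config.share", SharedTable("vendor_share", "market", "daily_prices"))`, assuming the provider granted access to a table with those names. No data is copied ahead of time; the rows are fetched from the provider's share when the call runs.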

Previously, we've had different teams with different incentives pursuing partnerships across these architectures. This made sense as each new architecture was launched, as we had to test, measure, and learn what worked. What we learned was that many of you engage in more than one of these architectures and deserve to be rewarded accordingly, and that you want better guidance on *how* to build your integrations to meet customer expectations and get support from our field.

One Program, Three Partner Architectures

Our new Partner Tiering Program uses the same tiering structure as our Consulting & System Integrator program, and measures the combined impact of all three partner architectures - number of joint customers, Databricks consumption ($DBU) impact, commitment to Databricks, strategic services adoption, and GTM readiness - to determine tier placement and rewards in a holistic, merit-based system.

What this means for you:

- Get credit for everything you build across Connected Products, Delta Sharing, and Built-Ons

- Get “extra” credit for building with Lakebase, Genie, and/or Agent Bricks

- See how your architecture choices translate into tiers, benefits, and GTM support

We’ll be sharing more with you about the specific tiers and benefits throughout Q1 (Feb-Apr), and we’ll continue to refine the program based on your feedback before we officially roll it out by Q2.

Partner Well-Architected Framework: Building Better, Faster, Together

To provide you with better technical guidance, and greater consistency and transparency in how we validate all partner integrations, we're publishing the Partner Well-Architected Framework (PWAF).

PWAF provides partners with AI-ready prescriptive guidance for building integrations that are secure and reliable, and includes the instrumentation requirements that allow us to measure customer adoption and $DBU impact - some of the key metrics that determine your tier placement in the Partner Program.

Best of all, we've designed PWAF to be AI-ready from the ground up. In just a few clicks, you can use this guidance with Cursor, Claude Code, Replit, or your favorite AI-assisted IDE/developer tool and prompt your way to well-architected Databricks integrations.

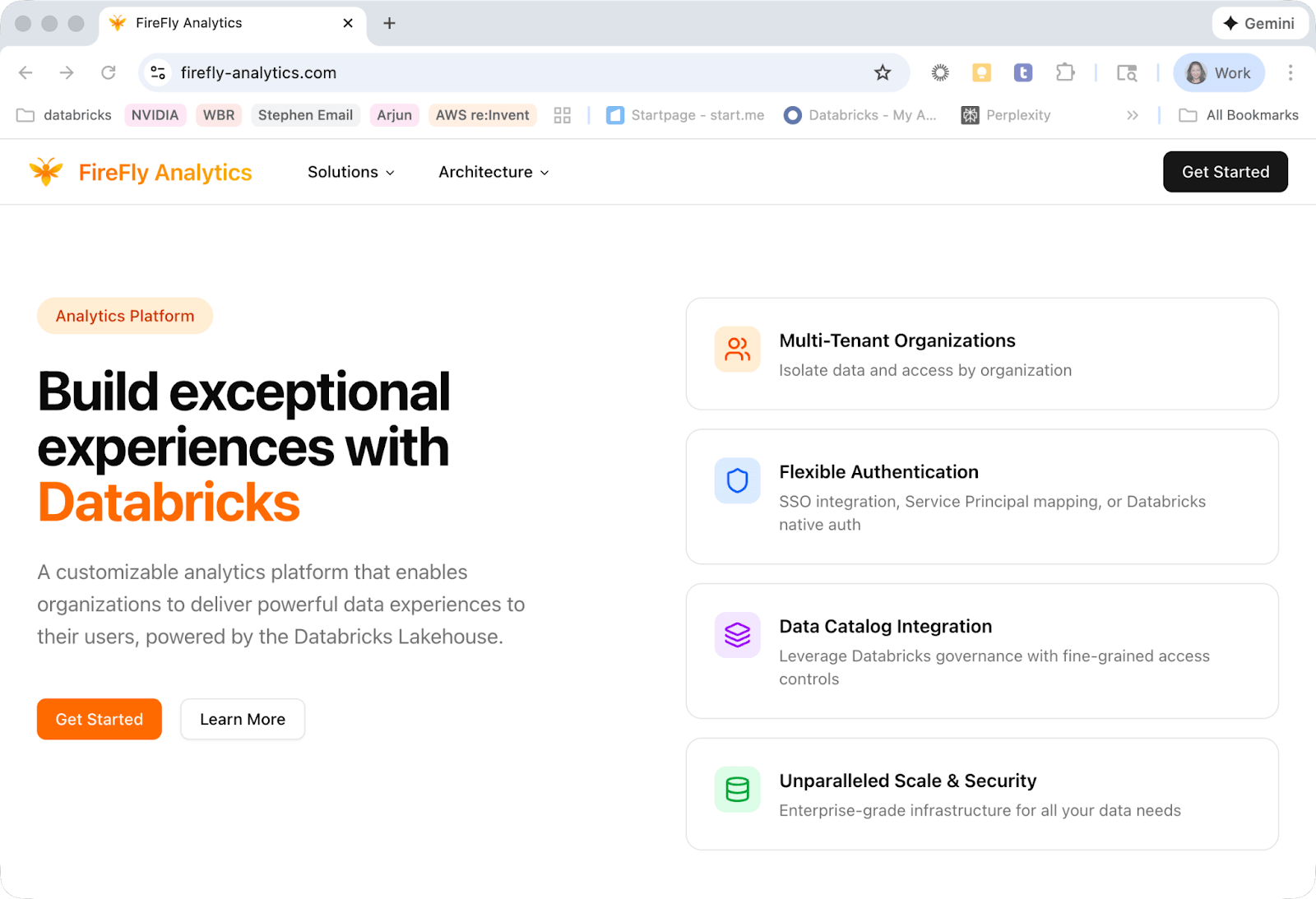

And, rather than just tell you what to do, we want to show you how. To accompany PWAF, we’ve built a fictitious company, “Firefly Analytics,” that’s been architected to follow PWAF’s best practices and gives you scaffolding to get started from a practical example. It's 100% vibe coded, and (soon) 100% open source.

Point your tools at PWAF and the Firefly repo (coming soon), and get building!

And we’re just getting started

These are the first of many improvements we’re working on to help you build transformative capabilities and grow your business by delighting our joint customers.

Thank you for all the feedback that is helping us build a better program. We’re looking forward to growing and building together this year!

Keep building,

—Stephen