Introducing Temporary Tables in Databricks SQL

Clean, simple, and fast SQL workloads — no clean-up required

Summary

- Build and test SQL logic without creating permanent tables

- Use fast, session-scoped tables that store physical data for staging and transformations

- Keep Unity Catalog clean with automatic cleanup at the end of each session

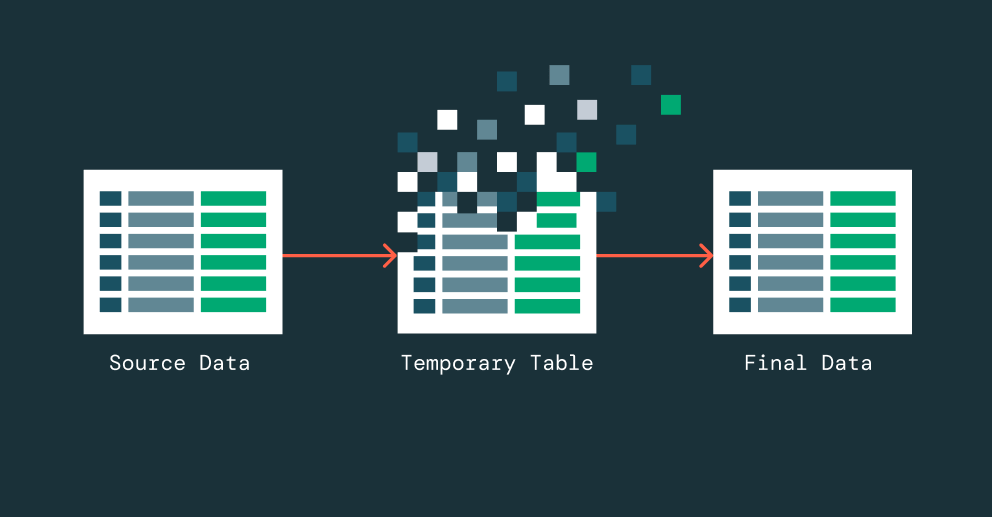

Databricks SQL now supports temporary tables. This gives teams a simple way to stage data, validate logic, and run iterative SQL workflows without adding objects to the catalog. Temporary tables behave like regular tables during your session. They store data physically and support common DML operations. When the session ends, they are removed.

This update continues the broader expansion of SQL language support in Databricks SQL. Recent work includes SQL Scripting, Stored Procedures, Collations, Recursive CTEs, and Spatial SQL. Temporary tables build on this work and help teams build pipelines with fewer steps and less overhead.

Many workloads from legacy data warehouses rely on temporary tables for staging and intermediate logic. Teams moving from these systems now keep familiar patterns without redesigning their pipelines. One customer captured the value clearly:

We’re thrilled about the addition of temporary tables. We started using temp tables immediately, as it simplifies how we are migrating complex procedural code from our existing legacy SQL Server warehouses to Databricks SQL. Temporary tables offer greater flexibility for data engineering with great performance. This is one of the key features that will accelerate our journey toward a fully unified and open Lakehouse architecture. —Matt Adams, Senior Data Platforms Developer, PacificSource

Temporary tables follow the ANSI standard. They will also be contributed to open source Apache Spark™.

How do Temporary Tables work?

Temporary tables are session-scoped, physical Delta tables. They store data in an internal Unity Catalog location tied to the workspace, and they use the same caching and performance features as standard Delta tables. Temporary views re-evaluate their underlying query every time they are referenced. Temporary tables are different: they persist their data for the duration of the session. This improves performance and avoids repeated computation.
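The small local sketch below makes the view-versus-table distinction concrete. It uses Python's standard-library `sqlite3` module as a stand-in and does not run on Databricks. SQLite's `CREATE TEMP VIEW` and `CREATE TEMP TABLE ... AS SELECT` behave analogously: the view re-runs its query on every read, while the table keeps the rows it captured at creation time. The `orders` table and its values are hypothetical.

```python
import sqlite3


def view_vs_table():
    """Contrast a temp view (re-evaluated on read) with a temp table (materialized)."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical permanent source table.
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 20.0)])

    # A temporary view stores only the query; it runs again on every read.
    conn.execute("CREATE TEMP VIEW v_total AS SELECT SUM(amount) AS total FROM orders")
    # A temporary table stores the query result physically when it is created.
    conn.execute("CREATE TEMP TABLE t_total AS SELECT SUM(amount) AS total FROM orders")

    # Change the source after both objects exist.
    conn.execute("INSERT INTO orders VALUES (3, 30.0)")

    view_total = conn.execute("SELECT total FROM v_total").fetchone()[0]
    table_total = conn.execute("SELECT total FROM t_total").fetchone()[0]
    conn.close()
    return view_total, table_total


print(view_vs_table())  # (60.0, 30.0)
```

The view sees the new row because it recomputes, while the temporary table answers from the data it already holds. That is why reusing a materialized intermediate result avoids repeated computation.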

A cleanup service removes temporary tables automatically when the session ends. For long-running sessions, cleanup runs once the session reaches 7 days. Failed jobs still trigger a cleanup, which prevents leftover data from affecting future workflows.
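You can illustrate session-scoped cleanup locally with SQLite, which drops a temporary table when its connection closes. In this sketch, one `sqlite3` connection plays the role of a session. It does not model Databricks' cleanup service or the 7-day limit. The `events` and `tmp_stage` tables and the database file name are hypothetical.

```python
import os
import sqlite3
import tempfile


def temp_table_lifecycle(db_path):
    """Return (temp visible in its session, temp visible after reconnect,
    permanent table visible after reconnect)."""
    temp_query = "SELECT COUNT(*) FROM sqlite_temp_master WHERE name = 'tmp_stage'"
    main_query = "SELECT COUNT(*) FROM sqlite_master WHERE name = 'events'"

    # Session 1: create a permanent table and a session-scoped temporary table.
    s1 = sqlite3.connect(db_path)
    s1.execute("CREATE TABLE events (id INTEGER)")
    s1.execute("CREATE TEMP TABLE tmp_stage AS SELECT * FROM events")
    s1.commit()
    in_session = s1.execute(temp_query).fetchone()[0] == 1
    s1.close()  # ending the session drops every temporary table it created

    # Session 2: a new connection to the same database file.
    s2 = sqlite3.connect(db_path)
    temp_after = s2.execute(temp_query).fetchone()[0] == 1
    perm_after = s2.execute(main_query).fetchone()[0] == 1
    s2.close()
    return in_session, temp_after, perm_after


with tempfile.TemporaryDirectory() as tmp_dir:
    result = temp_table_lifecycle(os.path.join(tmp_dir, "demo.db"))
print(result)  # (True, False, True)
```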

Creating a temporary table is as intuitive as it gets.
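The snippet below is a minimal sketch that assumes the ANSI-style `CREATE TEMPORARY TABLE ... AS SELECT` form; the Databricks documentation has the exact syntax and options. It creates `tmp_sales` from a source table and runs locally against SQLite through Python's `sqlite3` module, which accepts the same statement shape. The `raw_sales` table, its columns, and its rows are hypothetical.

```python
import sqlite3


def create_tmp_sales():
    """Create a session-scoped tmp_sales table from a hypothetical raw_sales table."""
    conn = sqlite3.connect(":memory:")  # one connection stands in for one session
    conn.execute("CREATE TABLE raw_sales (region TEXT, amount REAL, sale_date TEXT)")
    conn.executemany(
        "INSERT INTO raw_sales VALUES (?, ?, ?)",
        [
            ("EMEA", 120.0, "2025-01-01"),
            ("AMER", 80.0, "2025-01-01"),
            ("APAC", 50.0, "2024-12-31"),
        ],
    )
    # CREATE TEMPORARY TABLE ... AS SELECT materializes the query result
    # into a table that exists only for this connection.
    conn.execute(
        """
        CREATE TEMPORARY TABLE tmp_sales AS
        SELECT region, amount FROM raw_sales WHERE sale_date = '2025-01-01'
        """
    )
    return conn


conn = create_tmp_sales()
print(conn.execute("SELECT COUNT(*) FROM tmp_sales").fetchone()[0])  # 2
```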

Once created, tmp_sales behaves like a typical table.

You can query it directly:
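The next sketch queries a temporary table in local SQLite, with Python's `sqlite3` module standing in for Databricks SQL. No special syntax is needed. The `tmp_sales` rows here are hypothetical, and the query aggregates them by region.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical tmp_sales contents, loaded from literal rows.
conn.execute("CREATE TEMPORARY TABLE tmp_sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO tmp_sales VALUES (?, ?)",
    [("EMEA", 120.0), ("AMER", 80.0), ("EMEA", 30.0)],
)
# Query the temporary table exactly like any other table.
rows = conn.execute(
    "SELECT region, SUM(amount) AS total FROM tmp_sales GROUP BY region ORDER BY region"
).fetchall()
print(rows)  # [('AMER', 80.0), ('EMEA', 150.0)]
```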

INSERT or MERGE data INTO it:
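SQLite has no `MERGE` statement. This local sketch therefore emulates the upsert that `MERGE INTO ... WHEN MATCHED / WHEN NOT MATCHED` performs by using SQLite's `INSERT ... ON CONFLICT DO UPDATE` (available since SQLite 3.24). In Databricks SQL you would use `MERGE INTO` directly. The table contents are hypothetical. `region` is declared a primary key only because SQLite's upsert needs a unique constraint to detect matches.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# region is the (hypothetical) merge key, so it gets a uniqueness constraint.
conn.execute("CREATE TEMPORARY TABLE tmp_sales (region TEXT PRIMARY KEY, amount REAL)")

# Plain INSERT into the temporary table.
conn.executemany("INSERT INTO tmp_sales VALUES (?, ?)", [("EMEA", 120.0), ("AMER", 80.0)])

# Upsert a batch: existing regions are updated, new regions are inserted.
# This stands in for MERGE INTO ... WHEN MATCHED / WHEN NOT MATCHED.
updates = [("EMEA", 30.0), ("APAC", 50.0)]
conn.executemany(
    """
    INSERT INTO tmp_sales (region, amount) VALUES (?, ?)
    ON CONFLICT(region) DO UPDATE SET amount = amount + excluded.amount
    """,
    updates,
)
rows = conn.execute("SELECT region, amount FROM tmp_sales ORDER BY region").fetchall()
print(rows)  # [('AMER', 80.0), ('APAC', 50.0), ('EMEA', 150.0)]
```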

Use the temporary table in downstream transformations:
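Downstream, you can join the temporary table with permanent tables and persist only the final result. This local SQLite sketch (via Python's `sqlite3`) joins a hypothetical `tmp_sales` with a hypothetical permanent `region_targets` table and writes the outcome to a hypothetical permanent `sales_vs_target` table. The intermediate data never becomes a permanent object.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical permanent dimension table.
conn.execute("CREATE TABLE region_targets (region TEXT PRIMARY KEY, target REAL)")
conn.executemany("INSERT INTO region_targets VALUES (?, ?)", [("EMEA", 100.0), ("AMER", 100.0)])
# Session-scoped intermediate result.
conn.execute("CREATE TEMPORARY TABLE tmp_sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO tmp_sales VALUES (?, ?)", [("EMEA", 120.0), ("AMER", 80.0)])

# Downstream step: join the temp table with a permanent table and persist
# only the final result in a permanent (main schema) table.
conn.execute(
    """
    CREATE TABLE sales_vs_target AS
    SELECT s.region, s.amount, t.target, s.amount >= t.target AS met_target
    FROM tmp_sales AS s JOIN region_targets AS t ON s.region = t.region
    """
)
rows = conn.execute("SELECT region, met_target FROM sales_vs_target ORDER BY region").fetchall()
print(rows)  # [('AMER', 0), ('EMEA', 1)]
```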

What are the key benefits of Temporary Tables?

- Performance: Temporary tables physically persist intermediate data to avoid repeated computation and leverage disk caching to accelerate data reads

- Migration simplicity: Working with temporary tables uses the same, familiar syntax found in legacy data warehouses, adhering to the ANSI standard

- Governance and isolation: Temp tables are managed by Unity Catalog, and session-level isolation prevents conflicts, data exposure, and interference from other users

- Automatic cleanup: No tables are orphaned or left cluttering your catalog. Even failed jobs trigger a cleanup, ensuring no residual data lingers to affect future job runs.
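The isolation point can be sketched locally. Two SQLite connections to the same database file each create a temporary table with the same name, without conflict, and each connection sees only its own rows. Here SQLite's connection-scoped temp schema stands in for Databricks session isolation; this is not a model of Unity Catalog governance. The file and table names are hypothetical.

```python
import os
import sqlite3
import tempfile


def isolated_sessions(db_path):
    """Two sessions create same-named temp tables without interfering."""
    a = sqlite3.connect(db_path)
    b = sqlite3.connect(db_path)
    # Each session creates its own tmp_stage; the names do not collide.
    a.execute("CREATE TEMP TABLE tmp_stage AS SELECT 'from session A' AS note")
    b.execute("CREATE TEMP TABLE tmp_stage AS SELECT 'from session B' AS note")
    seen_by_a = a.execute("SELECT note FROM tmp_stage").fetchone()[0]
    seen_by_b = b.execute("SELECT note FROM tmp_stage").fetchone()[0]
    a.close()
    b.close()
    return seen_by_a, seen_by_b


with tempfile.TemporaryDirectory() as tmp_dir:
    result = isolated_sessions(os.path.join(tmp_dir, "shared.db"))
print(result)  # ('from session A', 'from session B')
```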

This combination of performance, efficiency, and reliability makes temporary tables a highly useful component in ETL pipelines, reporting jobs, and interactive analysis.

See the Databricks documentation for more information on using temporary tables during Public Preview.

Example ETL: Transforming raw event data into actionable user metrics

If you work on an e-commerce site or consumer platform, you will be familiar with user event data. Event data includes user actions like page views, sign-ups, and purchases. With this type of data, the goal for the data team is to convert raw events into actionable user metrics that power analytics dashboards, allow any business user to ask questions of the data with AI/BI Genie, and develop better products and experiences for customers.

In this scenario, temporary tables are leveraged in each step of the workload.

Step 1: Load today's events from the raw table into a temporary staging table
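Here is a local sketch of Step 1, using Python's `sqlite3` module in place of Databricks SQL. The `raw_events` schema, the sample rows, and the fixed `run_date` are all hypothetical. A fixed date is used instead of the current date so the result is deterministic.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical permanent raw events table; event_ts is a text timestamp.
conn.execute("CREATE TABLE raw_events (user_id TEXT, event_type TEXT, event_ts TEXT)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [
        ("u1", "page_view", "2025-01-02 09:15:00"),
        ("u1", "purchase", "2025-01-02 09:20:00"),
        ("u2", "sign_up", "2025-01-01 23:59:00"),  # previous day: excluded
    ],
)
run_date = "2025-01-02"  # hypothetical stand-in for "today"
# Stage only the run date's events in a session-scoped table.
conn.execute(
    """
    CREATE TEMPORARY TABLE stg_events AS
    SELECT * FROM raw_events WHERE date(event_ts) = ?
    """,
    (run_date,),
)
rows = conn.execute("SELECT user_id, event_type FROM stg_events ORDER BY event_ts").fetchall()
print(rows)  # [('u1', 'page_view'), ('u1', 'purchase')]
```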

Step 2: Clean and standardize timestamp data, remove invalid records, and extract key fields
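The following is a local SQLite sketch of Step 2. It assumes hypothetical staging rows with inconsistent timestamp formats, stray whitespace and casing in event types, a missing user ID, and an unparseable timestamp. SQLite's `datetime()` normalizes ISO-8601 strings and returns NULL for input it cannot parse, and the filter uses that to drop invalid rows. Databricks SQL has its own timestamp functions for the same job.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical staging data, as it might look after Step 1.
conn.execute("CREATE TEMPORARY TABLE stg_events (user_id TEXT, event_type TEXT, event_ts TEXT)")
conn.executemany(
    "INSERT INTO stg_events VALUES (?, ?, ?)",
    [
        ("u1", " Page_View ", "2025-01-02T09:15:00"),  # 'T' separator, messy type
        ("u1", "purchase", "2025-01-02 09:20:00"),
        (None, "page_view", "2025-01-02 10:00:00"),     # missing user: invalid
        ("u2", "sign_up", "not-a-date"),                # unparseable timestamp: invalid
    ],
)
# Standardize fields, keep only the key columns, and drop invalid records.
conn.execute(
    """
    CREATE TEMPORARY TABLE clean_events AS
    SELECT user_id,
           lower(trim(event_type)) AS event_type,
           datetime(event_ts)      AS event_ts  -- normalize to 'YYYY-MM-DD HH:MM:SS'
    FROM stg_events
    WHERE user_id IS NOT NULL
      AND datetime(event_ts) IS NOT NULL        -- NULL means the timestamp did not parse
    """
)
rows = conn.execute("SELECT * FROM clean_events ORDER BY event_ts").fetchall()
print(rows)
# [('u1', 'page_view', '2025-01-02 09:15:00'), ('u1', 'purchase', '2025-01-02 09:20:00')]
```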

Step 3: Aggregate user metrics across multiple event types
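This local SQLite sketch of Step 3 pivots event types into per-user counters with conditional aggregation (`SUM(CASE WHEN ...)`). That pattern is standard SQL and also works in Databricks SQL. The input rows and the `daily_user_metrics` name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical cleaned events, as Step 2 would produce them.
conn.execute("CREATE TEMPORARY TABLE clean_events (user_id TEXT, event_type TEXT, event_ts TEXT)")
conn.executemany(
    "INSERT INTO clean_events VALUES (?, ?, ?)",
    [
        ("u1", "page_view", "2025-01-02 09:15:00"),
        ("u1", "page_view", "2025-01-02 09:16:00"),
        ("u1", "purchase", "2025-01-02 09:20:00"),
        ("u2", "sign_up", "2025-01-02 11:00:00"),
    ],
)
# Turn event types into per-user counters with conditional aggregation.
conn.execute(
    """
    CREATE TEMPORARY TABLE daily_user_metrics AS
    SELECT user_id,
           SUM(CASE WHEN event_type = 'page_view' THEN 1 ELSE 0 END) AS page_views,
           SUM(CASE WHEN event_type = 'sign_up'   THEN 1 ELSE 0 END) AS sign_ups,
           SUM(CASE WHEN event_type = 'purchase'  THEN 1 ELSE 0 END) AS purchases,
           MAX(event_ts) AS last_seen
    FROM clean_events
    GROUP BY user_id
    """
)
rows = conn.execute("SELECT * FROM daily_user_metrics ORDER BY user_id").fetchall()
print(rows)
# [('u1', 2, 0, 1, '2025-01-02 09:20:00'), ('u2', 0, 1, 0, '2025-01-02 11:00:00')]
```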

Step 4: Merge aggregated user metrics into the production user_metrics table
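Here is a local SQLite sketch of Step 4. SQLite has no `MERGE`, so `INSERT ... SELECT ... ON CONFLICT DO UPDATE` stands in for `MERGE INTO user_metrics`: matched users get today's counts added, and new users are inserted. The `user_metrics` columns and the additive merge rule are assumptions for illustration. The `WHERE true` clause follows SQLite's documented advice for avoiding a parsing ambiguity when an upsert follows `INSERT ... SELECT`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical permanent production table with one existing user.
conn.execute(
    "CREATE TABLE user_metrics (user_id TEXT PRIMARY KEY, page_views INTEGER, purchases INTEGER)"
)
conn.execute("INSERT INTO user_metrics VALUES ('u1', 10, 1)")
# Today's aggregates, as Step 3 would leave them in a temporary table.
conn.execute(
    "CREATE TEMPORARY TABLE daily_user_metrics (user_id TEXT, page_views INTEGER, purchases INTEGER)"
)
conn.executemany(
    "INSERT INTO daily_user_metrics VALUES (?, ?, ?)",
    [("u1", 2, 1), ("u2", 3, 0)],
)
# Emulates MERGE INTO user_metrics: update matched users, insert new ones.
conn.execute(
    """
    INSERT INTO user_metrics (user_id, page_views, purchases)
    SELECT user_id, page_views, purchases FROM daily_user_metrics WHERE true
    ON CONFLICT(user_id) DO UPDATE SET
        page_views = page_views + excluded.page_views,
        purchases  = purchases  + excluded.purchases
    """
)
conn.commit()
rows = conn.execute("SELECT * FROM user_metrics ORDER BY user_id").fetchall()
print(rows)  # [('u1', 12, 2), ('u2', 3, 0)]
```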

By staging, cleaning, and aggregating event data in temporary tables, the business can efficiently compute daily engagement metrics without cluttering production storage or risking stale data. This keeps insights into customer activity and product performance accurate and up to date.

What’s next?

Temporary tables are currently available on Databricks SQL Classic, Pro, and Serverless, and support will expand beyond them. Standard shared clusters will support temporary tables in a future release.

Here is a preview of planned improvements:

- Lineage visibility for downstream tables

- Support for ALTER TABLE and CREATE OR REPLACE TABLE

- Support within Multi-Statement and Multi-Table Transactions

Get started

Existing Databricks users, teams migrating from another data warehouse, and anyone building SQL pipelines should take advantage of temporary tables to simplify how they manage complex SQL workflows. Get started with temp tables by reading the Databricks documentation.

To learn more about Databricks SQL, visit our website or read the documentation. You can also check out the product tour for Databricks SQL. If you want to migrate your existing warehouse to a high-performance, serverless data warehouse with a great user experience and lower total cost, then Databricks SQL is the solution. Try it for free.
