Predictive Optimization at Scale: A Year of Innovation and What’s Next

Faster queries, lower storage costs, and full automation across Unity Catalog managed tables

Summary

- Predictive Optimization now runs by default for new Unity Catalog managed tables and operates at massive scale

- New capabilities in 2025 delivered faster queries, cheaper storage maintenance, and broader platform coverage

- In 2026, Predictive Optimization expands into data lifecycle automation and deeper observability

Introduction

The most performant, cost-effective lakehouse is one that optimizes itself as data volumes, query patterns, and organizational usage continue to evolve. Predictive Optimization (PO) in Unity Catalog enables this behavior by continuously analyzing how data is written and queried, then applying the appropriate maintenance actions automatically without requiring manual work from users or platform teams. In 2025, Predictive Optimization moved from an optional automation feature to the default platform behavior, managing performance and storage efficiency across millions of production tables while removing the operational burden traditionally associated with table tuning. Here’s a look at the milestones that got us here, and what’s coming next in 2026.

Adoption at scale across the lakehouse

Throughout 2025, Predictive Optimization saw rapid adoption across the Databricks Platform as customers increasingly relied on autonomous maintenance to manage a growing data estate. Over the past year:

- Exabytes of unreferenced data were vacuumed, resulting in tens of millions of dollars in storage cost savings

- Hundreds of petabytes of data were compacted and clustered to improve query performance and file pruning efficiency

- Millions of tables adopted Automatic Liquid Clustering for autonomous data layout management

Based on consistent performance improvements observed at this scale, Predictive Optimization is now enabled by default for all new Unity Catalog managed tables, workspaces, and accounts.

How Predictive Optimization Works

Predictive Optimization (PO) functions as the platform intelligence layer for the lakehouse, continuously optimizing your data layout, reducing storage footprint, and maintaining the precise file statistics required for efficient query planning on UC managed tables.

Based on observed usage patterns, PO automatically determines when and how to run commands like:

- OPTIMIZE, which compacts small files and improves data locality for efficient access

- VACUUM, which deletes unreferenced files to control storage costs

- CLUSTER BY, which selects optimal clustering columns for tables with Automatic Liquid Clustering

- ANALYZE, which maintains accurate statistics for query planning and data skipping

All optimization decisions are workload-driven and adaptive, eliminating the need to manage schedules, tune parameters, or revisit optimization strategies as query patterns change.
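To make the idea of compaction concrete, here is a toy Python sketch of grouping small files into larger rewrite batches. It is not how OPTIMIZE is implemented: the function name `plan_compaction`, the target size, and the greedy first-fit strategy are all assumptions chosen for illustration.

```python
from typing import Dict, List


def plan_compaction(file_sizes: Dict[str, int], target_bytes: int) -> List[List[str]]:
    """Greedily group files smaller than target_bytes into bins of at most target_bytes.

    Hypothetical helper for illustration; a real engine weighs many more signals.
    """
    # Consider only small files, largest first, so big pieces are placed early.
    small = sorted(
        (name for name, size in file_sizes.items() if size < target_bytes),
        key=lambda name: file_sizes[name],
        reverse=True,
    )
    bins: List[List[str]] = []
    bin_sizes: List[int] = []
    for name in small:
        size = file_sizes[name]
        # First-fit: place the file in the first bin that still has room.
        for i, used in enumerate(bin_sizes):
            if used + size <= target_bytes:
                bins[i].append(name)
                bin_sizes[i] += size
                break
        else:
            bins.append([name])
            bin_sizes.append(size)
    # A bin holding a single file would not reduce the file count, so drop it.
    return [b for b in bins if len(b) > 1]


# Made-up sizes: four small files and one file that is already large enough.
example_plan = plan_compaction({"a": 60, "b": 50, "c": 40, "d": 30, "big": 200}, 100)
```

In this example the four small files are rewritten as two larger ones, and the file that already exceeds the target is left alone.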


Key Advances in Predictive Optimization in 2025

Automatic Statistics for 22% Faster Queries

Accurate statistics are critical for building efficient query plans, yet manually managing statistics becomes increasingly impractical as data volume and query diversity grow.

With Automatic Statistics (now generally available), Predictive Optimization determines which columns matter based on observed query behavior and ensures that statistics remain up to date without manual ANALYZE commands.

Statistics are maintained through two complementary mechanisms:

- Stats-on-write captures statistics as data is written with minimal overhead, a method that is 7-10x more performant than running ANALYZE TABLE

- Background refresh updates statistics when they become stale due to data changes or evolving query patterns

Across real customer production workloads, this approach delivered up to 22% faster queries while removing the operational cost of manual statistics management.
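The difference between the two mechanisms can be sketched with a toy model in Python. This is an illustration under stated assumptions, not the Databricks implementation: `ColumnStats`, `is_stale`, and the 20% staleness threshold are hypothetical names and values. The point is that stats-on-write folds each new batch into a running summary instead of rescanning the table, while a background check decides when a refresh is due.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class ColumnStats:
    """Running min/max/null-count summary for one column (hypothetical structure)."""
    min_value: Optional[float] = None
    max_value: Optional[float] = None
    null_count: int = 0
    row_count: int = 0

    def update(self, values: Iterable[Optional[float]]) -> None:
        # Fold a newly written batch into the summary without
        # rereading rows that were written earlier.
        for v in values:
            self.row_count += 1
            if v is None:
                self.null_count += 1
                continue
            self.min_value = v if self.min_value is None else min(self.min_value, v)
            self.max_value = v if self.max_value is None else max(self.max_value, v)


def is_stale(stats_row_count: int, table_row_count: int, threshold: float = 0.2) -> bool:
    """Assumed rule: refresh when the row count has drifted by more than `threshold`."""
    if table_row_count == 0:
        return False
    return abs(table_row_count - stats_row_count) / table_row_count > threshold


stats = ColumnStats()
stats.update([3.0, None, 7.5])  # first write
stats.update([1.0, 9.0])        # second write
needs_refresh = is_stale(stats.row_count, table_row_count=10)
```

After the two writes the summary covers five rows; if the table has since grown to ten rows, the assumed rule flags the statistics for a background refresh.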

6x Faster and 4x Cheaper VACUUMs

VACUUM plays a critical role in managing storage costs and compliance by deleting unreferenced data files. Standard vacuuming requires listing all files in a table directory to identify candidates for removal, an operation that can take over 40 minutes for tables with 10 million files.

Predictive Optimization now applies an optimized VACUUM execution path that leverages the Delta transaction log to identify removable files directly, avoiding costly directory listings whenever possible.

At scale, this resulted in:

- Up to 6x faster VACUUM execution

- Up to 4x lower compute cost compared to standard approaches

The engine dynamically determines when to use this log-based approach and when to perform a full directory scan to clean up fragments from aborted transactions.
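The trade-off between the two paths can be illustrated with a small Python model. Everything here is a simplified assumption: the log is a list of `(action, path, commit_time)` tuples, and the helper names `replay`, `removable_from_log`, and `removable_from_listing` are hypothetical. The log-based path finds files that were explicitly removed without touching storage, while only the full listing can find files that were written but never committed.

```python
from typing import Dict, List, Set, Tuple

# Toy log entry: (action, path, commit_time). "add" makes a file part of the
# table; "remove" marks it as no longer referenced.
LogEntry = Tuple[str, str, int]


def replay(log: List[LogEntry]) -> Tuple[Set[str], Dict[str, int]]:
    """Return (live files, removal time of each unreferenced file)."""
    live: Set[str] = set()
    removed_at: Dict[str, int] = {}
    for action, path, ts in log:
        if action == "add":
            live.add(path)
            removed_at.pop(path, None)
        elif action == "remove":
            live.discard(path)
            removed_at[path] = ts
    return live, removed_at


def removable_from_log(log: List[LogEntry], now: int, retention: int) -> Set[str]:
    """Log-based path: no directory listing, only files the log says were removed."""
    _, removed_at = replay(log)
    return {p for p, ts in removed_at.items() if now - ts > retention}


def removable_from_listing(log: List[LogEntry], listing: Dict[str, int],
                           now: int, retention: int) -> Set[str]:
    """Full-scan path: `listing` maps every path on storage to its modified time."""
    live, removed_at = replay(log)
    result: Set[str] = set()
    for path, mtime in listing.items():
        if path in live:
            continue
        # Removed files age from their removal time; untracked files from mtime.
        if now - removed_at.get(path, mtime) > retention:
            result.add(path)
    return result


log = [("add", "f1", 1), ("add", "f2", 2), ("remove", "f1", 10),
       ("add", "f3", 10), ("remove", "f2", 95), ("add", "f4", 95)]
# "orphan" was written by an aborted transaction and never appears in the log.
listing = {"f1": 1, "f2": 2, "f3": 10, "f4": 95, "orphan": 5}

from_log = removable_from_log(log, now=100, retention=50)
from_listing = removable_from_listing(log, listing, now=100, retention=50)
```

Here the log-based path returns only `f1`, while the full scan also picks up `orphan`. That gap is why an occasional directory scan is still needed.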

Automatic Liquid Clustering

Automatic Liquid Clustering reached general availability in 2025 and is already optimizing millions of tables in production.

The process is entirely workload-driven:

- First, PO analyzes telemetry from all queries on your table, observing key metrics like predicate columns, filter expressions, and the number and size of files read and pruned.

- Next, it performs workload modeling, identifying and testing various candidate clustering key combinations (e.g., clustered on date, or customer_id, or both).

- Finally, PO runs a cost-benefit analysis to select the single best clustering strategy that will maximize query pruning and reduce data scanned, even determining if the table's existing insertion order is already sufficient.

You get faster queries with zero manual tuning. By automatically analyzing workloads and applying the optimal data layout, PO removes the complex task of clustering key selection and ensures your tables remain highly performant as your query patterns evolve.
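The three steps above can be sketched as a toy cost model in Python. This is an assumption-heavy illustration, not PO's actual algorithm: a plain lexicographic sort stands in for the real clustering layout, queries are reduced to equality predicates, cost is simply the number of files that min/max pruning cannot skip, and `files_scanned` and `pick_clustering_key` are hypothetical names.

```python
from typing import Dict, List, Tuple

Row = Dict[str, int]
Query = Dict[str, int]  # equality predicates: column -> value
Key = Tuple[str, ...]


def files_scanned(rows: List[Row], key: Key, queries: List[Query],
                  rows_per_file: int) -> int:
    """Lay rows out by `key`, split into files, and count files pruning cannot skip."""
    # An empty key means "keep the existing insertion order".
    ordered = sorted(rows, key=lambda r: tuple(r[c] for c in key)) if key else list(rows)
    files = [ordered[i:i + rows_per_file] for i in range(0, len(ordered), rows_per_file)]
    total = 0
    for query in queries:
        for f in files:
            # A file must be read if every predicate value lies within its min/max range.
            if all(min(r[c] for r in f) <= v <= max(r[c] for r in f)
                   for c, v in query.items()):
                total += 1
    return total


def pick_clustering_key(rows: List[Row], candidates: List[Key], queries: List[Query],
                        rows_per_file: int) -> Tuple[Key, Dict[Key, int]]:
    """Evaluate every candidate against the workload and return the cheapest one."""
    costs = {key: files_scanned(rows, key, queries, rows_per_file) for key in candidates}
    best = min(candidates, key=lambda k: costs[k])
    return best, costs


# 16 rows inserted in customer_id order, with dates interleaved within each customer.
rows = [{"date": i % 4, "customer_id": i // 4} for i in range(16)]
# Observed workload: mostly filters on date.
queries = [{"date": 0}, {"date": 1}, {"date": 2}, {"customer_id": 3}]
candidates = [(), ("date",), ("customer_id",), ("date", "customer_id")]

best_key, costs = pick_clustering_key(rows, candidates, queries, rows_per_file=4)
```

With this workload, clustering on `date` reads 7 files in total versus 13 for the insertion order, so it wins. If the workload filtered mostly on `customer_id`, the same model would conclude that the existing insertion order is already sufficient.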

Platform-wide Coverage

Predictive Optimization has expanded beyond traditional tables to cover more of the Databricks Platform.

- PO now natively integrates with Lakeflow Spark Declarative Pipelines (SDP), bringing autonomous background maintenance to both Materialized Views and Streaming Tables.

- PO works on both managed Delta and Iceberg tables.

- PO is enabled by default for all new Unity Catalog-managed tables, workspaces, and accounts.

This ensures autonomous maintenance across your full data estate rather than isolated optimization of individual tables.

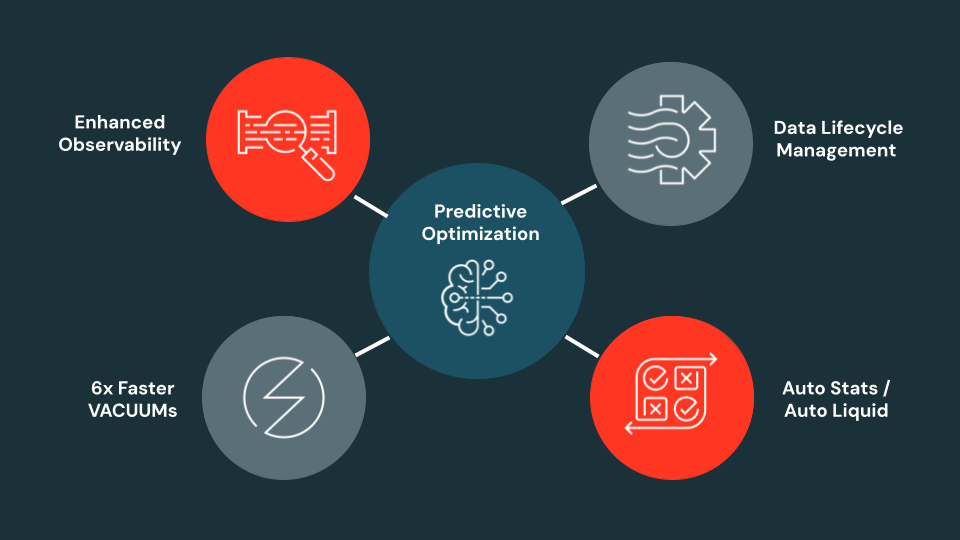

What’s Coming Next in 2026?

We’re committed to delivering features that replace manual table tuning with automated maintenance. In parallel, we’re planning to extend beyond physical table health to address total data lifecycle intelligence: automated storage cost savings, data lifecycle management, and row deletion. We are also prioritizing enhanced observability, integrating Predictive Optimization insights into common table operations and the Governance Hub to provide clearer visibility into PO operations and their ROI.

Auto-TTL (Automatic Row Deletion)

Managing data retention and controlling storage costs are critical, yet often manual, tasks. We're excited to introduce Auto-TTL, a new Predictive Optimization capability that completely automates row deletion. Using this feature, you’ll be able to set a simple time-to-live policy directly on any UC managed table with a single command.

Once the policy is set, Predictive Optimization takes care of the rest. It automates the entire two-step process by first running a DELETE operation to soft-delete the expired rows, and then following up with a VACUUM to permanently remove them from physical storage.
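The two-step flow can be modeled with a short Python sketch. It does not attempt to reproduce the Auto-TTL command syntax, and it is not the actual implementation: `ToyTable` is a hypothetical in-memory stand-in, the soft delete is modeled as a file rewrite, and the vacuum step ignores the retention window a real VACUUM would respect.

```python
from typing import Dict, List, Set


class ToyTable:
    """In-memory stand-in for a table: files on storage plus the set the table references."""

    def __init__(self, files: Dict[str, List[int]]):
        # Each file maps to the event timestamps of the rows it holds.
        self.storage: Dict[str, List[int]] = dict(files)
        self.live: Set[str] = set(files)
        self._next_id = 0

    def rows(self) -> List[int]:
        """Timestamps of the rows currently visible to queries."""
        return sorted(ts for name in self.live for ts in self.storage[name])

    def delete_expired(self, now: int, ttl: int) -> None:
        """Step 1 (soft delete): rewrite affected files; the old files stay on storage."""
        for name in sorted(self.live):
            file_rows = self.storage[name]
            kept = [ts for ts in file_rows if now - ts <= ttl]
            if len(kept) == len(file_rows):
                continue  # nothing expired in this file
            self.live.discard(name)
            if kept:
                new_name = f"rewritten-{self._next_id}"
                self._next_id += 1
                self.storage[new_name] = kept
                self.live.add(new_name)

    def vacuum(self) -> List[str]:
        """Step 2: physically remove files the table no longer references."""
        dead = sorted(set(self.storage) - self.live)
        for name in dead:
            del self.storage[name]
        return dead


table = ToyTable({"f1": [1, 2], "f2": [50, 99], "f3": [98, 99]})
table.delete_expired(now=100, ttl=10)
visible_after_delete = table.rows()
on_storage_after_delete = sorted(table.storage)
vacuumed = table.vacuum()
```

After the first step, expired rows are invisible to queries but their bytes are still on storage in `f1` and `f2`. Only the second step frees that space, which is why both steps matter for retention and cost.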

Reach out to your account team today to try this in Private Preview!

Enhanced Observability

Improved Predictive Optimization Observability

You will be able to track the direct impact and ROI of Predictive Optimization in the new Data Governance Hub. This observability dashboard will come out of the box with a centralized view into PO's operations, surfacing key metrics that quantify its value.

Use this to see exactly what PO is doing under the hood, with clear visualizations for bytes compacted, bytes clustered by Liquid, bytes vacuumed, and bytes analyzed. Most importantly, the hub translates these actions into direct business value by showing your estimated storage cost savings. This will make it easier than ever to understand and communicate the positive impact PO is having on both your storage costs and query performance.

In DESCRIBE EXTENDED, you will also be able to see the reasons that Predictive Optimization skipped an optimization (for example, the table is already well clustered or is too small to benefit from compaction).

Furthermore, we’ve added the ability to see column selections for data skipping and Automatic Liquid Clustering in the PO system table.

Reach out to your account team today to try the Data Governance Hub in Private Preview!

Improved Table-level Storage Observability

To provide greater clarity into your storage footprint, we will introduce enhanced observability features for Predictive Optimization. You will be able to monitor the health and evolution of your tables through high-level metrics like file counts and storage growth. By surfacing these insights directly, we’re making it easier to visualize the impact of automated maintenance and identify new opportunities to reduce costs and streamline your data estate.

Get started with Predictive Optimization

Predictive Optimization is available today for Unity Catalog managed tables and is enabled by default for new workloads.

When enabled, customers automatically benefit from faster VACUUM execution, workload-aware Automatic Statistics, and autonomous data layout through Automatic Liquid Clustering.

You can also explore Auto-TTL and Predictive Optimization observability (Data Governance Hub) through Private Preview by reaching out to your account team.
