Enabling Business Users on Databricks

Your blueprint for building a data-driven workforce with Databricks

Summary

- Empower business users to move beyond IT bottlenecks to explore and act on data directly.

- Learn about key Databricks tools, including AI/BI Dashboards and Genie, Databricks One, Lakeflow, and Unity Catalog, that enable self-service insights.

- Understand real-world scenarios showing how sales, claims, and marketing teams make faster, smarter decisions.

It's Thursday, 2:47 PM. Lisa Chen, Regional Sales Manager for a growing SaaS company, stares at her inbox with growing dread. Tomorrow's board meeting starts at 9 AM, and she still doesn't have the regional performance numbers the CEO requested three days ago. The data team promised the report by EOD Wednesday. Then by noon today. Her last Slack message was answered with "still working on it—lots of data sources to reconcile."

Lisa's story isn't unique. Across industries, business professionals—product managers, operations leads, marketing analysts, claims directors—know this pain all too well. They understand their business inside and out, but they’re stuck waiting for IT or data teams to reconcile systems, clean pipelines, and build reports.

But what if that wasn't the case? What if every business user could explore data, ask questions, and make decisions without learning to code or waiting for IT? That is the reality Databricks is unlocking.

Meet the People with the Problem

Lisa - Regional Sales Manager, TechStart Inc.

Every Monday morning, Lisa needs territory performance metrics to guide her team's weekly strategy. Currently, she downloads CSV files from Salesforce, pulls customer satisfaction data from their survey platform, and manually matches everything against the company's financial dashboard. By Wednesday, she has insights. By Friday, the data is already outdated, and opportunities are slipping through the cracks.

"I know my territories better than anyone, but I spend more time manipulating spreadsheets than actually managing sales."

Marcus - Claims Operations Director, SecureLife Insurance

Marcus oversees fraud detection and claims processing efficiency. He relies on weekly Power BI reports from IT that show fraud patterns and processing times. When he spots something unusual—like a 15% spike in auto claims from a specific region—he can't drill down immediately. Instead, he submits another data request and waits three days while potential fraud continues.

"By the time I get the detailed analysis, the bad actors have moved on to new schemes."

Priya - Digital Marketing Manager, RetailFlow

Priya tracks campaign performance across six different channels: social media, email, paid search, display advertising, their mobile app, and website analytics. Each platform exports data differently. Attribution analysis (understanding which touchpoints actually drive conversions) requires manually joining data from all six sources. A comprehensive campaign analysis takes two weeks. Most campaigns end before she can optimize them.

"I'm making million-dollar media decisions based on gut feeling because the data arrives too late to be useful."

The Complete Business User Journey on Databricks

Business users operate differently from engineering teams. They think in terms of outcomes, not queries; decisions, not deployments. When they need an answer—whether it’s “Which products are driving the most margin this quarter?” or “Where should we focus our retention efforts?”—the ideal workflow is one that gets them there as quickly and intuitively as possible.

The diagram above illustrates how Databricks transforms the business user experience by creating multiple pathways from raw data to actionable insights. Unlike traditional data architectures that force business users into rigid, IT-dependent workflows, Databricks provides a flexible ecosystem where different user types can access the same underlying data through their preferred interfaces.

1. Data Ingestion and Federation: Eliminating the Integration Bottleneck

For business users like Lisa, Marcus, and Priya, the real frustration begins with fragmented data. Sales metrics live in Salesforce, survey results in customer platforms, claims data in insurance systems, and marketing performance across a half-dozen channels. Each dataset speaks a different language, leaving business users stuck waiting while IT reconciles and pipelines catch up.

Databricks removes this bottleneck by unifying data access at the source. With Lakeflow, teams can automate ingestion of data from enterprise applications, and with Lakehouse Federation, they can query multiple systems directly without moving the data first. The result: when Lisa opens her laptop on Monday morning, her sales, survey, and financial data is already clean, joined, and ready. External datasets are just as accessible. Through Delta Sharing and the Databricks Marketplace, Marcus can benchmark fraud patterns against industry data instantly—turning what used to take weeks into real-time comparison.

2. The Core Data Platform: Your Single Source of Truth

Instead of Marcus waiting three days for IT to extract and prepare fraud analysis data, all his information—historical patterns, current claims, external watchlists, and risk scores—is immediately available in an open, consistent, queryable format on the Databricks Platform.

Unity Catalog serves as the governance layer that makes self-service possible. Business users can explore data confidently, knowing they're always accessing the right, permissioned datasets. No more spreadsheet version control nightmares or compliance concerns that typically slow down business analysis.

Beyond governance, Unity Catalog also introduces UC Metric Views—a semantic layer that defines business metrics in a consistent, reusable way. Instead of each team reinventing calculations like “active customer,” “churn rate,” or “claims cycle time,” these metrics are defined once and reused everywhere. For business users, this means less time second-guessing formulas and more time acting on shared truths across the organization.
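The define-once, reuse-everywhere idea can be illustrated with a small sketch. This is not Metric View syntax; it only mimics the concept with a plain dictionary of SQL expressions and an in-memory SQLite table. The table, column, and metric names, the sample rows, and the churn formula are all hypothetical.

```python
import sqlite3
from typing import List, Optional, Tuple

# Hypothetical shared metric definitions: each metric is written once as a
# SQL expression and reused by every consumer. This imitates a semantic
# layer; it is NOT Unity Catalog Metric View syntax.
METRICS = {
    "churn_rate": "1.0 * SUM(churned) / COUNT(*)",
    "active_customers": "SUM(CASE WHEN churned = 0 THEN 1 ELSE 0 END)",
}


def metric_query(metric: str, table: str, group_by: Optional[str] = None) -> str:
    """Build a SELECT statement that uses the shared definition of `metric`."""
    expr = METRICS[metric]  # raises KeyError for a metric nobody defined
    if group_by:
        return (
            f"SELECT {group_by}, {expr} AS {metric} "
            f"FROM {table} GROUP BY {group_by} ORDER BY {group_by}"
        )
    return f"SELECT {expr} AS {metric} FROM {table}"


def demo() -> Tuple[float, List[Tuple[str, float]]]:
    """Run the same metric definition at two levels of detail."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (region TEXT, churned INTEGER)")
    # Made-up sample rows, for illustration only.
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("East", 0), ("East", 1), ("West", 0), ("West", 0)],
    )
    overall = conn.execute(metric_query("churn_rate", "customers")).fetchone()[0]
    by_region = conn.execute(
        metric_query("churn_rate", "customers", group_by="region")
    ).fetchall()
    conn.close()
    return overall, by_region
```

Because both the company-wide and the per-region queries pull the expression from the same `METRICS` entry, changing the definition in one place changes it for every consumer, which is the property the semantic layer is meant to guarantee.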

3. Consumption: Multiple Paths to the Same Powerful Insights

The same governed, unified data, now expressed in business-friendly metrics, is accessible through multiple interfaces that match how different users actually work. This replaces the rigid, one-size-fits-all approach that frustrates business professionals across skill levels and workflow preferences.

- Excel users can keep working in familiar spreadsheets, now powered by live, governed connections—eliminating weekly CSV downloads and hours of manual wrangling.

- Dashboard users see real-time updates in BI tools or Databricks AI/BI Dashboards, spotting anomalies the moment they happen instead of days later.

- Business power users can ask questions in plain English with AI/BI Genie, tap into curated datasets with Lakebase, or design workflows visually in Lakeflow Designer—shifting from data consumers to data explorers.

- Advanced business users extend insights even further with Agent Bricks, deploying AI assistants that continuously monitor data, flag anomalies, and recommend actions directly inside business workflows.

Business User Persona <-> Databricks Capability Mapping

A Day in the Life of a Business User on Databricks

Business users want speed, simplicity, and trust: the ability to explore data, collaborate, and make decisions without waiting on IT—all while staying governed and secure. Databricks makes this possible by streamlining every step of their day.

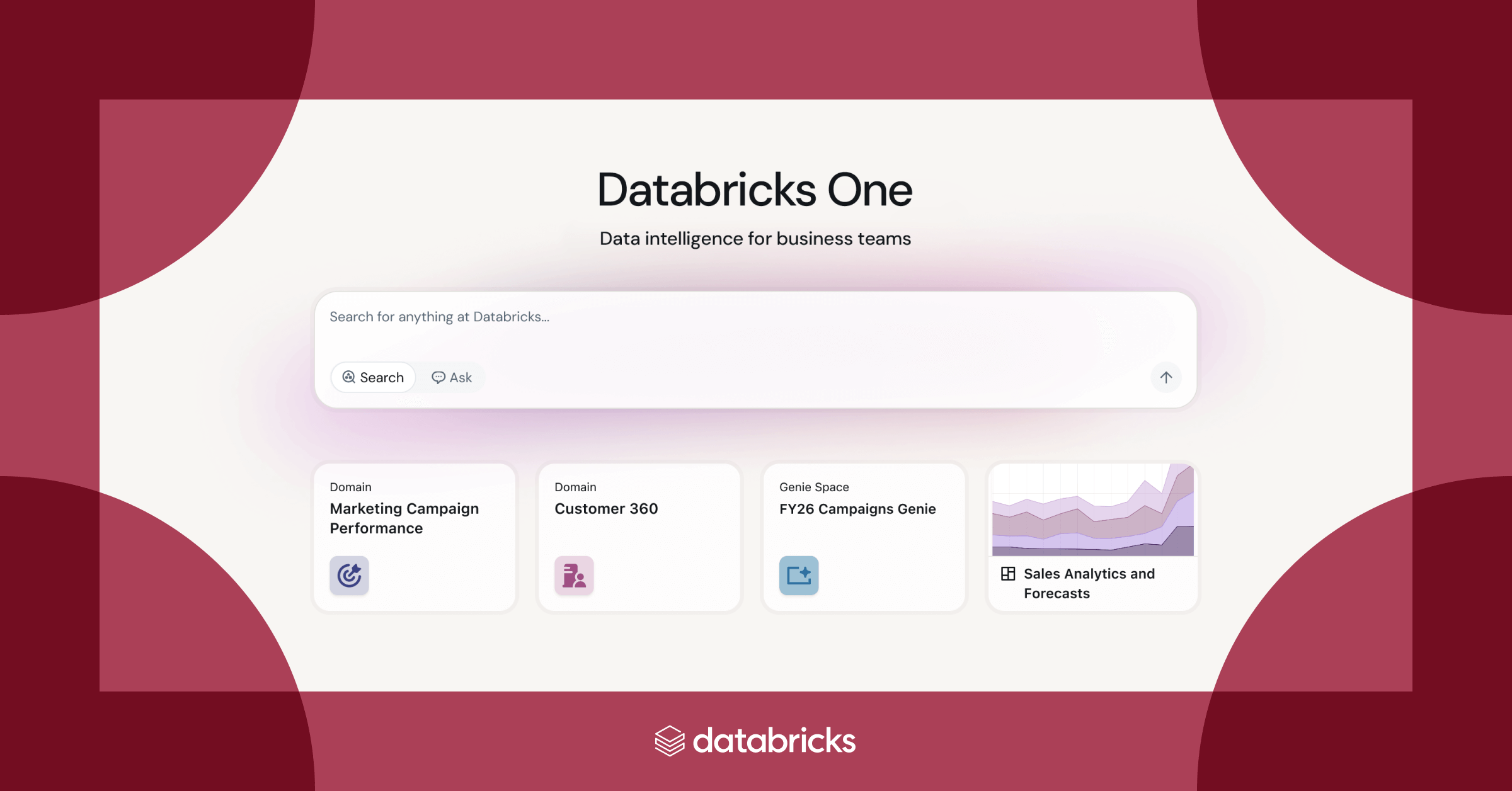

Everything begins with a seamless login through Databricks One, giving business users immediate access to trusted data, dashboards, and apps. Discovery is then simple: with Unity Catalog’s Unified Search, they can find the right datasets, dashboards, or AI models in seconds—surfacing results by relevance, quality, and lineage without needing to know exact table names or SQL syntax. When new data is required, they can use Lakeflow Designer to create drag-and-drop pipelines, connect an Excel file, or subscribe to datasets from the Marketplace, all governed under Unity Catalog. Exploration feels natural whether they are asking questions in plain English through AI/BI Genie, visualizing trends in AI/BI Dashboards and BI tools, or leveraging Databricks Assistant for help. For more advanced needs, users can tap into AI Functions, Agent Bricks, Databricks Apps, or Lakebase to build models, automate workflows, and embed proactive insights directly into business processes. From start to finish, every step in their day is designed for speed, simplicity, and trust—so they can spend less time finding and preparing data, and more time using it to drive decisions.


Getting Started: A 3-Month Phased Adoption for Business Users

The journey from data dependency to data empowerment requires a phased approach that demonstrates value while building organizational confidence. Here's how successful organizations structure this transition.

Month 1: Foundation and Quick Wins

Assessment and Role Definition

Start by identifying your "Lisa, Marcus, and Priya" equivalents. Map current data workflows and identify the biggest pain points. Which business users are already creating shadow IT solutions? Which departments submit the most data requests? These early adopters will become your champions.

Establish clear user roles within Unity Catalog—don't try to give everyone access to everything immediately. Create consumer roles for each business unit (sales, marketing, operations) that provide access to relevant, governed datasets without overwhelming users with enterprise-wide data they don't need.
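As a rough sketch of what per-business-unit consumer roles could look like, the snippet below generates SQL `GRANT` statements for one group per unit. The catalog, schema, and group names are hypothetical. The privilege names (`USE CATALOG`, `USE SCHEMA`, `SELECT`) are the ones we understand Unity Catalog to use, but check them against the current documentation before running anything.

```python
from typing import Dict, List

# Hypothetical mapping of business units to schemas and account groups.
# Replace these names with the ones used in your own workspace.
CONSUMER_ROLES: Dict[str, Dict[str, str]] = {
    "sales": {"schema": "main.sales", "group": "sales_consumers"},
    "marketing": {"schema": "main.marketing", "group": "marketing_consumers"},
    "operations": {"schema": "main.operations", "group": "operations_consumers"},
}


def grants_for(unit: str) -> List[str]:
    """Return read-only GRANT statements for one business unit's group."""
    role = CONSUMER_ROLES[unit]
    catalog = role["schema"].split(".")[0]
    group = f"`{role['group']}`"  # backticks quote the principal name
    return [
        f"GRANT USE CATALOG ON CATALOG {catalog} TO {group}",
        f"GRANT USE SCHEMA ON SCHEMA {role['schema']} TO {group}",
        f"GRANT SELECT ON SCHEMA {role['schema']} TO {group}",
    ]


if __name__ == "__main__":
    for unit in CONSUMER_ROLES:
        for statement in grants_for(unit):
            print(statement + ";")
```

Each group receives read access to its own schema only, which matches the advice to avoid granting enterprise-wide access on day one.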

First Data Connections

Focus on one high-impact use case. If your sales team downloads weekly territory reports, start there. Use Lakeflow Connect to automate the Salesforce data ingestion that currently requires manual CSV exports. Set up basic AI/BI dashboards that replace existing static reports.

Define Your Business Vocabulary

Create semantic definitions in Unity Catalog for your most critical metrics that drive decisions like revenue, churn rate, or campaign ROI. Build these as Metric Views so that when Lisa in sales and the CFO both reference "Q3 revenue," they're guaranteed to see the same calculation from the same data. The payoff is immediate: no more "your numbers don't match my numbers" debates.

The goal isn't perfection—it's demonstrating immediate value. When Lisa can refresh her territory performance with a single click instead of spending three hours every Monday morning, word spreads quickly.

Month 2: Expanding Capabilities and Building Confidence

Self-Service Training and Adoption

Now that foundational data flows are working, focus on user empowerment. Train business users on AI/BI Genie for natural language queries. Start with simple questions they already know the answers to, building confidence before tackling complex analysis.

Introduce Lakeflow Designer to power users who want to bring in additional data sources. The visual, drag-and-drop interface helps bridge the gap between business logic and data engineering without requiring coding skills.

Building Governance Confidence

This is when IT teams often get nervous about business users having direct data access. Address concerns proactively by showcasing Unity Catalog's audit capabilities. Show how every query, every data access, every insight generation is tracked and governed. Business users gain self-service capabilities while IT maintains complete visibility and control.

Month 3: Advanced Analytics and Organizational Change

Scaling and Sophistication

By now, early adopters are seeing significant productivity gains. Use this momentum to introduce more advanced capabilities. Deploy Agent Bricks for proactive monitoring: Marcus no longer needs to remember to check for fraud patterns because the system alerts him automatically.
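To make the idea of proactive monitoring concrete, here is a minimal sketch of the kind of rule such an alert might encode: flag any region whose latest weekly claim count exceeds its earlier average by a threshold. It does not use Agent Bricks or any Databricks API. The function name, the 15% threshold (borrowed from Marcus's example), and the input shape are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List

SPIKE_THRESHOLD = 0.15  # 15% over baseline, echoing Marcus's example


def find_spikes(
    weekly_counts: Dict[str, List[int]], threshold: float = SPIKE_THRESHOLD
) -> Dict[str, float]:
    """Return regions whose latest week exceeds the mean of earlier weeks.

    `weekly_counts` maps a region to its weekly claim counts, oldest first.
    The result maps each flagged region to its fractional increase.
    """
    alerts: Dict[str, float] = {}
    for region, counts in weekly_counts.items():
        if len(counts) < 2:
            continue  # not enough history to form a baseline
        baseline = mean(counts[:-1])
        if baseline == 0:
            continue  # avoid dividing by zero on empty history
        change = (counts[-1] - baseline) / baseline
        if change >= threshold:
            alerts[region] = round(change, 3)
    return alerts
```

A scheduled job could run a check like this after each data refresh and notify the claims team only when the returned dictionary is non-empty.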

Implement Databricks Apps for operational workflows. When Priya discovers that email subscribers have higher lifetime value, she can build custom applications that help her team visualize campaign performance, calculate optimal budget allocations, and generate recommendations—all within the governed Databricks environment.

Change Management and Culture Shift

The most important transformation happens in organizational behavior. Business users stop asking "Can someone pull this data for me?" and start asking "What story is this data telling us?" IT teams transition from being report generators to being platform enablers and governance stewards.

5 Best Practices for Business Users to Maximize Databricks

1. Start with Role-Based Access and Shared Semantics

Use Unity Catalog to create targeted data experiences that match how your business actually operates, and layer in UC Metric Views to ensure consistent definitions across those experiences.

Don't overwhelm new users with enterprise-wide data access. Instead, create focused data environments aligned with business functions—sales territories for regional managers, claims data for operations directors, campaign metrics for marketing teams—and anchor them on shared semantic models. When everyone pulls “pipeline coverage” or “loss ratio” from the same definition, debates shift from what the number means to what to do about it.

Quick win: Set up consumer roles in Unity Catalog and pair them with a handful of high-value UC Metric Views (e.g., churn rate, campaign ROI, claims cycle time). This gives your “Lisa, Marcus, and Priya” equivalents immediate access not just to their most critical datasets, but to metrics they can trust are consistent across the company.

2. Meet Users Where They Work

Leverage familiar interfaces to eliminate adoption friction.

The fastest path to adoption isn't teaching new tools—it's enhancing existing workflows. Use Excel connectors for spreadsheet-dependent teams, Power BI integration for dashboard users, and native Databricks interfaces for power users ready to explore. This strategy reduces training time while ensuring data governance across all access points.

Pro tip: Start with live Excel connections to replace those weekly CSV downloads. Once users see the power of real-time data in familiar tools, they'll naturally gravitate toward more advanced capabilities.

3. Embrace Visual, No-Code Automation

Use Lakeflow Designer to democratize data pipeline creation.

Business users understand their data needs better than anyone—they just need the tools to act on that knowledge. Lakeflow Designer enables non-technical users to build and maintain data workflows visually, reducing dependency on IT while ensuring enterprise-grade reliability and governance.

Success pattern: Identify repetitive data preparation tasks (like Priya's multi-channel attribution analysis) and transform them into automated, scheduled workflows that business users can modify as needs evolve.
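The repetitive preparation step in a multi-channel analysis is usually the same: map each platform's export onto one common shape, then compute a comparable metric. The sketch below shows that logic in plain Python, independent of Lakeflow Designer. The channel field names are hypothetical, and ROI is assumed here to mean (revenue - spend) / spend.

```python
from typing import Dict, List

# Hypothetical field names: each platform labels spend and revenue differently.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "email": {"spend": "cost_usd", "revenue": "revenue_usd"},
    "social": {"spend": "amount_spent", "revenue": "conversion_value"},
}


def normalize(channel: str, row: Dict[str, str]) -> Dict[str, float]:
    """Map one exported row onto the common spend/revenue schema."""
    fields = FIELD_MAPS[channel]
    return {
        "spend": float(row[fields["spend"]]),
        "revenue": float(row[fields["revenue"]]),
    }


def roi_by_channel(exports: Dict[str, List[Dict[str, str]]]) -> Dict[str, float]:
    """Total spend and revenue per channel, then ROI = (revenue - spend) / spend."""
    totals: Dict[str, Dict[str, float]] = {}
    for channel, rows in exports.items():
        bucket = totals.setdefault(channel, {"spend": 0.0, "revenue": 0.0})
        for row in rows:
            record = normalize(channel, row)
            bucket["spend"] += record["spend"]
            bucket["revenue"] += record["revenue"]
    return {
        channel: (t["revenue"] - t["spend"]) / t["spend"]
        for channel, t in totals.items()
        if t["spend"] > 0  # skip channels with no spend to avoid dividing by zero
    }
```

Adding a seventh channel then means adding one entry to `FIELD_MAPS` rather than reworking the analysis, which is the kind of change a business user should be able to make as needs evolve.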

4. Ask Questions in Plain English

Leverage AI/BI Genie to transform curiosity into instant insights.

The best analytics platform is one where business logic translates directly into data exploration. Train users to ask natural language questions that match their decision-making process: "Which products drive the highest margin?" "Where should we focus retention efforts?" "What's driving the claims spike in Phoenix?"

Game changer: Combine Genie with interactive dashboards to create conversational analytics workflows—ask a question, get an answer, drill down with follow-up questions, all within the same interface.

5. Build Confidence Through Transparency

Use Unity Catalog's governance features to enable fearless exploration.

Business users often hesitate to explore data independently because they're unsure about data quality, permissions, or compliance implications. Unity Catalog's built-in lineage tracking, audit logs, and data quality metrics provide the transparency needed for confident self-service analytics.

Culture shift: Train business users to view governance features not as restrictions, but as enablers. When they can see data freshness, understand data lineage, and trust access controls, they'll transition from cautious data consumers to confident data explorers.

The Transformation: A Day in Their New Reality

Lisa’s Monday Morning Revolution

Using secure connectors integrated with Unity Catalog, Lisa connects her familiar Excel interface directly to live, governed data in the Lakehouse—eliminating the CSV download-and-wrangle process entirely. Her spreadsheets refresh automatically with territory performance, customer satisfaction, and pipeline data.

When she spots a dip in Northeast satisfaction scores, she uses AI/BI Genie to ask: “Show satisfaction by product feature in the Northeast.” Genie generates the query, runs it against Delta tables, and surfaces the answer instantly.

What once took three days of manual work now happens before her coffee cools.

Marcus’s Real-Time Fraud Fighting

Marcus’s dashboards run on Databricks SQL Serverless, so queries return in seconds. Spotting a spike in auto glass claims, he types into Genie: “Show Phoenix auto glass claims this week by repair shop.”

Behind the scenes, AI/BI Genie translates his natural language request into optimized SQL, automatically joins internal claims data with external repair shop datasets through Lakehouse Federation, and returns comprehensive results in seconds. He finds a fraud ring and stops it the same day.

Priya’s Campaign Optimization in Real Time

Priya's attribution nightmare is now a thing of the past. Through automated data ingestion pipelines built with Databricks capabilities like Lakehouse Federation and Lakeflow Connect, campaign data from all six channels—social media, email, paid search, display advertising, mobile app, and website analytics—flows continuously into unified Delta tables without manual intervention.

Her AI/BI Dashboard shows live results: high traffic but low conversions from social, high ROI from email. She immediately shifts budget from underperforming social campaigns to double down on email marketing. What used to be a two-week analysis followed by campaign adjustments that came too late now happens in real time, optimizing spend while campaigns are still running.

The Future is Frictionless

The future of data in the enterprise is not about making a few people incredibly powerful—it’s about making everyone capable. When business users have intuitive, secure, and fast access to data, they stop waiting for reports and start making decisions in real time.

Databricks is leading this shift by combining the scale and flexibility of the Lakehouse with a user experience designed for everyone. From spreadsheets to AI, the tools are catching up to the way business teams work—and that’s how organizations can unlock the full value of their data.

Ready to Empower Your Business Users?

The shift from data dependency to data empowerment starts with a single step. Here's how to move forward:

- Try it out: Start your free Databricks trial today

- See it in action: Visit our demo center for product tours, videos and hands-on tutorials covering Lakeflow, Unity Catalog, AI/BI, and more

- Learn the basics: Get started with free Academy training

- Download: The Business Intelligence meets AI eBook

Ready to Talk? Contact your Databricks account team to see how Databricks can transform your business users' daily workflows.