Introducing LakehouseIQ: The AI-Powered Engine that Uniquely Understands Your Business

Today, we are thrilled to announce LakehouseIQ, a knowledge engine that learns the unique nuances of your business and data so that people can work with that data in natural language across a wide range of use cases. Any employee in your organization can use LakehouseIQ to search, understand, and query data in natural language. LakehouseIQ uses information about your data, usage patterns, and org chart to understand your business’s jargon and unique data environment, and to give significantly better answers than naive use of Large Language Models (LLMs).

LLMs have, of course, promised to bring language interfaces to data, and every data company is adding an AI assistant, but in reality, many of these solutions fall short on enterprise data. Every enterprise has unique datasets, jargon, and internal knowledge that are required to answer its business questions, and simply calling an LLM trained on the Internet to answer those questions often gives wrong results. Even something as simple as the definition of a “customer” or the fiscal year varies across companies.

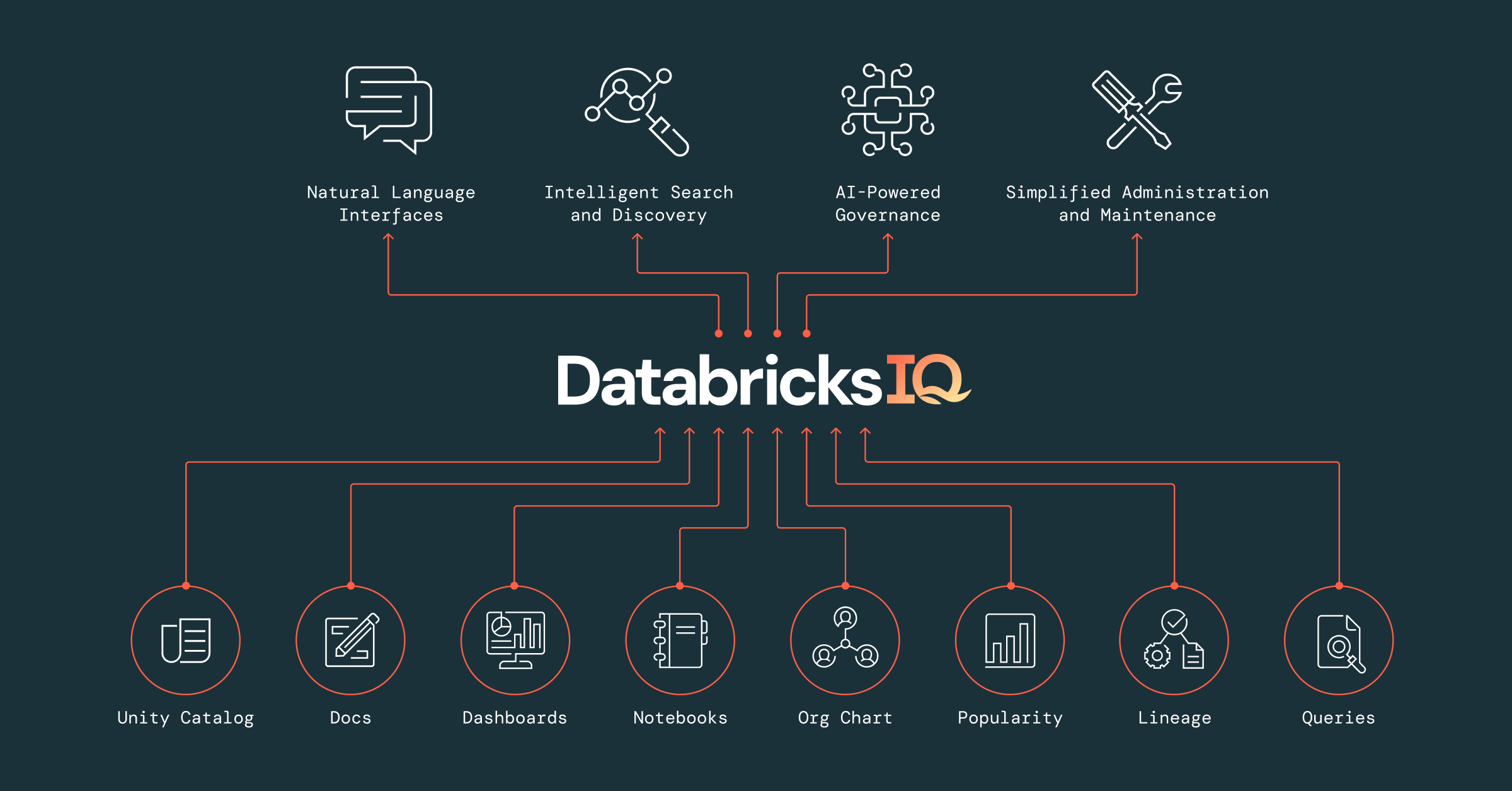

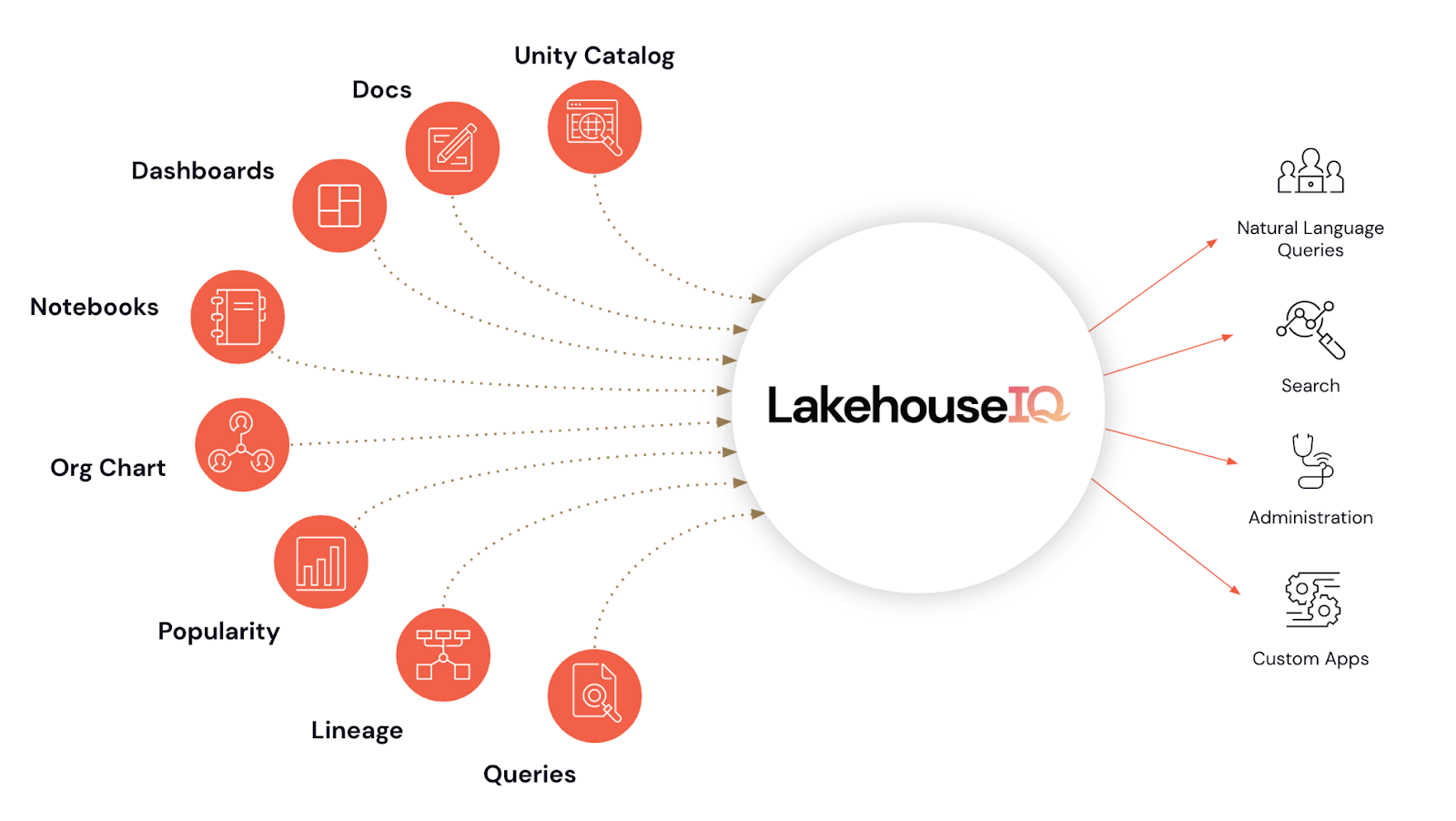

LakehouseIQ is a first-of-its-kind knowledge engine that directly solves this problem by automatically learning about business and data concepts in your enterprise. It uses signals from across the Databricks Lakehouse platform, including Unity Catalog, dashboards, notebooks, data pipelines, and docs, leveraging the unique end-to-end nature of the Databricks platform to see how data is used in practice. This lets LakehouseIQ build highly accurate specialized models for your enterprise.

We are using LakehouseIQ to power a spectrum of new natural language interfaces throughout Databricks, from queries to troubleshooting. And even more importantly, we are exposing its functionality through APIs to let customers build their own AI apps that use this automatically trained knowledge. We believe that this kind of knowledge engine for the enterprise will become a vital component of the next-generation software stack.

Natural Language Queries

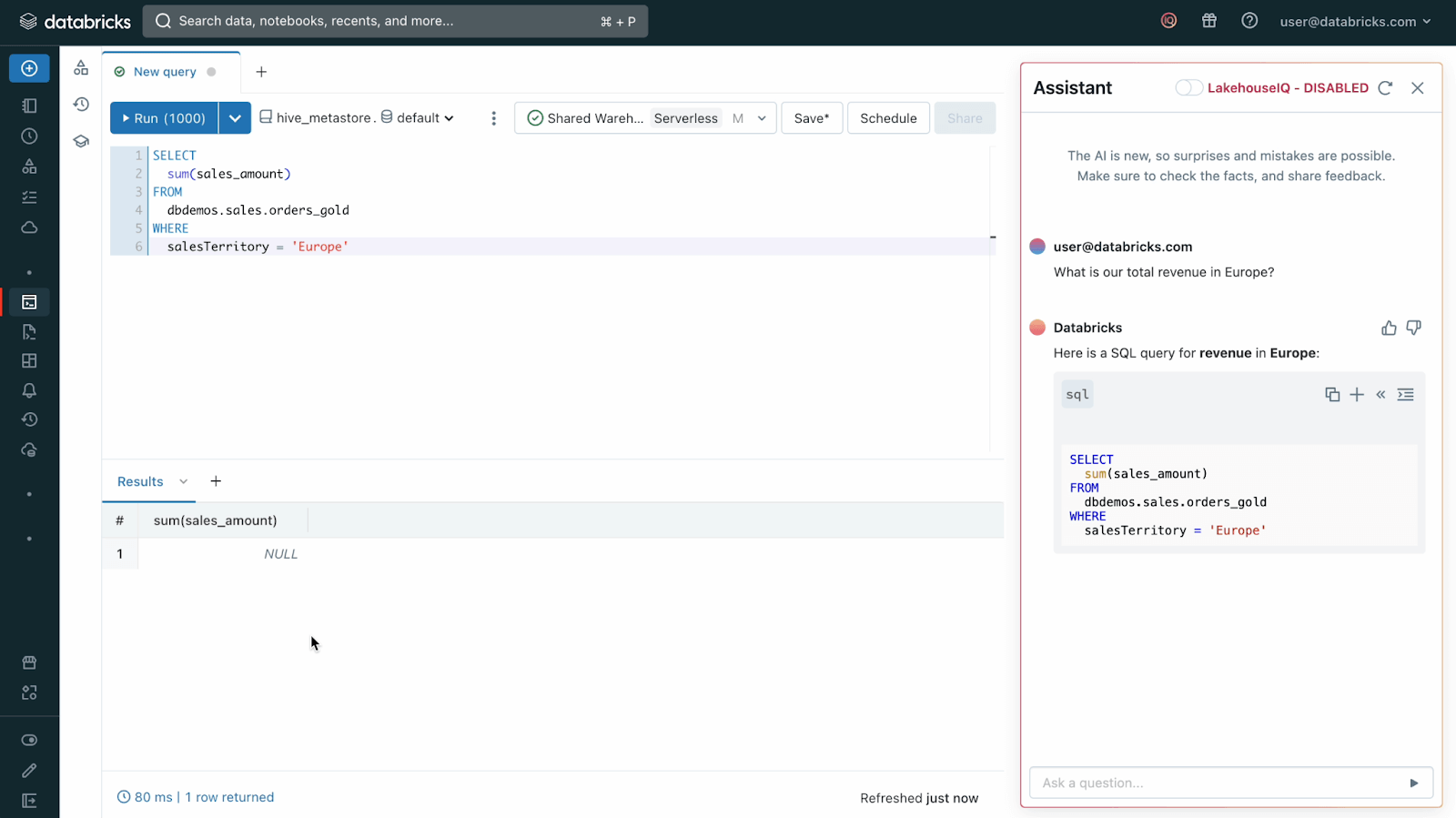

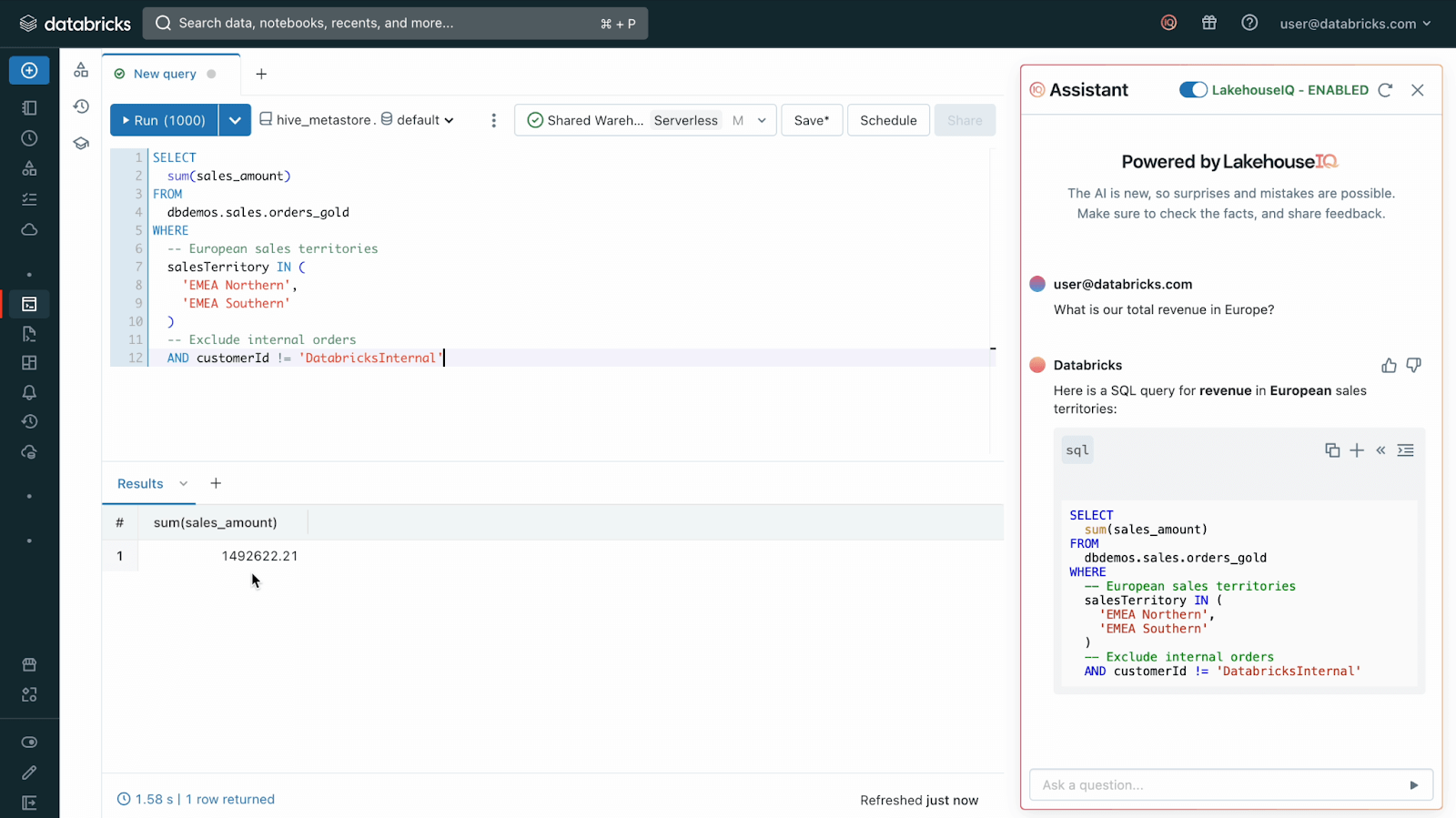

The first AI surface most Databricks users will see is the new Assistant in our SQL Editor and Notebooks, which can write queries, explain them, and answer questions. It is already saving our users hundreds of hours. The Assistant relies heavily on LakehouseIQ to find and understand the right data for each activity and give accurate answers. Without a knowledge engine like LakehouseIQ, LLMs often cannot know how data is used in your enterprise. For example, in the query below, our Assistant with LakehouseIQ turned off searches for a sales territory called “Europe” and finds no results, because it does not know that the company actually has two European territories, North and South. The LakehouseIQ version not only knows this information, but also automatically adds a filter to exclude internal usage, which it learned from other queries, dashboards, and notebooks that used this dataset.

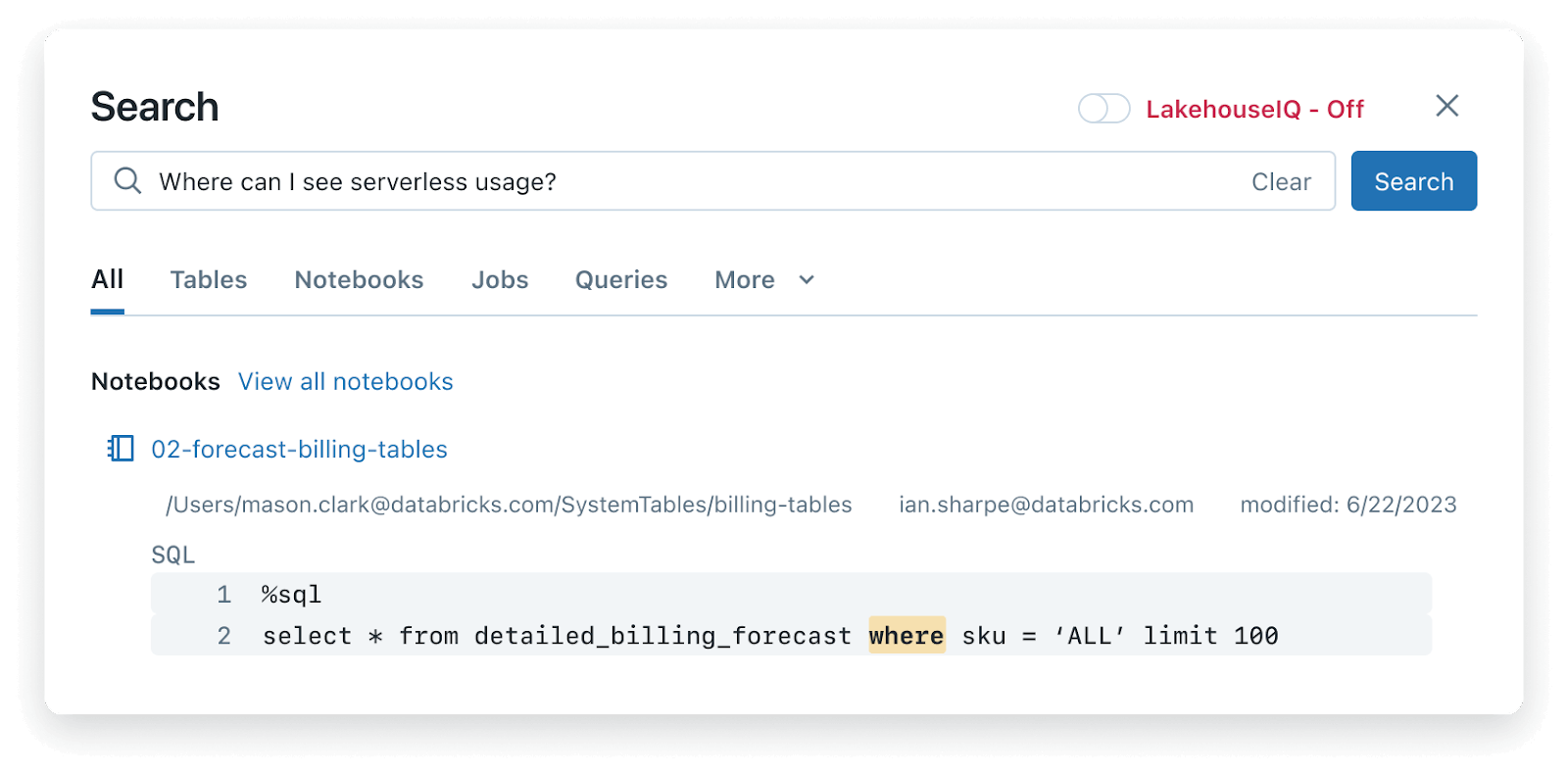

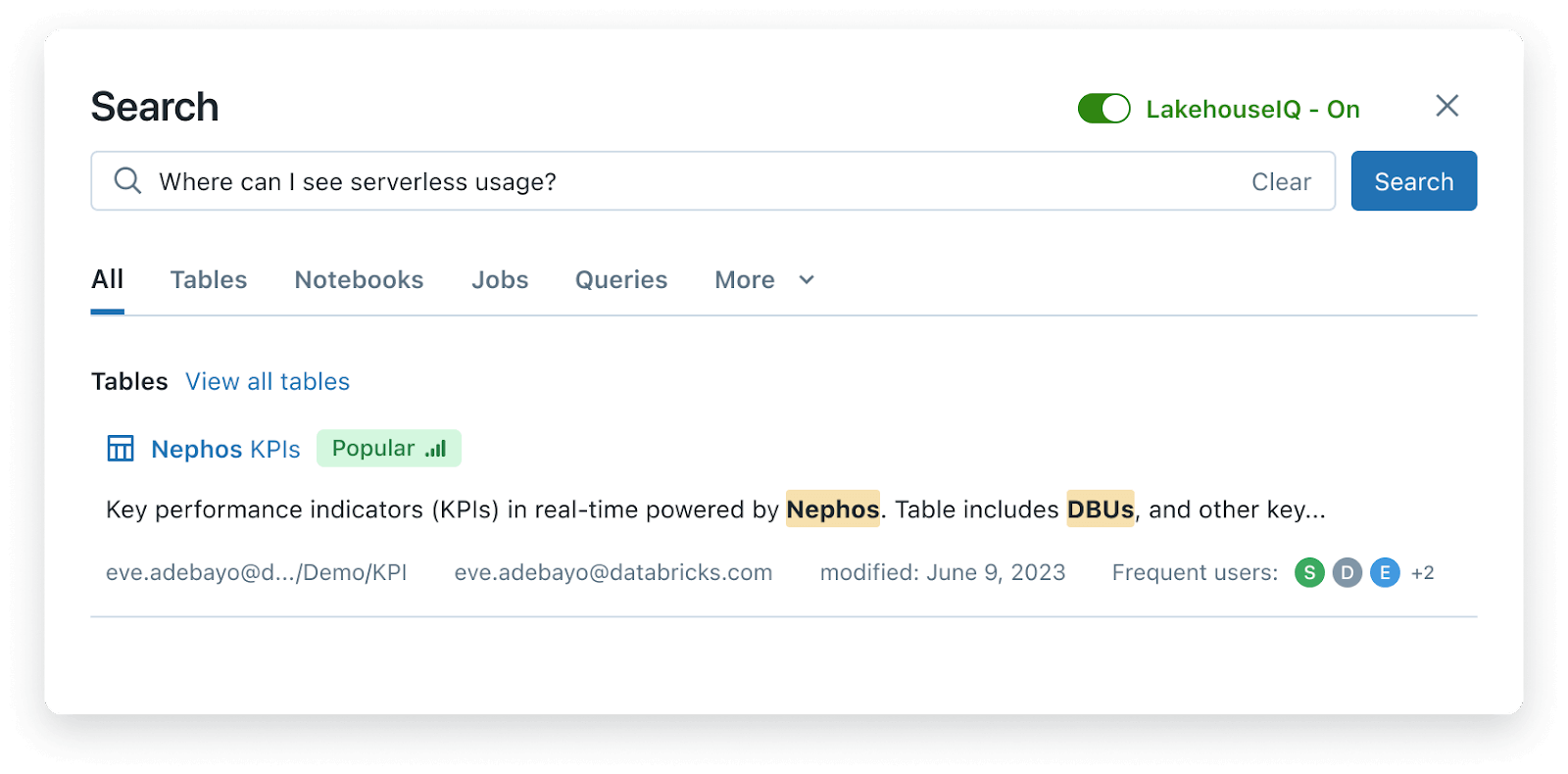
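To make the difference between the two queries concrete, here is a minimal, self-contained sketch using Python's built-in `sqlite3` module. It is an illustration only, not the SQL the Assistant generates: the `sales` table, its columns, the territory values, and the `is_internal` flag are all hypothetical names chosen for this example.

```python
import sqlite3

# Hypothetical schema and data, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (territory TEXT, amount REAL, is_internal INTEGER)"
)
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("Europe North", 120.0, 0),
        ("Europe South", 80.0, 0),
        ("Europe North", 999.0, 1),  # internal usage that should be excluded
        ("Americas", 200.0, 0),
    ],
)

# Query written without business context: no territory is literally "Europe".
naive_sql = "SELECT SUM(amount) FROM sales WHERE territory = 'Europe'"

# Query written with business context: both European territories are
# included, and internal usage is filtered out.
informed_sql = """
    SELECT SUM(amount) FROM sales
    WHERE territory IN ('Europe North', 'Europe South')
      AND is_internal = 0
"""

naive_total = conn.execute(naive_sql).fetchone()[0]
informed_total = conn.execute(informed_sql).fetchone()[0]

print("naive:", naive_total)
print("informed:", informed_total)
```

The naive query matches no rows, so its sum comes back empty, while the context-aware query returns the total for both European territories with internal rows excluded.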

Search with LakehouseIQ

LakehouseIQ also significantly enhances Databricks’ in-product Search. Our new search engine doesn't just find data; it interprets, aligns, and presents it in an actionable, contextual format, helping all users get started with their data faster. In this example on some of our internal data, LakehouseIQ understands that at Databricks, the codename for serverless is “Nephos”, and that “DBUs” are a measure of usage, and so it finds the right result. It also exposes signals on popularity, freshness, and frequent users for each table.
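One simple way to picture jargon-aware search is query expansion against a glossary. The sketch below is a toy illustration under stated assumptions, not how LakehouseIQ works internally: the glossary is hand-written here (the post describes these associations as learned automatically), and the table names, descriptions, and the `expand_terms` and `search` helpers are hypothetical.

```python
# Hypothetical glossary mapping plain terms to internal jargon.
GLOSSARY = {
    "serverless": ["nephos"],
    "usage": ["dbus"],
}

# Hypothetical catalog: table name -> short description.
TABLES = {
    "nephos_dbus_daily": "daily nephos dbus by workspace",
    "hr_headcount": "employee headcount by team",
}


def expand_terms(query, glossary):
    """Return the query's words plus any jargon the glossary maps them to."""
    terms = query.lower().split()
    expanded = set(terms)
    for term in terms:
        expanded.update(glossary.get(term, []))
    return expanded


def search(query, tables, glossary):
    """Rank tables by how many expanded query terms appear in the description."""
    terms = expand_terms(query, glossary)
    scored = []
    for name, description in tables.items():
        score = len(terms & set(description.lower().split()))
        if score:
            scored.append((score, name))
    # Highest score first; ties broken alphabetically for stable output.
    return [name for score, name in sorted(scored, key=lambda p: (-p[0], p[1]))]


with_glossary = search("serverless usage", TABLES, GLOSSARY)
without_glossary = search("serverless usage", TABLES, {})
print(with_glossary, without_glossary)
```

With the glossary, the plain-language query finds the table described in internal jargon; without it, the same query finds nothing.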

Management and Troubleshooting

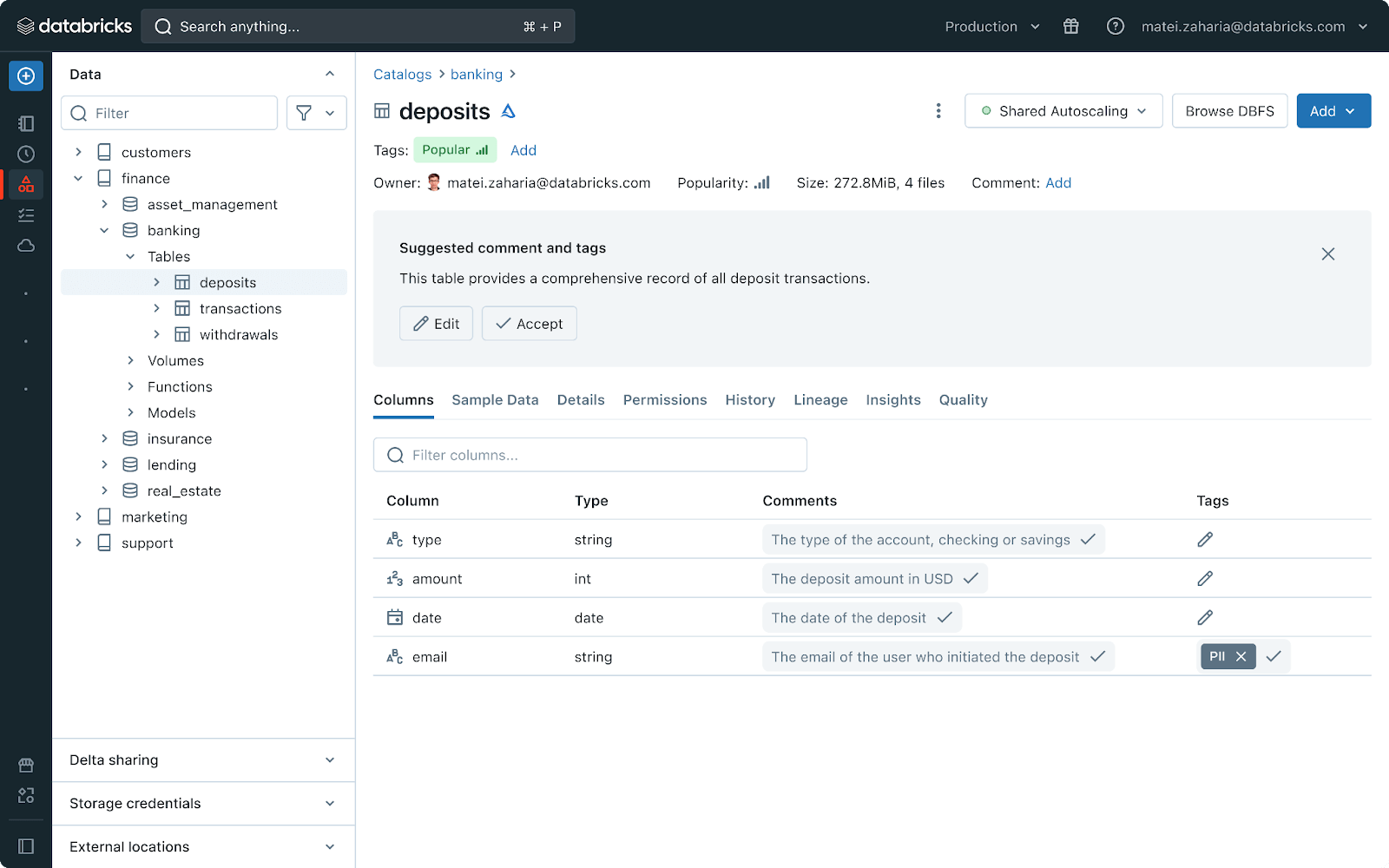

We are also integrating LakehouseIQ into many of the management workflows in the Lakehouse. For example, providing meaningful comments on datasets gets easier with automatic suggestions – and the more documentation you add, the better LakehouseIQ will be able to use that data. LakehouseIQ can also understand and debug jobs, data pipelines, and Spark and SQL queries (e.g., tell you that a dataset may be incomplete because an upstream job is failing), helping users figure out when something is wrong.

Governance and Security

LakehouseIQ is built on and governed by Unity Catalog, Databricks’ flagship solution for security and governance across data and AI. When using LakehouseIQ, your users will only see results for datasets they have access to in Unity Catalog, so you can open data analysis to more users without worrying about new security headaches. Coupled with other functionality we are announcing today, including AI-based automatic data classification, monitoring, and Lakehouse Federation to external systems, LakehouseIQ helps democratize all data in your enterprise.
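The access rule described above, where users only see results for datasets they can already access, can be sketched as a filter applied to search hits before they are returned. This is a simplified illustration, not the Unity Catalog API: the `SearchHit` type, the `grants` mapping, and the `visible_hits` helper are hypothetical stand-ins for whatever permission checks the platform actually performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SearchHit:
    table: str
    score: float


def visible_hits(hits, user, grants):
    """Keep only hits for tables the user has been granted access to.

    `grants` is a hypothetical mapping of user -> set of readable tables.
    Unknown users have no grants and therefore see no results.
    """
    allowed = grants.get(user, set())
    return [hit for hit in hits if hit.table in allowed]


hits = [
    SearchHit("sales.orders", 0.9),
    SearchHit("hr.salaries", 0.8),
]
grants = {"alice": {"sales.orders"}}

alice_results = visible_hits(hits, "alice", grants)
guest_results = visible_hits(hits, "guest", grants)
print(alice_results, guest_results)
```

Filtering before results are shown means a user never learns that a restricted dataset matched their question.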

Next Steps

We believe that LakehouseIQ marks the dawn of a new era of data democratization. By combining LakehouseIQ's language capabilities with its deep understanding of your business context, Databricks offers insights into any source of data in a conversational format, changing the way we interact with data. We're not just making data accessible; we're making it intelligible, actionable, and much more valuable. We will be rolling out various LakehouseIQ features throughout the year, and are excited to get your feedback.

Don't miss the opportunity to witness LakehouseIQ in action at our Data + AI Summit.
