Managed MLflow

Build better models and generative AI apps

What is Managed MLflow?

Managed MLflow extends the functionality of MLflow, an open source platform developed by Databricks for building better models and generative AI apps, with a focus on enterprise reliability, security, and scalability. The latest MLflow update introduces new GenAI and LLMOps capabilities that improve how you manage and deploy large language models (LLMs). This expanded LLM support comes from new integrations with the industry-standard LLM tools OpenAI and Hugging Face Transformers, as well as the MLflow Deployments Server. In addition, MLflow's integration with LLM frameworks (e.g., LangChain) simplifies model development for generative AI applications across many use cases, including chatbots, document summarization, text classification, sentiment analysis, and more.

Benefits

Model development

Improve and accelerate machine learning lifecycle management with a standardized framework for production-ready models. Managed MLflow Recipes enable seamless bootstrapping of ML projects, rapid iteration, and model deployment at scale. Easily build generative AI apps such as chatbots, document summarization, sentiment analysis, and classification with MLflow's LLM offerings, which integrate seamlessly with LangChain, Hugging Face, and OpenAI.

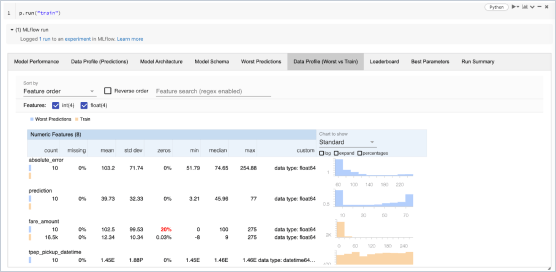

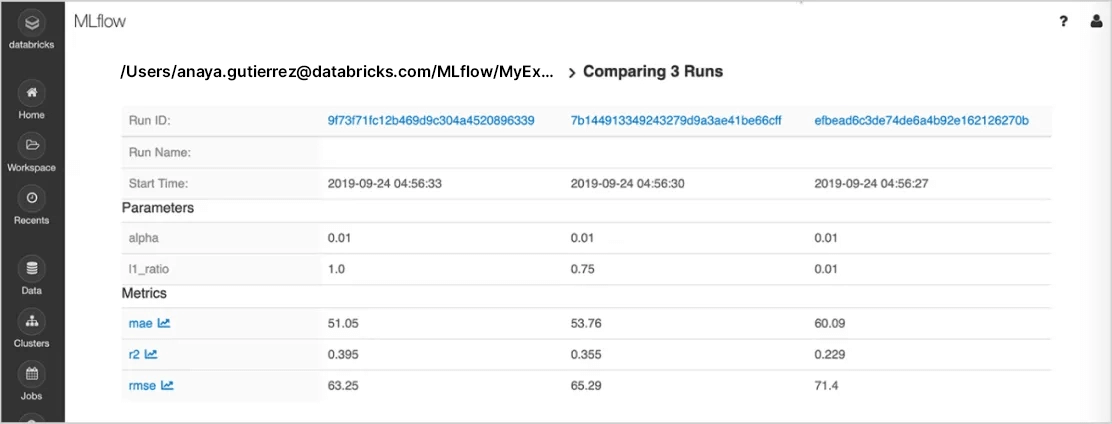

Experiment tracking

Run experiments with any ML library, framework, or language, and automatically keep track of the parameters, metrics, code, and models from each experiment. With MLflow on Databricks, you can securely share, manage, and compare experiments together with their artifacts and code versions, thanks to built-in integrations with the Databricks workspace and notebooks. You can also evaluate the results of GenAI experiments and improve their quality with MLflow's evaluation capabilities.

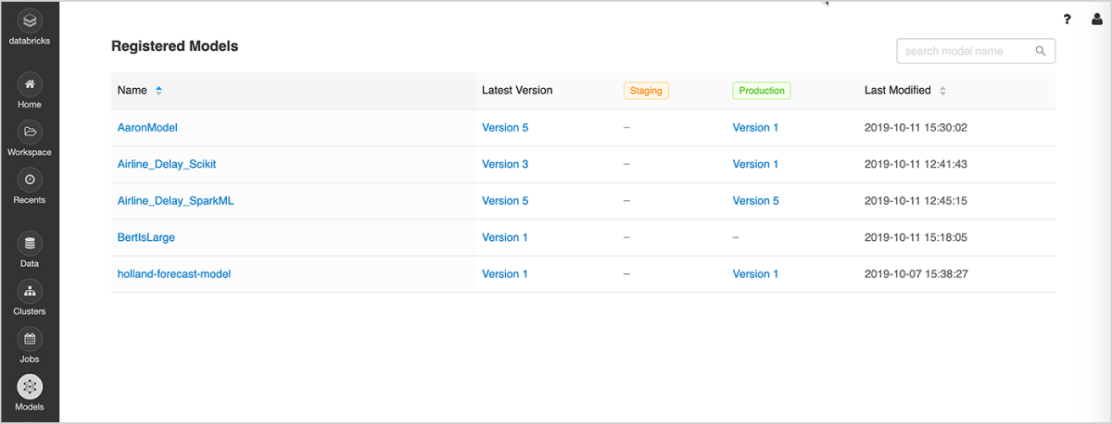

Model management

Use a central place to discover and share ML models, collaborate on moving them from experimentation to online testing and production, integrate them with approval and governance workflows and CI/CD pipelines, and monitor ML deployments and their performance. The MLflow Model Registry makes it easier to share expertise and knowledge and helps you stay in control.

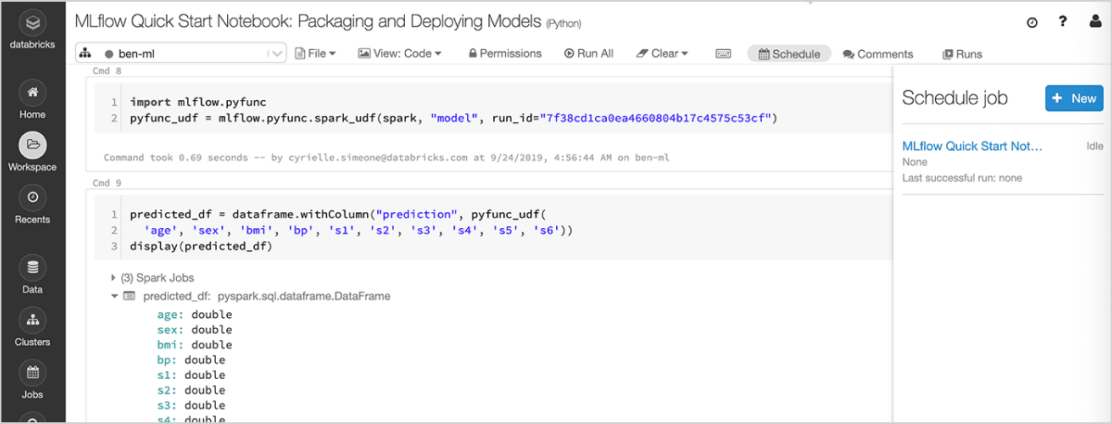

Deploy models

Quickly deploy production models for batch inference on Apache Spark™ or as REST APIs, using built-in integrations with Docker containers, Azure ML, or Amazon SageMaker. With Managed MLflow on Databricks, you can operationalize and monitor production models with the Databricks Jobs scheduler and automatically managed clusters that scale to your business needs.

The latest MLflow upgrades enable seamless deployment of packaged GenAI applications. You can now deploy your chatbots and other GenAI applications, such as document summarization, sentiment analysis, and classification, at scale using Databricks Model Serving.

Features


Check out our product news for Azure Databricks and AWS to learn more about our latest features.

Comparing MLflow offerings

| Feature | Open Source MLflow | Managed MLflow on Databricks |
|---|---|---|
| Experiment tracking | | |
| MLflow Tracking API | | |
| MLflow Tracking Server | Self-hosted | Fully managed |
| Notebooks integration | | |
| Workflow integration | | |
| Reproducible projects | | |
| MLflow Projects | | |
| Git and Conda integration | | |
| Scalable cloud/clusters for project runs | | |
| Model management | | |
| MLflow Model Registry | | |
| Model versioning | | |
| ACL-based stage transitions | | |
| CI/CD workflow integrations | | |
| Flexible deployment | | |
| Built-in batch inference | | |
| MLflow Models | | |
| Built-in streaming analytics | | |
| Security and management | | |
| High availability | | |
| Automatic updates | | |
| Role-based access control | | |

Resources

Blogs

Videos

Tutorials

Webinars

Frequently Asked Questions
