Are you ready to scale your Data and AI initiatives? How will you scale your security?

This is the third post in a series about Databricks security. My colleagues David Cook (our CISO) and David Meyer (SVP of Products) laid out Databricks' approach to security in blog #1 and blog #2. In this post, I discuss deploying and operating Databricks at scale while minimizing human error.

Democratize Data! What about Security?

As organizations push to leverage their data to make more intelligent decisions, a core requirement is to “Democratize data”. In other words, open up access to previously siloed and restricted data to broader parts of the organization. This often enables a new set of insights that unlock additional sources of revenue and can transform enterprises.

However, this new model of expanded access causes considerable trepidation across organizations, especially in the C-suite. As executives hear reports of data breaches at enterprises across the spectrum, they look for ways to balance security and governance with access. In particular, access to data from a myriad of services and users is controlled through increasingly complex policies and configurations. If these are not set up correctly, the complexity increases both the possibility of human error and the surface area for attacks. Earlier this year, IBM published a study showing a dramatic increase in data breaches resulting from cloud service misconfiguration, due in great part to human error.

The Databricks offering is designed with the specific goal of enabling this balance: maximize end-user productivity and access without sacrificing security and governance compliance, and in many cases while strengthening them. As part of this, we’ve focused on automating as many operations as possible. Additionally, we've established "separation of concerns" principles to minimize both the opportunities for human error and the impact of any resulting misconfigurations.


To provide more detail, here are some considerations we talk through with our customers:

Deploy Securely

The first step is ensuring no unnecessary security vulnerabilities are introduced during initial deployment:

- Keeping your infrastructure in your account - Although Databricks provides the benefits of a fully managed SaaS service, all clusters are created and torn down inside of a customer’s Azure/AWS account, and data stays where it is. Instead of creating a whole new set of configurations in a different environment, which increases the risk of making a dangerous error, all existing security configurations and monitoring tools can continue to be leveraged.

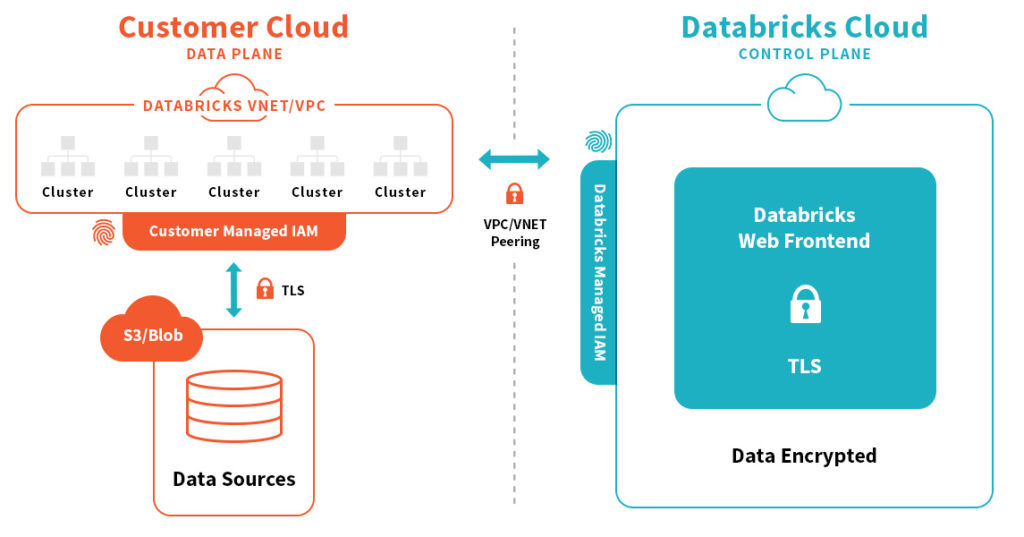

- Isolated networking to reduce blast radius - Databricks creates a dedicated VPC/VNET in the customer’s account that contains only Databricks infrastructure. This ensures that Databricks policies and controls have no access to other production infrastructure in the customer’s account. Additionally, all communication with Databricks’ control infrastructure goes through direct links, which also eliminates the need for public IPs. As a result, this traffic never traverses the public internet, and it is encrypted with mutual TLS v1.2. Finally, multiple security groups are used so that if Databricks services ever need to connect to other customer VPC/VNETs (e.g., one containing another production data source), there is no risk of exposing those services to the outside world.

Isolated networking and security groups

- Leverage scoped-down permissions - To manage customer infrastructure, Databricks uses only tokens and roles (rather than keys) to eliminate the risk of leaked credentials. Additionally, these roles have extremely limited permissions (e.g., explicitly excluding access to any customer data), enabling them only to set up the Databricks infrastructure.
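
To make the idea of scoped-down roles concrete, here is a minimal sketch of a check that a role policy grants no data-access actions. It assumes an AWS-style JSON policy layout; the `FORBIDDEN_ACTIONS` set and the `risky_actions` helper are hypothetical names chosen for illustration and do not describe the actual role Databricks uses.

```python
from typing import List

# Hypothetical deny-list: actions that would let a role read or write
# customer data. A real review would use a list agreed with your security team.
FORBIDDEN_ACTIONS = {"s3:GetObject", "s3:PutObject", "s3:*", "*"}


def risky_actions(policy: dict) -> List[str]:
    """Return every allowed action in the policy that is on the deny-list."""
    found = []
    for statement in policy.get("Statement", []):
        # Only "Allow" statements grant permissions.
        if statement.get("Effect") != "Allow":
            continue
        actions = statement.get("Action", [])
        # A policy may give a single action as a string or several as a list.
        if isinstance(actions, str):
            actions = [actions]
        found.extend(a for a in actions if a in FORBIDDEN_ACTIONS)
    return found
```

A check like this could run in a deployment pipeline so that a role that drifts beyond infrastructure setup is flagged before it is applied.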

Operate Securely as you Scale Users

As important as the initial deployment are ongoing operations and maintenance:

- Automate and minimize human intervention - Databricks automates general SaaS monitoring and updates, to eliminate human intervention. In the rare cases where human access is needed, it is only provided through a time-bound token-based model. Access is only available to a small subset of core Databricks operators, and all activity is logged. Read-only audit and access logs are delivered directly to customers where they can be fed into existing security monitoring infrastructure.

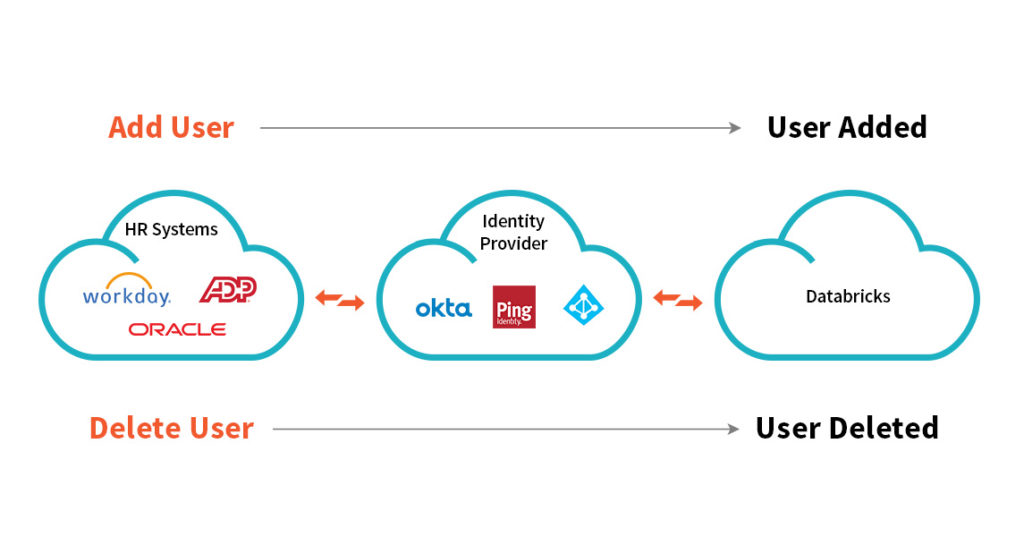

- Integrations with popular security tools - Integration with SSO identity providers (such as Azure Active Directory, Okta, and OneLogin) and support for SCIM allow you to easily manage and secure users. New user setup is automated, as is the removal of access during sensitive times, such as when employees leave the business.

- Strict access controls and network-based isolation - After deployment, the access rights provided to the Databricks web application and identity roles can be further restricted to the bare minimum needed for steady-state operations, eliminating any broader permissions needed for deployment. Any exceptions are fully auditable and subject to a formal approval process by the customer.
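
As an illustration of automated access removal through SCIM, the sketch below builds the request body that the SCIM 2.0 standard (RFC 7644) defines for deactivating a user with a PATCH request. The function name `scim_deactivate_payload` is hypothetical, and the endpoint URL and authentication are deliberately omitted because they depend on your identity provider and workspace.

```python
import json


def scim_deactivate_payload() -> str:
    """Build a SCIM 2.0 PatchOp body that marks a user as inactive."""
    payload = {
        # Schema URN for PATCH requests, as defined in RFC 7644.
        "schemas": ["urn:ietf:params:scim:api:messages:2.0:PatchOp"],
        "Operations": [
            # "active" is a standard attribute of the SCIM User resource.
            {"op": "replace", "path": "active", "value": False}
        ],
    }
    return json.dumps(payload)
```

An identity provider would typically send a body like this to the user's SCIM resource when an employee is offboarded, so no one has to remember to revoke access by hand.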

Govern Data with Confidence

Finally, it is critical to ensure data is always protected:

- Physical and logical data access control - Databricks provides the ability to leverage ACLs to restrict which users can physically access underlying data files, while also providing fine-grained access control (e.g., row- and column-based) to logical tables that have been created. For example, a Social Security number column can be hidden while allowing access to the rest of the table.

- Effortlessly secure end-to-end data and workflow - Databricks’ rich ecosystem enables seamless integrations and secure, encrypted, authenticated communication with popular enterprise big data technologies such as Data Lakes, Data Warehouses, JDBC/ODBC, and BI Tools.

- Audit Logs - Databricks provides comprehensive end-to-end audit logs of the activities users perform on the platform, allowing enterprises to monitor detailed usage patterns of Databricks as the business requires.
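
The column-level example above (hiding a Social Security number column) can be sketched in a few lines of plain Python. This is only a conceptual model and not how Databricks enforces table access control; `COLUMN_POLICY`, `visible_row`, and the group names are hypothetical.

```python
from typing import Dict, Set

# Hypothetical policy: restricted column name -> groups allowed to read it.
# Columns that are not listed are visible to everyone with table access.
COLUMN_POLICY: Dict[str, Set[str]] = {"ssn": {"hr_admins"}}


def visible_row(row: dict, user_groups: Set[str]) -> dict:
    """Return a copy of the row without columns the user may not see."""
    return {
        column: value
        for column, value in row.items()
        # Keep the column if it is unrestricted or the user is in an allowed group.
        if column not in COLUMN_POLICY or COLUMN_POLICY[column] & user_groups
    }


row = {"name": "Ada", "ssn": "000-00-0000", "dept": "R&D"}
analyst_view = visible_row(row, {"analysts"})  # the "ssn" column is dropped
hr_view = visible_row(row, {"hr_admins"})      # all columns are kept
```

The point of the sketch is that the policy lives in one place and is applied on every read, rather than relying on each user to query only the columns they are entitled to.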

Conclusion

At Databricks, we know how complex and time-consuming it is to scale data systems and access while minimizing human error and managing risk. That’s why we’ve worked to make it virtually effortless to deploy and manage our platform while maintaining data governance and access at scale.

Try It!

- Call us to find out how Databricks can improve your security posture.

- Learn more by downloading our security e-book Protecting Enterprise Data on Apache Spark.
