Accelerating Somatic Variant Calling with the Databricks TNSeq Pipeline

Genetic analyses are a critical tool in revolutionizing how we treat cancer. By understanding the mutations present in tumor cells, researchers can gain clues that lead to drug targets and eventually new therapies. At the same time, genetic characterizations of individual tumors enables physicians to tailor treatments to individual patients and improve outcomes while reducing side effects.

However, the full promise of genetic data for cancer therapeutic development has not been realized. There are a lack of robust, scalable, or standardized approaches to process and analyze cancer genome sequencing data. In a typical research setting, each scientist picks their own set of algorithms and stitches them together with custom glue code. This creates analytics workflows that are hard to manage, scale or reproduce. Moving from ad hoc analytics to robust, reproducible and well engineered genomics data pipelines is critical to take cancer research and treatment to the next level.

Identifying Genetic Variants Responsible for Cancer

The first step in this process is identifying the genetic variations in tumor cells compared to the non-tumor cells in an individual. This process is called somatic variant calling.

In industry, most researchers have standardized these data analytics workflows on the GATK's best practice pipeline for somatic variant calling, known as MuTect2. While MuTect2 offers high accuracy, it can take hours to days to run, and the mutations are output in a textual file format that is cumbersome for data scientists to analyze. Combining the mutation calls with clinical or imaging data requires additional complex integrations of distinct systems, slowing down the process of generating clinical reports or population-scale analyses of cancer mutations.

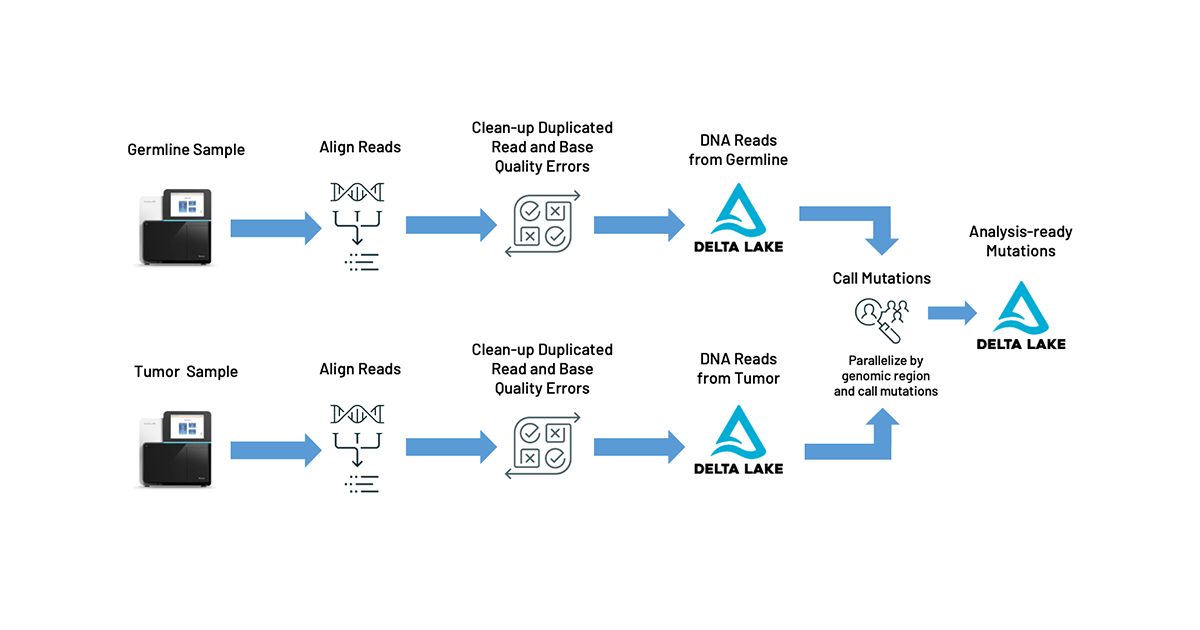

Fortunately, there’s a path forward with Databricks Unified Data Analytics Platform for Genomics. More specifically, to address the problems outlined above, we are excited to announce our TNSeq pipeline (AWS | Azure) which builds on top of our DNASeq pipeline. The TNSeq pipeline enables oncology teams to build and scale rapid data analysis pipelines that flow directly into downstream tertiary analyses for critical cancer research. Initially, the sequenced DNA from the tumor and germline samples are processed equivalently to our DNASeq pipeline: the reads are mapped to a reference genome and then common sequencing errors (like PCR duplicates or biased base quality scores) are corrected. Once aligned and preprocessed, somatic mutations are identified by pooling the tumor and germline reads together, and looking for genomic locations where different alleles are seen between the tumor and germline data. Ultimately, our pipeline reduces pipeline latency by 6x and total cost by 20%, while producing equivalent somatic variant calls. We output our mutation calls directly into Delta Lake tables, formatted using the Glow schemas. This allows for pipelines to feed directly into reports, annotation pipelines (AWS | Azure), and statistical genetics analyses.

By building on top of the Databricks Unified Data Analytics Platform for Genomics, our open source project Glow, and existing single node tools, our pipeline allows researchers and clinicians to seamlessly blend genomic data engineering and data science pipelines, while reducing pipeline latency, computational cost, and infrastructure complexity. By unifying cancer genomic data with both machine learning techniques and clinical/imaging data, Databricks customers are identifying genes that drive cancer progression, developing more sensitive algorithms for the early detection of cancer, and building the next generation of clinical reports that blend genomics with imaging and other patient data to provide clinicians with a full portrait of the patient’s cancer status.

Accelerating Somatic Variant Calling in the Genomics Runtime

Our pipeline uses Apache Spark™ and Glow to parallelize BWA-MEM for alignment and GATK’s MuTect2 tool for variant calling. Alignment is embarrassingly parallel over reads, so we can map each read fragment individually for the tumor and normal samples. We then use Spark to group all reads that are relevant for a given region of the genome into the same partition, duplicating reads across partitions as necessary. This technique allows our pipeline to produce concordant results with the single node MuTect2 tool while still achieving parallelism.

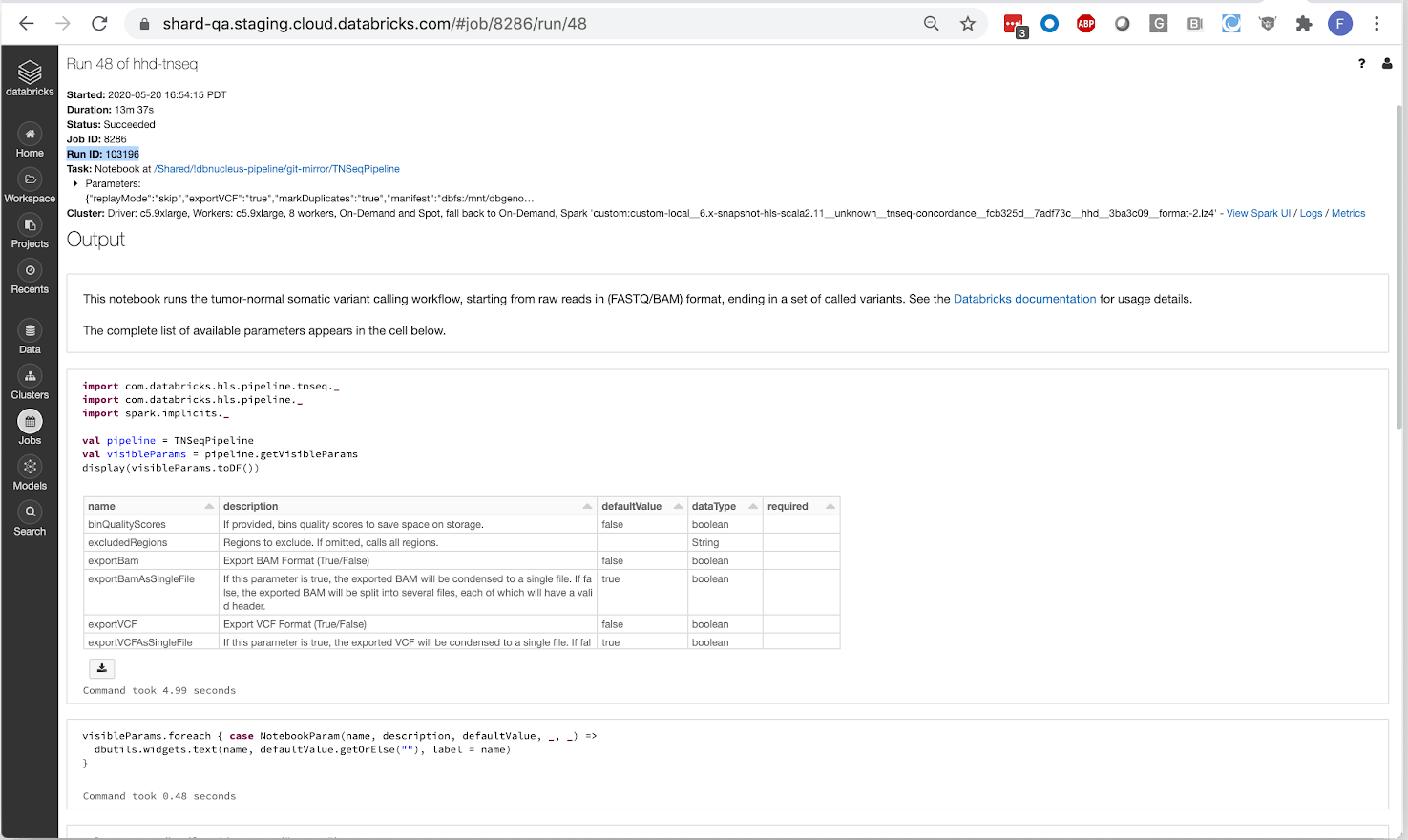

The pipeline operates as a Databricks job, so users can trigger new runs using the UI or programmatically with the Databricks CLI. In addition to producing standard output files like a BAM for aligned reads and a VCF for called variants, our pipeline writes results to a Delta Lake table. This format simplifies organization of thousands of cancer samples and allows for scalable analysis with Glow using the built-in regression tests or by integrating with single node tools, such as Samtools, using the Pipe Transformer.

Big Book of MLOps

Evaluating our Somatic Variant Calling Pipeline

We benchmarked the accuracy and performance of our pipeline using whole exome sequencing data from the Texas Cancer Research Biobank. The normal sample was sequenced at an average coverage of 95x resulting in 6GB of bzip compressed FASTQ files, while the tumor sample was sequenced at an average coverage of 99x for 6.4GB of bzip compressed FASTQ files.

Accuracy

We compared the end-to-end results of our pipeline against command line BWA-MEM and MuTect2. We used som.py to produce concordance metrics.

| Variant type | Precision | Recall |

| SNV | 0.9998 | 0.9994 |

| indel | 0.9963 | 0.9968 |

| all | 0.9977 | 0.9978 |

Since somatic variant calling tools like MuTect2 emphasize sensitivity to variations that may be supported by few reads, these tools are highly sensitive to slight variations in the alignments produced by BWA-MEM. However, BWA-MEM uses the index of a read within a batch to choose between equally good alignments. Because the index is not stable in a distributed setting, our distributed version of BWA-MEM can report different, although equally likely, alignments than the command line version.

To verify that all discrepancies between the two variant callsets derive from randomness during alignment, we also ran command line MuTect2 against the aligned BAM files produced by our pipeline. These results were identical to the variant calls produced by the Databricks pipeline.

| Variant type | Precision | Recall |

| SNV | 1 | 1 |

| indel | 1 | 1 |

| all | 1 | 1 |

Alignments produced by our pipeline differ from command line BWA-MEM because of nondeterminism in the underlying tool. Forcing our pipeline to run alignment for all reads in a single Spark partition produced identical alignments to command line BWA-MEM.

Performance

To evaluate the efficiency of our pipeline against standalone command line tools, we compared the runtime on one c5.9xlarge instance against command line MuTect2 and BWA-MEM. Since there is limited parallelism available in the single-node MuTect2 pipeline, we ran it on an i3.2xlarge instance with 8 cores. This analysis excludes cluster initialization time, which is amortized across all samples run in a single batch.

| Pipeline | Runtime (minutes) | Cores | Core-hours |

| Command line | 259.2 | 8 | 32.4 |

| Databricks | 54.27 | 36 | 32.56 |

The speedup primarily derives from the limited multithreading capabilities in command line MuTect2. By efficiently utilizing cluster resources, our pipeline matches the per-core performance of single node tools while significantly reducing overall runtime.

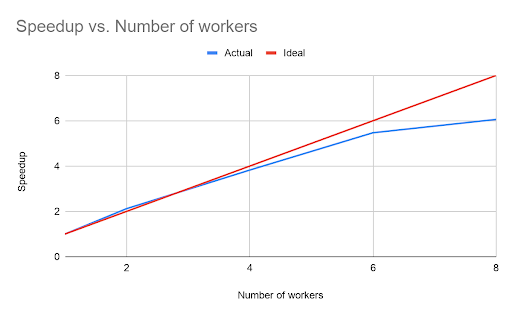

However, unlike the open-source GATK somatic variant calling pipeline, the Databricks TNSeq pipeline is designed to scale across multiple nodes. To demonstrate the scalability of our pipeline, we ran an experiment where we added additional worker nodes. In all experiments, each worker node used an AWS c5.9xlarge instance with 36 cores and 72GB of memory.

The runtime scaled nearly linearly with the number of workers in the cluster, although beyond six workers the benefit begins to decrease. The point of diminishing returns depends on the total data size. For this dataset, the total runtime of the pipeline with six workers was only 9.95 minutes.

Try it!

The TNSeq pipeline offers industry-leading latency and allows mutational data to flow directly from a pipeline into advanced ML and population-scale analyses. This pipeline is available in the Databricks Genomics Runtime (Azure | AWS) and is generally available for all Databricks users. Learn more about our genomics solutions in the Databricks Unified Analytics Platform for Genomics and try out a preview today.