Time Traveling with Delta Lake: A Retrospective of the Last Year

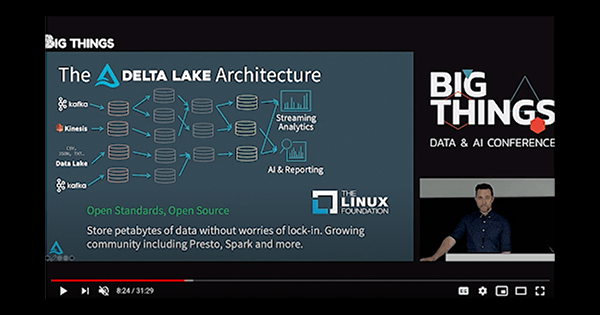

<p>Try out <a href="https://github.com/delta-io/delta/releases/tag/v0.7.0" rel="noopener noreferrer" target="_blank">Delta Lake 0.7.0</a> with Spark 3.0 today!</p><p>It has been a little more than a year since Delta Lake became an <a href="https://www.databricks.com/blog/2019/04/24/open-sourcing-delta-lake.html" rel="noopener noreferrer" target="_blank">open-source project</a> hosted by the <a href="https://www.linuxfoundation.org/projects/" rel="noopener noreferrer" target="_blank">Linux Foundation</a>. While a lot has changed over the last year, the core challenge for most data lakes remains stubbornly the same: data lakes are inherently unreliable. To address this, Delta Lake brings reliability and data quality to data lakes and Apache Spark; learn more by watching <a href="https://www.youtube.com/watch?v=GGkRwVHq-Zc" rel="noopener noreferrer" target="_blank">Michael Armbrust’s session at the Big Things Conference</a>.</p><p><a class="lightbox-trigger" href="https://www.youtube.com/watch?v=GGkRwVHq-Zc" rel="noopener noreferrer" target="_blank"><img class="aligncenter size-full wp-image-97884" src="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-1.png" alt="Watch Michael Armbrust discuss Delta Lake: Reliability and Data Quality for Data Lakes and Apache Spark in the on-demand webcast."
height="630"></a></p><div class="text-center"><em>Delta Lake: Reliability and Data Quality for Data Lakes and Apache Spark by Michael Armbrust</em></div><p>With Delta Lake, you can <a href="https://www.youtube.com/watch?v=qtCxNSmTejk&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=18" rel="noopener noreferrer" target="_blank">simplify and scale your data engineering pipelines</a> and improve the quality of your data as it flows through them with the <a href="https://www.youtube.com/watch?v=FePv0lro0z8" rel="noopener noreferrer" target="_blank">Delta Architecture</a>.</p><h2>Delta Lake Primer</h2><p>The following section gives an overview of Delta Lake's features, with links to blogs and tech talks that dive into the technical details, including the Dive into Delta Lake Internals series of tech talks.</p><p><a href="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-2.png" data-lightbox=" " rel="noopener noreferrer" target="_blank"><img class="aligncenter size-full wp-image-97884" src="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-2.png" alt="The Delta Architecture with the medallion data quality data flow" height="630"></a></p><div class="text-center"><em>The Delta Architecture with the medallion data quality data flow</em></div><h3>Building upon the Apache Spark Foundation</h3><ul><li><strong>Open Format</strong>: All data in Delta Lake is stored in Apache Parquet format, enabling Delta Lake to leverage the efficient compression and encoding schemes that are native to Parquet.
Try this out with a Jupyter notebook and a local Spark instance by following <a href="https://www.databricks.com/blog/2019/10/03/simple-reliable-upserts-and-deletes-on-delta-lake-tables-using-python-apis.html" rel="noopener noreferrer" target="_blank">Simple, Reliable Upserts, and Deletes on Delta Lake Tables using Python APIs</a>.</li><li><strong>Spark API</strong>: Developers can use Delta Lake with their existing data pipelines with minimal changes, as it is fully compatible with Apache Spark, the widely used big data processing engine.</li><li><strong>Updates and Deletes</strong>: Delta Lake supports Scala, Java, and (since 0.4.0) Python APIs to merge, update, and delete datasets. This makes it easier to comply with GDPR and CCPA and simplifies use cases like Change Data Capture. For more information, refer to <a href="https://www.databricks.com/blog/2019/08/02/announcing-delta-lake-0-3-0-release.html" rel="noopener noreferrer" target="_blank">Announcing the Delta Lake 0.3.0 Release</a>, <a href="https://www.databricks.com/blog/2019/10/03/simple-reliable-upserts-and-deletes-on-delta-lake-tables-using-python-apis.html" rel="noopener noreferrer" target="_blank">Simple, Reliable Upserts, and Deletes on Delta Lake Tables using Python APIs</a>, and <a href="https://www.youtube.com/watch?v=7ewmcdrylsA&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=16" rel="noopener noreferrer" target="_blank">Diving into Delta Lake Part 3: How do DELETE, UPDATE, and MERGE work</a>.</li></ul><h3>Transactions</h3><ul><li><strong>ACID transactions</strong>: Data lakes typically have multiple data pipelines reading and writing data concurrently, and because data lakes lack transactions, data engineers have to go through a tedious process to ensure data integrity. Delta Lake brings ACID transactions to your data lakes and provides serializability, the strongest isolation level.
Learn more in the <a href="https://www.youtube.com/watch?v=F91G4RoA8is&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=15&t=23s" rel="noopener noreferrer" target="_blank">Diving into Delta Lake: Unpacking the Transaction Log</a> <a href="https://www.databricks.com/blog/2019/08/21/diving-into-delta-lake-unpacking-the-transaction-log.html" rel="noopener noreferrer" target="_blank">blog</a> and <a href="https://www.youtube.com/watch?v=F91G4RoA8is&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=15&t=23s" rel="noopener noreferrer" target="_blank">tech talk</a>.</li><li><strong>Unified Batch and Streaming Source and Sink</strong>: A table in Delta Lake is both a batch table and a streaming source and sink. Streaming data ingest, batch historic backfill, and interactive queries all work out of the box.</li></ul><h3>Data Lake Enhancements</h3><ul><li><strong>Scalable Metadata Handling</strong>: In big data, even the metadata itself can be "big data". Delta Lake treats metadata just like data, leveraging Spark's distributed processing power to handle all its metadata. As a result, Delta Lake can handle petabyte-scale tables with billions of partitions and files with ease. Learn more in the <a href="https://www.youtube.com/watch?v=F91G4RoA8is&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=15&t=23s" rel="noopener noreferrer" target="_blank">Diving into Delta Lake: Unpacking the Transaction Log</a> <a href="https://www.databricks.com/blog/2019/08/21/diving-into-delta-lake-unpacking-the-transaction-log.html" rel="noopener noreferrer" target="_blank">blog</a> and <a href="https://www.youtube.com/watch?v=F91G4RoA8is&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=15&t=23s" rel="noopener noreferrer" target="_blank">tech talk</a>.</li><li><strong>Time Travel (data versioning)</strong>: Delta Lake provides snapshots of data, enabling developers to access and revert to earlier versions of data for audits or rollbacks, or to reproduce experiments.
Learn more in <a href="https://www.databricks.com/blog/2019/02/04/introducing-delta-time-travel-for-large-scale-data-lakes.html" rel="noopener noreferrer" target="_blank">Introducing Delta Lake Time Travel for Large Scale Data Lakes</a> and <a href="https://www.youtube.com/watch?v=hQaENo78za0&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=21&t=112s" rel="noopener noreferrer" target="_blank">Getting Data Ready for Data Science with Delta Lake and MLflow</a>.</li><li><strong>Audit History</strong>: The Delta Lake transaction log records details about every change made to the data, providing a full audit trail. Learn more about related scenarios such as <a href="https://www.youtube.com/watch?v=tCPslvUjG1w&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=7" rel="noopener noreferrer" target="_blank">addressing GDPR and CCPA using Delta Lake</a> and <a href="https://www.youtube.com/watch?v=7y0AAQ6qX5w&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=8" rel="noopener noreferrer" target="_blank">using Delta Lake as a Change Data Capture source</a>.</li></ul><h3>Schema Enforcement and Evolution</h3><ul><li><strong>Schema Enforcement</strong>: Delta Lake lets you specify your schema and enforce it. This helps ensure that data types are correct and required columns are present, preventing bad data from causing data corruption.
For more information, refer to the <a href="https://www.youtube.com/watch?v=tjb10n5wVs8&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=16&t=0s" rel="noopener noreferrer" target="_blank">Diving Into Delta Lake: Schema Enforcement & Evolution</a> <a href="https://www.databricks.com/blog/2019/09/24/diving-into-delta-lake-schema-enforcement-evolution.html" rel="noopener noreferrer" target="_blank">blog</a> and <a href="https://www.youtube.com/watch?v=tjb10n5wVs8&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=16&t=0s" rel="noopener noreferrer" target="_blank">tech talk</a>.</li><li><strong>Schema Evolution</strong>: Business requirements change continuously, and so does the shape of your data. Delta Lake lets you make changes to a table schema that are applied automatically, without cumbersome DDL. For more information, refer to the <a href="https://www.youtube.com/watch?v=tjb10n5wVs8&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=16&t=0s" rel="noopener noreferrer" target="_blank">Diving Into Delta Lake: Schema Enforcement & Evolution</a> <a href="https://www.databricks.com/blog/2019/09/24/diving-into-delta-lake-schema-enforcement-evolution.html" rel="noopener noreferrer" target="_blank">blog</a> and <a href="https://www.youtube.com/watch?v=tjb10n5wVs8&list=PLTPXxbhUt-YVPwG3OWNQ-1bJI_s_YRvqP&index=16&t=0s" rel="noopener noreferrer" target="_blank">tech talk</a>.</li></ul><h2>Checkpoints from the last year</h2><p>In April 2019, we announced that <a href="https://www.databricks.com/blog/2019/04/24/open-sourcing-delta-lake.html" rel="noopener noreferrer" target="_blank">Delta Lake would be open-sourced with the Linux Foundation</a>; the source code for the project can be found at <a href="https://github.com/delta-io/delta" rel="noopener noreferrer" target="_blank">https://github.com/delta-io/delta</a>. Since then, the project has progressed quickly, with 6 releases, 65 contributors, and more than 2,500 GitHub stars so far.
Here are some of the features we want to call out.</p><h3>Execute DML statements</h3><p><a href="https://www.databricks.com/blog/2019/08/02/announcing-delta-lake-0-3-0-release.html" rel="noopener noreferrer" target="_blank">Delta Lake 0.3.0</a> added the ability to run DELETE, UPDATE, and MERGE statements using the Spark API. Instead of running a convoluted mix of INSERTs, file-level deletions, and table removals and re-creations, you can execute each DML statement as a single atomic transaction.</p><pre>import io.delta.tables._

// pathToEventsTable: path to an existing Delta table (assumed to be defined)
val deltaTable = DeltaTable.forPath(spark, pathToEventsTable)
// Delete the rows matching a SQL-formatted predicate (illustrative predicate)
deltaTable.delete("date &lt; '2017-01-01'")
</pre><p>In addition, this release included the ability to <em>query commit history</em> to understand what operations modified the table.</p><pre>import io.delta.tables._

val deltaTable = DeltaTable.forPath(spark, pathToTable)
val fullHistoryDF = deltaTable.history()    // get the full history of the table
val lastOperationDF = deltaTable.history(1) // get the last operation only
</pre><p>The returned DataFrame has the following structure:</p><pre>+-------+-------------------+------+--------+---------+--------------------+----+--------+---------+-----------+--------------+-------------+
|version|          timestamp|userId|userName|operation| operationParameters| job|notebook|clusterId|readVersion|isolationLevel|isBlindAppend|
+-------+-------------------+------+--------+---------+--------------------+----+--------+---------+-----------+--------------+-------------+
|      5|2019-07-29 14:07:47|  null|    null|   DELETE|[predicate -> ["(...|null|    null|     null|          4|          null|        false|
|      4|2019-07-29 14:07:41|  null|    null|   UPDATE|[predicate -> (id...|null|    null|     null|          3|          null|        false|
|      3|2019-07-29 14:07:29|  null|    null|   DELETE|[predicate -> ["(...|null|    null|     null|          2|          null|        false|
|      2|2019-07-29 14:06:56|  null|    null|   UPDATE|[predicate -> (id...|null|    null|     null|          1|          null|        false|
|      1|2019-07-29 14:04:31|  null|    null|   DELETE|[predicate -> ["(...|null|    null|     null|          0|          null|        false|
|      0|2019-07-29 14:01:40|  null|    null|    WRITE|[mode -> ErrorIfE...|null|    null|     null|       null|          null|         true|
+-------+-------------------+------+--------+---------+--------------------+----+--------+---------+-----------+--------------+-------------+
</pre><p>With <a href="https://github.com/delta-io/delta/releases/tag/v0.4.0" rel="noopener noreferrer" target="_blank">Delta Lake 0.4.0</a>, we made DML statements easier to execute by adding Python APIs, as described in <a href="https://www.databricks.com/blog/2019/10/03/simple-reliable-upserts-and-deletes-on-delta-lake-tables-using-python-apis.html" rel="noopener noreferrer" target="_blank">Simple, Reliable Upserts, and Deletes on Delta Lake Tables using Python APIs</a>.</p><p><a href="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-3.gif" data-lightbox=" " rel="noopener noreferrer" target="_blank"><img class="aligncenter size-full wp-image-97884" src="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-3.gif"
alt="Sample merge executed by a DML statement made possible by Delta Lake 0.4.0" height="630"></a></p><h3>Support for other processing engines</h3><p>A fundamental principle of Delta Lake is that, although it is a storage layer originally conceived to work with Apache Spark, it can work with many other processing engines. As part of the <a href="https://github.com/delta-io/delta/releases/tag/v0.5.0" rel="noopener noreferrer" target="_blank">Delta Lake 0.5.0 release</a>, we included the ability to generate manifest files so that you can query Delta Lake tables from Presto and Amazon Athena.</p><p>The blog post <a href="https://www.databricks.com/blog/2020/01/29/query-delta-lake-tables-presto-athena-improved-operations-concurrency-merge-performance.html" rel="noopener noreferrer" target="_blank">Query Delta Lake Tables from Presto and Athena, Improved Operations Concurrency, and Merge performance</a> provides examples of how to create the manifest file to query Delta Lake from Presto; for more information, refer to <a href="https://docs.delta.io/latest/presto-integration.html" rel="noopener noreferrer" target="_blank">Presto and Athena to Delta Lake Integration</a>. The same release also included experimental support for Snowflake and Redshift Spectrum.
We'd also like to call out the more recent integrations with <a href="https://docs.getdbt.com/reference/resource-configs/spark-configs/" rel="noopener noreferrer" target="_blank">dbt</a> and <a href="https://koalas.readthedocs.io/en/latest/reference/api/databricks.koalas.read_delta.html" rel="noopener noreferrer" target="_blank">Koalas</a>.</p><p><a href="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-4.png" data-lightbox=" " rel="noopener noreferrer" target="_blank"><img class="aligncenter size-full wp-image-97884" src="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-4.png" alt="Delta Lake Connectors allow you to standardize your big data storage by making it accessible from various tools, such as Amazon Redshift and Athena, Snowflake, Presto, Hive, and Apache Spark." height="630"></a></p><p>With Delta Connector 0.1.0, your <a href="https://www.databricks.com/glossary/apache-hive">Apache Hive</a> environment can now read Delta Lake tables. With this connector, you can create a table in Apache Hive that uses the STORED BY syntax to point to an existing Delta table, like this:</p><pre>CREATE EXTERNAL TABLE deltaTable(col1 INT, col2 STRING)
STORED BY 'io.delta.hive.DeltaStorageHandler'
LOCATION '/delta/table/path'
</pre><h3>Simplifying Operational Maintenance</h3><p>As your data lakes grow in size and complexity, they become increasingly difficult to maintain. Each Delta Lake release has added features that reduce this operational overhead. For example, <a href="https://github.com/delta-io/delta/releases/tag/v0.5.0" rel="noopener noreferrer" target="_blank">Delta Lake 0.5.0</a> includes improvements in concurrency control and support for file compaction.
<a href="https://github.com/delta-io/delta/releases/tag/v0.6.0" rel="noopener noreferrer" target="_blank">Delta Lake 0.6.0</a> made further improvements, including <a href="https://docs.delta.io/latest/delta-storage.html" rel="noopener noreferrer" target="_blank">support for reading Delta tables from any file system</a> and <a href="https://docs.delta.io/latest/delta-update.html#performance-tuning" rel="noopener noreferrer" target="_blank">improved merge performance and automatic repartitioning</a>.</p><p>As noted in <a href="https://www.databricks.com/blog/2020/05/19/schema-evolution-in-merge-operations-and-operational-metrics-in-delta-lake.html" rel="noopener noreferrer" target="_blank">Schema Evolution in Merge Operations and Operational Metrics in Delta Lake</a>, Delta Lake 0.6.0 introduces schema evolution and performance improvements in merge operations, as well as operational metrics in table history. By <a href="https://docs.delta.io/latest/delta-update.html#automatic-schema-evolution" rel="noopener noreferrer" target="_blank">enabling automatic schema evolution</a> in your environment,</p><pre># Enable automatic schema evolution
spark.sql("SET spark.databricks.delta.schema.autoMerge.enabled = true")
</pre><p>you can run a single atomic operation that both updates values and merges in the new schema, as in the following example statement.</p><pre>from delta.tables import DeltaTable

# DELTA_PATH (path to the target Delta table) and new_data (a DataFrame that
# may contain extra columns) are assumed to be defined already.
deltaTable = DeltaTable.forPath(spark, DELTA_PATH)

# Schema Evolution with a Merge Operation
deltaTable.alias("t").merge(
    new_data.alias("s"),
    "s.col1 = t.col1 AND s.col2 = t.col2"
).whenMatchedUpdateAll(
).whenNotMatchedInsertAll(
).execute()
</pre><p>The release also improved operational metrics, which you can review from both the API and the Spark UI.
For example, running the statement:</p><pre>deltaTable.history().show()
</pre><p>produces the following (abbreviated) output, listing the modifications made to your table.</p><pre>+-------+------+---------+--------------------+
|version|userId|operation|    operationMetrics|
+-------+------+---------+--------------------+
|      1|100802|    MERGE|[numTargetRowsCop...|
|      0|100802|    WRITE|[numFiles -> 1, n...|
+-------+------+---------+--------------------+
</pre><p>For the same action, you can view this information directly in the Spark UI, as shown in the following animated GIF.</p><p><a href="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-5.gif" data-lightbox=" " rel="noopener noreferrer" target="_blank"><img class="aligncenter size-full wp-image-97884" src="https://www.databricks.com/wp-content/uploads/2020/06/blog-delta-lake-year-5.gif" alt="Sample schema evolution in merge operations and operational metrics Spark UI." height="630"></a></p><div class="text-center"><em>Schema Evolution in Merge Operations and Operational Metrics Spark UI example</em></div><p>For more details, refer to <a href="https://www.databricks.com/blog/2020/05/19/schema-evolution-in-merge-operations-and-operational-metrics-in-delta-lake.html" rel="noopener noreferrer" target="_blank">Schema Evolution in Merge Operations and Operational Metrics in Delta Lake</a>.</p>
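Commit history, DML statements, and time travel all rest on the same idea: every commit produces a new, numbered table version, and older versions remain readable. The following is a minimal, stdlib-only Python sketch of that idea under simplifying assumptions: a table is an in-memory list of dicts, every commit stores a full snapshot, and rows are matched on key columns only. `ToyVersionedTable` and its methods are hypothetical and are not part of the Delta Lake API; Delta Lake itself records added and removed Parquet files in its transaction log rather than copying whole snapshots. The toy merge loosely mimics `whenMatchedUpdateAll().whenNotMatchedInsertAll()` with automatic schema evolution enabled.

```python
from typing import Any

Row = dict[str, Any]


class ToyVersionedTable:
    """Hypothetical in-memory model, NOT Delta Lake's implementation.

    Each commit stores a full snapshot plus an operation name, so every
    earlier version stays readable (a crude stand-in for time travel).
    """

    def __init__(self, key_cols: tuple[str, ...]) -> None:
        self.key_cols = key_cols
        self._snapshots: list[list[Row]] = []
        self._operations: list[str] = []

    def _commit(self, rows: list[Row], operation: str) -> None:
        # Copy each row so later commits cannot mutate older versions.
        self._snapshots.append([dict(r) for r in rows])
        self._operations.append(operation)

    def write(self, rows: list[Row]) -> None:
        self._commit(rows, "WRITE")

    def as_of(self, version: int) -> list[Row]:
        """Time travel: return a copy of the table at the given version."""
        return [dict(r) for r in self._snapshots[version]]

    def history(self) -> list[tuple[int, str]]:
        """(version, operation) pairs, newest first."""
        return list(enumerate(self._operations))[::-1]

    def merge_update_all_insert_all(self, source: list[Row]) -> None:
        """Toy upsert with schema evolution.

        Matched rows take every source column (target-only columns are kept
        as-is), unmatched source rows are inserted, and source-only columns
        are appended to the schema; rows lacking a column get None.
        """
        current = self.as_of(len(self._snapshots) - 1) if self._snapshots else []
        columns: list[str] = []
        for row in current + source:
            for col in row:
                if col not in columns:
                    columns.append(col)
        by_key = {tuple(r[k] for k in self.key_cols): r for r in current}
        for row in source:
            key = tuple(row[k] for k in self.key_cols)
            if key in by_key:
                by_key[key].update(row)  # whenMatchedUpdateAll
            else:
                by_key[key] = dict(row)  # whenNotMatchedInsertAll
        merged = [{c: r.get(c) for c in columns} for r in by_key.values()]
        self._commit(merged, "MERGE")
```

For example, writing two rows and then merging a batch that updates one row, inserts another, and adds a new `col3` column leaves version 0 untouched: `as_of(0)` still returns the original two rows, and `history()` lists `MERGE` before `WRITE`, newest first, like the history output above. With Delta Lake itself, an older version is read through the DataFrame reader's `versionAsOf` option, for example `spark.read.format("delta").option("versionAsOf", 0).load(path)`.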


Enhancements coming with Spark 3.0

While the preceding section has been about our recent past, let’s get back to the future and focus on the enhancements coming with Spark 3.0.

Support for Catalog Tables

With Delta Lake 0.7.0, Delta tables can be referenced in an external catalog such as the Hive Metastore. Look out for the Delta Lake 0.7.0 release, which works with Spark 3.0, in the coming weeks.

Expectations - NOT NULL columns

You can create Delta tables with columns declared as NOT NULL. This prevents any rows containing null values in those columns from being written to your tables.
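A minimal sketch of what NOT NULL enforcement means for a write, assuming a table modeled as a plain Python list of dicts. `validated_append` and `NotNullViolation` are hypothetical names used only for illustration, not Delta Lake APIs, and the sketch treats a missing key the same as an explicit null. It validates the entire batch before appending anything, so one bad row rejects the whole write and leaves the table unchanged, which is the all-or-nothing outcome you would expect from a transactional write.

```python
from typing import Any

Row = dict[str, Any]


class NotNullViolation(ValueError):
    """Hypothetical error raised when a NOT NULL column receives a null."""


def validated_append(table: list[Row], batch: list[Row], not_null: set[str]) -> list[Row]:
    """Return a new table with `batch` appended, or raise NotNullViolation.

    Every row is checked before anything is appended, so a single bad row
    rejects the whole batch and the input `table` is never modified.
    A missing key counts as null (an assumption of this sketch).
    """
    for i, row in enumerate(batch):
        for col in sorted(not_null):  # sorted for deterministic error messages
            if row.get(col) is None:
                raise NotNullViolation(f"row {i}: column {col!r} is declared NOT NULL")
    return table + [dict(r) for r in batch]


if __name__ == "__main__":
    # "id" is NOT NULL; "name" is nullable.
    events = validated_append([], [{"id": 1, "name": None}], not_null={"id"})
    try:
        events = validated_append(events, [{"id": 2}, {"id": None}], not_null={"id"})
    except NotNullViolation as err:
        print("write rejected:", err)
    print(events)  # still only the first row
```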

More support is on the way, for example defining arbitrary SQL expressions as invariants and defining invariants on existing tables.

DataFrameWriterV2 API

DataFrameWriterV2 is a much cleaner interface for writing a DataFrame to a table. Table creation operations such as “create” and “replace” are separate from data modification operations such as “append” and “overwrite”, which gives users a clearer picture of what to expect from each call. In Spark 3.0, DataFrameWriterV2 APIs are only available in Scala.
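To illustrate the separation, here is a toy Python model of the contract that, as we understand it, the Scala `DataFrameWriterV2` calls (`df.writeTo(table).create()`, `.replace()`, `.createOrReplace()`, `.append()`, `.overwrite(condition)`) follow in Spark 3.0: `create` fails if the table already exists, while `replace`, `append`, and `overwrite` fail if it does not. `ToyWriterV2Catalog`, `TableAlreadyExists`, and `TableNotFound` are hypothetical stand-ins, not Spark classes, and tables are plain lists of dicts.

```python
from typing import Any, Callable

Row = dict[str, Any]


class TableAlreadyExists(Exception):
    """Hypothetical: raised by create() when the table already exists."""


class TableNotFound(Exception):
    """Hypothetical: raised by replace/append/overwrite on a missing table."""


class ToyWriterV2Catalog:
    """Toy model of the DataFrameWriterV2 contract; NOT the Spark API."""

    def __init__(self) -> None:
        self.tables: dict[str, list[Row]] = {}

    def _existing(self, name: str) -> list[Row]:
        if name not in self.tables:
            raise TableNotFound(name)
        return self.tables[name]

    # Table creation operations
    def create(self, name: str, rows: list[Row]) -> None:
        if name in self.tables:
            raise TableAlreadyExists(name)
        self.tables[name] = list(rows)

    def replace(self, name: str, rows: list[Row]) -> None:
        self._existing(name)  # replace requires an existing table
        self.tables[name] = list(rows)

    def create_or_replace(self, name: str, rows: list[Row]) -> None:
        self.tables[name] = list(rows)

    # Data modification operations
    def append(self, name: str, rows: list[Row]) -> None:
        self._existing(name).extend(rows)

    def overwrite(self, name: str, rows: list[Row], condition: Callable[[Row], bool]) -> None:
        """Remove existing rows that match `condition`, then add `rows`."""
        kept = [r for r in self._existing(name) if not condition(r)]
        self.tables[name] = kept + list(rows)
```

Because each call states its intent, a mistaken `create` on an existing table, or an `append` to a table that was never created, fails loudly instead of silently changing data.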

Get Started with Delta Lake

Try out Delta Lake with the preceding code snippets on your Apache Spark 2.4.5 (or greater) instance (on Databricks, try this with DBR 6.6+). Delta Lake makes your data lakes more reliable (whether you create a new one or migrate an existing data lake). To learn more, refer to https://delta.io/, and join the Delta Lake community via Slack and Google Group. You can track all the upcoming releases and planned features in GitHub milestones. You can also try out Managed Delta Lake on Databricks with a free account.