How Organizations Can Extract the Full Potential of SAP Data with a Lakehouse

Put your ERP data to work

Against a backdrop of intense global competition, opaque supply chains, and inflation, manufacturers of all types need to address high degrees of volatility and variability in their business. Companies need to invest in their operations to optimize production capacity, design resilient supply chains, and accelerate product time to market (TTM) in order to better serve customers and win against the competition. To address these enterprise challenges, companies are seeking to further unlock their enterprise data. Data & AI are the key to transforming their business and generating higher returns on invested capital (ROIC).

But this reality isn't so simple. Organizations need to be able to access and use all of their critical enterprise data. Enterprise data resides in IT business systems, such as SAP, as well as an array of other operational and external data sources (e.g., sensor data coming from the production line or from products).

Often, SAP ERP data and operational data sit in their source systems or in separate data warehouses and data lakes, distinct from each other. In addition, SAP data is highly structured, whereas operational data, which can amount to 90% of an enterprise's data, can be structured, semi-structured, or unstructured, making the convergence of these data sources a time-consuming and labor-intensive challenge.

In fact, until recently, converging enterprise data to achieve comprehensive, real-time business intelligence and prescriptive insight at a low enough total cost of ownership (TCO) was inconceivable. The challenges of connecting data sources, a lack of advanced analytics, and increasing costs have historically held businesses back from digital transformation.

But today, businesses have the ability to provide a seamless path to transform ERP data into greater business value, supporting real-time advanced analytics at a much lower TCO by building a lakehouse on Databricks. With the Databricks Lakehouse, Manufacturing and CPG companies can get the most out of their SAP investment.

The Solution

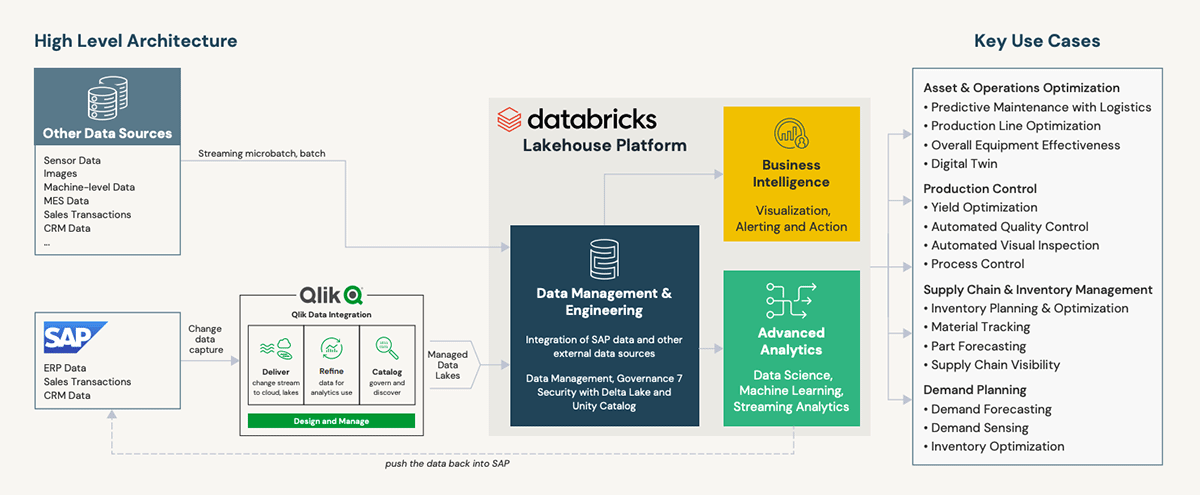

The Databricks Lakehouse Platform was purpose-built for integrating multi-modal data, i.e., your SAP and non-SAP data, to support all your workloads, from BI to AI, on a single platform. The platform combines the best elements of data lakes and data warehouses to deliver the reliability, strong governance and performance of data warehouses with the openness, flexibility and machine learning support of data lakes. This unified approach simplifies your modern data stack by eliminating the data silos that traditionally separate and complicate data engineering, analytics, BI, data science and machine learning.

In partnership with Databricks, you can quickly get started on implementing the Lakehouse for your SAP and non-SAP data with:

- Flexibility in Extracting & Integrating SAP Data

Qlik Data Integration accelerates the availability of SAP data with its scalable change data capture technology. Qlik Data Integration supports all core SAP and industry modules, including ECC, BW, CRM, GTS, and MDG, and continuously delivers incremental data updates with metadata to Databricks Lakehouse in real time.

Once in the Lakehouse, users can rapidly join SAP and non-SAP data together with streamlined pipeline creation tools such as Delta Live Tables to enable new reports and advanced analytics in hours versus days.

Enterprises can power decisions in real time by blending the freshest reliable information from SAP with operational technology data sources (sensors, customers, process, suppliers, etc.) to drive advanced analytics.
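To make the flow above concrete, here is a minimal sketch in plain Python of the two steps it describes: applying incremental change events to a replicated table, then joining the result with operational sensor data. It is an illustration only, not the Qlik or Delta Live Tables API. The `ChangeEvent` type, the `apply_changes` and `join_with_sensors` helpers, and all sample material IDs and values are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ChangeEvent:
    """One hypothetical change-data-capture record."""
    op: str                     # "insert", "update", or "delete"
    key: str                    # hypothetical primary key, e.g. a material ID
    row: Optional[dict] = None  # changed column values; None for deletes


def apply_changes(table: Dict[str, dict], events: List[ChangeEvent]) -> Dict[str, dict]:
    """Apply change events in order and return an updated copy of the table."""
    result = {k: dict(v) for k, v in table.items()}
    for ev in events:
        if ev.op == "delete":
            result.pop(ev.key, None)
        elif ev.op in ("insert", "update"):
            merged = result.get(ev.key, {})
            merged.update(ev.row or {})
            result[ev.key] = merged
        else:
            raise ValueError(f"unknown op: {ev.op}")
    return result


def join_with_sensors(materials: Dict[str, dict], readings: List[dict]) -> List[dict]:
    """Inner-join sensor readings to material rows on material_id."""
    joined = []
    for reading in readings:
        material = materials.get(reading["material_id"])
        if material is not None:
            joined.append({**material, **reading})
    return joined


# Hypothetical replicated ERP table, keyed by material ID.
materials = {
    "M-100": {"description": "Bearing", "stock": 40},
    "M-300": {"description": "Gasket", "stock": 5},
}

# Hypothetical incremental updates arriving from the source system.
events = [
    ChangeEvent("update", "M-100", {"stock": 35}),
    ChangeEvent("insert", "M-200", {"description": "Valve", "stock": 12}),
    ChangeEvent("delete", "M-300"),
]

# Hypothetical non-ERP sensor readings from the production line.
readings = [
    {"material_id": "M-100", "vibration_mm_s": 4.2},
    {"material_id": "M-200", "vibration_mm_s": 1.1},
    {"material_id": "M-999", "vibration_mm_s": 0.3},  # no matching material
]

current = apply_changes(materials, events)
joined = join_with_sensors(current, readings)
```

In a real lakehouse pipeline, the same merge-then-join logic would typically be expressed declaratively over tables rather than in-memory dictionaries. The sketch only shows why incremental updates keep the joined view fresh without reloading the full source table.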

- Improved range of analytics and accuracy of predictions

The Lakehouse was designed to drive real-time and advanced analytics. The average Databricks customer sees a double-digit improvement in forecast accuracy when migrating forecasts from proprietary tools to the Lakehouse.

Customers are able to go beyond what is possible in SAP tools to create predictive and prescriptive analytics using various sources of data, including structured and unstructured data.

Common use cases include:

- Demand forecasts, vendor managed inventory & replenishment (VMI / VMR) predictions that incorporate shipments and customer sales data.

- Predictive maintenance use cases that align procure-to-pay business processes for replacement parts to limit downtime of the process or equipment.

- Load tendering and multi-step route optimization that incorporates current orders with traffic data.

- Customer segmentations, customer lifetime value, and other customer analytics that help manufacturers prioritize where to invest trade and channel dollars.

- Improved outcomes and reduced TCO

Companies can maintain their investment in SAP while improving outcomes and reducing TCO. The Lakehouse architecture decouples storage from compute, enabling organizations to store petabytes of historic data at inexpensive cloud storage rates. Costs are incurred only on the data that is processed to perform an analysis.

Customers can replicate all of their data into the Lakehouse and permanently archive historic data there. Moving data at rest to inexpensive cloud-native data lake storage preserves accessibility and lowers the total cost of ownership by reducing the ERP footprint.
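The effect of decoupling storage from compute can be sketched with simple arithmetic. The example below assumes a simplified cost model in which storage is billed per terabyte stored and compute is billed per terabyte processed. Real cloud and platform pricing is structured differently, and both rates used here are placeholder values chosen only for illustration, not actual prices.

```python
def monthly_cost(stored_tb: float, processed_tb: float,
                 storage_rate_per_tb: float, compute_rate_per_tb: float) -> dict:
    """Split a month's cost into a storage part and a compute part.

    Simplified, hypothetical model: storage scales with data at rest,
    compute scales only with the data actually processed.
    """
    storage = stored_tb * storage_rate_per_tb
    compute = processed_tb * compute_rate_per_tb
    return {"storage": storage, "compute": compute, "total": storage + compute}


# Placeholder rates (NOT real prices), in arbitrary currency units.
STORAGE_RATE = 20.0  # per TB stored per month
COMPUTE_RATE = 5.0   # per TB processed

# 1,000 TB (1 PB) of archived history, with a light and a heavy analysis month.
light_month = monthly_cost(1000, 10, STORAGE_RATE, COMPUTE_RATE)
heavy_month = monthly_cost(1000, 50, STORAGE_RATE, COMPUTE_RATE)
```

Under these assumptions, the storage portion is identical in both months, and only the compute portion changes with the amount of data analyzed.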

When organizations do unlock the potential of their enterprise data by converging critical data from SAP with operational data in a lakehouse, they are able to drive digital transformation and realize substantial business outcomes. Take these examples:

- Shell: Developed a solution on Databricks to seamlessly aggregate inventory data from SAP to run large-scale simulations for managing spare parts inventory. With Databricks, they were able to save many millions against $1bn+ in spare parts and run models 50x faster, accelerating TTM.

- Viacom18: Built a unified data platform on Databricks to modernize data warehousing capabilities and accelerate data processing at scale, ingesting multiple data sources, including data from their ERP. With Databricks, they increased operational efficiencies by 26% and reduced query time, lowering TCO even with daily increases in data volumes.

These are just a few examples from a myriad of possible use cases that you can address by converging SAP data with other operational data sources. To learn more, check out this Industry Week webinar with Databricks, Qlik, and CelebalTech on how to leverage IT/OT data convergence to extract the full potential of business-critical SAP enterprise data, lowering IT costs and delivering real-time prescriptive insights at scale. Or contact us for more information.