Transparency, visibility, data: Optimizing the Manufacturing Supply Chain with a Semantic Lakehouse

Learn how a Universal Semantic Layer can Democratize your Databricks Lakehouse and Enable Self Service BI

This is a collaborative post from Databricks, Tredence, and AtScale.

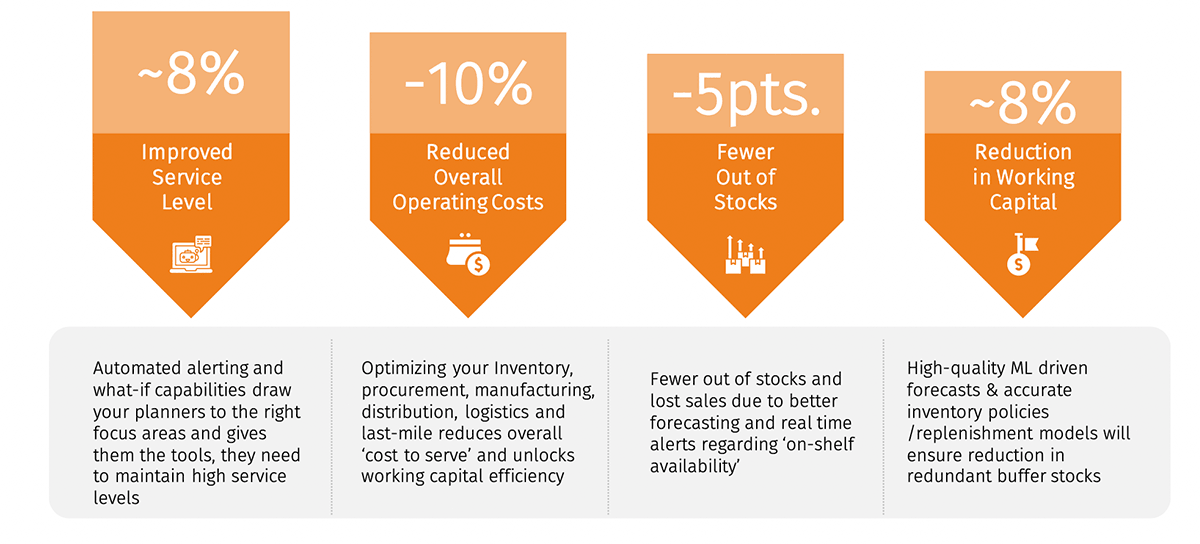

Over the last three years, demand imbalances and supply chain swings have amplified the urgency for manufacturers to digitally transform and reap the benefits that data and AI bring to manufacturing - granular insight, predictive recommendations, and optimized manufacturing and supply chain practices. But in truth, the industry's main data and AI deployment challenges have existed and will continue to exist, outside of exceptional circumstances.

If the events of the last few years proved anything, it is that supply chains need to be agile and resilient. Recent events have highlighted that demand forecasting at scale is needed in addition to safety stock or even duplicative manufacturing processes for high-risk parts or raw materials. By leveraging data, manufacturers can monitor, predict and respond to internal and external factors (including natural disasters, shipping, warehouse constraints, and geopolitical disruption), which reduces risk and promotes agility.

Successful supply chain optimization starts not at the loading dock, but during the first phases of product development, scale-up, and production. Integrated production and supply chain processes provide end-to-end visibility across all stages from design to planning to execution. This will incorporate a range of solutions:

- Spend analytics: Transparency and insight into where cash is spent are vital for identifying opportunities to reduce external spending across supply markets, suppliers and locations. However, spend analytics are also hugely important to supply chain agility and resilience. This requires a single source of data truth for finance and procurement departments.

- Supply chain 360: With real-time insights and aggregated supply chain data in a single business intelligence dashboard, manufacturers are empowered with greater levels of visibility, transparency, and insights for more informed decision-making. These dashboards can be used to identify risks and take corrective steps, assess suppliers, control costs, and more.

- Demand analytics: By collecting and analyzing millions – if not billions – of data points about market and customer behavior and product performance, manufacturers can improve operations and support strategic decisions that affect the demand for products and services. Around 80% say that using this form of data analysis has improved decision-making, while 26% say having this level of know-how to predict, shape, and meet demand has increased their profits.

Tredence, a data and AI services provider and Databricks Elite partner, sees 2023 and the foreseeable future as the "Year of the Supply Chain," as applications built on the Databricks Lakehouse Platform yield measurable outcomes and impact.

While supply chain visibility and transparency are top priorities for most companies, a recent study states that only 6% of companies have achieved full supply chain visibility. This raises the question: why can some companies leverage data while others can't?

Why is supply chain visibility so hard to achieve?

For data to be actionable and leveraged for advanced analytics or real-time business intelligence, it needs to be available on time and in a form that is clean and ready for downstream applications. Without that, time and effort are wasted on wrangling data instead of using it to manage risk, reduce costs, or explore additional revenue streams. A major challenge for manufacturers is the opaque supply chain, which consists of multiple teams, processes, and data silos. This drives a proliferation of competing and often incompatible platforms and tooling, making it difficult to integrate analytics across stages in the supply chain.

Historically, manufacturing supply chains have been made up of separate functions with different priorities, so each team uses a specific toolset to help it achieve its desired outcomes. Teams frequently work in isolation, leading to a huge duplication of effort in analytics. Central IT teams need to juggle the dynamic needs of the business while maintaining a fragmented architecture that hinders their ability to make data actionable. Instead of waiting for IT to deliver data, business users create their own data extracts, models, and reports. As a result, competing data definitions and results destroy management's confidence and trust in analytics outputs.

Mrunal Saraiya, Head of Digital Transformation (UX, Data, Intelligent Automation, and Advanced Technology Incubation) at Johnson & Johnson, described in a previous blog how this can negatively impact the bottom line. Mrunal found that the inability to understand and control spending and pricing can ultimately limit the identification of future strategic decisions and initiatives that could further the effectiveness of global procurement. If this problem were not solved, Johnson & Johnson would miss the opportunity to achieve $6MM in boosted profitability.

A Semantic Lakehouse for Supply Chain Optimization

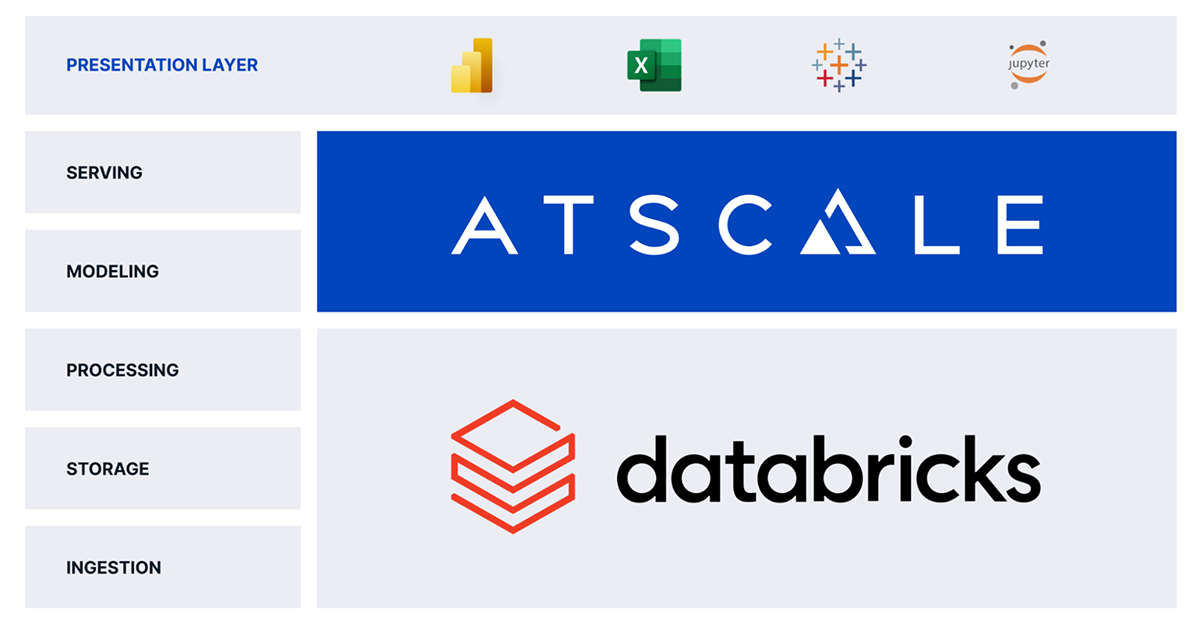

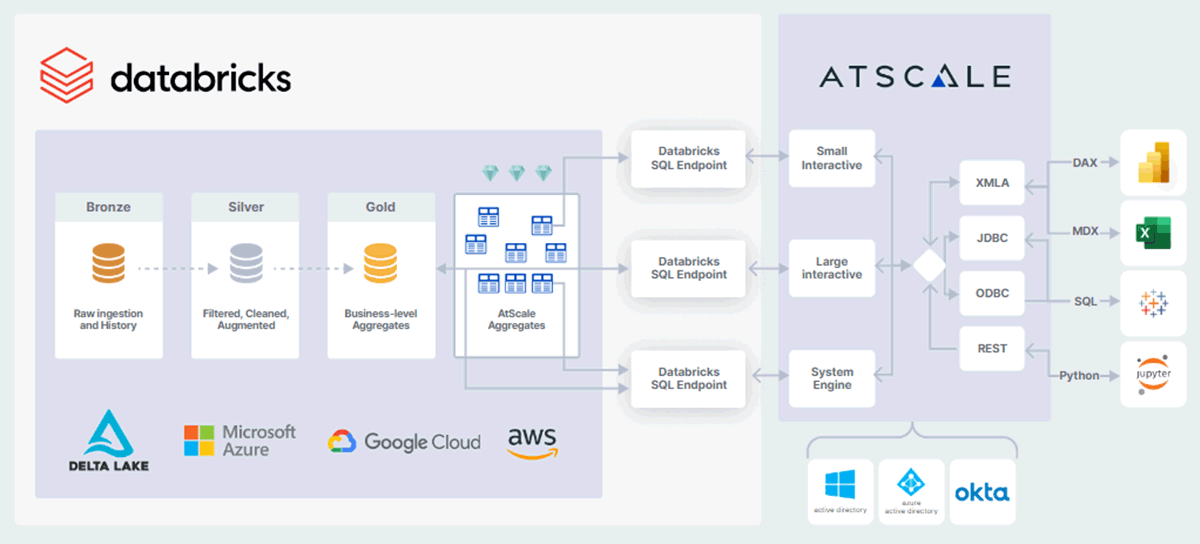

In a previous blog, Kyle Hale, Soham Bhatt, and Kieran O'Driscoll discussed creating a "Semantic Lakehouse" as a way to democratize data and accelerate time to insight. The combination of Databricks and AtScale offers manufacturers a more efficient way of storing, processing, and serving data consistently and competently to decision-makers across the supply chain.

A Semantic Lakehouse serves as the foundation for all analytical use cases across product development, production, and the supply chain. It ingests data from process IoT devices, data historians, MES, or ERP systems and stores it in an open format, preventing data silos. This enables real-time decision-making and granular analysis, leveraging all data for accurate results. In addition, Unity Catalog and Delta Sharing allow open cross-platform data sharing with centralized governance, privacy-safe clean rooms and no data duplication.

Now that enterprise and external data are stored centrally and in a common format, it is easier to build analytics use cases. The Databricks Lakehouse is the only platform that can process both BI and AI/ML workloads in real time. Using the Photon runtime on Databricks' Lakehouse results in even better performance for real-time supply chain applications and a reduced total cost per workload.

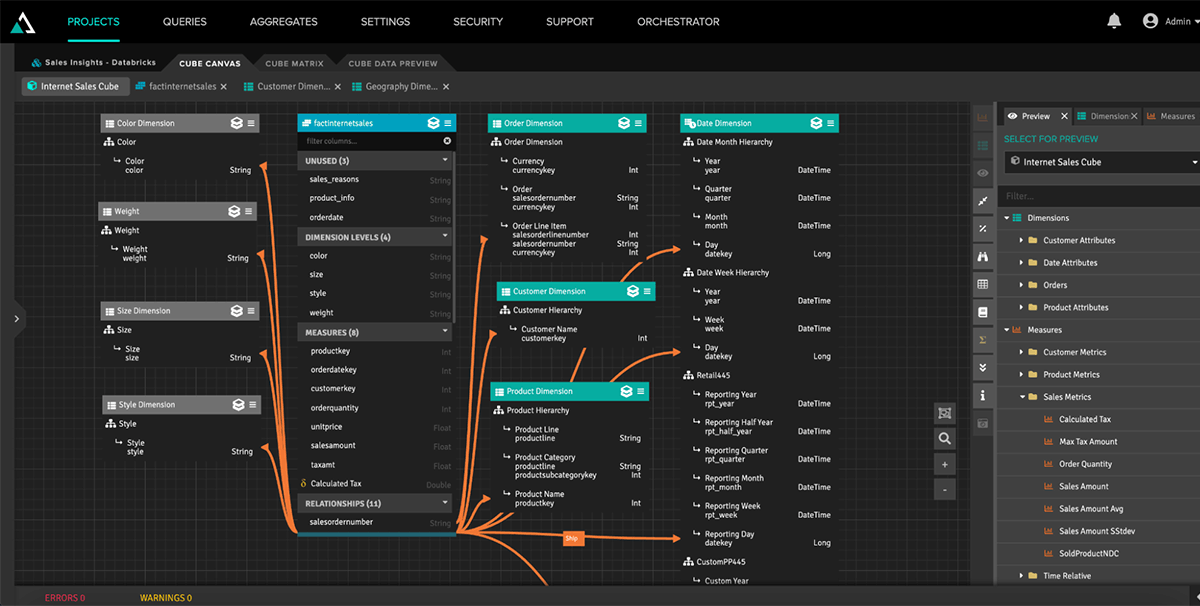

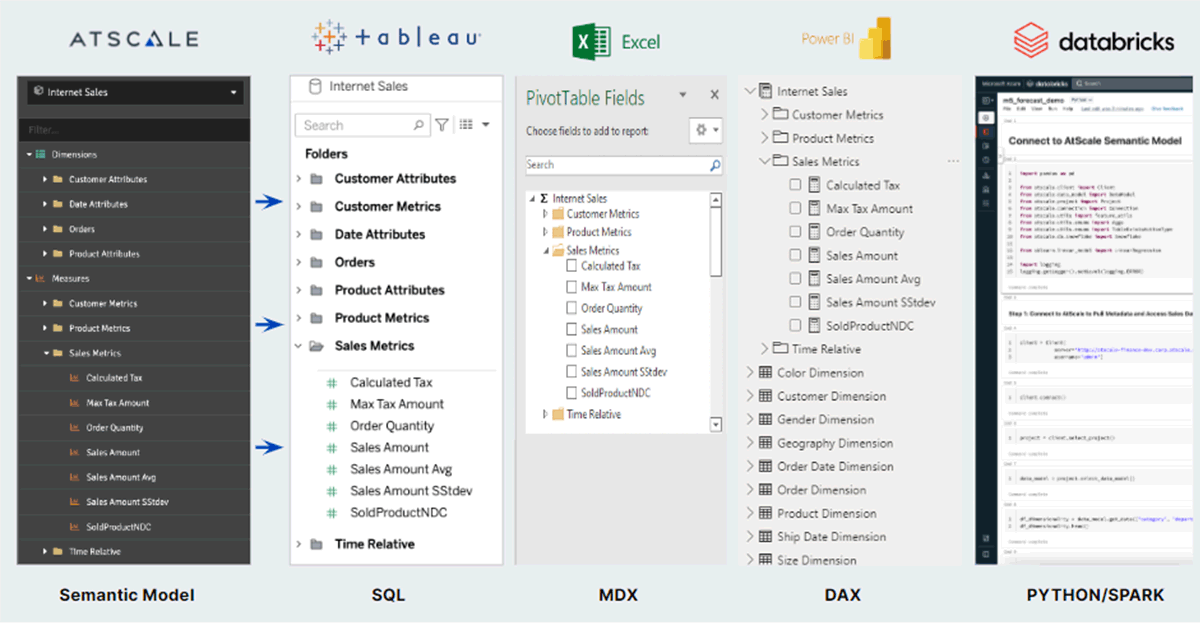

AtScale's semantic data model is effectively a data product for the business: it translates enterprise data into business terms, simplifying access to and use of that data. Domain experts across the supply chain can encode their business knowledge into digital form for others to use, breaking down silos and creating a holistic view of manufacturing's production and supply chain.

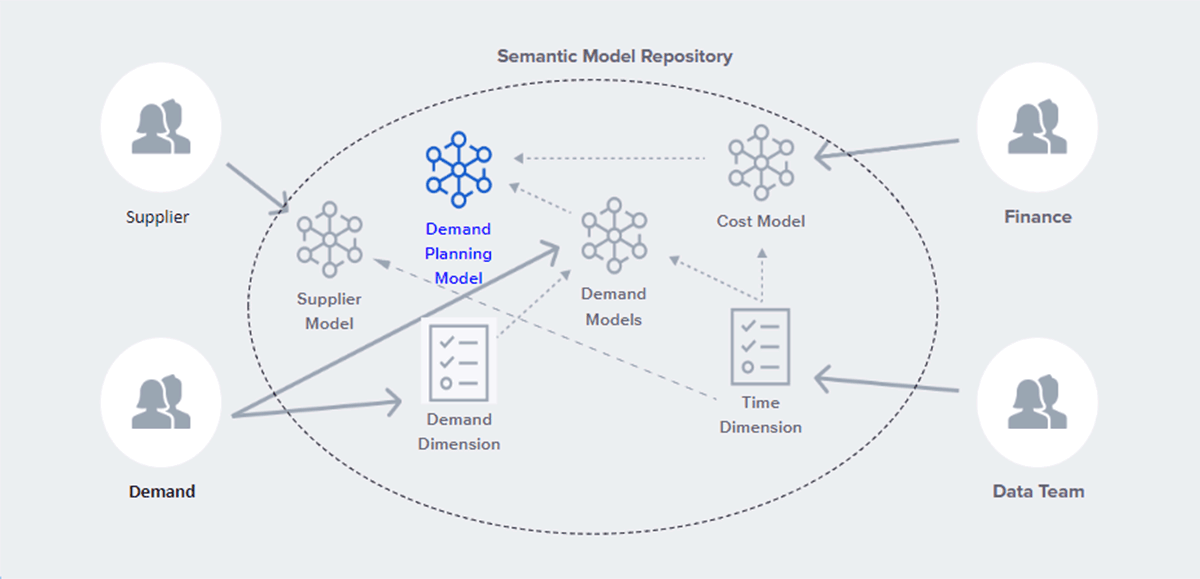

AtScale's semantic layer simplifies data access and structure by allowing the central data team to define common models and definitions (e.g., business calendar, product hierarchy, organization structure) while the domain experts on individual teams own and define their business process models (e.g., "shipping," "billing," "supplier"). With the ability to share model assets, business users can combine their models with those from other domain owners to create new mashups for answering deeper questions.
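To make the division of ownership concrete, here is a minimal sketch in plain Python. It is not AtScale's modeling language or API. The `SemanticModel` class, its fields, and the dimension and metric names are all hypothetical and chosen only to mirror the examples in the paragraph above. It assumes a model can be reduced to a set of dimensions and a set of metrics.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class SemanticModel:
    """Hypothetical, simplified semantic model: named dimensions and metrics."""
    name: str
    owner: str
    dimensions: frozenset = field(default_factory=frozenset)
    metrics: frozenset = field(default_factory=frozenset)

    def conformed_dimensions(self, other):
        """Dimensions both models share, i.e. the keys a mashup can align on."""
        return self.dimensions & other.dimensions

    def combine(self, other, name, owner):
        """Build a new model that reuses the dimensions and metrics of both."""
        return SemanticModel(
            name=name,
            owner=owner,
            dimensions=self.dimensions | other.dimensions,
            metrics=self.metrics | other.metrics,
        )


# Common definitions owned by the central data team (illustrative names).
common = frozenset({"business_calendar", "product_hierarchy"})

# Business process models owned by domain experts, built on the common ones.
shipping = SemanticModel(
    name="shipping",
    owner="logistics",
    dimensions=common | {"carrier"},
    metrics=frozenset({"units_shipped"}),
)
billing = SemanticModel(
    name="billing",
    owner="finance",
    dimensions=common | {"customer"},
    metrics=frozenset({"invoiced_amount"}),
)

# A business user combines two domain models into a new mashup.
shared = shipping.conformed_dimensions(billing)
mashup = shipping.combine(billing, name="shipped_vs_billed", owner="analyst")
```

In this sketch the mashup can compare `units_shipped` with `invoiced_amount` only along the dimensions in `shared`, which is why centrally defined calendars and hierarchies matter.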

To support sharing and reuse, a semantic data platform provides role-based security and the sharing of model components for creating data products across multiple supply chain domains. Once this Semantic Model Repository is implemented, manufacturers can effectively shift to a hub-and-spoke model, where the semantic layer is the "hub," and the "spokes" are the individual semantic models at each supply chain domain.

By implementing a Semantic Model Repository to promote shared models with a consistent and compliant view across the supply chain, users can create data products that meet the needs of each supply chain domain all while working on a single source of truth.
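The hub-and-spoke arrangement with role-based sharing can be illustrated with a small in-memory sketch. Everything here is an assumption made for illustration: the `SemanticModelRepository` class, its method names, the role names, and the model definitions are hypothetical and do not represent AtScale's actual repository or security model.

```python
class SemanticModelRepository:
    """Hypothetical hub: stores shared models and enforces role-based reads."""

    def __init__(self):
        self._models = {}   # model name -> definition
        self._readers = {}  # model name -> set of roles allowed to read it

    def publish(self, name, definition, allowed_roles):
        """Register a model (a spoke) in the hub with its reader roles."""
        self._models[name] = dict(definition)
        self._readers[name] = set(allowed_roles)

    def get(self, name, role):
        """Return a copy of the model definition if the role may read it."""
        if name not in self._models:
            raise KeyError(name)
        if role not in self._readers[name]:
            raise PermissionError(f"role {role!r} may not read {name!r}")
        return dict(self._models[name])

    def visible_to(self, role):
        """List the names of models a role can reuse, in sorted order."""
        return sorted(n for n, roles in self._readers.items() if role in roles)


hub = SemanticModelRepository()

# A shared definition that every domain can build on.
hub.publish(
    "product_hierarchy",
    {"levels": ["category", "family", "sku"]},
    allowed_roles={"procurement", "logistics", "finance"},
)
# A domain model restricted to the team that owns it.
hub.publish(
    "supplier",
    {"metrics": ["spend", "on_time_rate"]},
    allowed_roles={"procurement"},
)

logistics_models = hub.visible_to("logistics")
procurement_models = hub.visible_to("procurement")
```

Each domain sees the shared definitions plus its own models, so every spoke works from the same hub while access stays scoped by role.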

A challenge that manufacturers face is the speed at which the business can surface new insights. In the fragmented architecture described earlier, there was an overdependence on IT to manually extract, transform and load data into a format that was ready to be analyzed by each supply chain domain. This approach often meant that actionable insights were slow to surface.

AtScale addresses this by autonomously defining, orchestrating, and materializing data structures based on the end user's query patterns. The entire lifecycle of these aggregates is managed by AtScale, and they are designed to improve the scale and performance of Databricks while also trying to anticipate future queries from BI users.

This approach radically simplifies data pipelines while hardening against disruption caused by changes to underlying data or responding to external factors that change the end user's query patterns.
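The general idea of deriving aggregates from query patterns can be sketched in a few lines of Python. This is a deliberately naive illustration and not AtScale's algorithm: the frequency threshold, the query log format, and the function names `recommend_aggregates` and `materialize` are all assumptions, and the sample rows are made up.

```python
from collections import Counter, defaultdict


def recommend_aggregates(query_log, min_hits=2):
    """Return dimension sets grouped on at least `min_hits` times."""
    counts = Counter(frozenset(q["group_by"]) for q in query_log)
    return [dims for dims, hits in counts.most_common() if hits >= min_hits]


def materialize(rows, dims, measure):
    """Pre-sum `measure` for every combination of the given dimensions."""
    ordered = sorted(dims)  # fixed column order for the aggregate keys
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[d] for d in ordered)
        totals[key] += row[measure]
    return dict(totals)


# Illustrative query log: the same grouping appears three times.
query_log = [
    {"group_by": ["supplier", "month"]},
    {"group_by": ["month", "supplier"]},
    {"group_by": ["plant"]},
    {"group_by": ["supplier", "month"]},
]

# Illustrative fact rows (made-up values).
rows = [
    {"supplier": "A", "month": "2023-01", "plant": "P1", "spend": 100.0},
    {"supplier": "A", "month": "2023-01", "plant": "P2", "spend": 50.0},
    {"supplier": "B", "month": "2023-01", "plant": "P1", "spend": 70.0},
]

recommended = recommend_aggregates(query_log)
aggregate = materialize(rows, recommended[0], "spend")
```

Here only the supplier-by-month grouping is frequent enough to be pre-computed, and later queries on those dimensions could read the small `aggregate` table instead of scanning every row.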

Try it now on Databricks Partner Connect!

Interested in seeing how this works? AtScale is now available for a free trial on Databricks Partner Connect, providing immediate access to semantic modeling, metrics design, and speed-of-thought query performance directly on Delta Lake using Databricks SQL.

Watch our panel discussion with Franco Patano, lead product specialist at Databricks, to learn more about how these tools can help you create an agile, scalable analytics platform.

Tredence has deep CPG and manufacturing supply chain experience, and its supply chain and other data and AI-focused solution accelerators can be found on the Databricks Brickbuilder website.

If you have any questions regarding AtScale or how to modernize and migrate your legacy EDW, BI, and reporting stack to Databricks and AtScale, visit the AtScale website.
