Cost Effective and Secure Data Sharing: The Advantages of Leveraging Data Partitions for Sharing Large Datasets

In today's business landscape, secure and cost-effective data sharing is more critical than ever for organizations looking to optimize their internal and external processes across the value chain.

However, traditional data-sharing solutions often come with significant limitations, such as requiring every collaborator you work with to be tied to a particular vendor or lacking interoperability and multicloud capabilities. To address these challenges, Databricks has developed Delta Sharing - the world's first open protocol for securely sharing a wide range of assets, including data, ML models, notebooks, and dashboards, across various clouds, regions, and data platforms.

Delta Sharing is natively integrated Unity Catalog, allowing you to manage, govern, audit, and track usage of the shared data on one platform. Unity Catalog is generally available on AWS, Azure and GCP.

Partition filtering is a powerful feature of Unity Catalog that allows data providers to securely share specific partitions of data. This feature not only enhances data privacy but also reduces the costs of sharing large datasets with multiple recipients. Partition filtering also improves data quality by ensuring that only relevant data is shared, improving the effectiveness of data sharing.

In this article, we will explore some of the best practices for sharing data at scale with partition filtering. By leveraging this powerful feature, organizations can greatly enhance the efficiency, security, and effectiveness of their data-sharing processes.

Basic Partition Sharing in Unity Catalog

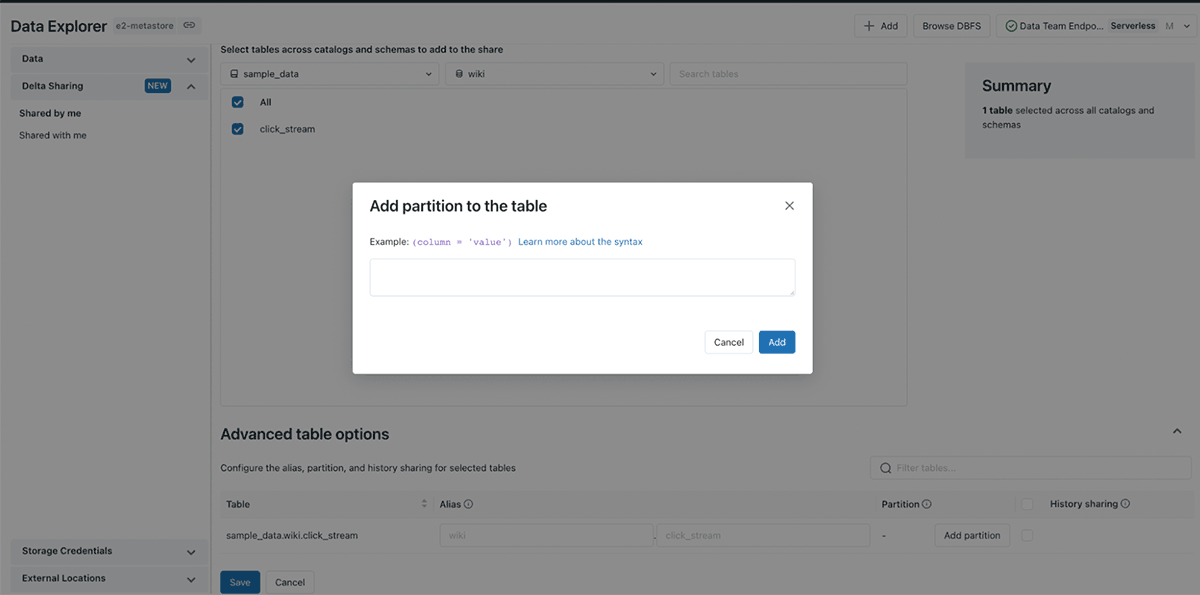

When you share data on Databricks, Unity Catalog allows you to not only share a table but also table partitions, meaning you can manage data sharing at a finer-granularity and share a subset of a table's data without creating any copies. An example usage in SQL API:

In this example, we are sharing a single partition of table `inventory` and potentially multiple partitions of table `retail` by providing a partition clause. You can also perform this task in the Data Explorer UI without spinning up any clusters.

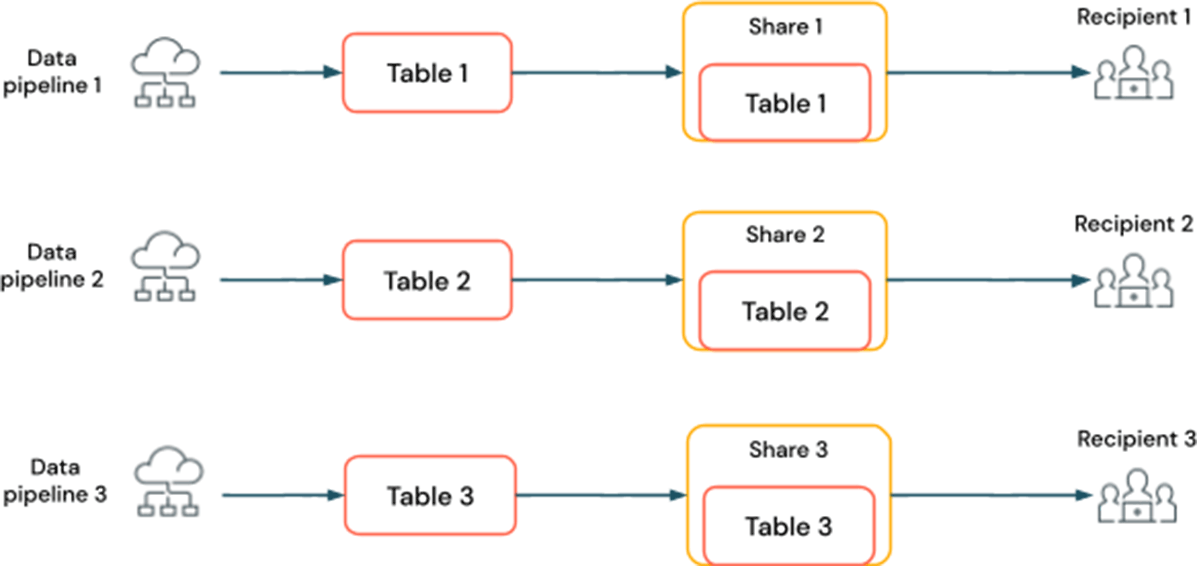

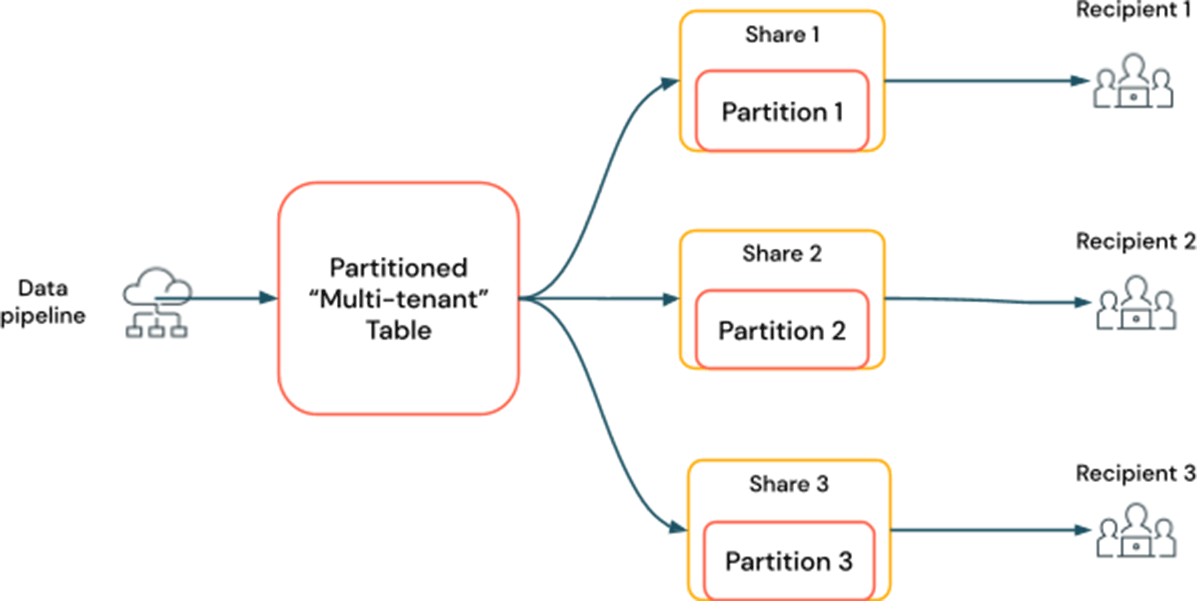

With partition sharing, you don't have to maintain separate tables for multiple data recipients if each recipient should only be able to consume certain partitions of a table. Instead, you can maintain a single, "multi-tenant" table and share its partitions by using the API described above. Data preparation and management now becomes more scalable because you don't have to create a table for each customer.

Gartner®: Databricks Cloud Database Leader

Parameterized Partition Sharing in Unity Catalog

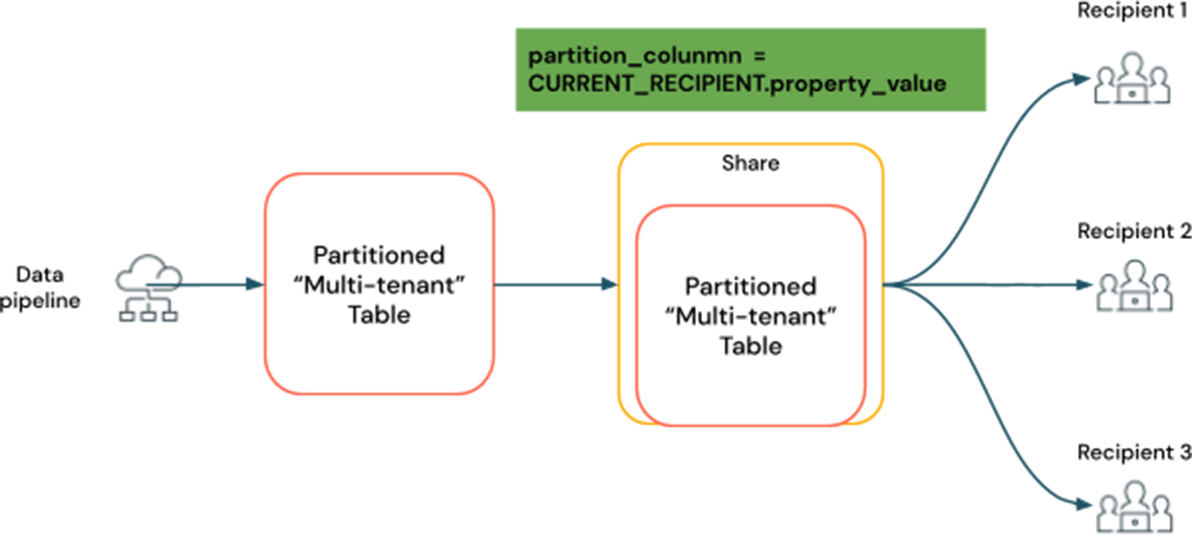

With basic partition sharing, you as a data provider would still need to provide exact values of the partition columns (for example, year = "2023"). Those values are static and can be hard to manage over the long term. To address that pain point, Unity Catalog now launches an advanced feature on partition sharing that allows you to reference a SQL function called `CURRENT_RECIPIENT` in the partition specification. The function is dynamically evaluated to a value of a data recipient's property and can be used to match partition columns.

Consider a case where you are maintaining a table to be shared with multiple business partners as data recipients. Each partner should only be able to access a portion of the table's data. To manage the data sharing more efficiently, you can partition the table based on an identifier of each partner, e.g. the partner's name, and use the parameterized partition sharing feature to share the table by composing a dynamically evaluated partition clause:

In this example, the recipients with access to the share will only be able to access the table partitions whose column matches its name. In the future if you are going to share the data with new recipients, the existing partition specification will continue working as long as the table contains partitions that match with the recipient.

Compared with basic partition sharing, parameterized partition sharing makes the data sharing task even more efficient and scalable. Not only can you now compose the partition clause in a more dynamic way, you can also leverage a single share to grant multiple recipients access to the same multi-tenant table instead of creating a share for each recipient.

Manage Recipient Properties

CURRENT_RECIPIENT is a function that is dynamically evaluated to the value of a recipient property. It supports two kinds of properties: built-in properties and custom properties.

Built-in properties are pre-defined for every recipient object created on Databricks Unity Catalog and can not be modified. When referencing a built-in property in the CURRENT_RECIPIENT() function, you need to specify a prefix of `databricks.`. Here are some example built-in properties:

- databricks.accountId: The ID of the data recipient's Databricks Account

- databricks.metastoreId: The ID of the data recipient's Unity Catalog Metastore

Custom properties are user-managed properties and they can be managed by the data sharing administrators. Similar to table properties, custom properties on recipient objects can be created, updated and deleted by the user. You can also think of custom properties as free-formed tags you can choose to attach to recipient objects. Below are some SQL examples of creating and updating a recipient object with custom properties. You can also read more about how to manage recipient properties in this documentation.

Custom properties are useful to avoid having to partition an already partitioned table to accommodate newly created recipient objects. For example, if you have a table partitioned on a column `partner_id` which contains partner identifiers defined by your own CRM system. In order to share the table with multiple partners, all you need to do is add the partner IDs as custom properties to their data recipient objects and reference it in the partition clause:

Conclusion and Future Work

Partition filtering is a powerful feature that allows you to share data more efficiently at scale. It is particularly useful when you have a single table containing data that's intended to be shared with multiple parties. With the recently launched parameterized partition sharing function, you can implement concise yet powerful and secure access control rules based on the attributes of the data recipients.

We are looking forward to expanding the capabilities of the partition filtering feature and even building support for dynamic filtering. Stay tuned!

If you already are a Databricks customer, follow the guides to get started with Delta Sharing in Unity Catalog (AWS | Azure| GCP). If you are not an existing Databricks customer, sign up for a free trial with a Premium or Enterprise workspace.

You can read more on how Databricks Lakehouse Platform can help you drive better data collaboration and sharing in the eBook- A New Approach to data sharing

Never miss a Databricks post

What's next?

Product

November 21, 2024/3 min read