5 tips to get the most out of your Databricks Assistant

Back in July, we released the public preview of the new Databricks Assistant, a context-aware AI assistant available in Databricks Notebooks, the SQL editor, and the file editor that makes you more productive within Databricks. With it, you can:

- Generate SQL or Python code

- Autocomplete code or queries

- Transform and optimize code

- Explain code or queries

- Fix errors and debug code

- Discover tables and data that you have access to

While the Databricks Assistant documentation provides high-level information and details on these tasks, generative AI for code generation is relatively new and people are still learning how to get the most out of these applications.

This blog post will discuss five tips and tricks to get the most out of your Databricks Assistant.

5 Tips for Databricks Assistant

1. Use the Find Tables action for better responses

Databricks Assistant leverages many different signals to provide more accurate and relevant results. Some of the context that Databricks Assistant currently uses includes:

- Code or queries in a notebook cell or Databricks SQL editor tab

- Table and column names

- Active tables, which are tables currently being referenced in a Notebook or SQL editor tab

- Previous inputs and responses in the current session (note that this context is notebook-scoped and will be erased if the chat session is cleared)

- The stack trace of the error, when debugging or fixing errors

Because Databricks Assistant draws on these different kinds of context, you can change how you interact with it to get better results. One of the easiest ways is to specify the tables you want Databricks Assistant to use as context when generating the response. You can manually specify the tables to use in the query or add those tables to your favorites.

In the example below, we want to ask Databricks Assistant about the largest point differential between the home and away teams in the 2018 NFL season. Let's see how Databricks Assistant responds.

We received this response because Databricks Assistant has no context about which tables to use to find this data. To fix this, we can ask Databricks Assistant to find those tables for us or manually specify the tables to use.

The phrase "Find tables related" prompts Databricks Assistant to enter search table mode. In this mode we can search for tables that mention NFL games, and clicking on a table opens a dropdown where we can get suggested SELECT queries, a table description, or the ability to use that table and query it in natural language. For our prompt, we want to use the "Query in natural language" option which will explicitly set the table for the next queries.

After selecting the table to use, our original prompt now produces a SQL query that gives us our answer of 44 points. By telling Databricks Assistant which table we want to use, we now get the correct answer.
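For illustration, here is a minimal sketch of the kind of query such a prompt can resolve to once a table is set. It runs through Python's built-in sqlite3 module so it is self-contained. The table name nfl_games, its columns, and the rows are hypothetical stand-ins rather than the table used in this post, so the number it prints is not the 44 points mentioned above.

```python
import sqlite3

# Hypothetical schema and toy rows, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE nfl_games ("
    "season INTEGER, home_team TEXT, away_team TEXT, "
    "home_score INTEGER, away_score INTEGER)"
)
conn.executemany(
    "INSERT INTO nfl_games VALUES (?, ?, ?, ?, ?)",
    [
        (2018, "AAA", "BBB", 31, 10),
        (2018, "CCC", "DDD", 3, 40),
        (2017, "AAA", "CCC", 50, 0),  # different season, filtered out below
    ],
)

# Largest absolute gap between home and away scores in one season.
query = """
    SELECT MAX(ABS(home_score - away_score)) AS largest_point_differential
    FROM nfl_games
    WHERE season = 2018
"""
largest_differential = conn.execute(query).fetchone()[0]
print(largest_differential)
```

Without a table to anchor on, the Assistant has to guess names like these, which is why setting the table first matters.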

2. Specify what the response should look like

The structure and detail that Databricks Assistant provides will vary from time to time, even for the same prompt. To get outputs in a structure or format that we want, we can tell Databricks Assistant to respond with varying amounts of detail, explanation, or code.

Continuing with our NFL theme, the query below lists the passing completion rate of quarterbacks who had over 500 attempts in a season, along with whether they're active or retired.

This query will make sense to the person who wrote it, but what about someone seeing it for the first time? It might help to ask Databricks Assistant to explain the code.

If we want a basic overview of this code without going into too much detail, we can ask Databricks Assistant to keep the amount of explanatory text to a minimum.

On the flip side, we can ask Databricks Assistant to explain this code line-by-line in greater detail (output cut off due to length).

Specifying what the response should look like also applies to code generation. Some prompts can be satisfied in several ways, such as creating visualizations. For example, if we wanted to plot the number of games each NFL official worked in the 2015 season, we could use Matplotlib, Plotly, or Seaborn. In this example, we want to use Plotly, so we name it explicitly in the prompt.

By changing how Databricks Assistant responds to our prompts and what is included, we can save time and get responses that meet our requirements.

3. Give examples of your row-level data values

Databricks Assistant inspects your table schema and column types to provide more accurate responses. However, it does not have access to row-level data.

Say we're working with this table containing data about players in the NFL Scouting Combine:

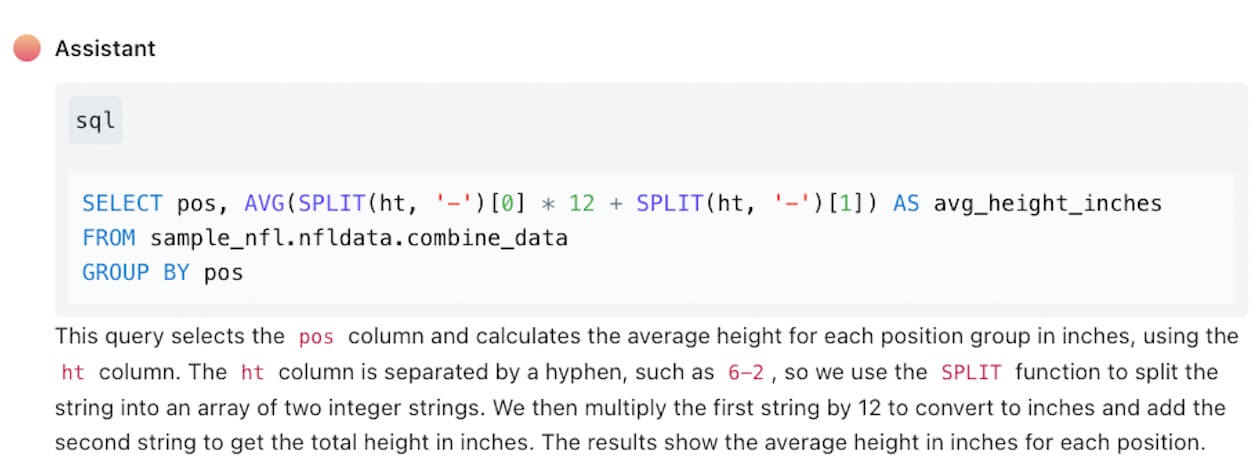

We can ask Databricks Assistant to get the average height for each position, and we will receive a SQL query that is syntactically correct and uses the right column names and table for our prompt.

However, when we run the query, we get an error. This is because the height column in our table is actually a string in a "feet-inches" format, such as 6-2. Databricks Assistant does not have access to row-level data, so it has no way of knowing this.

To fix this, we can give an example of what the data in the row looks like in either the table description or column comments.

Open up your table in Catalog Explorer, and add a line of sample data to your table or column comments:

“The height column (ht) is in string format and is separated by a hyphen. Example: ‘6-2’.”

Now when we ask the Assistant the same question, we see that it will correctly split the column:
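The Assistant's own output is not reproduced here. As a rough sketch of the logic involved, the snippet below splits a "feet-inches" string on the hyphen, converts it to inches, and averages by position. The function names, the position labels, and the sample rows are assumptions made for illustration; only the "6-2" format comes from the table described above.

```python
from collections import defaultdict


def height_to_inches(ht: str) -> int:
    """Convert a 'feet-inches' string such as '6-2' to total inches."""
    feet, inches = ht.split("-")
    return int(feet) * 12 + int(inches)


def average_height_by_position(rows):
    """Average height in inches per position for (position, ht) pairs."""
    heights = defaultdict(list)
    for position, ht in rows:
        heights[position].append(height_to_inches(ht))
    return {pos: sum(vals) / len(vals) for pos, vals in heights.items()}


# Made-up sample rows, not data from the Scouting Combine table.
sample_rows = [("QB", "6-2"), ("QB", "6-4"), ("WR", "5-11")]
averages = average_height_by_position(sample_rows)
print(averages)
```

Averaging the raw string column directly is what fails. The split-and-convert step is the part the column comment makes possible.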

4. Test code snippets by directly executing them in the Assistant panel

A large part of working with LLM-based tools is playing around with what types of prompts work best to get the desired result. If we ask Databricks Assistant to perform a task with a poorly worded prompt or a prompt with spelling errors, we may not get the best result, and instead need to go back and fix the prompt.

In the Databricks Assistant chat window, you can directly edit previous prompts and re-submit the request without losing any existing context.

But even with high-quality prompts, the response may not be correct. By running the code directly in the Assistant panel, you can test and quickly iterate on the code before copying it over to your notebook. Think of the Assistant panel as a scratchpad.

With our code updated or validated in the chat window, we can now move it to our notebook and use it in downstream use cases.

Bonus: aside from editing code in the Assistant window, you can also toggle between the existing code and the newly generated code to easily see the differences between the two.

5. Use Cell Actions inside Notebooks

Cell Actions allow users to interact with Databricks Assistant and generate code inside notebooks without the chat window. They include shortcuts for common tasks such as documenting, fixing, and explaining code.

Say we want to add comments (documentation) to a snippet of code in a notebook cell. We have two options: open the Databricks Assistant chat window and enter a prompt such as "add comments to my code", or use Cell Actions and select "/doc" as shown below.

Cell Actions also allow for custom prompts, not just shortcuts. Let's ask Databricks Assistant to format our code. By clicking on the same icon, we can enter our prompt and hit enter.

Databricks Assistant will show the generated output code as well as the differences between the original code and the suggested code. From there, we can choose to accept, reject, or regenerate the response. Cell Actions are a great way to generate code inside Databricks Notebooks without opening the side chat window.

Conclusion

Databricks Assistant is a powerful feature that makes the development experience inside Databricks easier, faster, and more efficient. By incorporating the above tips, you can get the most out of Databricks Assistant.

You can follow the instructions documented here to enable Databricks Assistant in your Databricks Account and workspaces.

Databricks Assistant, like any generative AI tool, can and will make mistakes. Because of this, be sure to review any code generated by Databricks Assistant before executing it. If you get responses that don't look right or are syntactically incorrect, use the thumbs-down icon to send feedback. Databricks Assistant is constantly learning and improving to return better results.