Announcing the General Availability of cross-cloud data governance

Access and govern all of your S3 data using Unity Catalog within a secure Azure Databricks environment

Summary

- Unity Catalog on Azure Databricks now supports direct access to AWS S3 data, allowing you to unify access controls, policies, and auditing across both S3 and ADLS.

- Teams can now configure and query S3 data directly in Azure Databricks without needing to migrate or duplicate data.

- The General Availability release supports read-only features like S3 external tables, volumes, and AWS IAM-based credentials within Azure Databricks.

We’re excited to announce that access to AWS S3 data from Azure Databricks through Unity Catalog, which enables cross-cloud data governance, is now Generally Available. As the industry's only unified and open governance solution for all data and AI assets, Unity Catalog empowers organizations to govern data wherever it lives, ensuring security, compliance, and interoperability across clouds. With this release, teams can directly configure and query AWS S3 data from Azure Databricks without needing to migrate or copy datasets. This makes it easier to standardize policies, access controls, and auditing across both ADLS and S3 storage.

In this blog, we'll cover two key topics:

- How Unity Catalog enables cross-cloud data governance

- How to access and work with AWS S3 data from Azure Databricks

What is cross-cloud data governance on Unity Catalog?

As enterprises adopt hybrid and cross-cloud architectures, they often face fragmented access controls, inconsistent security policies, and duplicated governance processes. This complexity increases risk, drives up operational costs, and slows innovation.

Cross-cloud data governance with Unity Catalog simplifies this by extending a single permission model, centralized policy enforcement, and comprehensive auditing across data stored in multiple clouds, such as AWS S3 and Azure Data Lake Storage, all managed from within the Databricks Platform.

Key benefits of leveraging cross-cloud data governance on Unity Catalog include:

- Unified governance – Manage access policies, security controls, and compliance standards from one place without juggling siloed systems

- Frictionless data access – Securely discover, query, and analyze data across clouds in a single workspace, eliminating silos and reducing complexity

- Stronger security and compliance – Gain centralized visibility, tagging, lineage, data classification, and auditing across all your cloud storage

By bridging governance across clouds, Unity Catalog gives teams a single, secure interface to manage and maximize the value of all their data and AI assets—wherever they live.

How it works

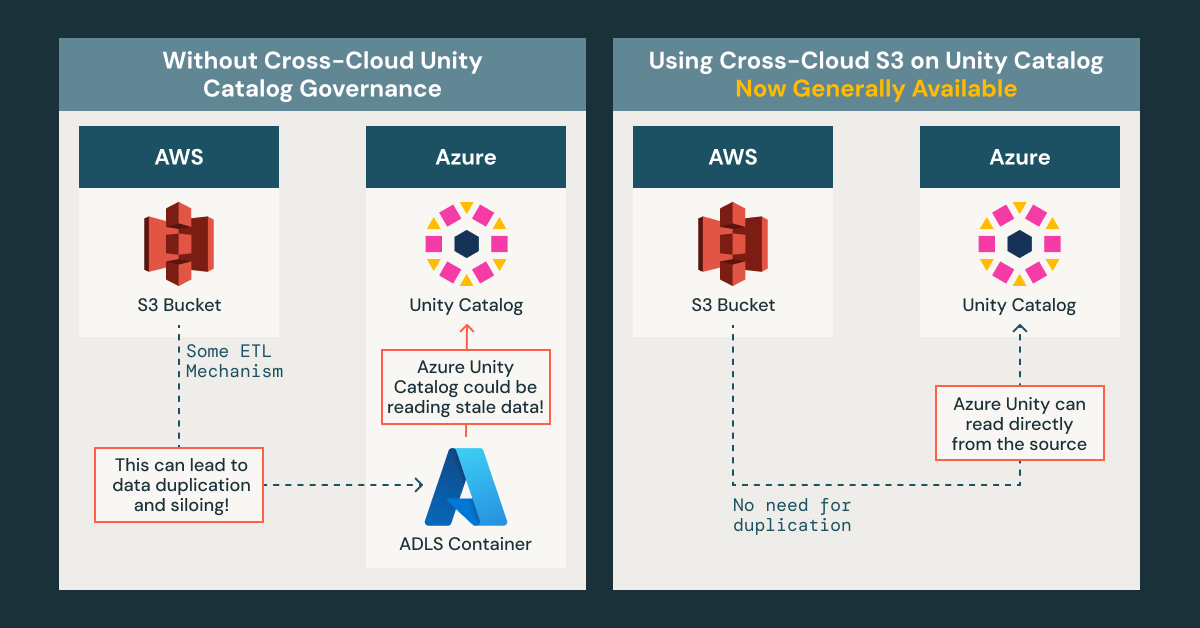

Previously, Unity Catalog on Azure Databricks only supported storage locations within ADLS. If you had data stored in an AWS S3 bucket but needed to access and process it with Unity Catalog on Azure Databricks, you first had to extract, transform, and load (ETL) that data into an ADLS container, a process that is both costly and time-consuming. That approach also left you maintaining duplicate copies of the data, which could become outdated.

With this GA release, you can now set up an external cross-cloud S3 location directly from Unity Catalog on Azure Databricks. This allows you to seamlessly read and govern your S3 data without migration or duplication.

You can configure access to your AWS S3 bucket in a few easy steps:

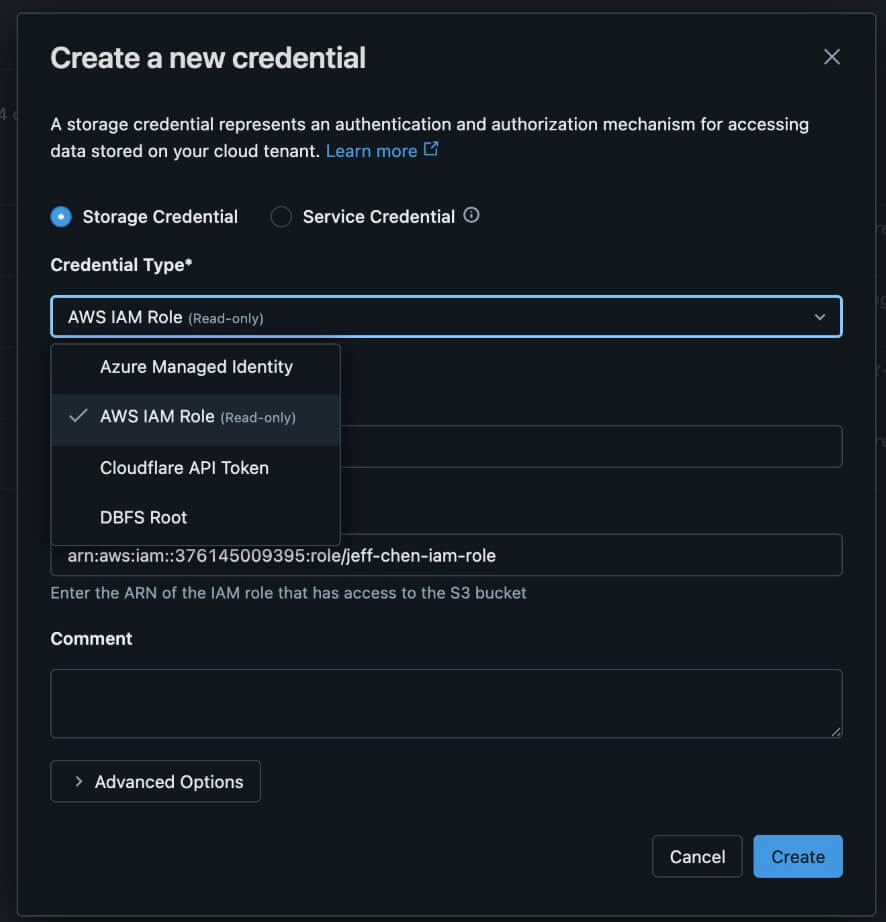

- Set up your storage credential and create an external location. Once your AWS IAM and S3 resources are provisioned, you can create your storage credential and external location directly in the Azure Databricks Catalog Explorer.

- To create your storage credential, navigate to Credentials within the Catalog Explorer. Select AWS IAM Role (Read-only), fill in the required fields, and add the trust policy snippet when prompted.

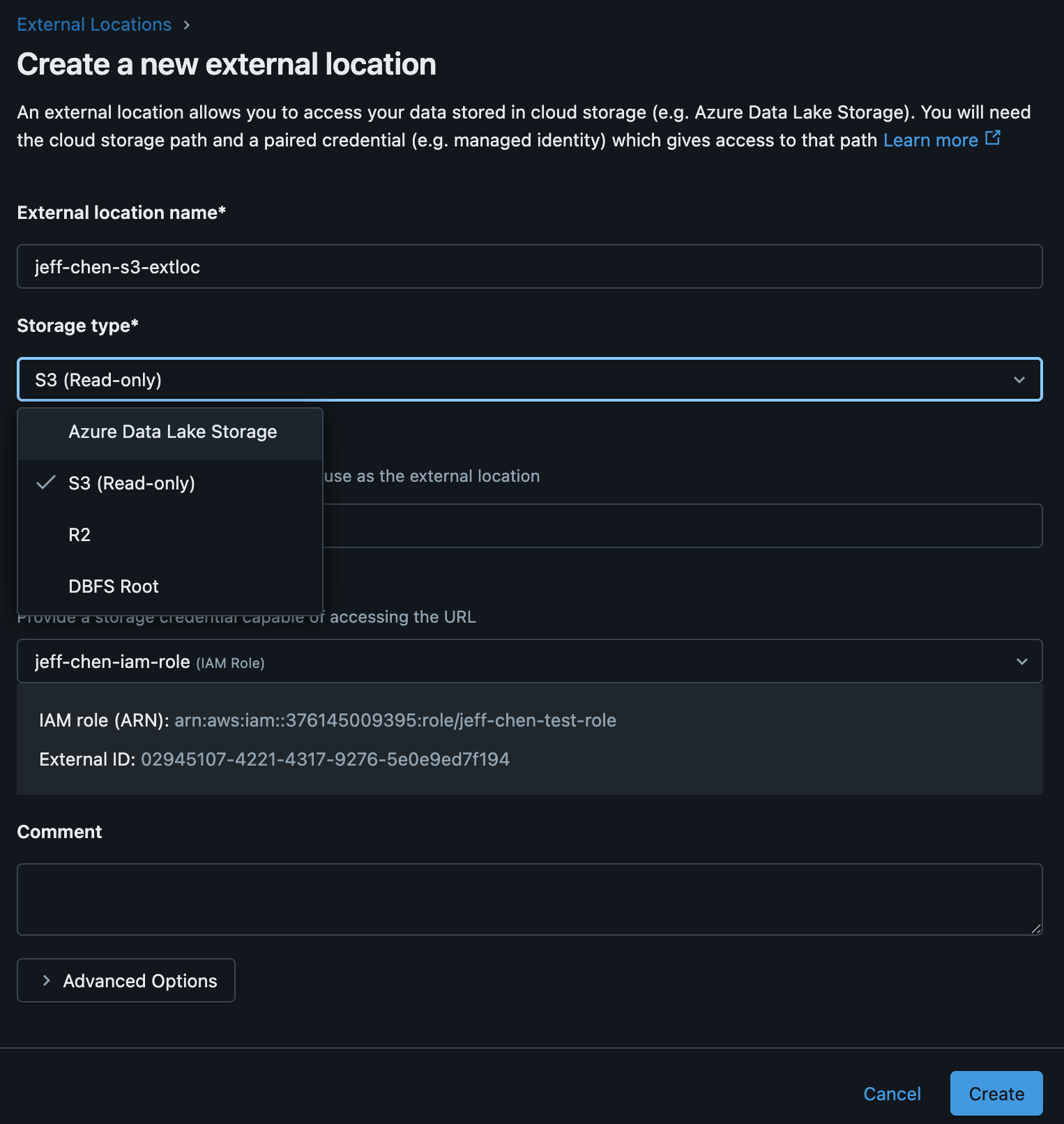

- To create an external location, navigate to External locations within the Catalog Explorer. Then, select the credential you just set up and complete the remaining details.

- To create your storage credential, navigate to Credentials within the Catalog Explorer. Select AWS IAM Role (Read-only), fill in the required fields, and add the trust policy snippet when prompted.

- Apply permissions. On the Credentials page within the Catalog Explorer, you can now see your ADLS and S3 data together in one place in Azure Databricks. From there, you can apply consistent permissions across both storage systems.

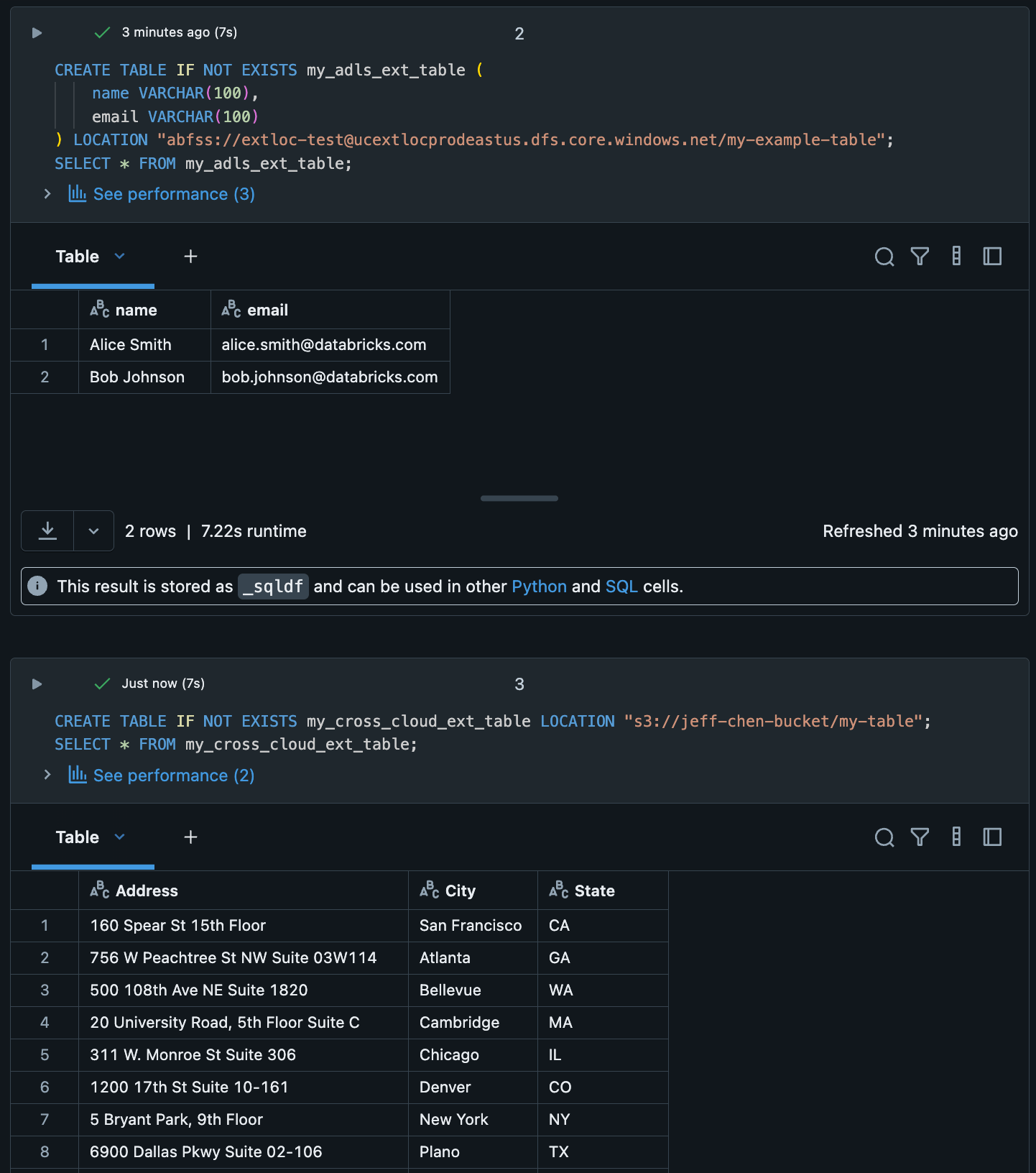

3. Start querying! You’re ready to query your S3 data directly from your Azure Databricks workspace.
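The steps above use the Catalog Explorer UI. For teams that prefer to script the same setup, the following is a minimal sketch that builds the Unity Catalog SQL statements for an external location and matching `READ FILES` grants. It assumes the storage credential already exists (created in Catalog Explorer as described above). All object names here (`sales_s3`, `sales_adls`, `aws_read_only_role`, `data-analysts`, and the bucket URL) are hypothetical placeholders, and the helper functions are illustrative rather than part of any Databricks library.

```python
def quote_ident(name: str) -> str:
    """Backtick-quote a SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def create_external_location_sql(name: str, url: str, credential: str) -> str:
    """Build a CREATE EXTERNAL LOCATION statement for an S3 URL."""
    # Keep the sketch safe: only accept plain s3:// URLs without quote characters.
    if not url.startswith("s3://") or "'" in url or "\\" in url:
        raise ValueError(f"expected a plain s3:// URL, got {url!r}")
    return (
        f"CREATE EXTERNAL LOCATION IF NOT EXISTS {quote_ident(name)} "
        f"URL '{url}' WITH (STORAGE CREDENTIAL {quote_ident(credential)})"
    )


def grant_read_files_sql(location: str, principal: str) -> str:
    """Build a GRANT READ FILES statement on an external location."""
    return (
        f"GRANT READ FILES ON EXTERNAL LOCATION {quote_ident(location)} "
        f"TO {quote_ident(principal)}"
    )


# Hypothetical names: one S3 location and one pre-existing ADLS location,
# both granted to the same group to illustrate a single permission model.
statements = [
    create_external_location_sql(
        "sales_s3", "s3://example-bucket/sales", "aws_read_only_role"
    ),
    grant_read_files_sql("sales_s3", "data-analysts"),
    grant_read_files_sql("sales_adls", "data-analysts"),
]

for statement in statements:
    print(statement)
    # In an Azure Databricks notebook you would run: spark.sql(statement)
```

The statements are generated as plain strings so they can be reviewed before being executed with `spark.sql` in a notebook or pasted into the SQL editor.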

What’s supported in the GA release?

With GA, we now support accessing external tables and volumes in S3 from Azure Databricks. Specifically, the following features are now supported in a read-only capacity:

- AWS IAM role storage credentials

- S3 external locations

- S3 external tables

- S3 external volumes

- S3 dbutils.fs access

- Delta Sharing of S3 data from Unity Catalog on Azure
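As a rough illustration of how the supported features might be used together, the sketch below builds SQL to register an existing S3 Delta directory as an external table and an S3 prefix as an external volume, and constructs the `/Volumes/...` path used to read files from that volume. The catalog, schema, table, volume, and bucket names are hypothetical, and the sketch assumes an S3 external location covering these paths is already in place. Because access is read-only, it only registers and reads data and never writes to S3.

```python
def check_s3_url(url: str) -> str:
    """Accept only plain s3:// URLs without quote characters."""
    if not url.startswith("s3://") or "'" in url or "\\" in url:
        raise ValueError(f"expected a plain s3:// URL, got {url!r}")
    return url


def create_external_table_sql(full_name: str, s3_url: str) -> str:
    """Register an existing Delta directory in S3 as an external table."""
    return (
        f"CREATE TABLE IF NOT EXISTS {full_name} "
        f"USING DELTA LOCATION '{check_s3_url(s3_url)}'"
    )


def create_external_volume_sql(full_name: str, s3_url: str) -> str:
    """Register an S3 prefix as an external volume."""
    return (
        f"CREATE EXTERNAL VOLUME IF NOT EXISTS {full_name} "
        f"LOCATION '{check_s3_url(s3_url)}'"
    )


def volume_path(catalog: str, schema: str, volume: str, *parts: str) -> str:
    """Build a /Volumes/<catalog>/<schema>/<volume>/... file path."""
    return "/".join(["", "Volumes", catalog, schema, volume, *parts])


# Hypothetical object names and bucket paths, for illustration only.
table_sql = create_external_table_sql(
    "main.s3_demo.orders", "s3://example-bucket/delta/orders"
)
volume_sql = create_external_volume_sql(
    "main.s3_demo.raw_files", "s3://example-bucket/raw"
)
events_path = volume_path("main", "s3_demo", "raw_files", "2024", "events.json")

print(table_sql)
print(volume_sql)
print(events_path)

# In an Azure Databricks notebook, the read-only usage would look like:
#   spark.sql(table_sql); spark.sql("SELECT * FROM main.s3_demo.orders LIMIT 10")
#   dbutils.fs.ls("s3://example-bucket/raw")   # list files in the S3 location
#   spark.read.json(events_path)               # read a file through the volume
```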

Getting Started

To try out cross-cloud data governance on Azure Databricks, check out our documentation on how to set up storage credentials for IAM roles for S3 storage on Azure Databricks. It’s important to note that your cloud provider may charge fees for accessing data external to their cloud services. To get started with Unity Catalog, follow our Unity Catalog guide for Azure.

Join the Unity Catalog product and engineering team at the Data + AI Summit, June 9–12 at the Moscone Center in San Francisco! Get a first look at the latest innovations in data and AI governance. Register now to secure your spot!
