Announcing Storage-Optimized Endpoints for Vector Search

Multi-billion Vector Scale, 7x lower cost

Summary

- Introducing storage-optimized Vector Search: Billion vector scale, up to 7x lower cost, 20x faster indexing, familiar SQL-like filtering.

- Unlock more value from unstructured data for AI: Build high-performance RAG, entity resolution, and semantic search systems on documents, images, and more.

- Enterprise-ready and easy to adopt: Backed by Unity Catalog governance and integrated with tools like AI Playground for rapid RAG prototyping and budget policies for cost management.

Most enterprises sit on a massive amount of unstructured data—documents, images, audio, video—yet only a fraction ever turns into actionable insight. AI-powered apps such as retrieval‑augmented generation (RAG), entity resolution, recommendation engines, and intent‑aware search can change that, but they quickly run into familiar barriers: hard capacity limits, ballooning costs, and sluggish indexing.

Today, we are announcing the Public Preview of storage-optimized endpoints for Mosaic AI Vector Search—our new Vector Search engine, purpose‑built for petabyte‑scale data. By decoupling storage from compute and leveraging Spark's massive scale and parallelism inside the Databricks Data Intelligence Platform, it delivers:

- Multi-billion vector capacity

- Up to 7x lower cost

- 20x faster indexing

- SQL‑style filtering

Best of all, it’s a true drop‑in replacement for the same APIs your teams already use, now super‑charged for RAG, semantic search, and entity resolution in real‑world production. Additionally, to further support enterprise teams, we’re also introducing new features designed to streamline development and improve cost visibility.

What’s new in storage-optimized Vector Search

Storage-optimized endpoints were built in direct response to what enterprise teams told us they need most: the ability to index and search across entire unstructured data lakes, infrastructure that scales without ballooning costs, and faster development cycles.

Multi-billion Vector Scale, 7x lower cost

Scale is no longer a limitation. Where our Standard offering supported a few hundred million vectors, storage optimized is built for billions of vectors at a reasonable cost, allowing organizations to run full-data-lake workloads without the need to sample or filter down. Customers running large workloads are seeing up to 7x lower infrastructure costs, making it finally feasible to run GenAI in production across massive unstructured datasets.

For comparison, storage optimized pricing would be ~$900/month for 45M vectors and ~$7K/month for 1.3B vectors. The latter represents significant savings compared to ~$47K/month on our standard offering.

Up to 20x Faster Indexing

Unlock rapid iteration cycles that were previously impossible. Our re-architecture powers one of the most requested improvements—dramatically faster indexing. You can now build a 1 billion vector index in under 8 hours, and smaller indices of 100M vectors or smaller are built in minutes.

“The indexing speed improvement with storage-optimized is huge for us. What previously took about 7 hours now takes just one hour, a 7-8x improvement." — Ritabrata Moitra, Sr. Lead ML Engineer, CommercelIQ

SQL-like Filtering

Easily filter records without learning unfamiliar syntax. Beyond performance and scale, we’ve also focused on usability. Metadata filtering is now done using intuitive, SQL-style syntax, making it simple to narrow down search results using criteria you’re already familiar with.

Same APIs, Brand New Backend

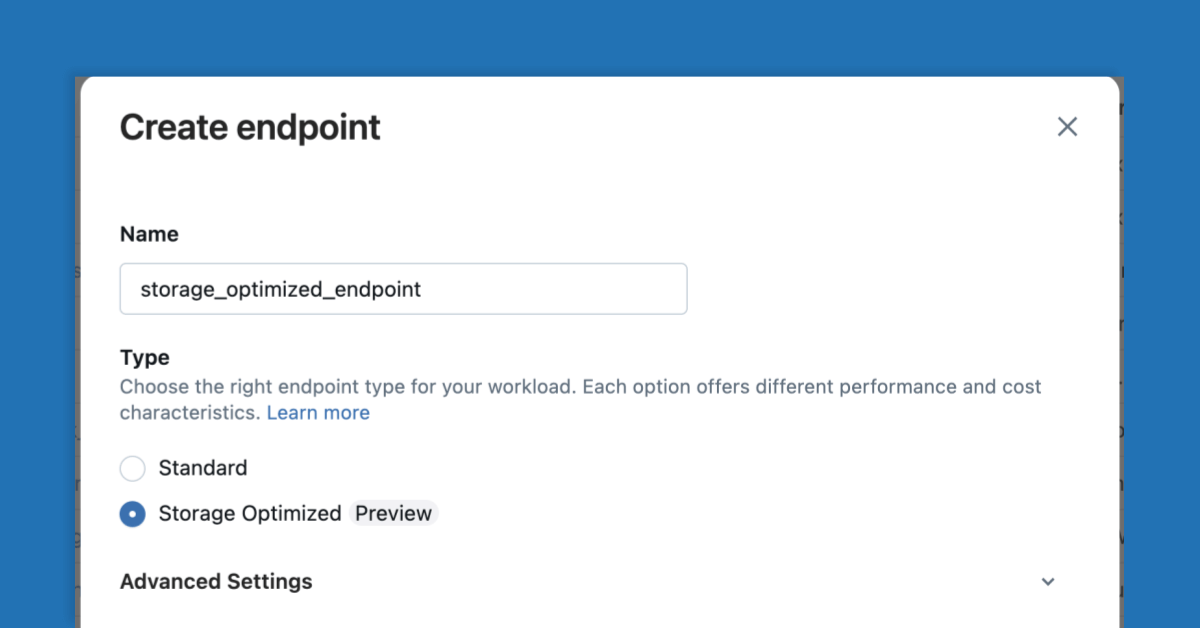

Migrating to storage-optimized endpoints is easy—just select it when creating a new endpoint, and create a new index on your table. The similarity search API remains the same, so there is no need for major code changes.

“We see storage-optimized Vector Search as essentially a drop-in replacement for the standard offering. It unlocks the scale we need to support hundreds of internal investors querying tens of millions of documents daily, without compromising on latency or quality.” — Alexandre Poulain, Director, Data Science & AI Team, PSP Investments

Because this capability is part of the Mosaic AI platform, it comes with full governance powered by Unity Catalog. That means proper access controls, audit trails, and lineage tracking across all your Vector Search assets—ensuring compliance with enterprise data and security policies from day one.

Enhanced Features to Streamline Your Workflow

To further support enterprise teams, we’re introducing new capabilities that make it easier to experiment, deploy, and manage Vector Search workloads at scale.

Teams can now test and deploy a chat agent backed by a Vector Search index as a knowledge base in two clicks – a process that used to require significant custom code. With direct integration in the Agent Playground now in Public Preview, select your Vector Search index as a tool, test your RAG agent, and export, deploy, and evaluate agents without writing a single line of code. This dramatically shortens the path from prototype to production.

Our improved cost visibility with endpoint budget policy tagging allows platform owners and FinOps teams to easily track and understand spend across multiple teams and use cases, allocate budgets, and manage costs as usage grows. More support for tagging indices and compute resources is coming soon.

Gartner®: Databricks Cloud Database Leader

This Is Just the Beginning

The release of storage-optimized endpoints is a major milestone, but we're already working on future enhancements:

- Scale-to-Zero: Automatically scale compute resources down when not in use to further reduce costs

- High QPS Support: Infrastructure to handle thousands of queries per second for demanding real-time applications

- Beyond Semantic Search: Efficient non-semantic retrieval capabilities for keyword-only workloads.

Our goal is simple: build the best vector search technology available, fully integrated with the Databricks Data Intelligence Platform you already rely on.

Start Building Today

Storage-optimized endpoints transform how you work with unstructured data at scale. With massive capacity, better economics, faster indexing, and familiar filtering, you can confidently build more powerful AI applications.

Ready to get started?

- Try Mosaic AI Vector Search for free: express setup gives you instant access and free serverless credits.

- Check out our documentation to see how it’s done!

Never miss a Databricks post

What's next?

Product

November 21, 2024/3 min read