Bringing the Lakehouse to R developers: Databricks Connect now available in sparklyr

We’re excited to announce that the latest release of sparklyr on CRAN introduces support for Databricks Connect. R users now have seamless access to Databricks clusters and Unity Catalog from remote RStudio Desktop, Posit Workbench, or any active R terminal or process. This update also opens the door for any R user to build data applications with Databricks using just a few lines of code.

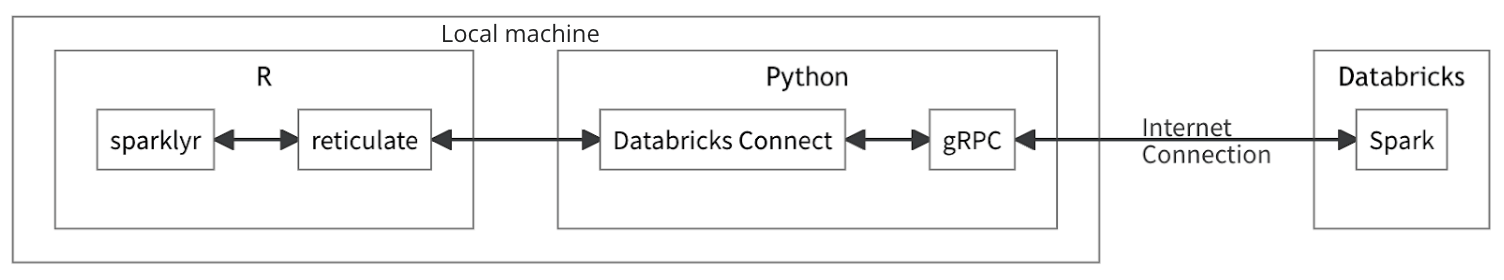

How sparklyr integrates with Python Databricks Connect

This release introduces a new backend for sparklyr via the pysparklyr companion package. pysparklyr provides a bridge for sparklyr to interact with the Python Databricks Connect API. It achieves this by using the reticulate package to interact with Python from R.

Architecting the new sparklyr backend this way makes it easier to deliver Databricks Connect functionality to R users by simply wrapping those that are released in Python. Today, Databricks Connect fully supports the Apache Spark DataFrame API, and you can reference the sparklyr cheat sheet to see which additional functions are available.

Getting started with sparklyr and Databricks Connect

To get up and running, first install the sparklyr and pysparklyr packages from CRAN in your R session.

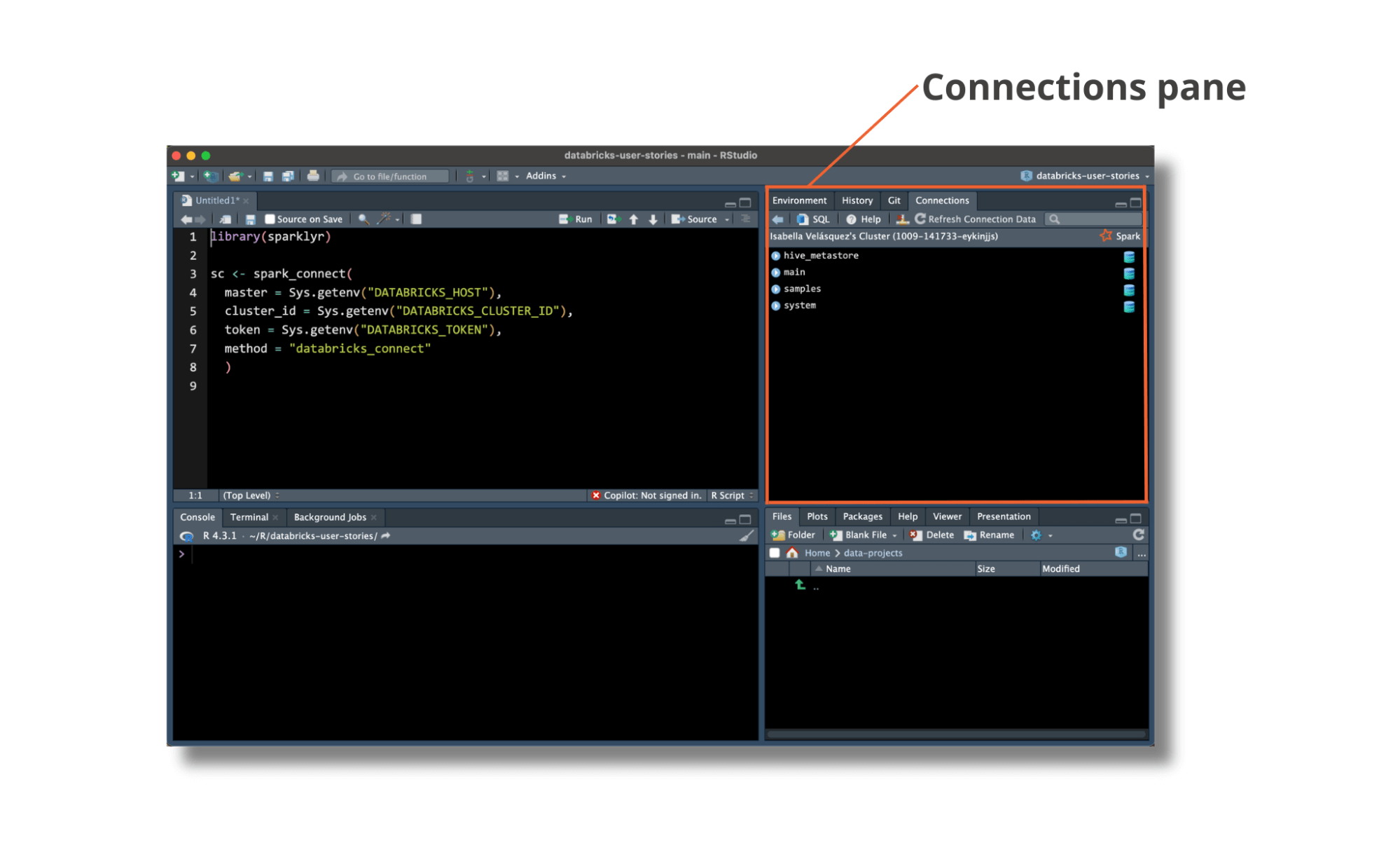

Now a connection can be established between your R session and Databricks clusters by specifying your Workspace URL (aka host), access token, and cluster ID. While you can pass your credentials as arguments directly to sparklyr::spark_connect(), we recommend storing them as environment variables for added security. In addition, when using sparklyr to make a connection to Databricks, pysparklyr will identify and help install any dependencies into a Python virtual environment for you.

More details and tips on the initial setup can be found on the official sparklyr page.

Accessing data in Unity Catalog

Successfully connecting with sparklyr will populate the Connections pane in RStudio with data from Unity Catalog, making it simple to browse and access data managed in Databricks.

Gartner®: Databricks Cloud Database Leader

Unity Catalog is the overarching governance solution for data and AI on Databricks. Data tables governed in Unity Catalog exist in a three-level namespace of catalog, schema, then table. By updating the sparklyr backend to use Databricks Connect, R users can now read and write data expressing the catalog.schema.table hierarchy:

Interactive development and debugging

To make interactive work with Databricks simple and familiar, sparklyr has long supported dplyr syntax for transforming and aggregating data. The newest version with Databricks Connect is no different:

In addition, when you need to debug functions or scripts that use sparklyr and Databricks Connect, the browser() function in RStudio works beautifully - even when working with enormous datasets.

Databricks-powered applications

Developing data applications like Shiny on top of a Databricks backend has never been easier. Databricks Connect is lightweight, allowing you to build applications that read, transform, and write data at scale without needing to deploy directly onto a Databricks cluster.

When working with Shiny in R, the connection methods are identical to those used above for development work. The same goes for working with Shiny for Python; just follow the documentation for using Databricks Connect with PySpark. To help you get started we have examples of data apps that use Shiny in R, and other frameworks like plotly in Python.

Additional resources

To learn more, please visit the official sparklyr and Databricks Connect documentation, including more information about which Apache Spark APIs are currently supported. Also, please check out our webinar with Posit where we demonstrate all of these capabilities, including how you can deploy Shiny apps that use Databricks Connect on Posit Connect.

Never miss a Databricks post

What's next?

Partners

December 11, 2024/15 min read

Introducing Databricks Generative AI Partner Accelerators and RAG Proof of Concepts

Customers

January 2, 2025/6 min read