Delta Sharing Top 10 Frequently Asked Questions, Answered - Part 1

Get answers to your top 10 questions about Delta Sharing, the most widely adopted open sharing protocol

Summary

- Delta Sharing lets you share live data and AI assets without copying files, eliminating duplication and ensuring recipients always have the freshest information.

- You can share tables, views, streaming tables, notebooks, models, and more, and recipients can read the data with everyday tools such as Python, Spark, and Power BI.

- Adoption is growing fast (300% year over year in active shares), and 40% of active shares are with recipients outside the Databricks ecosystem, showing that it works as an open, cross-platform data-sharing protocol.

Delta Sharing is seeing incredible momentum, with 300% year-over-year growth in active shares. These aren’t one-time file transfers; active shares represent sustained, ongoing collaboration, which shows that real value is being exchanged.

A key factor in this growth is the platform’s open philosophy. Delta Sharing lets customers share any data or AI asset with anyone, without friction. Today, 40% of active Delta Sharing shares are with recipients outside the Databricks ecosystem, which shows that Delta Sharing powers an open collaboration ecosystem that reaches across platforms and clouds.

In this post, we’ve gathered the top 10 questions people ask about Delta Sharing. Keep reading for an overview, what makes it different, the most common use cases, and what you need to get started.

1. What is Delta Sharing?

Delta Sharing is the most widely adopted open protocol for secure data sharing. It lets organizations exchange live data and AI assets across platforms and clouds.

2. What makes Delta Sharing different?

First, most sharing tools force you to copy data to a new destination, creating stale silos and expanding your attack surface. With Delta Sharing, recipients read live data at the source, so there’s nothing to move or duplicate.

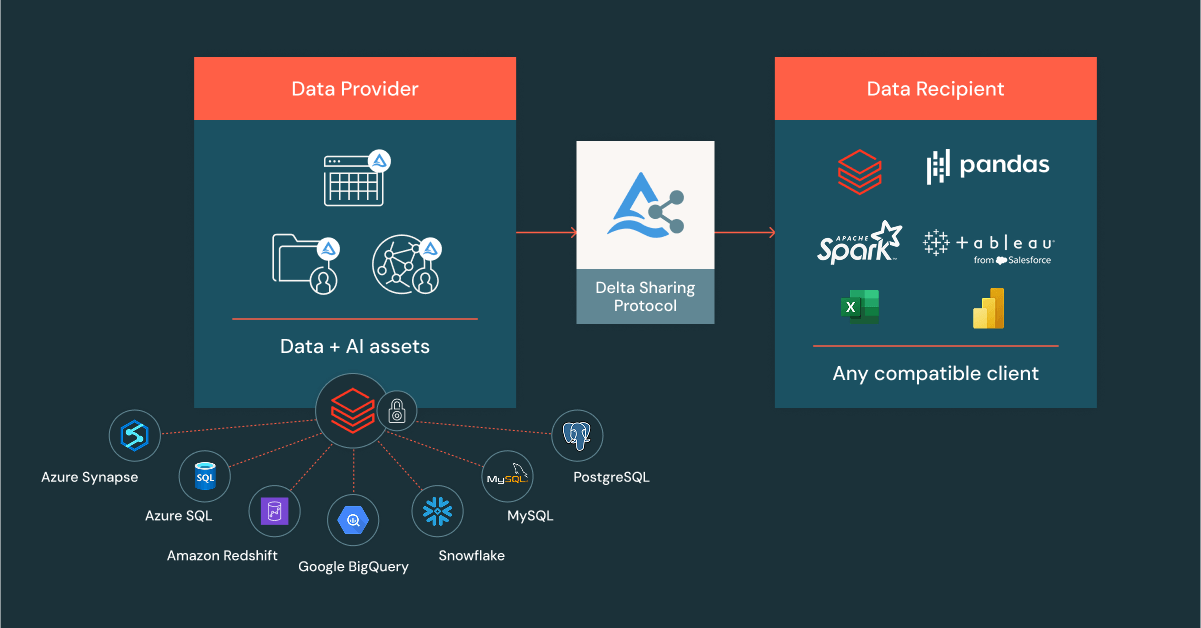

Second, because Delta Sharing is open source, it isn’t tied to a single ecosystem. You can share from your Databricks lakehouse or elsewhere, and recipients can consume the data whether they use Databricks or not.

Finally, recipients read the shared tables you authorize through standard, open connectors for Python, Apache Spark, Java, Power BI, and more.

Taken together, Delta Sharing provides platform-independent collaboration for data and AI across teams, tools, and clouds. You can work without lock-in, without copies, and without governance gaps.

3. Does Delta Sharing work with Iceberg? If I use Apache Iceberg, how can I leverage Delta Sharing?

Yes. Delta Sharing is fully compatible with Apache Iceberg, so you get the best of both worlds: Iceberg works seamlessly as both your data source and your destination, while you gain access to the widest collaboration ecosystem and the full power of Delta Sharing.

Delta Sharing makes sharing a first-class primitive for Iceberg. Features such as OIDC token federation, which lets open recipients authenticate with their own identity providers (IdPs), and Network Gateway, which simplifies and scales network configuration, give customers full interoperability across table formats.

Tables managed in Unity Catalog can now be shared with Iceberg clients such as Snowflake, Trino, and Spark. Additionally, foreign Iceberg tables managed by catalogs like Hive Metastore or AWS Glue can be federated into Unity Catalog and then shared through the same protocol. In both cases, you register the tables in Unity Catalog, create a share, and add relevant recipients either on or off Databricks. This ensures Iceberg users can collaborate with Databricks customers using live, governed data—without moving or duplicating it.
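The provider-side steps described above (register the table, create a share, grant it to a recipient) can be sketched as Databricks SQL statements. This minimal Python helper only builds the statement strings; in a Databricks notebook you would pass each one to `spark.sql()`. The share, table, and recipient names are hypothetical, the recipient is assumed to exist already, and you should check the `CREATE SHARE`, `ALTER SHARE`, and `GRANT ON SHARE` references for the exact syntax your workspace supports.

```python
def provider_share_statements(share: str, table: str, recipient: str) -> list[str]:
    """Build the SQL a provider might run to share one Unity Catalog table.

    `table` is a three-level name (catalog.schema.table) registered in Unity
    Catalog, for example a managed Iceberg table or a federated foreign table.
    All names are hypothetical placeholders.
    """
    if table.count(".") != 2:
        raise ValueError("expected a catalog.schema.table name")
    return [
        # 1. Create the share: a named collection of assets.
        f"CREATE SHARE IF NOT EXISTS {share}",
        # 2. Add the Unity Catalog table to the share.
        f"ALTER SHARE {share} ADD TABLE {table}",
        # 3. Give an existing recipient (on or off Databricks) read access.
        f"GRANT SELECT ON SHARE {share} TO RECIPIENT {recipient}",
    ]


if __name__ == "__main__":
    for stmt in provider_share_statements(
        "supply_chain_share", "main.logistics.shipments", "retail_partner"
    ):
        print(stmt)  # in a Databricks notebook: spark.sql(stmt)
```

Generating the statements separately from executing them keeps the sketch runnable outside Databricks and makes the three steps easy to review.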

4. Can I use Delta Sharing to share data with users who are not Databricks customers?

Yes. You can share data with any recipient, whether they use Databricks or another platform. Delta Sharing is an open protocol that supports both Databricks-to-Databricks sharing and open sharing. Recipients on any platform can use open connectors, including connectors for Apache Spark, pandas, and the Iceberg REST Catalog, as well as Power BI, Tableau, and Excel. With open sharing, you can share not only tables but also views, partitions, and change data feeds, so you can tailor the sharing experience even for external recipients.
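For open sharing, the recipient receives a credential (profile) file from the provider and points a connector at a URL of the form `<profile-path>#<share>.<schema>.<table>`. The sketch below assumes the open-source `delta-sharing` Python package (`pip install delta-sharing`), whose `load_as_pandas`, `load_table_changes_as_pandas`, and `SharingClient.list_all_tables` functions it calls; the package is imported inside the functions so the URL helper runs without it. The profile path and table coordinates are hypothetical, and reading the change data feed assumes the provider shared the table with history.

```python
from pathlib import Path


def table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Build the table URL format that the delta-sharing connectors expect."""
    return f"{profile_path}#{share}.{schema}.{table}"


def read_snapshot(url: str):
    """Load the current version of a shared table into a pandas DataFrame."""
    import delta_sharing  # open-source connector: pip install delta-sharing

    return delta_sharing.load_as_pandas(url)


def read_changes(url: str, starting_version: int):
    """Load the table's change data feed from a given version onward."""
    import delta_sharing

    return delta_sharing.load_table_changes_as_pandas(url, starting_version=starting_version)


if __name__ == "__main__":
    profile = "config.share"  # hypothetical credential file from the provider
    url = table_url(profile, "supply_chain_share", "logistics", "shipments")
    print(url)
    if Path(profile).exists():
        import delta_sharing

        # List every table the provider has granted, then read one of them.
        client = delta_sharing.SharingClient(profile)
        print(client.list_all_tables())
        print(read_snapshot(url).head())
```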

5. Why use Delta Sharing? What problems does it solve?

We looked at how thousands of customers use Delta Sharing and found four main ways it makes a difference for their businesses.

| Use Case | Description | Customer/Partner Example |
|---|---|---|
| Internal Sharing | Breaking down data silos within a company, across business units and clouds. | Mercedes-Benz uses it to create a unified data mesh for its global teams. |
| Peer-to-Peer Sharing | Securely collaborating with partners, suppliers, and customers. | Procore provides customers with direct access to critical project data for analytics. |
| Third-Party Data Licensing | Licensing and integrating external data and AI models. | S&P Global makes its market intelligence datasets available on the Databricks Marketplace. |
| SaaS Application Sharing | Connecting to data locked in various SaaS applications. | Oracle Autonomous Database, along with Oracle Fusion Data Intelligence, can now securely and seamlessly share data with Databricks and other platforms. |


6. If I already share data using SFTP, S3, Dropbox, or email, why would I need Delta Sharing?

If you’re still sharing data through SFTP, S3, Dropbox, or email, you’re exposing your organization to unnecessary risk and inefficiency. Consider the Finastra breach, in which attackers exploited an SFTP weakness and stole roughly 400 GB of sensitive data.

Those old-school methods may work, but they’re dated and fragile. You end up copying full files, juggling static passwords or keys that never expire, and creating countless out-of-sync copies that open up major security and compliance gaps. Delta Sharing replaces all of that with a modern, secure, and auditable approach. You can share just the specific tables, rows, or columns someone needs, as well as AI models, and the recipient always sees the latest version because there’s no extra copy to fall out of date.

Security is tighter, too. Instead of handing out static passwords or access keys, Delta Sharing issues short-lived tokens and can integrate with the identity system you already use, so you never have to manage a separate set of credentials. Every access to the data is logged in Unity Catalog, which makes auditing and compliance much easier.

If you’re serious about protecting sensitive data and simplifying collaboration, Delta Sharing isn’t a “nice to have”; it’s the baseline for secure data exchange today.

Check out How Kythera Labs, a Databricks Built-On Partner, saves $2M+/year using Delta Sharing

7. What kinds of assets can I share using Delta Sharing?

You can share almost any kind of data or AI asset with Delta Sharing, and that breadth is unusual. Shareable assets include tables (and table partitions), streaming tables, managed Iceberg tables, foreign schemas and tables, views (including dynamic views for row and column filtering), materialized views, volumes, notebooks, and AI models. If you share an entire schema (database), everything in it (tables, views, volumes, models) is shared immediately, and any assets added to it later also become available to recipients. All assets in a share come from a single Unity Catalog metastore, which keeps sharing clean and organized.
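As a hedged illustration of how different asset types land in one share, the helper below maps asset kinds to `ALTER SHARE ... ADD` clauses. The keyword list (TABLE, VIEW, MATERIALIZED VIEW, SCHEMA, VOLUME, MODEL) reflects Databricks SQL as we understand it; notebooks are left out of this sketch, all object names are hypothetical, and you should verify the syntax against the `ALTER SHARE` reference before relying on it.

```python
# Asset kinds this sketch supports, mapped to the ALTER SHARE ... ADD keyword.
ADD_KEYWORDS = {
    "table": "TABLE",
    "view": "VIEW",
    "materialized_view": "MATERIALIZED VIEW",
    "schema": "SCHEMA",
    "volume": "VOLUME",
    "model": "MODEL",
}


def add_asset_statement(share: str, kind: str, name: str) -> str:
    """Return the SQL that adds one Unity Catalog asset to an existing share."""
    try:
        keyword = ADD_KEYWORDS[kind]
    except KeyError:
        raise ValueError(f"unsupported asset kind: {kind!r}") from None
    return f"ALTER SHARE {share} ADD {keyword} {name}"


if __name__ == "__main__":
    share = "partner_share"  # hypothetical share name
    assets = [
        ("schema", "main.analytics"),  # whole schema: assets added later are shared too
        ("view", "main.reporting.eu_orders_v"),  # e.g. a dynamic view filtering rows/columns
        ("volume", "main.raw.landing_files"),
        ("model", "main.ml.churn_model"),
    ]
    for kind, name in assets:
        print(add_asset_statement(share, kind, name))  # spark.sql(...) in a notebook
```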

8. How does Delta Sharing keep the data safe when a provider shares it with a recipient?

Delta Sharing uses a zero-trust, token-based approach. When a recipient requests data, the sharing server checks permissions in Unity Catalog, then returns a short-lived, read-only token or a pre-signed URL that points directly to the storage, so no permanent credentials ever leave the provider. All traffic is encrypted with TLS, and every request is logged for audit. For Databricks-to-Databricks sharing, the handshake is handled automatically; external recipients can authenticate with simple credential files or OIDC federation, but the same model of temporary, encrypted, fully audited access applies. This ensures that only the right people can see the right data, and only for a limited time. Read How Delta Sharing Enables Secure End-to-End Collaboration for a deep dive.
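The short-lived credential model is visible on the recipient side: in the open Delta Sharing protocol, a profile (credential) file carries a `bearerToken` and an optional `expirationTime`. The sketch below checks whether a token is close to expiring so a recipient can request a fresh credential before it lapses. The endpoint and token values are hypothetical placeholders, the one-hour refresh margin is an arbitrary choice, and the parser only handles UTC timestamps ending in `Z`.

```python
import json
from datetime import datetime, timedelta, timezone


def parse_expiration(value: str) -> datetime:
    """Parse a UTC timestamp such as "2021-11-12T00:12:29.0Z" (Z suffix only)."""
    text = value.rstrip("Z")
    fmt = "%Y-%m-%dT%H:%M:%S.%f" if "." in text else "%Y-%m-%dT%H:%M:%S"
    return datetime.strptime(text, fmt).replace(tzinfo=timezone.utc)


def needs_refresh(profile_json: str, now: datetime, margin: timedelta = timedelta(hours=1)) -> bool:
    """True if the profile's token expires within `margin` of `now` (or already has)."""
    profile = json.loads(profile_json)
    expiry = profile.get("expirationTime")
    if expiry is None:
        return False  # no expiry recorded; rely on the provider's rotation policy
    return parse_expiration(expiry) - now <= margin


if __name__ == "__main__":
    example = json.dumps({
        "shareCredentialsVersion": 1,
        "endpoint": "https://sharing.example.com/delta-sharing/",  # hypothetical
        "bearerToken": "<token>",
        "expirationTime": "2021-11-12T00:12:29.0Z",
    })
    now = datetime(2021, 11, 11, 23, 30, tzinfo=timezone.utc)
    print(needs_refresh(example, now))  # True: about 42 minutes left
```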

9. That sounds good. What are the cost implications of using Delta Sharing?

Getting started with Delta Sharing costs nothing: there’s no charge to set up, configure, or share a dataset or AI model. You only see a bill when someone actually queries the data, and even then, the fees break down into three clear pieces.

First, the compute cost (the processing power needed to run the query) is usually paid by the recipient running the query, though the data provider can choose to cover it if that makes more sense.

Second, there’s the egress cost for moving data out of the provider’s cloud; the newer Cloudflare R2 option (now GA) offers zero egress fees, so you can avoid that charge altogether.

Third, storage cost only matters if you decide to keep a replicated copy; live, on-the-fly access doesn’t require extra space.

Here’s a Databricks-to-Databricks example: imagine a supplier on AWS shares a materialized view with a retailer on Azure. When the shared data moves out of AWS, the supplier pays egress, and when the retailer runs a query on the shared data, the retailer pays compute for the query.
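The three cost components and who typically pays them can be captured in a small model. This is a simplified sketch of the rules described above, not Databricks billing logic: it ignores actual prices, assumes egress applies whenever provider and recipient locations differ, treats `served_from_r2` as a flag for the zero-egress option, and uses hypothetical location strings.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ShareScenario:
    """Hypothetical description of one sharing relationship."""
    provider_location: str               # e.g. "aws:us-east-1"
    recipient_location: str              # e.g. "azure:eastus"
    provider_pays_compute: bool = False  # provider may opt to cover query compute
    served_from_r2: bool = False         # assumption: zero-egress storage option in use
    replica_owner: Optional[str] = None  # "provider", "recipient", or None for live access


def who_pays(s: ShareScenario) -> Dict[str, Optional[str]]:
    """Map each cost component to the party that pays it (None means no charge)."""
    crosses_boundary = s.provider_location != s.recipient_location
    return {
        "setup": None,  # creating and configuring shares is free
        "compute": "provider" if s.provider_pays_compute else "recipient",
        "egress": "provider" if crosses_boundary and not s.served_from_r2 else None,
        "storage": s.replica_owner,  # only when someone keeps a replicated copy
    }


if __name__ == "__main__":
    # The supplier (AWS) to retailer (Azure) example from the text.
    scenario = ShareScenario("aws:us-east-1", "azure:eastus")
    print(who_pays(scenario))
    # {'setup': None, 'compute': 'recipient', 'egress': 'provider', 'storage': None}
```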

10. What do I need to start using Delta Sharing?

The requirements depend on whether you are sharing with a Databricks recipient or a non-Databricks recipient.

- Databricks-to-Databricks (D2D): This model is for sharing data between Databricks workspaces. Both the provider and the recipient need a Unity Catalog metastore to manage the shared data. Read how Aon Reinsurance Solutions is Leveraging Databricks Delta Sharing to Help Make Better Decisions

- Databricks-to-Open (D2O): This model is for sharing data from a Databricks workspace to any external tool or platform. The provider needs a Unity Catalog-enabled workspace, and the recipient can connect using open connectors like Spark, Pandas, or Power BI. See how HP’s 3D Print division is enabling customers to monitor equipment performance in near real-time using D2O

External sharing must be enabled, and organizations should track governance and potential cross-cloud egress costs.
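The practical difference between the two models shows up when the provider creates the recipient. In Databricks SQL, a D2D recipient is created with the recipient metastore’s sharing identifier (`USING ID`), while an open-sharing recipient is created without one and is given a token-based activation link. The helper below only builds the statement text; the recipient names and sharing identifier are hypothetical placeholders, so check the `CREATE RECIPIENT` reference for your workspace.

```python
from typing import Optional


def create_recipient_statement(name: str, sharing_id: Optional[str] = None) -> str:
    """Build CREATE RECIPIENT SQL for D2D (sharing_id given) or D2O (no sharing_id).

    sharing_id is the recipient metastore's sharing identifier, of the form
    "<cloud>:<region>:<metastore-uuid>".
    """
    stmt = f"CREATE RECIPIENT IF NOT EXISTS {name}"
    if sharing_id is not None:
        # Databricks-to-Databricks: bind the recipient to the other metastore.
        if sharing_id.count(":") != 2:
            raise ValueError("expected <cloud>:<region>:<metastore-uuid>")
        stmt += f" USING ID '{sharing_id}'"
    # Without USING ID this is an open-sharing (token-based) recipient; the
    # provider then sends the recipient its activation link.
    return stmt


if __name__ == "__main__":
    print(create_recipient_statement(
        "azure_retailer", "azure:eastus:11111111-2222-3333-4444-555555555555"
    ))
    print(create_recipient_statement("external_analytics_vendor"))
```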

Ready to get started?

- Read the Delta Sharing documentation

- Watch the “Delta Sharing in Action: Architecture and Best Practices” session from Data and AI Summit 2025

- Watch the “Securing Data Collaboration: A Deep Dive Into Security, Frameworks, and Use Cases” session from Data and AI Summit 2025 to learn more about Delta Sharing security, frameworks, and use cases

Stay tuned for the next set of questions, where we’ll explore topics including security, how Delta Sharing powers products like Clean Rooms and Databricks Marketplace, and other advanced features.
