How KPMG uses Delta Sharing to access and audit tens of billions of transactions

A practical look at improving audits in the energy supply sector.

Summary

- KPMG navigated performance and productivity challenges in auditing big data from a major UK energy supplier

- Delta Sharing helped KPMG receive and analyze large datasets containing tens of billions of entries across clouds

- This improved our data analytics results by 15 percentage points in some instances

Seamless and secure access to data has become one of the biggest challenges facing organizations. Nowhere is this more evident than in technology-led external audits, where analyzing 100% of transactional data is fast becoming the gold standard. These audits involve reviewing tens of billions of lines of financial and operational billing data.

To deliver meaningful insights at scale, analysis must not only be robust but also efficient — balancing cost, time, and quality to achieve the best outcomes in tight timeframes.

Recently, in collaboration with a major UK energy supplier, KPMG used Delta Sharing in Databricks to overcome performance bottlenecks, improve efficiency, and enhance audit quality. This blog discusses our experience, the key benefits, and the measurable impact of Delta Sharing on our audit process.

The Business Challenge

To meet public financial reporting deadlines, we needed to access and analyze tens of billions of lines of the audited entity's billing data within a short audit window.

Historically, we relied on the audited entity's analytics environment hosted in AWS PostgreSQL. As data volumes grew, the setup showed its limits:

- Data Volume: Our approach required looking beyond the audit period to analyze historical data that was essential for the routine. As this dataset grew significantly year on year, it eventually exceeded AWS PostgreSQL limits. This forced us to split the data across two separate databases, introducing additional operational overhead and cost.

- Data Transfer: Moving and copying data from a production environment to a ‘ring-fenced’ analytics PostgreSQL database delayed the start of our work and left us with less fresh data and less agility.

- Query Performance Degradation: Although PostgreSQL supports parallel query execution, that parallelism is confined to a single server, so our largest queries could not scale out across more compute, leading to suboptimal performance.

- Resourcing: Because access to the entity’s analytics environment was limited to their assets, we faced challenges in making the best use of our people and quickly onboarding new team members.

Given these constraints, we needed a scalable, high-performance solution that would allow efficient access to and processing of data without compromising security or governance, enabling reduced ‘machine time’ for quicker outcomes.

Why Delta Sharing?

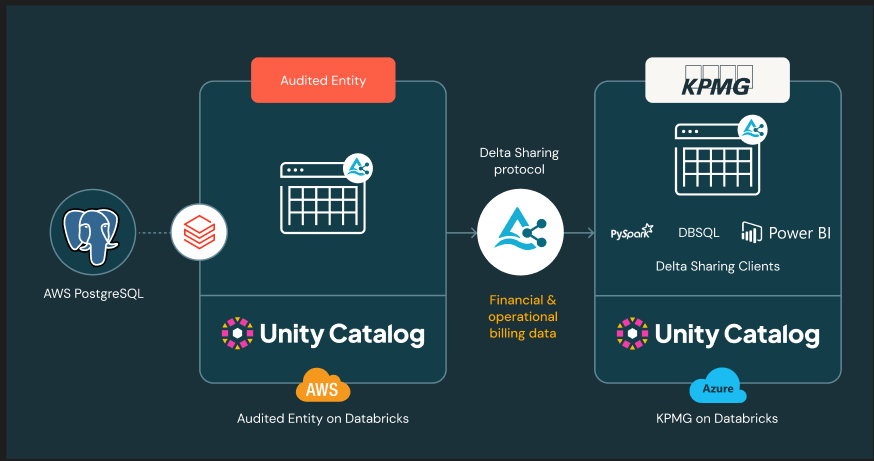

Delta Sharing, an open data-sharing protocol, provided the ideal solution by enabling secure and efficient cross-platform data exchange between KPMG and the audited entity without duplication.

Compared to extending PostgreSQL, Databricks offered several distinct advantages:

- Handles Large Datasets: Delta Sharing is designed to handle petabyte-scale data, eliminating PostgreSQL's performance limitations.

- Lower costs: Delta Sharing lowered storage and compute costs by reducing the need for large-scale data replication and transfers.

- Flexibility: Shared data could be accessed in Databricks using PySpark, SQL, and BI tools such as Power BI, facilitating seamless integration into our audit deliverables.

- Delta Tables: We could “time travel” to past states of data. This was valuable for checking historical points that were previously lost in the client’s data model.
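
As an illustration of the "time travel" point above, the following minimal sketch builds a Delta Lake time-travel query using the `VERSION AS OF` and `TIMESTAMP AS OF` clauses. The table name, the date, and the helper `time_travel_query` are hypothetical and not taken from the engagement.

```python
from datetime import date
from typing import Optional


def time_travel_query(table: str, as_of: Optional[date] = None,
                      version: Optional[int] = None) -> str:
    """Build a Delta Lake time-travel SELECT for a table.

    Exactly one of `as_of` (a calendar date) or `version` (a Delta table
    version number) must be given.
    """
    if (as_of is None) == (version is None):
        raise ValueError("pass exactly one of as_of or version")
    if version is not None:
        return f"SELECT * FROM {table} VERSION AS OF {int(version)}"
    return f"SELECT * FROM {table} TIMESTAMP AS OF '{as_of.isoformat()}'"


# Hypothetical three-level name; the real catalog, schema and table differ.
BILLING_TABLE = "audit_share.billing.meter_readings"

year_end_sql = time_travel_query(BILLING_TABLE, as_of=date(2024, 3, 31))
print(year_end_sql)
```

In a Databricks notebook the resulting string could be passed to `spark.sql(year_end_sql)`. For a table received through Delta Sharing, this is likely to work only if the provider shared the table with its history.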

Implementation Approach

We introduced Delta Sharing in a way that did not disrupt ongoing audit work:

- Data Sharing: We gave the entity a list (in JSON format) of the tables and views we needed. They used Lakeflow Jobs and Delta Sharing to make these available to us directly in our Databricks environment. The audited entity provided access by sharing a key, granting us secure access to these pre-agreed datasets across AWS and Azure with minimal effort. Delta Sharing handled this cross-cloud exchange securely, without copying or moving the data between platforms.

- Integration with Unity Catalog: Unity Catalog gave us a single place to manage permissions, apply governance policies, and maintain full visibility of who accessed what data.

- Scheduled Data Refreshes: During key audit cycles, data was refreshed to align with financial reporting timelines.

- Performance Optimization: Once inside Databricks, we reworked queries from PostgreSQL to Spark SQL and PySpark. With Delta Sharing providing governed, ready-to-use data, we focused on optimizing performance rather than managing data movement.
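
The data-sharing step above can be sketched roughly as follows. This is not our actual configuration: the JSON layout, the share, schema and table names, and the profile path are all hypothetical. It assumes the open-source `delta-sharing` Python connector, which addresses a table as `<profile-file>#<share>.<schema>.<table>`, where the profile file holds the endpoint and the bearer token (the "key").

```python
import json

# Hypothetical request: the share, schema and table names are placeholders.
REQUEST_JSON = """
{
  "share": "audit_share",
  "tables": [
    {"schema": "billing", "name": "meter_readings"},
    {"schema": "billing", "name": "invoices"}
  ]
}
"""


def table_urls(request_json: str, profile_path: str) -> list[str]:
    """Turn the agreed table list into Delta Sharing table URLs."""
    request = json.loads(request_json)
    share = request["share"]
    return [
        f"{profile_path}#{share}.{t['schema']}.{t['name']}"
        for t in request["tables"]
    ]


def load_table(url: str):
    """Read one shared table into pandas (needs `pip install delta-sharing`)."""
    import delta_sharing  # imported lazily so the rest runs without it

    return delta_sharing.load_as_pandas(url)


# Hypothetical location of the profile file received from the provider.
urls = table_urls(REQUEST_JSON, "/secure/entity.share")
for url in urls:
    print(url)
```

At the volumes described here, pandas would not be a realistic target. The connector's `delta_sharing.load_as_spark(url)` or a Unity Catalog table reference would be the more plausible route.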


Measurable Impact

We used Delta Sharing to access and analyze billions of meter readings across millions of the entity's customer accounts. We observed significant improvements across multiple KPIs:

- Faster queries: Delta Sharing allowed us to use more computing power for big data tasks. Some of our most complex queries finished over 80% faster—for example, going from 14.5 hours to 2.5 hours—compared to our old PostgreSQL process.

- Improved Audit Quality: By spending less time waiting for machines, we had more time to focus on exceptions, unusual patterns and complex edge cases. This improved our data analytics results by 15 percentage points in some instances and reduced the burden of any residual sampling.

- Cost Savings: By using Delta Sharing, we avoided making extra copies of the data. This meant we only stored and processed what was needed, which brought down both storage and compute costs.

- Quicker access: Since the data was provisioned through Delta Sharing, there was less time wasted waiting for it to be ready, allowing us to start work sooner.

- Easier Team Onboarding: New team members could be onboarded smoothly, and we could draw on a broader mix of coding skills across SQL and PySpark.
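
For readers who want to check the "over 80% faster" figure, the arithmetic behind the 14.5-hour and 2.5-hour example is worked through below. The helper name `runtime_change` is ours, for illustration only.

```python
def runtime_change(before_hours: float, after_hours: float) -> tuple[float, float]:
    """Return (percentage reduction in runtime, speed-up factor)."""
    reduction_pct = (before_hours - after_hours) / before_hours * 100
    speedup = before_hours / after_hours
    return reduction_pct, speedup


reduction_pct, speedup = runtime_change(14.5, 2.5)
print(f"{reduction_pct:.1f}% less runtime, {speedup:.1f}x faster")
# 82.8% less runtime, 5.8x faster
```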

"Using Delta Sharing has made a noticeable difference to our audit process. We can securely access data across cloud platforms, without delays or manual data movement, so our teams always work from the latest, single source of truth. This cross-cloud capability means faster audits, more reliable results for the audited clients we work with, and tight control over data access at every step." - Anna Barrell, Audit partner, KPMG UK

Technical Considerations

Two technical considerations are worth noting when working with Databricks:

- Delta Sharing: As early adopters, we found that some features, such as sharing materialized views, were not yet available. We're excited that these have since been addressed in the GA release, and we'll be enhancing our Delta Sharing solutions with this functionality.

- Lakeflow Jobs: Currently, there is no mechanism to confirm whether an upstream job for a Delta Shared table has completed. One of our scripts ran before the upstream job had finished and produced incomplete output, though this was quickly identified through our completeness and accuracy procedures.
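
A guard of the kind that caught the incomplete output might look like the sketch below. It is an assumption, not a description of our actual completeness and accuracy procedures: it supposes the provider publishes control totals (a row count and a summed amount) for each refresh, and all names and figures are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class ControlTotals:
    """Row count and summed amount for one table refresh."""
    row_count: int
    amount_total: Decimal


def completeness_issues(expected: ControlTotals, actual: ControlTotals) -> list[str]:
    """Compare what was received with what the provider says it sent."""
    issues = []
    if actual.row_count != expected.row_count:
        issues.append(
            f"row count {actual.row_count} != expected {expected.row_count}"
        )
    if actual.amount_total != expected.amount_total:
        issues.append(
            f"amount total {actual.amount_total} != expected {expected.amount_total}"
        )
    return issues


# Hypothetical figures: a refresh read while the upstream job was still writing.
expected = ControlTotals(row_count=1_000_000, amount_total=Decimal("52340.10"))
partial = ControlTotals(row_count=640_000, amount_total=Decimal("33110.55"))

problems = completeness_issues(expected, partial)
if problems:
    print("Hold analytics, refresh incomplete:", "; ".join(problems))
```

Running a check like this before each analytics script would turn an incomplete refresh into an explicit stop rather than a result that has to be caught downstream.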

Looking to the Future

Delta Sharing has proven to be a game-changer for audit data analytics, enabling efficient, scalable, and secure collaboration. Our successful implementation with the energy supplier demonstrates the value of Delta Sharing for clients with diverse data sources across clouds and platforms.

We recognize that many organizations store a significant portion of their financial data in SAP. This presents an additional opportunity to apply the same principles of efficiency and quality at an even greater scale.

Through Databricks’ strategic partnership with SAP, announced in February of this year, we can now access SAP data via Delta Sharing. This joint solution, which has become one of SAP's fastest-selling products in a decade, allows us to tap into this data while preserving its context and syntax. By doing so, we can ensure the data remains fully governed under Unity Catalog and its total cost of ownership is optimized. As the entities we audit progress on their transformation journey, we at KPMG are looking to build on this traction, anticipating the additional benefits it will bring to a streamlined audit process.