Introducing the Databricks AI Governance Framework

A comprehensive guide to implementing enterprise AI programs responsibly and effectively

Summary

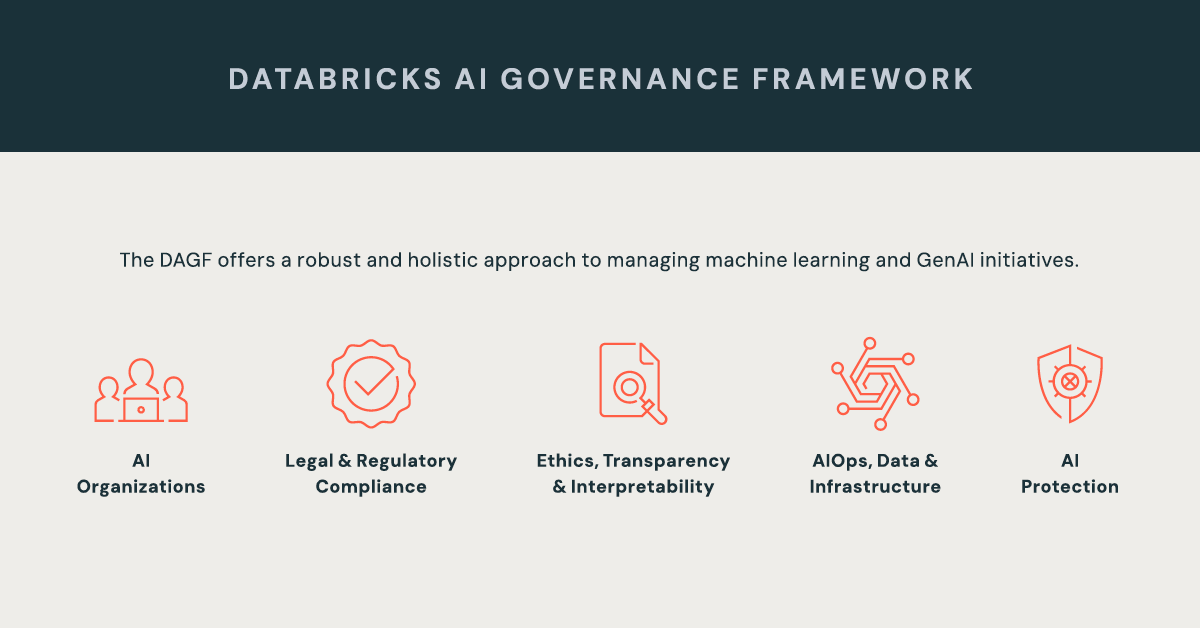

- The Databricks AI Governance Framework (DAGF) outlines a structured approach to responsible AI development, spanning 5 pillars and 43 key considerations.

- It provides best practices across risk management, legal compliance, ethical oversight, and operational monitoring to support transparent, accountable AI systems.

- DAGF helps enterprises scale AI programs while managing regulatory expectations, reducing risk, and maintaining stakeholder trust.

Today, we’re introducing the Databricks AI Governance Framework (DAGF v1.0), a structured and practical approach to governing AI adoption across the enterprise.

As organizations embrace AI at scale, the need for formal governance grows. Enterprises must align AI development with business goals, meet legal obligations, and account for ethical risks. This framework is designed to support each stage of an AI program: development, deployment, and continuous improvement.

The DAGF complements the Databricks AI Security Framework (DASF). Together, they offer a complete view of governance that spans both security and operational integrity.

Why AI governance can’t wait

According to a 2024 global survey of 1,100 technology executives and engineers conducted by Economist Impact, 40% of respondents believed that their organization’s AI governance program was insufficient to ensure the safety and compliance of their AI assets and use cases. Data privacy and security breaches were the top concern for 53% of enterprise architects, and engineers ranked security and governance as the most challenging aspects of data engineering.

In addition, according to Gartner, AI trust, risk, and security management is the #1 strategy trend for 2024 that will factor into business and technology decisions. Gartner also predicts that by 2026, AI models from organizations that operationalize AI transparency, trust, and security will achieve a 50% improvement in adoption, business goals, and user acceptance.

The lack of enterprise-level AI governance programs is fast becoming a key blocker to realizing value from AI investments and to AI adoption as a whole. Yet we found that no single, comprehensive guidance framework existed that enterprises could use to build effective AI governance programs.

The five foundational pillars

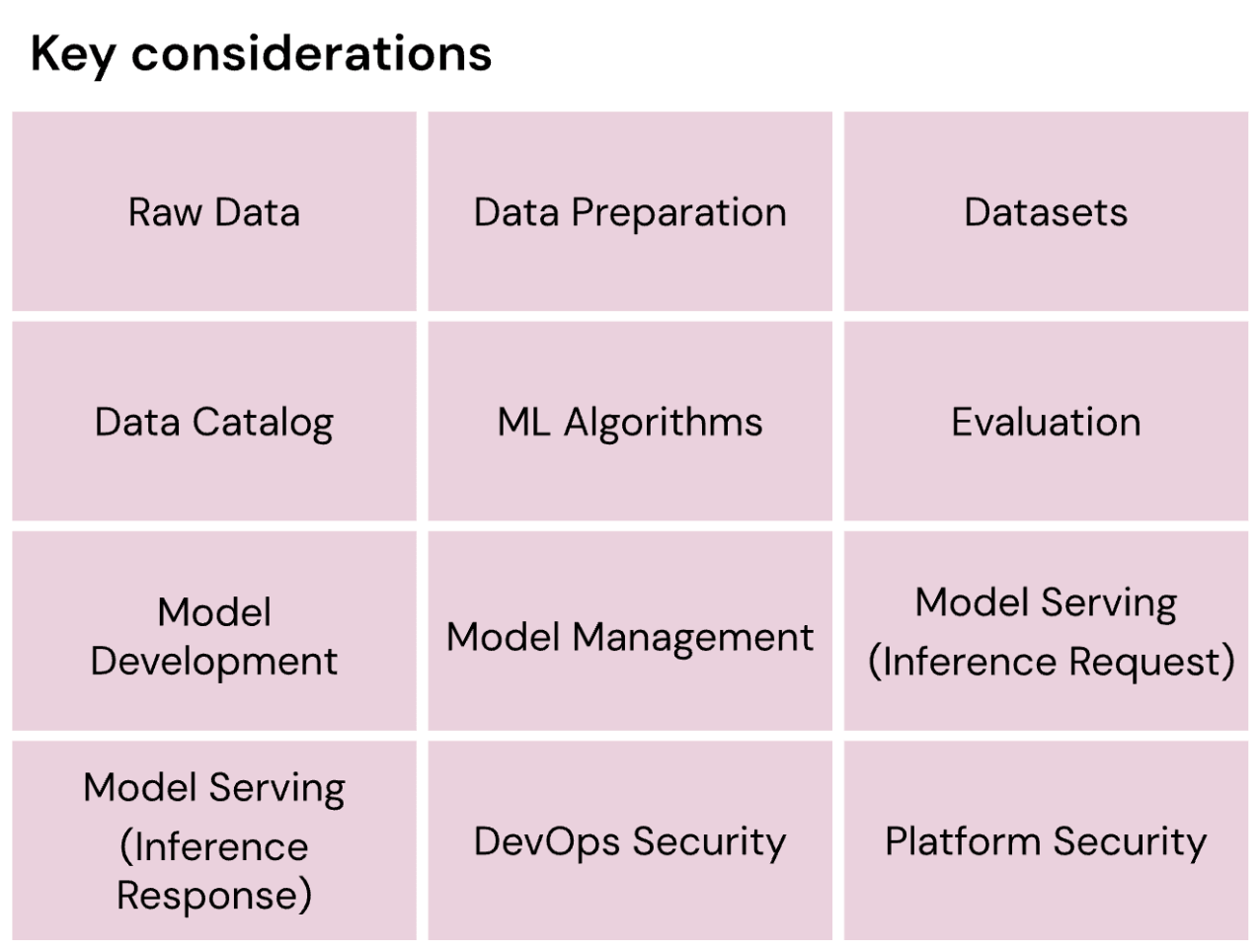

In this framework, we introduce 43 key considerations that are essential for every enterprise to understand (and implement as appropriate) to effectively govern their AI journeys.

We then grouped these key considerations into five foundational pillars, designed and sequenced to reflect typical enterprise organizational structures and personas.

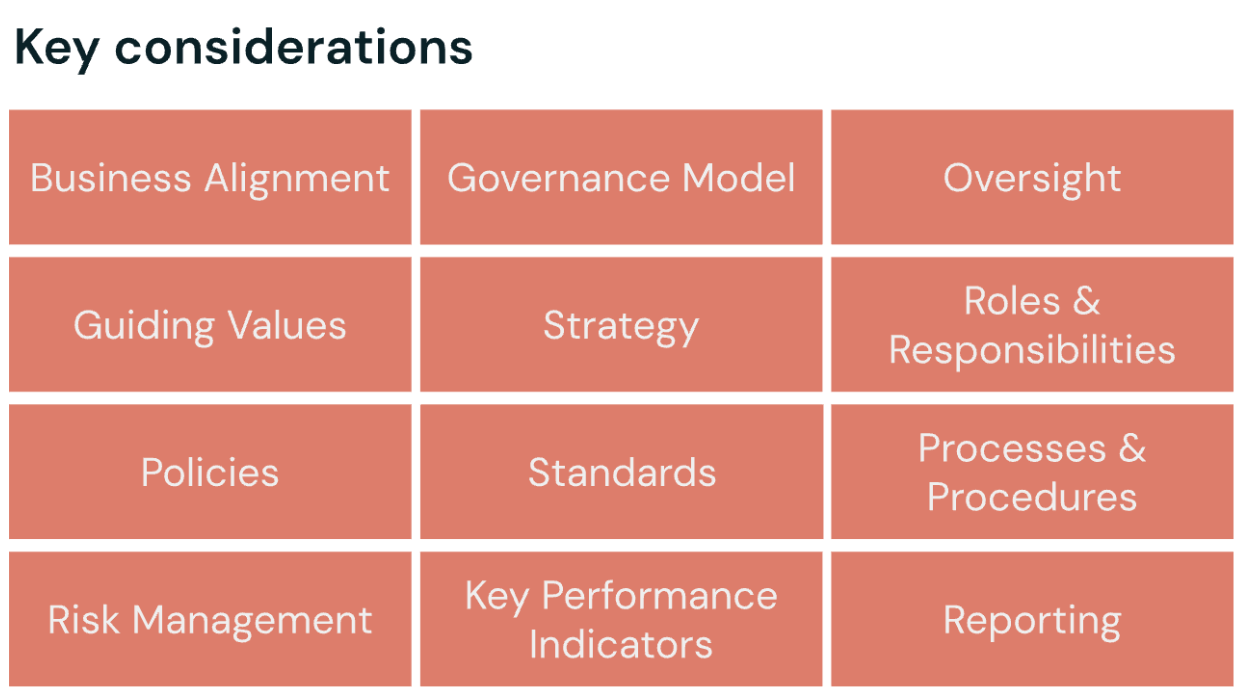

Pillar I: AI Organization

The AI Organization pillar embeds AI governance within the organization’s broader governance strategy. It lays the foundation for an effective AI program through best practices such as clearly defining business objectives and integrating the governance practices that oversee the organization's people, processes, technology, and data. It explains how organizations can establish the oversight required to achieve their strategic goals while reducing risk.

Pillar II: Legal and Regulatory Compliance

The Legal and Regulatory Compliance pillar helps organizations align AI initiatives with applicable laws and regulations. It provides guidance on managing legal risks, interpreting sector-specific requirements, and adapting compliance strategies as regulatory landscapes evolve. The outcome is AI programs that are developed and deployed within a robust legal and regulatory framework.

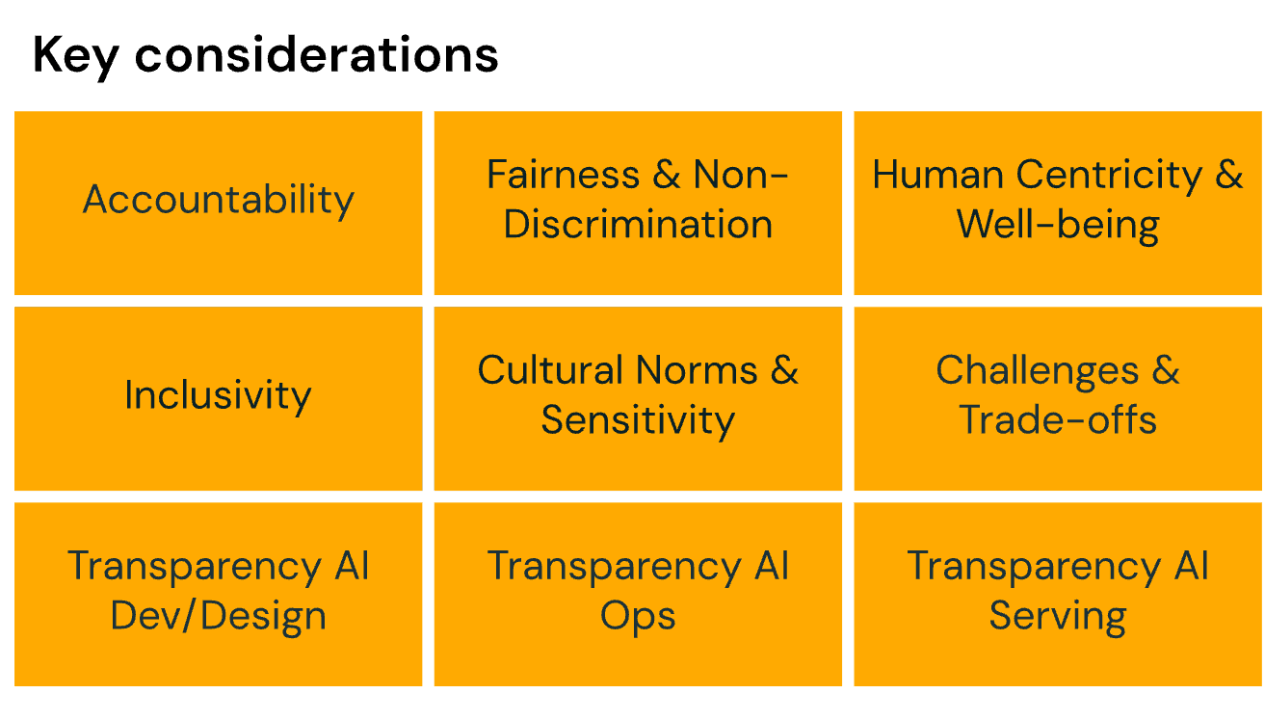

Pillar III: Ethics, Transparency and Interpretability

The Ethics, Transparency, and Interpretability pillar supports organizations in building trustworthy and responsible AI systems. It emphasizes adherence to ethical principles such as fairness, accountability, and human oversight while promoting explainability and stakeholder engagement. This pillar provides methods to establish accountability and structure within organizational teams, helping ensure that AI decisions are interpretable and aligned with evolving ethical standards, which fosters long-term trust and societal acceptance.

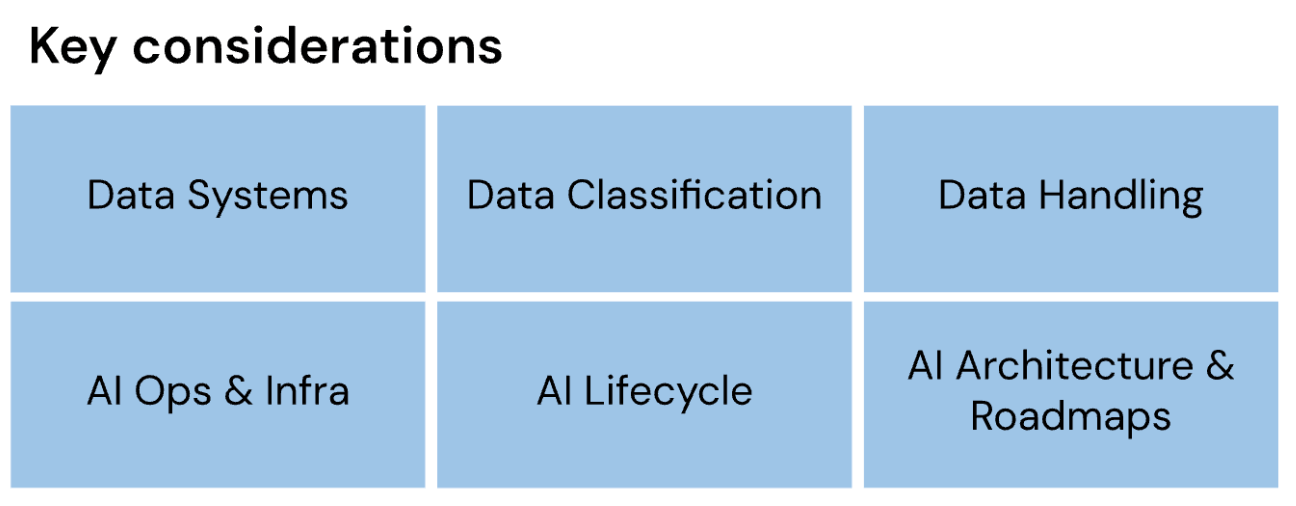

Pillar IV: Data, AI Ops, and Infrastructure

The Data, AI Operations (AIOps), and Infrastructure pillar defines the foundation that supports organizations in fully deploying and maintaining AI. It provides guidelines for creating a scalable and reliable AI infrastructure, managing the machine learning lifecycle, and ensuring data quality, security, and compliance. This pillar also emphasizes best practices for AI operations, including model training, evaluation, deployment, and monitoring, so AI systems are reliable, efficient, and aligned with business goals.

Pillar V: AI Security

The AI Security pillar introduces the Databricks AI Security Framework (DASF), a comprehensive framework for understanding and mitigating security risks across the AI lifecycle. It covers critical areas such as data protection, model management, secure model serving, and the implementation of robust cybersecurity measures to protect AI assets.

For an additional overview of DAGF, and for an example walkthrough of how an organization can use the framework to create clear ownership and alignment across the AI program lifecycle, watch the authors’ presentation from the 2025 Data + AI Summit.


Why Databricks is leading this effort

As an industry leader in the data and AI space, with over 15,000 customers across diverse geographies and market segments, Databricks has continued to deliver on its commitment to principles of responsible development and open source innovation. We’ve upheld these commitments through our:

- Engagement with both industry and government efforts to promote innovation and advocate for the use of safe and trustworthy AI

- Interactive workshops to educate organizations on how to successfully shepherd their AI journey in a risk-conscious manner

- Open sourcing of key governance innovations such as MLflow and Unity Catalog, the industry’s only unified solution for data and AI governance across clouds, data formats, and data platforms.

These programs have given us unique visibility into the practical problems that enterprises and regulators face in AI governance today. To further our commitment to helping every enterprise succeed and accelerate its data and AI journey, we used this visibility to build (and make freely available) a comprehensive, structured, and actionable AI Governance Framework.

Download the Databricks AI Governance Framework today!

The Databricks AI Governance Framework whitepaper is now available for download. Please reach out to us via email at [email protected] for any questions or feedback. If you’re interested in contributing to future updates of this framework (and other upcoming artifacts) by joining our reviewer community, we’d love to hear from you as well!
