Introducing Meta Llama 3.2 on Databricks: faster language models and powerful multi-modal models

Tune new Llama 3.2 models to achieve high quality and speed for enterprise use cases

Published: September 25, 2024

by Daniel King, Hanlin Tang and Patrick Wendell

We are excited to partner with Meta to launch the latest models in the Llama 3 series on the Databricks Data Intelligence Platform. The small text-only models in this Llama 3.2 release enable customers to build fast, real-time systems, and the larger multimodal models are the first Llama models with visual understanding. Both give customers on Databricks key building blocks for compound AI systems that enable data intelligence by connecting these models to their enterprise data.

As with the rest of the Llama series, Llama 3.2 models are available today in Databricks Mosaic AI, allowing you to tune them securely and efficiently on your data, and easily plug them into your GenAI applications with Mosaic AI Gateway and Agent Framework.

Start using Llama 3.2 on Databricks today! Deploy the model and use it in the Mosaic AI Playground, and use Mosaic AI Model Training to customize the models on your data. Sign up for this webinar for a deep dive on Llama 3.2 from Meta and Databricks.

This year, Llama has achieved 10x growth further supporting our belief that open source models drive innovation. Together with Databricks Mosaic AI solutions, our new Llama 3.2 models will help organizations build Data Intelligence by accurately and securely working on an enterprise’s proprietary data. We’re thrilled to continue working with Databricks to help enterprises customize their AI systems with their enterprise data. - Ahmad Al-Dahle, Head of GenAI, Meta

What's New in Llama 3.2?

The Llama 3.2 series includes smaller models for use cases that require very low latency, and multimodal models that enable new visual understanding use cases.

- Llama-3.2-1B-Instruct and Llama-3.2-3B-Instruct are purpose-built for low-latency, low-cost enterprise use cases. They excel at “simpler” tasks like entity extraction, multilingual translation, summarization, and RAG. Tuned on your data, these models are a fast and cheap alternative for specific tasks relevant to your business.

- Llama-3.2-11B-Vision-Instruct and Llama-3.2-90B-Vision-Instruct enable enterprises to use the powerful and open Llama series for visual understanding tasks, like document parsing and product description generation.

- The multimodal models also come with a new Llama Guard safety model, Llama-Guard-3-11B-Vision, enabling responsible deployment of multimodal applications.

- All models support the expanded 128k-token context length of the Llama 3.1 series, so they can handle very long documents. Long context simplifies RAG and agentic applications and improves their quality by reducing reliance on chunking and retrieval.
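To make the long-context trade-off concrete, here is a minimal Python sketch of deciding whether a document can go into the prompt whole or still needs chunking. It assumes a window of 128 × 1,024 tokens and estimates tokens with a crude four-characters-per-token heuristic; `plan_context`, `estimate_tokens`, and both constants are hypothetical names for illustration, and a real application would count tokens with the model's own tokenizer.

```python
# Sketch: use the whole document when it fits the context window, else chunk.
# ASSUMPTIONS: a 128 * 1024-token window and ~4 characters per token; both are
# illustrative, and a real system should count tokens with the model's tokenizer.
CONTEXT_TOKENS = 128 * 1024
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Crude token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def plan_context(document: str, prompt: str, max_output_tokens: int = 1024) -> list[str]:
    """Return the document as one piece if it fits the budget, else as chunks."""
    budget = CONTEXT_TOKENS - estimate_tokens(prompt) - max_output_tokens
    if budget <= 0:
        raise ValueError("prompt plus reserved output exceeds the context window")
    if estimate_tokens(document) <= budget:
        return [document]  # long context: no chunking or retrieval needed
    chunk_chars = budget * CHARS_PER_TOKEN  # largest chunk that still fits
    return [document[i:i + chunk_chars] for i in range(0, len(document), chunk_chars)]
```

With a 128k window, most single documents take the `[document]` path, which is the simplification the bullet describes; chunking only kicks in for inputs that exceed the budget.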

Additionally, Meta is releasing the Llama Stack, a software layer to make building applications easier. Databricks looks forward to integrating its APIs into the Llama Stack.

Faster and cheaper

The new small models in the Llama 3.2 series are an excellent option for latency- and cost-sensitive use cases. Many generative AI use cases don’t require the full power of a general-purpose AI model. Combined with data intelligence on your data, smaller task-specific models can open up new use cases that demand low latency or low cost, like code completion, real-time summarization, and high-volume entity extraction. Because the new models are accessible in Unity Catalog, you can easily swap them into your applications built on Databricks. To improve quality on your specific task, you can use a more powerful model, like Meta Llama 3.1 405B, to generate synthetic training data from a small set of seed examples, and then use that data to fine-tune Llama 3.2 1B or 3B for high quality and low latency on your data. All of this is available in a unified experience on Databricks Mosaic AI.
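The synthetic-data step could look roughly like the following sketch. It is not a Databricks API: `teacher` is a hypothetical callable standing in for a request to a large model such as a Llama 3.1 405B serving endpoint, and the `input`/`output` record shape is an assumption. The function builds a few-shot prompt from the seed examples and keeps only well-formed, non-duplicate pairs from the reply.

```python
import json
from typing import Callable

# HYPOTHETICAL: `teacher` sends a prompt to a large model (for example a
# Llama 3.1 405B endpoint) and returns its text reply. Not a Databricks API.
Teacher = Callable[[str], str]


def build_prompt(seeds: list[dict], n: int) -> str:
    """Few-shot prompt asking for `n` new pairs, one JSON object per line."""
    shots = "\n".join(json.dumps(s) for s in seeds)
    return (
        "Here are input/output examples for our task, one JSON object per line:\n"
        f"{shots}\n"
        f"Write {n} new, varied examples in the same JSON format, one per line."
    )


def generate_synthetic(seeds: list[dict], teacher: Teacher, n: int = 50) -> list[dict]:
    """Query the teacher once and keep well-formed pairs not seen before."""
    seen = {s["input"] for s in seeds}
    examples = []
    for line in teacher(build_prompt(seeds, n)).splitlines():
        try:
            item = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip chatter or malformed lines in the reply
        if not isinstance(item, dict) or not isinstance(item.get("input"), str) or "output" not in item:
            continue  # skip JSON that lacks the expected fields
        if item["input"] in seen:
            continue  # skip copies of seeds or of earlier generated examples
        seen.add(item["input"])
        examples.append({"input": item["input"], "output": item["output"]})
    return examples
```

Filtering malformed and duplicate lines matters because large models often wrap structured output in conversational text; spot-checking a sample of the generated pairs before fine-tuning is still advisable.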

Fine-tuning Llama 3.2 on your data in Databricks takes just one simple command.
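As an illustration, the following sketch writes input/output pairs to a JSONL file in a chat-style `messages` format and then starts a run. It assumes the `databricks-genai` package and its `databricks.model_training.foundation_model.create` function with `model`, `train_data_path`, and `register_to` arguments, as described in the Mosaic AI Model Training docs at the time of writing; the model identifier and the helper names `write_chat_jsonl` and `launch_finetune` are illustrative, so check the docs for the currently supported models and parameters.

```python
import json
from pathlib import Path


def write_chat_jsonl(pairs: list[dict], path: str, system_prompt: str) -> int:
    """Write input/output pairs as chat-format JSONL rows; return the row count."""
    with Path(path).open("w", encoding="utf-8") as f:
        for p in pairs:
            row = {"messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": p["input"]},
                {"role": "assistant", "content": p["output"]},
            ]}
            f.write(json.dumps(row) + "\n")
    return len(pairs)


def launch_finetune(train_data_path: str, register_to: str,
                    model: str = "meta-llama/Llama-3.2-1B-Instruct"):
    """Start a fine-tuning run; requires the databricks-genai package."""
    # ASSUMPTION: API and model id as documented for Mosaic AI Model Training at
    # the time of writing; imported here so the helper above works without it.
    from databricks.model_training import foundation_model as fm
    return fm.create(
        model=model,
        train_data_path=train_data_path,  # e.g. a JSONL file in a Unity Catalog volume
        register_to=register_to,          # Unity Catalog "catalog.schema" for the result
    )
```

In use, you would call `write_chat_jsonl` on your (possibly synthetic) pairs, upload the file, and pass its location and your own `catalog.schema` name to `launch_finetune`; both values are placeholders you supply.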

See the Mosaic AI Model Training docs for more information and tutorials!


New open multimodal models

The Llama 3.2 series includes powerful, open multimodal models that accept both visual and textual input. Multimodal models open many new use cases for enterprise data intelligence. In document processing, they can analyze scanned documents alongside textual input for more complete and accurate analysis. In e-commerce, they enable visual search, where users upload a photo of a product to find similar items based on generated descriptions. For marketing teams, they streamline tasks like generating social media captions from images. We are excited to offer these models on Databricks; stay tuned for more on this front!
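For programmatic use, a request that pairs an image with a text prompt might be built as below. This sketch assumes an OpenAI-style chat-completions endpoint that accepts `image_url` content parts containing base64 data URLs; whether a given Llama 3.2 Vision endpoint accepts exactly this shape, and the `max_tokens` value, are assumptions. `extract_json` is a hypothetical helper for pulling structured output, such as a parsed table, out of a reply that may wrap it in a fenced block.

```python
import base64
import json
import re

FENCE = "`" * 3  # three backticks, built this way to avoid a literal fence here


def build_vision_request(image_bytes: bytes, prompt: str, mime: str = "image/png") -> dict:
    """Chat-completions body with one text part and one inline image part."""
    # ASSUMPTION: the endpoint accepts OpenAI-style `image_url` parts with data URLs.
    data_url = f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }],
        "max_tokens": 2048,  # illustrative limit
    }


def extract_json(reply: str):
    """Parse JSON from the first fenced block in a reply, or from the whole reply."""
    match = re.search(FENCE + r"(?:json)?\s*(.*?)" + FENCE, reply, re.DOTALL)
    return json.loads(match.group(1) if match else reply)
```

Passing a table image's bytes and the prompt “Parse the table into a JSON representation.” to `build_vision_request` expresses a table-parsing request like the one in the example that follows.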

Here is an example of asking Llama 3.2 to parse a table into a JSON representation:

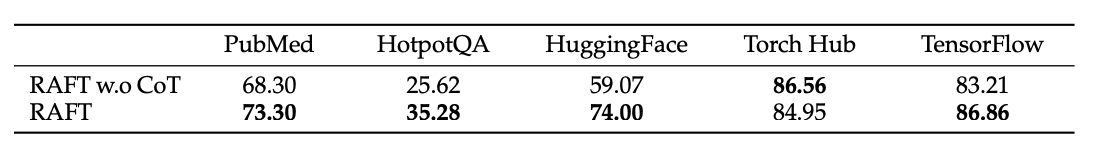

Image (Table 2 from the RAFT paper):

Prompt: Parse the table into a JSON representation.

Output:

Customers Innovate with Databricks and Open Models

Many Databricks customers are already leveraging Llama 3 models to drive their GenAI initiatives. We’re all looking forward to seeing what they will do with Llama 3.2.

- “Databricks’ scalable model management capabilities enable us to seamlessly integrate advanced open source LLMs like Meta Llama into our productivity engine, allowing us to bring new AI technologies to our customers quickly.” - Bryan McCann, Co-Founder/CTO, You.com

- “Databricks Mosaic AI enables us to deliver enhanced services to our clients that demonstrate the powerful relationship between advanced AI and effective data management while making it easy for us to integrate cutting-edge GenAI technologies like Meta Llama that future-proof our services.” - Colin Wenngatz, Vice President of Data Analytics, MNP

- “The Databricks Data Intelligence Platform allows us to securely deploy state-of-the-art AI models like Meta Llama within our own environment without exposing sensitive data. This level of control is essential for maintaining data privacy and meeting healthcare standards.” - Navdeep Alam, Chief Technology Officer, Abacus Insights

- “Thanks to Databricks Mosaic AI, we are able to orchestrate prompt optimization and instruction fine-tuning for open source LLMs like Meta Llama that ingest domain-specific language from a proprietary corpus, improving the performance of behavioral simulation analysis and increasing our operational efficiency.” - Chris Coughlin, Senior Manager, DDI

Getting started with Llama 3.2 on Databricks Mosaic AI

Follow the deployment instructions to try Llama 3.2 directly from your workspace. For more information, please refer to the following resources:

- Read Meta’s Llama 3.2 launch blog post

- View and run the multimodal notebook

- Explore the Foundation Model Getting Started Guide

- Visit the Mosaic AI Model Training docs to get started fine-tuning on your data

- Apply LLMs on large batches of data with AI functions

- Build Production-quality Agentic and RAG Apps with Agent Framework and Evaluation

- View the fine-tuning and serving pricing pages

Attend the next Databricks GenAI Webinar on 10/8/24, “The Shift to Data Intelligence,” where Ash Jhaveri, VP at Meta, will discuss open source AI and the future of Meta Llama models.
