Reduce Overhead and Get Straight to Work With Personal Compute in Databricks

Focus on your work and not compute management

The Databricks Lakehouse enables organizations to use a single, unified platform for all their data, analytics, and AI workloads. Many projects still start with data copied onto laptops or personal virtual machines for rapid iteration, and when they reach sufficient maturity or need to move to production, practitioners have to suffer through painful migrations to official infrastructure.

Today, we are thrilled to announce Personal Compute in Databricks, rolling out this week on AWS and Azure. Personal Compute provides users with a quick and simple path for developing from start to finish on Databricks while giving administrators the access and infrastructure controls they need to maintain peace of mind. With Personal Compute,

- users can create modestly-sized, single-machine CPU or GPU resources containing Spark and other data science and machine learning libraries, enabling them to easily start and evolve their work in Databricks so they can focus on their job and not complex compute configuration or migrations, and

- administrators can sleep soundly knowing sensitive data never leaves the governed sandbox that Databricks provides.

Combining Personal Compute with the Databricks Notebook for data-native development, the Workspace for managed file storage, and Repos for version control, Databricks offers a fully-hosted development experience in the Lakehouse that is as familiar as a user's laptop and that can seamlessly scale from small, everyday workloads to massive, big data workloads on large Spark clusters.

Using Personal Compute

When granted access, users can create Personal Compute resources through either the Compute page or the Databricks Notebook [AWS, Azure]. These resources are single-machine all-purpose compute resources which are compatible with Unity Catalog, have CPUs and GPUs available, and use the latest version of the Databricks Runtime for Machine Learning (MLR).

Using Personal Compute from the Compute page

The "Compute" page now includes a shortcut button for creating a Personal Compute resource, which takes only two steps:

- Click "Create with Personal Compute" at the top of the page. This will open the cluster configuration dialog with Personal Compute selected as the policy at the top.

- Click "Create Cluster" at the bottom of the dialog.

Users can also follow the traditional path of clicking "Create Cluster" at the top of the "Compute" page and choosing Personal Compute in the policy dropdown. Once their Personal Compute resource starts, it will be available for their use.

Using Personal Compute from the Notebook

Users can also create a Personal Compute resource from a notebook in only three steps:

- At the top-right of the notebook, click the "Connect" button.

- Select "Create new resource…" at the bottom of the dropdown. This will open a dialog that defaults to using the Personal Compute policy.

- Click "Create".

Users have the option to choose the resource's name, instance type, and runtime version as well as any other fields set as required in the policy by their administrator. Once their Personal Compute resource is running, their notebook will connect to it automatically. Just like any other cluster, their Personal Compute resource will be available for them to use across the work they do in Databricks, not just with the notebook through which it was created.

Managing Personal Compute as an administrator

We are also super excited about how significantly Personal Compute simplifies compute access and management in Databricks. Today, most workflows in Databricks take users through some form of compute management, and this is largely overhead that is disconnected from the focus of users' work. It also adds to administrators' management burden by requiring them to monitor the compute resources created by their users to control costs. With Personal Compute, administrators have a direct path to give users the ability to create laptop-like compute with guardrails so users can focus on the job they are trying to get done.

Users with access to Personal Compute can create compute resources with the following properties:

- Personal Compute resources are all-purpose compute resources (priced according to all-purpose compute pricing [AWS, Azure, GCP]);

- Personal Compute resources are single-node clusters [AWS, Azure, GCP] (that is, "clusters" with no workers and with Spark running in local mode);

- they use the single user cluster access mode [AWS, Azure, GCP coming soon], so they are Unity Catalog-compatible and only accessible by the creator;

- they use the latest version of the Databricks Runtime for Machine Learning (MLR) [AWS, Azure, GCP]; and

- they can use either standard instances or GPU-enabled instances.

Auto-termination [AWS, Azure, GCP] is also available for Personal Compute resources but disabled by default.
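
To make the properties above concrete, here is a minimal Python sketch of a cluster specification with the same shape. It only builds and prints a dictionary; nothing is sent to Databricks. The field names follow the Databricks Clusters API as we understand it, and the function name, creator, node type, and runtime version string are placeholders assumed for illustration, so check them against the API reference before relying on them.

```python
import json


def personal_compute_like_spec(creator, node_type_id, spark_version,
                               autotermination_minutes=0):
    """Build a cluster spec dict mirroring the properties listed above.

    Hypothetical helper for illustration: every argument is a
    caller-supplied placeholder and no request is made.
    """
    return {
        "cluster_name": f"{creator}'s Personal Compute",
        "spark_version": spark_version,      # an ML runtime version string
        "node_type_id": node_type_id,        # standard or GPU-enabled instance
        "num_workers": 0,                    # single node: no workers
        "spark_conf": {
            # Spark runs in local mode on the single machine.
            "spark.databricks.cluster.profile": "singleNode",
            "spark.master": "local[*]",
        },
        "custom_tags": {"ResourceClass": "SingleNode"},
        "data_security_mode": "SINGLE_USER",  # single user access mode
        "single_user_name": creator,          # only the creator can attach
        # 0 leaves auto-termination disabled, matching the default above.
        "autotermination_minutes": autotermination_minutes,
    }


spec = personal_compute_like_spec(
    creator="someone@example.com",                 # placeholder user
    node_type_id="i3.xlarge",                      # placeholder AWS instance type
    spark_version="<latest-ml-runtime-version>",   # placeholder, not a real version
)
print(json.dumps(spec, indent=2))
```

In practice users do not write this specification themselves; the Personal Compute policy fills in or locks these fields when the resource is created.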

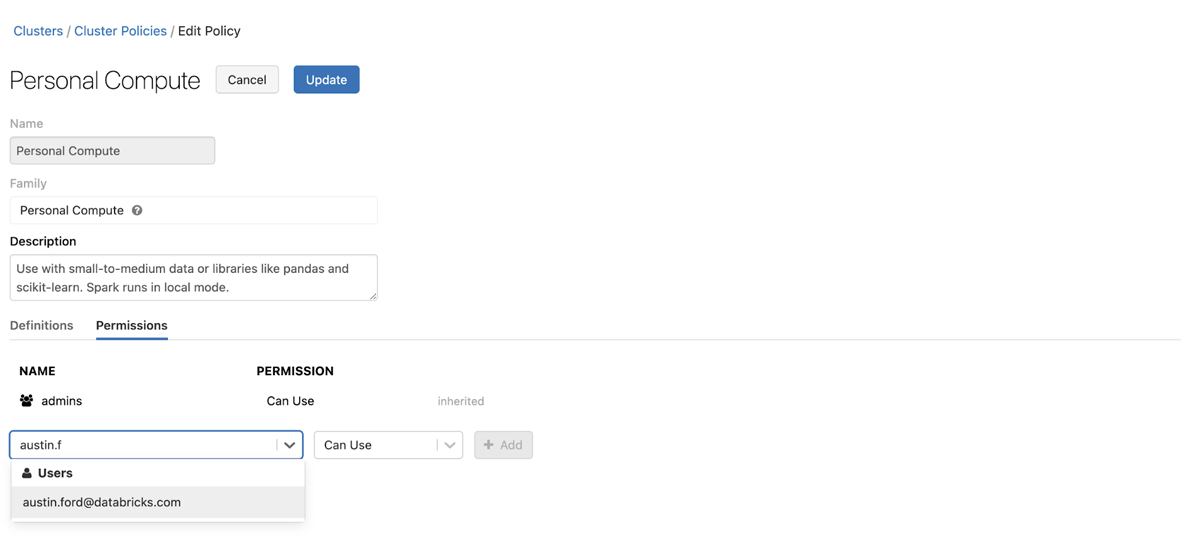

Controlling access to the Personal Compute policy

Workspace administrators can manage access to the Personal Compute policy on individual workspaces using the cluster policies UI [AWS, Azure], which enables the addition of individual users or groups to the policy's ACLs on that workspace.

Additionally, account administrators can enable or disable access to the Personal Compute policy for all users in their account using the Personal Compute account setting. [NOTE: This is only available on AWS initially. It will be added to Azure in the coming months.]

- From the account console, click "Settings".

- Click on the "Feature enablement" tab.

- Enable the Personal Compute setting to give all users in the account access to the Personal Compute policy. Or, switch the setting to "Delegate" if you want the policy to be managed at the workspace level (as above).

The default value for the Personal Compute setting is ON. During the initial rollout, account administrators will be able to adjust whether this switch is ON or not before the system begins to read the setting and use it to determine account-wide access to the policy.
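
The interaction between the account setting and the workspace-level ACLs can be summarized with a small toy model. This is only a sketch of the rules as described above, not a Databricks API: the `AccountSetting` enum, the `can_use_personal_compute` function, and the example users and groups are all hypothetical.

```python
from enum import Enum


class AccountSetting(Enum):
    """Hypothetical model of the account-level Personal Compute setting."""
    ON = "on"              # every user in the account gets access
    DELEGATE = "delegate"  # access is decided per workspace via policy ACLs


def can_use_personal_compute(setting, user, user_groups, workspace_acl):
    """Return True if `user` may use the Personal Compute policy.

    `workspace_acl` is the set of user and group names that a workspace
    administrator added to the policy's ACL on that workspace.
    """
    if setting is AccountSetting.ON:
        return True
    # DELEGATE: fall back to the workspace-level ACL.
    return user in workspace_acl or bool(set(user_groups) & set(workspace_acl))


acl = {"data-science", "alice@example.com"}
print(can_use_personal_compute(AccountSetting.ON, "bob@example.com", [], acl))
print(can_use_personal_compute(AccountSetting.DELEGATE, "bob@example.com", ["finance"], acl))
print(can_use_personal_compute(AccountSetting.DELEGATE, "carol@example.com", ["data-science"], acl))
```

The model ignores the rollout window described above, during which the setting can be changed before it starts to take effect.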

Customizing Personal Compute for your users

The Personal Compute default policy can be customized by overriding certain properties [AWS, Azure]. Unlike traditional cluster policies, though, Personal Compute has the following properties fixed by Databricks:

- The compute type is always "all-purpose" compute, so Personal Compute resources are priced with the all-purpose SKU;

- the compute mode is fixed as "single-node"; and

- the access mode is fixed as "single user" with the user being the resource's creator.

To customize a workspace's Personal Compute policy, a workspace administrator can follow these steps:

- Navigate to the "Compute" page by clicking "Compute" in the sidebar.

- Click the "Cluster Policies" tab.

- Select the Personal Compute policy, which opens the details of the Personal Compute policy.

- Click the "Edit" button at the top of the details page.

- Under the "Definitions" tab, click "Edit".

- A modal appears where you can override policy definitions. In the "Overrides" section, add the updated definitions [AWS, Azure], and click "OK".
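
As an illustration of what might go into the "Overrides" section, the following Python sketch builds a small overrides document in cluster-policy definition syntax and prints it as JSON. The helper names, the instance types, and the 60-minute value are assumptions for the example. The attribute paths in `LOCKED_ATTRIBUTES` are our guess at how the three fixed properties are expressed in a policy, so treat them as illustrative rather than authoritative.

```python
import json

# Attribute paths assumed to correspond to the three fixed properties above
# (compute type, single-node mode, single user access mode).
LOCKED_ATTRIBUTES = frozenset({"cluster_type", "num_workers", "data_security_mode"})


def build_overrides(allowed_node_types, default_node_type, autotermination_minutes):
    """Return an overrides dict in cluster-policy definition syntax."""
    if default_node_type not in allowed_node_types:
        raise ValueError("default node type must be one of the allowed types")
    return {
        # Restrict which instance types users may pick.
        "node_type_id": {
            "type": "allowlist",
            "values": list(allowed_node_types),
            "defaultValue": default_node_type,
        },
        # Force auto-termination and hide the field from the create dialog.
        "autotermination_minutes": {
            "type": "fixed",
            "value": autotermination_minutes,
            "hidden": True,
        },
    }


def check_overrides(overrides):
    """Reject overrides that touch properties the article lists as fixed."""
    locked = sorted(LOCKED_ATTRIBUTES & set(overrides))
    if locked:
        raise ValueError(f"cannot override fixed properties: {locked}")
    return overrides


overrides = check_overrides(
    build_overrides(["i3.xlarge", "i3.2xlarge"], "i3.xlarge", 60)
)
print(json.dumps(overrides, indent=2))
```

The printed JSON is the kind of text an administrator would paste into the "Overrides" section; the local check simply mirrors the restriction that the fixed properties cannot be changed.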

The current rollout and what comes next

Over the week of October 10, 2022, the Personal Compute default policy will be rolling out to all premium- and enterprise-tier workspaces on AWS and all premium-tier workspaces on Azure. On AWS, the account setting for account-wide access to Personal Compute will roll out simultaneously with default value ON, and the setting's value will take effect beginning November 16, 2022. We look forward to bringing Personal Compute to GCP in the coming months, and we will bring the same account-wide access control switch to all clouds in the same timeframe.

| | AWS | Azure | GCP |
|---|---|---|---|
| Personal Compute default policy | Rolling out now | Rolling out now | Coming early 2023 |
| Personal Compute account-wide access setting | Rolling out now; takes effect November 16, 2022 | Coming early 2023 | Coming early 2023 |

We're excited to see what our customers think of Personal Compute! If you're on AWS or Azure, please check it out and let us know what you think.
