
Many banks continue to rely on decades-old, mainframe-based platforms to support their back-end operations. But banks that are modernizing their IT infrastructures and integrating the cloud to share data securely and seamlessly are finding they can form an increasingly interconnected financial services landscape. This has created opportunities for community banks, fintechs and brands to collaborate and offer customers more comprehensive and personalized services. Coastal Community Bank is headquartered in Everett, Washington, far from some of the world’s largest financial centers. The bank’s CCBX division offers banking as a service (BaaS) to financial technology companies and broker-dealers. To provide personalized financial products, better risk oversight, reporting and compliance, Coastal turned to the Databricks Data Intelligence Platform and Delta Sharing, an open protocol for secure data sharing, to enable them to share data with their partners while ensuring compliance in a highly regulated industry.

Leveraging tech and innovation to future-proof a community bank

Coastal Community Bank was founded in 1997 as a traditional brick-and-mortar bank. Over the years, they grew to 14 full-service branches in Washington state offering lending and deposit products to approximately 40,000 customers. In 2018, the bank’s leadership broadened their vision and long-term growth objectives, including how to scale and serve customers outside their traditional physical footprint. Coastal leaders took an innovative step and launched a plan to offer BaaS through CCBX, enabling a broad network of virtual partners and allowing the bank to scale much faster and further than they could via their physical branches alone.

Coastal hired Barb MacLean, Senior Vice President and Head of Technology Operations and Implementation, to build the technical foundation required to help support the continued growth of the bank. “Most small community banks have little technology capability of their own and often outsource tech capabilities to a core banking vendor,” says MacLean. “We knew that story had to be completely different for us to continue to be an attractive banking-as-a-service partner to outside organizations.”

To accomplish their objectives, Coastal would need to exchange vast amounts of data in near real time with their partners, third parties and the variety of systems used across that ecosystem. This proved to be a challenge, as most banks and providers still relied on legacy technologies and antiquated processes like once-a-day batch processing. To scale their BaaS offering, Coastal needed a better way to manage and share data. They also required a solution that could scale while meeting the highest standards of security and privacy along with strict compliance requirements. “The list of things we have to do to prove that we can safely and soundly operate as a regulated financial institution is ever-increasing,” says MacLean. “As we added more customers and therefore more customer information, we needed to scale safely through automation.”

Coastal also needed to accomplish all this with their existing small team. “As a community bank, we can’t compete on a people basis, so we have to have technology tools in place that teams can learn easily and deploy quickly,” adds MacLean.

Tackling a complex data environment with Delta Sharing

With the goal of having a more collaborative approach to community banking and banking as a service, Coastal began their BaaS journey in January 2023 when they chose Cavallo Technologies to help them develop a modern, future-proof data platform to support their stringent customer data sharing and compliance requirements. This included tackling infrastructure challenges, such as data ingestion complexity, speed, data quality and scalability. “We wanted to use our small, nimble team to our advantage and find the right technology to help us move fast and do this right,” says MacLean.

“We initially tested several vendors, however learned through those tests we needed a system that could scale for our needs going forward,” says MacLean. Though very few members of the team had used Databricks before, Coastal decided to move from a previously known implementation pattern and a data lake–based platform to a lakehouse approach with Databricks. The lakehouse architecture addressed the pain points they experienced using a data lake–based platform, such as trying to sync batch and streaming data. The dynamic nature and changing environments of Coastal’s partners required handling changes to data structure and content. The Databricks Data Intelligence Platform provided resiliency and tooling to deal with both data and schema drift cost-effectively at scale.
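To make "schema drift" concrete, the sketch below shows the underlying idea in plain Python: as partner records arrive with new or missing fields, the known schema is widened instead of the load failing. This is only an illustration of the concept, not how Databricks implements it, and the field names and the `evolve_schema` and `conform` helpers are hypothetical.

```python
from typing import Any


def evolve_schema(schema: dict[str, str], record: dict[str, Any]) -> dict[str, str]:
    """Return a copy of `schema` widened with any fields first seen in `record`."""
    evolved = dict(schema)
    for field, value in record.items():
        # Keep the first-seen type name for a field; only add unseen fields.
        evolved.setdefault(field, type(value).__name__)
    return evolved


def conform(record: dict[str, Any], schema: dict[str, str]) -> dict[str, Any]:
    """Project `record` onto `schema`, filling fields it lacks with None."""
    return {field: record.get(field) for field in schema}


# Hypothetical partner feed whose structure changes between deliveries.
batches = [
    {"customer_id": 1, "balance": 120.50},
    {"customer_id": 2, "balance": 75.00, "loan_type": "point_of_sale"},
    {"customer_id": 3},  # a later delivery omits fields seen earlier
]

schema: dict[str, str] = {}
for record in batches:
    schema = evolve_schema(schema, record)

# Every row now has the same columns, whatever shape it arrived in.
rows = [conform(record, schema) for record in batches]
```

The design choice worth noting is that drift is absorbed at ingestion time: downstream consumers always see one consistent set of columns, with gaps made explicit as `None`.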

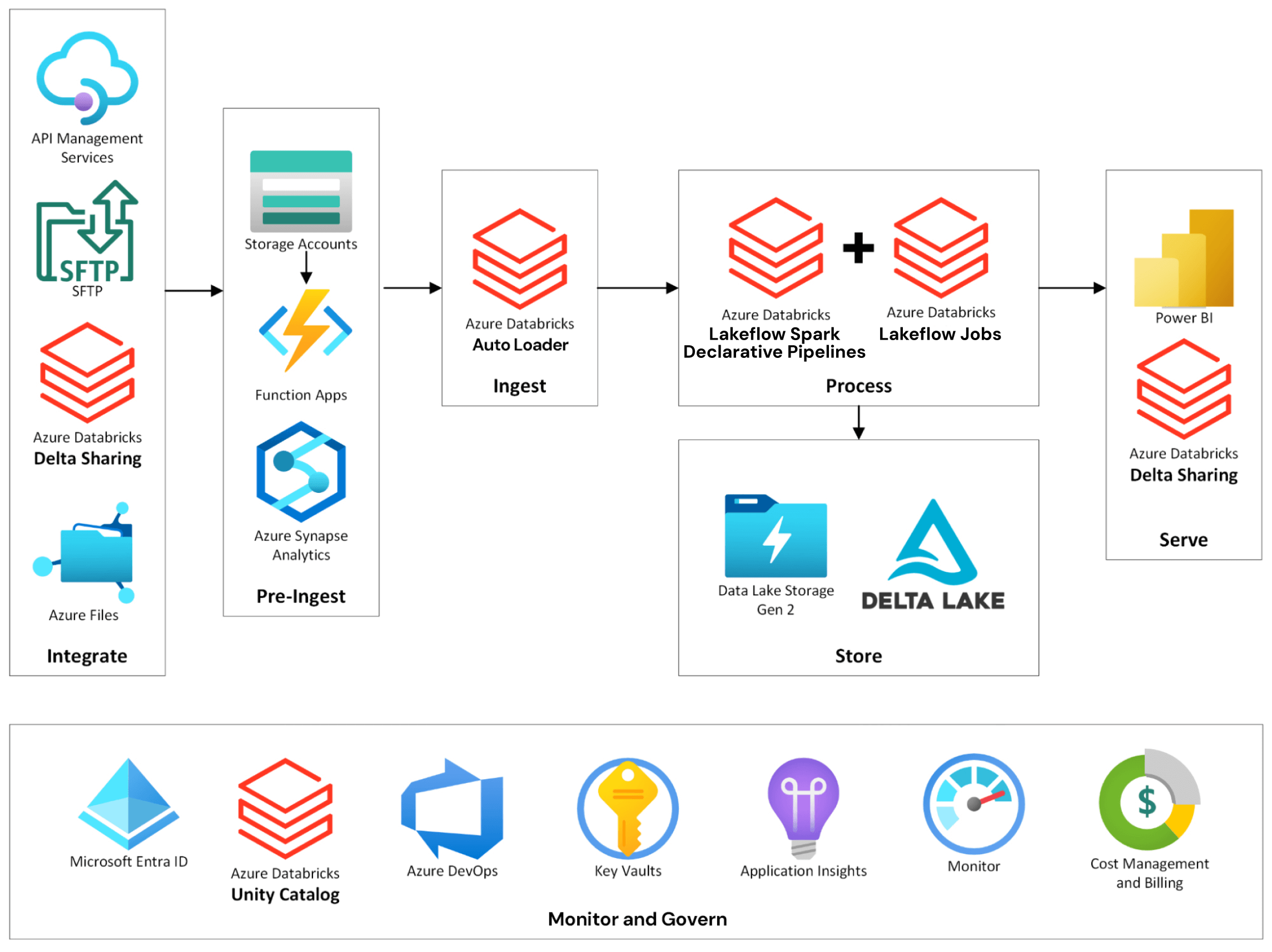

Coastal continued to evolve and extend their use of Databricks tools, including Auto Loader, Structured Streaming, Spark Declarative Pipelines, Unity Catalog and Databricks Repos for CI/CD, as they created a robust software engineering practice for data at the bank. Engineering teams often neglect to apply software engineering principles to data, but Coastal knew that doing so was critical to managing the scale and complexity of the internal and external environments in which they were working. This included maintaining segregated environments for development, testing and production, having technical leaders approve the promotion of code between environments, and building in data privacy and security governance.
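For readers unfamiliar with Auto Loader and Structured Streaming, the following is a minimal sketch of how an incremental ingest of partner files might be configured. The source does not show Coastal's actual pipelines, so the helper names and paths are hypothetical. The `cloudFiles` options are documented Auto Loader settings, and `read_partner_feed` only works on a Databricks runtime, where a `spark` session is available.

```python
def autoloader_options(schema_location: str, file_format: str = "json") -> dict[str, str]:
    """Build Auto Loader reader options for one partner feed."""
    return {
        # Format of the incoming files.
        "cloudFiles.format": file_format,
        # Where Auto Loader tracks the inferred schema over time.
        "cloudFiles.schemaLocation": schema_location,
        # Add newly observed columns to the tracked schema.
        "cloudFiles.schemaEvolutionMode": "addNewColumns",
    }


def read_partner_feed(spark, source_path: str, schema_location: str):
    """Return a streaming DataFrame over files landing in `source_path`.

    `spark` is the SparkSession supplied by the Databricks runtime.
    """
    return (
        spark.readStream.format("cloudFiles")
        .options(**autoloader_options(schema_location))
        .load(source_path)
    )


# Hypothetical location; a real one would point at cloud storage.
options = autoloader_options("/tmp/schemas/partner_a")
```

Keeping the options in a plain function means the same configuration can be reviewed and promoted unchanged across development, testing and production environments.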

Coastal also liked that Databricks worked well with Azure out of the box. And because it offered a consolidated toolkit for data transformation and engineering, Databricks helped address any risk concerns. “When you have a highly complex technical environment with a myriad of tools, not only inside your own environment but in our partners’ environments that we don’t control, a consolidated toolkit reduces complexity and thereby reduces risk,” says MacLean.

Initially, MacLean’s team evaluated several cloud-native solutions, with the goal of moving away from a 24-hour batch world and into real-time data processing since any incident could have wider reverberations in a highly interconnected financial system. “We have all these places where data is moving in real time. What happens when someone else’s system has an outage or goes down in the middle of the day? How do you understand customer and bank exposure as soon as it happens? How do we connect the batch world with the real-time world? We were trapped in a no-man’s-land of legacy, batch-driven systems, and partners are too,” explains MacLean.

“We wanted to be a part of a community of users, knowing that was the future, and wanted a vendor that was continually innovating,” says MacLean. Similarly, MacLean’s team evaluated the different platforms for ETL, BI, analytics and data science, including some already in use by the bank. “Engineers want to work with modern tools because it makes their lives easier … working within the century in which you live. We didn’t want to Frankenstein things because of a wide toolset,” says MacLean. “Reducing complexity in our environment is a key consideration, so using a single platform has a massive positive impact. Databricks is the hands-down winner in apples-to-apples comparisons to other tools like Snowflake and SAS in terms of performance, scalability, flexibility and cost.”

MacLean explained that Databricks included everything, such as Auto Loader, repositories, monitoring and telemetry, and cost management. This enabled the bank to benefit from robust software engineering practices so they could scale to serving millions of customers, whether directly or via their partner network. MacLean explained, “We punch above our weight, and our team is extremely small relative to what we’re doing, so we wanted to pick the tools that are applicable to any and all scenarios.”

Improving time to value and growing their partner network

In the short time since Coastal launched CCBX, it has become the bank’s primary customer acquisition and growth division, enabling them to grow BaaS program fee income by 32.3% year over year. Their use of Databricks has also helped them achieve unprecedented time to value. “We’ve done two years’ worth of work here in nine months,” says Curt Queyrouze, President at Coastal.

Almost immediately, Coastal saw dramatic improvements in core business functions. “Activities within our risk and compliance team that we need to conduct every few months would take 48 hours to execute with legacy inputs,” says MacLean. “Now we can run those in 30 minutes using near real-time data.”

Although Coastal manages myriad technology systems, Databricks helps the bank remove barriers between teams, enabling them to share live data with each other safely and securely in a matter of minutes so the bank can continue to grow quickly through partner acquisition. “The financial services industry is still heavily reliant on legacy, batch-driven systems, and other data is moving in real time and needs to be understood in real time. How do we marry those up?” asks MacLean. “That was one of the fundamental reasons for choosing Databricks. We have not worked with any other tool or technology that allows us to do that well.”

CCBX leverages the power and scale of a network of partners. Delta Sharing uses an open source approach to data sharing and enables users to share live data across platforms, clouds and regions with strong security and governance. Using Delta Sharing meant Coastal could manage data effectively even when working with partners and third parties using inflexible legacy technology systems. “The data we were ingesting is difficult to deal with,” says MacLean. “How do we harness incoming data from about 20 partners with technology environments that we don’t control? The data’s never going to be clean. We decided to make dealing with that complexity our strength and take on that burden. That’s where we saw the true power of Databricks’ capabilities. We couldn’t have done this without the tools their platform gives us.”
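As an illustration of what consuming a share can look like on the recipient side, here is a hedged sketch using the open source `delta-sharing` Python connector. The source does not describe Coastal's configuration, so the profile file, share, schema and table names below are hypothetical. The connector addresses a table as `<profile-file>#<share>.<schema>.<table>`, and `load_shared_table` requires `pip install delta-sharing` plus a profile file issued by the data provider.

```python
def shared_table_url(profile_path: str, share: str, schema: str, table: str) -> str:
    """Build the `<profile>#<share>.<schema>.<table>` address the client expects."""
    return f"{profile_path}#{share}.{schema}.{table}"


def load_shared_table(profile_path: str, share: str, schema: str, table: str):
    """Read a shared table into a pandas DataFrame.

    Needs the `delta-sharing` package and a valid provider profile file.
    """
    import delta_sharing  # imported lazily so the URL helper works without it

    return delta_sharing.load_as_pandas(
        shared_table_url(profile_path, share, schema, table)
    )


# Hypothetical names, for illustration only.
url = shared_table_url("partner.share", "partner_share", "lending", "pos_loans")
```

Because the recipient reads the provider's live table directly, no export, file transfer or reload step is needed when the data changes.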

Databricks also enabled Coastal to scale from 40,000 customers (consumers and small-medium businesses in the north Puget Sound region) to approximately 6 million customers served through their partner ecosystem and dramatically increase the speed at which they integrate data from those partners. In one notable case, Coastal was working with a new partner and faced the potential of having to load data on 80,000 customers manually. “We pointed Databricks at it and had 80,000 customers and the various data sources ingested, cleaned and prepared for our business teams to use in two days,” says MacLean. “Previously, that would have taken one to two months at least. We could not have done that with any prior existing tool we tried.”

With Delta Sharing on Databricks, Coastal now has a vastly simplified, faster and more secure platform for onboarding new partners and their data. “When we want to launch and grow a product with a partner, such as a point-of-sale consumer loan, the owner of the data would need to send massive datasets on tens of thousands of customers. Before, in the traditional data warehouse approach, this would typically take one to two months to ingest new data sources, as the schema of the sent data would need to be changed in order for our systems to read it. But now we point Databricks at it and it’s just two days to value,” shares MacLean.
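The improvements quoted in this story can be put on a common scale with some quick arithmetic. The sketch below assumes a 30-day month and treats the quoted durations as elapsed time; both are simplifying assumptions, not figures from Coastal.

```python
def speedup(before_hours: float, after_hours: float) -> float:
    """How many times faster the new process is than the old one."""
    return before_hours / after_hours


HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumption made for this estimate

# Risk and compliance runs: 48 hours down to 30 minutes.
risk_run = speedup(48, 0.5)

# Partner data onboarding: one to two months down to two days.
two_days = 2 * HOURS_PER_DAY
onboarding_low = speedup(1 * DAYS_PER_MONTH * HOURS_PER_DAY, two_days)
onboarding_high = speedup(2 * DAYS_PER_MONTH * HOURS_PER_DAY, two_days)

print(f"risk and compliance runs: {risk_run:.0f}x faster")
print(f"partner onboarding: {onboarding_low:.0f}x to {onboarding_high:.0f}x faster")
```

Under these assumptions, the risk and compliance runs are about 96 times faster, and partner onboarding is roughly 15 to 30 times faster.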

While Coastal’s data engineers and business users love this improvement in internal productivity, the larger transformation has been how Databricks has enabled Coastal’s strategy to focus on building a rich partner network. They now have about 20 partners leveraging different aspects of Coastal’s BaaS.

Recently, Coastal’s CEO asked a question about a specific dataset. Based on experience with their previous data tools, the bank brought in a team of 10 data engineers to comb through the data, expecting a multiday or even multiweek effort. But once the team was working in the Databricks Data Intelligence Platform, using data lineage in Unity Catalog, they were able to give a definitive answer that same afternoon. MacLean explains that this is not an anomaly. “Time and time again, we find that even for the most seemingly challenging questions, we can grab a data engineer with no context on the data, point them to a data pipeline and quickly get the answers we need.”

The bank’s use of Delta Sharing has also allowed Coastal to achieve success with One, an emerging fintech startup. One wanted to sunset its use of Google BigQuery, which Coastal was using to ingest One’s data. The two organizations needed to work together to find a solution. Fortunately, One was also using Databricks. “We used Delta Sharing, and after we gave them a workspace ID, we had tables of data showing up in our Databricks workspace in under 10 minutes,” says MacLean. (To read more about how Coastal is working with One, read the blog.) MacLean says Coastal is a leader in skills, technology and modern tools for fintech partners.

Data and AI for good

With a strong data foundation set, MacLean has a larger vision for her team. “Technologies like generative AI open up self-serve capabilities to so many business groups. For example, as we explore how to reduce financial crimes, if you are taking a day to do an investigation, that doesn’t scale to thousands of transactions that might need to be investigated,” says MacLean. “How do we move beyond the minimum regulatory requirements on paper around something like anti-money laundering and truly reduce the impact of bad actors in the financial system?”

For MacLean this is about aligning her organization with Coastal’s larger mission to use finance to do better for all people. Said MacLean, “Where are we doing good in terms of the application of technology and financial services? It’s not just about optimizing the speed of transactions. We care about doing better on behalf of our fellow humans with the work that we do.”