Building Custom LLM Judges for AI Agent Accuracy

MLflow Introduces New LLM-as-a-Judge Capabilities that Streamline Creating Domain-Specific Judges and Enhance Agent Bricks

Summary

- Use Tunable Judges to systematically align domain-specific evaluators with your domain experts

- Agent-as-a-Judge streamlines the process of creating complex judges, all in natural language

- Use all these features and more in Judge Builder, an intuitive visual workflow that simplifies creating and maintaining judges

As AI agents move from prototype to production, organizations must ensure quality and scale their evaluation processes to prevent reputational risks when agents behave unpredictably. MLflow helps address this challenge with research-backed, built-in LLM judges that assess key dimensions such as correctness, relevance, and safety. Still, many real-world applications demand more than generic quality markers, requiring domain-specific evaluations tailored to their unique context. For example, the criteria for judging a customer support chatbot differ greatly from those used to assess a medical diagnosis assistant or a financial advisory bot.

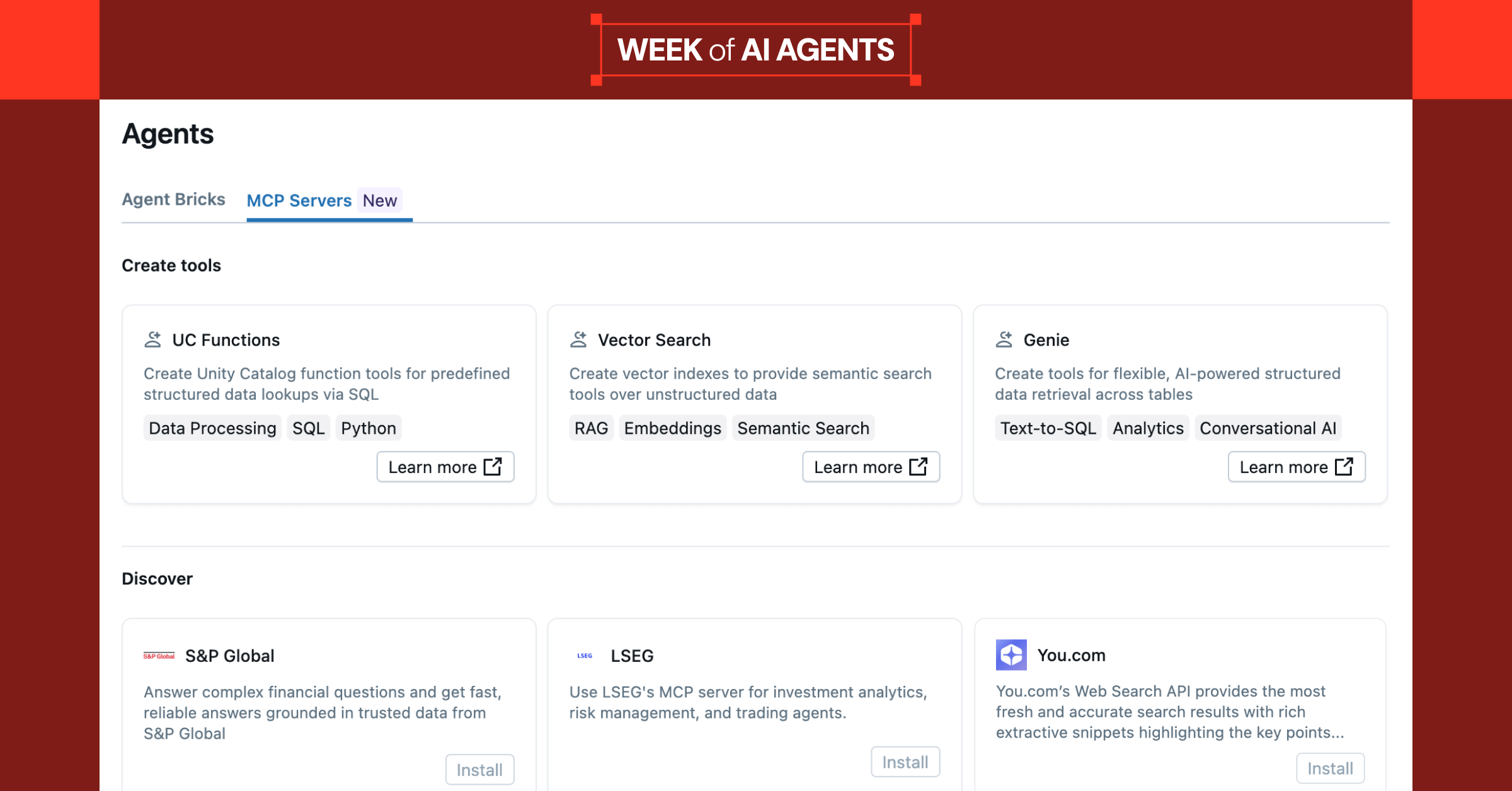

Previously, building custom evaluation logic was time-consuming and required tight collaboration between developers and domain experts (specialists with deep knowledge of the business context, regulations, and quality standards), creating a bottleneck in the development cycle. As part of the Databricks Week of Agents, we’re introducing three new MLflow-powered capabilities in Agent Bricks designed to make this process faster and more scalable:

- Tunable Judges enable systematic alignment with domain experts

- Agent-as-a-Judge automatically determines which parts of the trace to evaluate, eliminating manual implementation overhead for complex metrics

- Judge Builder brings all these features together in an intuitive visual workflow that streamlines collaboration between developers and domain experts

Together, these MLflow-powered capabilities enable Agent Bricks to simplify how teams build, monitor, and continuously improve high-quality AI agents.

Creating Tunable Judges

With the new make_judge SDK introduced in MLflow 3.4.0, you can easily create custom LLM judges tailored to your specific use case using natural language instructions rather than complex, programmatic logic. You simply define your evaluation criteria, and MLflow handles the implementation details.

Once you’ve created your first judges, MLflow’s tuning and alignment tools help you bring subject matter expert feedback into the loop. You can take comments or scores from domain experts and feed them directly into your custom judges, so the evaluation logic learns what “good” means for your specific use case. Improving quality isn’t a one-time task; it’s a loop. Each time you collect feedback and retrain or adjust your judges, they become more consistent and closer to how real users measure success. Over time, this steady tuning is what keeps your evaluations reliable and your agents improving at scale.

“To deliver on the future of marketing optimization, we need absolute confidence in our AI agents. The make_judge API provides the programmatic control to continuously align our domain-specific judges, ensuring the highest level of accuracy and trust in our attribution modeling.” - Tjadi Peeters, CTO, Billy Grace

To get started, create your first custom judge:
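A minimal sketch of such a judge, assuming MLflow 3.4.0 or later is installed and that you have credentials for the model you choose. The judge name `support_quality`, the instruction wording, and the `openai:/gpt-4o` model URI are illustrative assumptions, not values prescribed by MLflow.

```python
# Illustrative instructions. {{ inputs }} and {{ outputs }} are template
# variables that MLflow fills in from the record being evaluated.
SUPPORT_INSTRUCTIONS = (
    "Evaluate whether the response in {{ outputs }} resolves the customer "
    "question in {{ inputs }} accurately and politely. "
    "Answer 'pass' or 'fail' and explain your reasoning."
)


def build_support_judge(model: str = "openai:/gpt-4o"):
    """Create a custom judge from natural-language instructions."""
    # Imported lazily so this module loads even where MLflow is not installed.
    from mlflow.genai.judges import make_judge

    return make_judge(
        name="support_quality",             # hypothetical judge name
        instructions=SUPPORT_INSTRUCTIONS,
        model=model,                        # assumed model URI; use one you can access
    )
```

Calling the resulting judge on a single record, for example `build_support_judge()(inputs={"question": "..."}, outputs="...")`, should return a feedback object carrying a value and a rationale, provided the model endpoint is reachable.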

To align your judge with human expertise, collect traces with human feedback, then use MLflow's alignment optimization:
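The sketch below outlines one way this could look. It assumes that expert labels are logged with `mlflow.log_feedback` under the same name as the judge, that `SIMBAAlignmentOptimizer` is available in your MLflow version, and that `align` accepts `traces` and `optimizer` as keyword arguments; check these against the documentation for your release. The helper names and the `agreement_rate` metric are hypothetical additions for illustration.

```python
def record_expert_label(trace_id, judge_name, value, rationale, expert_id):
    """Attach a domain expert's verdict to a trace as human feedback."""
    import mlflow
    from mlflow.entities import AssessmentSource, AssessmentSourceType

    mlflow.log_feedback(
        trace_id=trace_id,
        name=judge_name,  # assumed to match the judge's name
        value=value,
        rationale=rationale,
        source=AssessmentSource(
            source_type=AssessmentSourceType.HUMAN, source_id=expert_id
        ),
    )


def align_judge(judge, experiment_id, optimizer_model="openai:/gpt-4o"):
    """Return a new judge aligned to the human feedback found on the traces."""
    import mlflow
    from mlflow.genai.judges.optimizers import SIMBAAlignmentOptimizer

    traces = mlflow.search_traces(
        experiment_ids=[experiment_id], return_type="list"
    )
    optimizer = SIMBAAlignmentOptimizer(model=optimizer_model)  # assumed model URI
    return judge.align(traces=traces, optimizer=optimizer)


def agreement_rate(judge_labels, expert_labels):
    """Fraction of records where the judge and the expert gave the same label."""
    if not judge_labels or len(judge_labels) != len(expert_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    matches = sum(j == e for j, e in zip(judge_labels, expert_labels))
    return matches / len(judge_labels)
```

Comparing `agreement_rate` before and after alignment on a held-out set of expert labels is one simple way to check whether the aligned judge actually moved closer to your experts.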

Explore the full documentation to learn more about defining your tunable LLM judge and aligning the judge. You can then register the judge to an experiment and use it for monitoring quality in production:
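A hedged sketch of registration and monitoring, assuming a Databricks-managed MLflow workspace where scheduled scorers are supported. The function name, the default sample rate, and the range check are illustrative choices; `register`, `start`, and `ScorerSamplingConfig` are used as the sketch's understanding of the scorer lifecycle API and should be verified against your MLflow version.

```python
def start_production_monitoring(judge, experiment_id: str, sample_rate: float = 0.1):
    """Register a judge with an experiment and score a sample of live traces."""
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0.0 and 1.0")

    # Imported lazily so this module loads even where MLflow is not installed.
    from mlflow.genai.scorers import ScorerSamplingConfig

    registered = judge.register(experiment_id=experiment_id)
    # sample_rate is the fraction of incoming traces the judge evaluates.
    return registered.start(
        sampling_config=ScorerSamplingConfig(sample_rate=sample_rate)
    )
```

A low sample rate keeps judge costs bounded on high-traffic agents; raising it trades cost for faster detection of quality regressions.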

You can just as easily use the judge in offline evaluations:
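As a rough sketch, assuming the judge's instructions reference `{{ inputs }}` and `{{ outputs }}`, an offline run passes a small dataset and the judge to `mlflow.genai.evaluate`. The records in `EVAL_DATA` and the function name are made up for illustration.

```python
# Hypothetical evaluation records with precomputed agent outputs.
EVAL_DATA = [
    {
        "inputs": {"question": "How do I reset my password?"},
        "outputs": "Open Settings, choose Security, then select Reset password.",
    },
    {
        "inputs": {"question": "Can I get a refund after 60 days?"},
        "outputs": "Refunds are available within 30 days of purchase.",
    },
]


def run_offline_eval(judge, data=None):
    """Score a static dataset with the given judge and return the results."""
    # Imported lazily so this module loads even where MLflow is not installed.
    import mlflow

    return mlflow.genai.evaluate(
        data=EVAL_DATA if data is None else data,
        scorers=[judge],
    )
```

The same judge object can serve both offline evaluation and production monitoring, so the two are scored against identical criteria.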

Learn more about how to use scorers in evaluation and for continuously monitoring quality in production.

Getting Started with Agent-as-a-Judge

Agent-as-a-Judge adds intelligence to the evaluation process by automatically identifying which parts of a trace are relevant, eliminating the need for complex, manual trace traversal logic! Simply include the {{ trace }} variable in your judge instructions, and Agent-as-a-Judge will fetch the right data for evaluation.

For many evaluation scenarios, such as checking if a specific tool was called, if arguments were valid, or if redundant calls were made, traditional evaluation frameworks require writing custom code to manually search and filter through the trace structure. This code can become complex and brittle as your agent or evaluation requirements evolve. With Agent-as-a-Judge, your judge can focus directly on the intent and outcome, not manual data handling:
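To illustrate the contrast, the sketch below first shows the kind of hand-written traversal the paragraph describes, using a deliberately simplified, hypothetical dict-shaped trace (real MLflow traces are richer objects), and then a trace-based judge that states the same check in natural language. The tool name `lookup_order`, the judge name, and the model URI are assumptions.

```python
import json
from collections import Counter

# Hypothetical, simplified trace used only for this illustration.
SAMPLE_TRACE = {
    "spans": [
        {"span_type": "CHAIN", "name": "agent", "inputs": {"question": "Where is order 42?"}},
        {"span_type": "TOOL", "name": "lookup_order", "inputs": {"order_id": 42}},
        {"span_type": "TOOL", "name": "lookup_order", "inputs": {"order_id": 42}},
    ]
}


def redundant_tool_calls(trace: dict) -> list:
    """Manual approach: tools called more than once with identical arguments."""
    calls = Counter(
        (span["name"], json.dumps(span["inputs"], sort_keys=True))
        for span in trace["spans"]
        if span["span_type"] == "TOOL"
    )
    return sorted({name for (name, _), count in calls.items() if count > 1})


# Declarative approach: the judge reads the trace itself via {{ trace }}.
TRACE_INSTRUCTIONS = (
    "Review the {{ trace }} and decide whether the agent called the "
    "lookup_order tool when the user asked about an order, with reasonable "
    "arguments and without redundant calls. Answer 'pass' or 'fail'."
)


def build_tool_call_judge(model: str = "openai:/gpt-4o"):
    """Create a trace-based judge; only {{ trace }} is used in the template."""
    from mlflow.genai.judges import make_judge

    return make_judge(
        name="tool_call_correctness",  # hypothetical judge name
        instructions=TRACE_INSTRUCTIONS,
        model=model,                   # assumed model URI
    )
```

The manual function breaks as soon as the span layout or tool naming changes, whereas the judge's instructions only need rewording when the evaluation criteria themselves change.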

This declarative approach keeps judges readable and adaptable. You can extend the same pattern to check argument validity, redundant tool calls, or any other custom logic. For instance, you can add a condition like “Please ensure the tool call arguments are reasonable given the previous context and ensure the tool is not called redundantly”.

Agent-as-a-Judge supports the same alignment capabilities as every other judge, so you can tune these judges with human feedback using the syntax shown previously. Learn more about Agent-as-a-Judge here.


Introducing the Judge Builder

To make the judge creation and alignment process even simpler, Judge Builder provides a visual interface to manage the entire judge lifecycle. Domain experts can provide feedback directly through an intuitive review experience, while developers use that feedback to automatically align judges with domain standards. This bridges the gap between technical implementation and domain expertise, streamlining feedback collection, judge alignment, and lifecycle management, all built on top of existing MLflow features and integrated with your existing MLflow experiments.

To get started with the Judge Builder, go to the Databricks Marketplace and install the Judge Builder app to your workspace. Learn more here, or contact your Databricks Representative for more information.

Conclusion

With tunable judges, Agent-as-a-Judge, and Judge Builder, you can build domain-specific LLM judges that match your expert standards and continuously improve with human feedback. Together, these features make it easier to build high-quality AI agents at scale with MLflow. Notably, these same features form the comprehensive evaluation engine that powers Agent Bricks.

Whether you're in the early stages of prototyping or managing production agents serving millions of users, MLflow and Agent Bricks provide the tools you need to build high-quality agents and deploy to production with confidence.

Get started today:

- Join us on 11/11 for “The Future of AI: Build Agents that Work” with Sam Altman and Ali Ghodsi.

- Explore tunable judges

- Learn about Agent-as-a-Judge

- Get started with Judge Builder

- Find the other announcements we’ve made in the Week of Agents
