Solution Accelerator

Toxicity Detection for Gaming

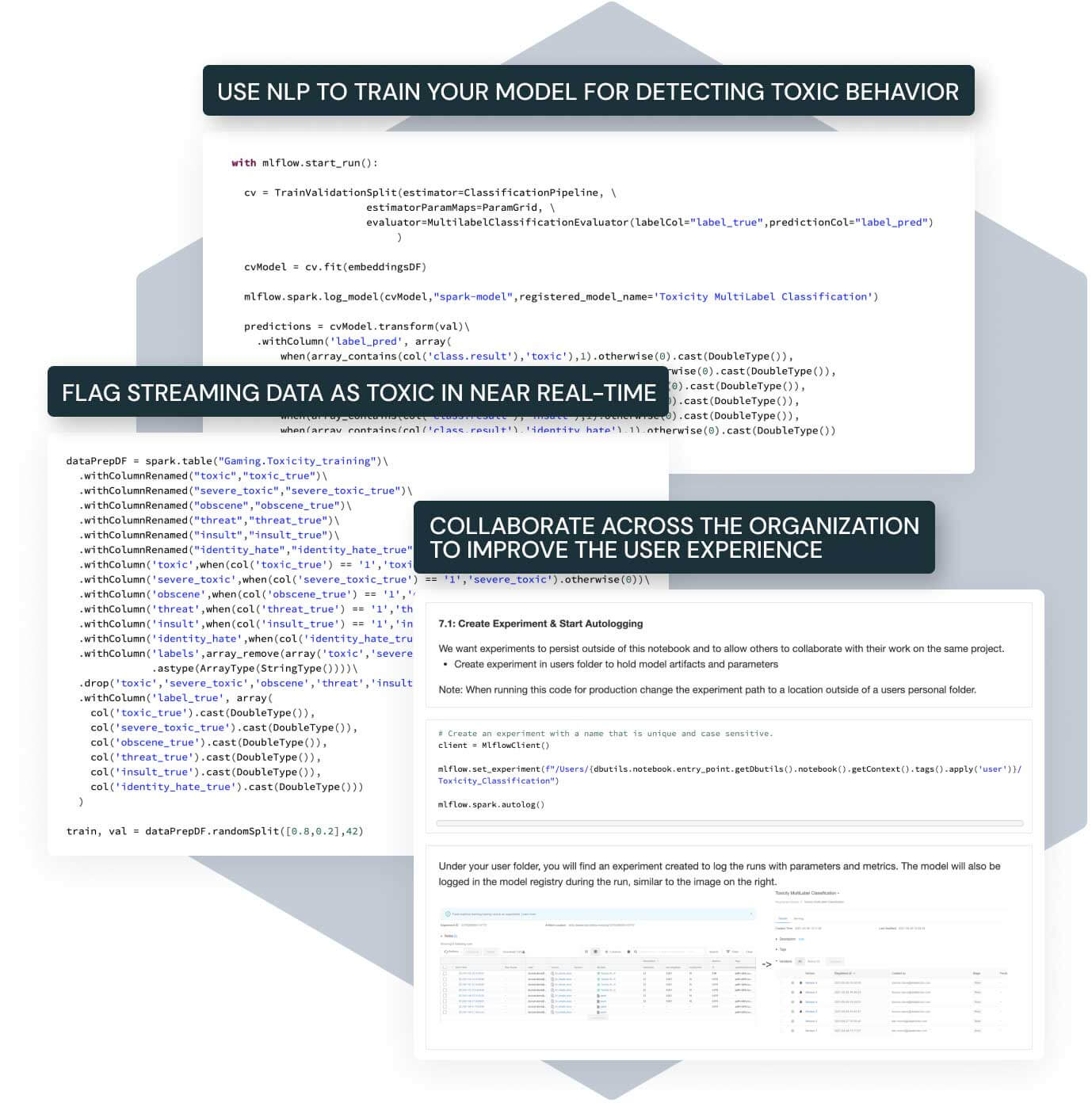

Pre-built code, sample data and step-by-step instructions ready to go in a Databricks notebook

Foster healthier gaming communities with real-time detection of toxic language

This Solution Accelerator walks you through the steps required to detect toxic comments in real time, improving the user experience and fostering healthier gaming communities. Build a lakehouse for all your gamer data and use natural language processing techniques to flag questionable comments for moderation.

- Enable real-time detection of toxic comments within in-game chat

- Easily integrate into existing community moderation processes

- Seamlessly add new sources of gamer data (e.g., streams, files or voice data)
