The Databricks AI Security Framework (DASF)

Architecture Summary

The Databricks AI Security Framework (DASF) outlines the key components of a modern AI/ML system, helping organizations assess potential risks and apply security best practices. By understanding how these components interact, organizations can better anticipate vulnerabilities and apply the appropriate mitigation strategies.

The Databricks Security team has incorporated these learnings into the Databricks Data Intelligence Platform with built-in controls and mapped documentation links for security and compliance.

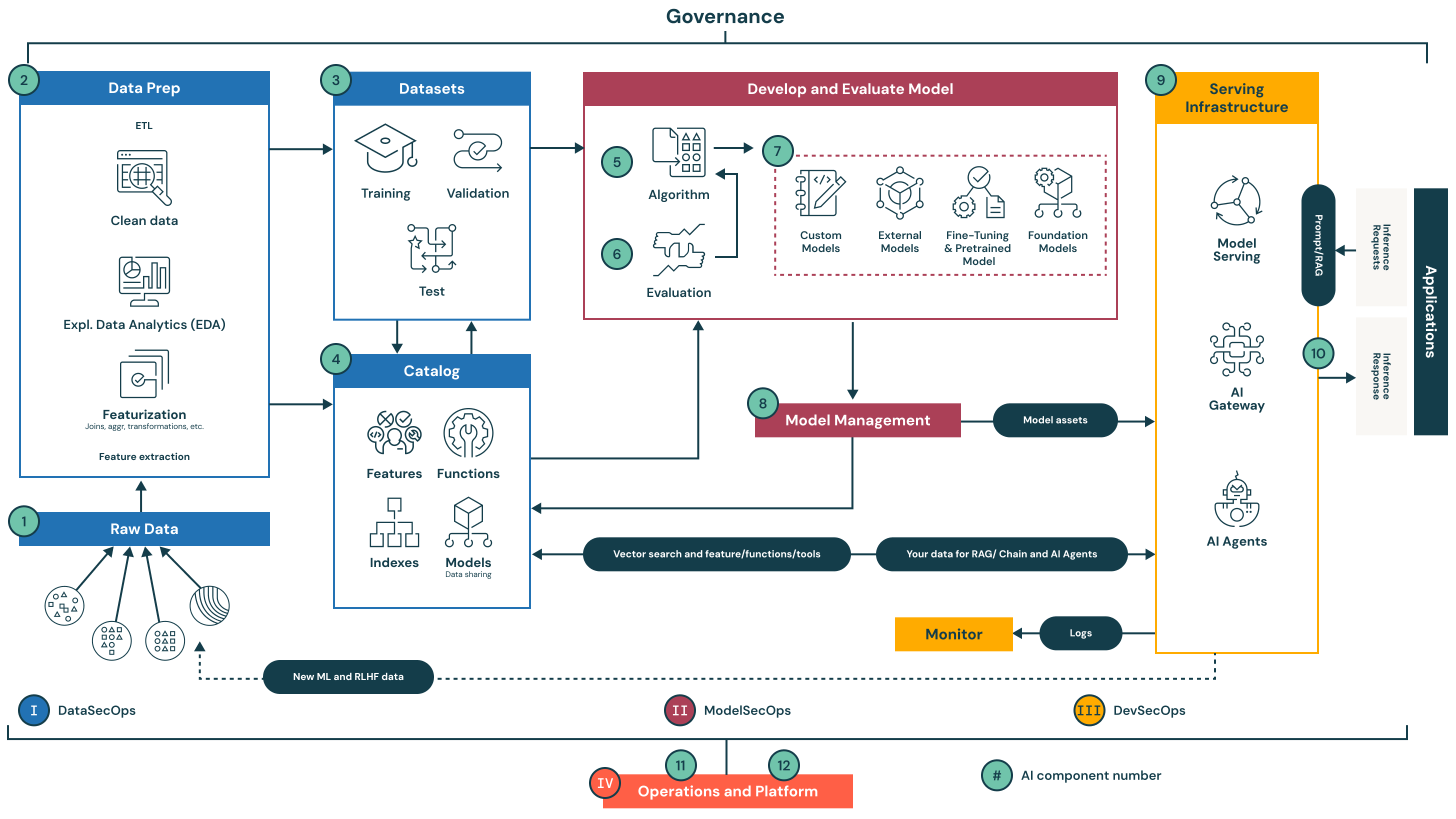

The system is organized into 12 foundational components grouped into four key stages. Each stage represents a critical pillar of secure, governed and operationally efficient AI and ML workflows.

I. Data Operations (Components 1–4)

Data operations focus on ingesting, preparing and cataloging data. This is the foundation of any trustworthy ML system, because model quality depends on the quality and security of the input data.

1. Raw data: Data is ingested from multiple sources (structured, unstructured and semi-structured). At this stage, data must be securely stored and access-controlled.

2. Data prep: This step involves cleaning, exploratory data analysis (EDA), transformations and featurization. It is part of the extract, transform, load (ETL) process and is essential to derive meaningful training features.

3. Datasets: Prepared datasets are split into training, validation and test sets. These datasets must be versioned and reproducible.

4. Catalog: A centralized registry for data and AI assets, including features, indexes, models and functions. It supports discoverability, lineage, governance and secure data sharing across teams.

Key risk mitigation: Access controls, encryption, data lineage tracking and audit logging help ensure only authorized users can access, modify or share data.
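
As an illustration of the "versioned and reproducible" requirement for datasets, the following is a minimal sketch in plain Python, not a Databricks API. It assumes each record carries a stable `id` field; the function names `assign_split` and `dataset_version` and the 80/10/10 ratios are hypothetical choices made for this example.

```python
import hashlib
import json


def assign_split(record_id: str, train: float = 0.8, validation: float = 0.1) -> str:
    """Deterministically assign a record to a split from a hash of its ID."""
    digest = hashlib.sha256(record_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a fraction between 0 and 1.
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    if bucket < train:
        return "train"
    if bucket < train + validation:
        return "validation"
    return "test"


def dataset_version(records: list[dict]) -> str:
    """Content hash over canonically serialized rows, independent of row order."""
    row_hashes = sorted(
        hashlib.sha256(json.dumps(r, sort_keys=True).encode("utf-8")).hexdigest()
        for r in records
    )
    return hashlib.sha256("".join(row_hashes).encode("utf-8")).hexdigest()[:16]


# Hypothetical example data: 1,000 rows with a stable ID.
records = [{"id": f"row-{i}", "value": i} for i in range(1000)]
splits = {"train": [], "validation": [], "test": []}
for r in records:
    splits[assign_split(r["id"])].append(r)
```

Because the split depends only on the record ID, rerunning the pipeline places every row in the same set, and the version string changes whenever any row is added, removed or modified.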

II. Model Operations (Components 5–8)

Model operations involve experimentation, evaluation and lifecycle management of ML and AI models.

5. Algorithm: Custom or pre-built algorithms used to develop predictive models.

6. Evaluation: Systematic assessment of model performance using validation data and defined metrics.

7. Develop and evaluate model: This includes building models from scratch, using external APIs (e.g., OpenAI), fine-tuning foundation models or applying transfer learning techniques.

8. Model management: Centralized tracking and governance of models, including versioning, audit trails and lineage for reproducibility and compliance.

Key risk mitigation: Built-in versioning, experiment tracking, access controls and audit trails reduce the risk of unauthorized model changes and support traceability across model iterations.
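
To make the idea of versioning plus an audit trail concrete, here is a minimal in-memory sketch. It is an assumption-laden illustration, not how the Databricks platform implements model management: the `ModelRegistry` class, its method names and the hash-chained log format are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _hash_entry(body: dict) -> str:
    """Hash an audit entry body in a canonical (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class ModelRegistry:
    """Hypothetical registry: versions per model name plus a hash-chained audit log."""
    versions: dict = field(default_factory=dict)   # model name -> list of artifact hashes
    audit_log: list = field(default_factory=list)  # each entry links to the previous one

    def _append_audit(self, actor: str, action: str, detail: dict) -> None:
        prev_hash = self.audit_log[-1]["entry_hash"] if self.audit_log else "0" * 64
        body = {"actor": actor, "action": action, "detail": detail, "prev_hash": prev_hash}
        self.audit_log.append({**body, "entry_hash": _hash_entry(body)})

    def register(self, actor: str, name: str, artifact: bytes) -> int:
        """Store a new version of a model and record who registered it."""
        artifact_hash = hashlib.sha256(artifact).hexdigest()
        self.versions.setdefault(name, []).append(artifact_hash)
        version = len(self.versions[name])
        self._append_audit(
            actor, "register", {"model": name, "version": version, "sha256": artifact_hash}
        )
        return version

    def verify_audit_log(self) -> bool:
        """Recompute the chain; any edited or removed entry breaks it."""
        prev_hash = "0" * 64
        for entry in self.audit_log:
            body = {k: entry[k] for k in ("actor", "action", "detail", "prev_hash")}
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _hash_entry(body):
                return False
            prev_hash = entry["entry_hash"]
        return True
```

Chaining each log entry to the hash of the previous one means a later edit to any entry is detectable by `verify_audit_log`, which is one simple way to support traceability across model iterations.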

III. Model Deployment and Serving (Components 9–10)

These components focus on delivering models securely to production and powering AI applications.

9. Prompt/RAG (retrieval-augmented generation): Enables secure, low-latency access to structured and unstructured data for RAG and other inference-based applications.

10. Serving infrastructure: Comprises model serving, AI gateways and APIs that handle inference requests and responses across AI agents and applications.

Key risk mitigation: Secure model deployment practices such as container isolation, request rate limiting, input validation and traffic monitoring reduce the attack surface and ensure consistent service availability.
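
Two of the controls named above, request rate limiting and input validation, can be sketched in a few lines. This is a generic token-bucket example under stated assumptions, not the behavior of any specific AI gateway: the `TokenBucket` class, the `handle` function, the `prompt` field and the 4,000-character limit are all hypothetical.

```python
import time


class TokenBucket:
    """Token-bucket rate limiter; the clock is injectable so it can be tested."""

    def __init__(self, rate_per_sec: float, capacity: int, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


MAX_PROMPT_CHARS = 4000  # hypothetical limit chosen for this example


def validate_request(payload: dict) -> str:
    """Reject payloads whose prompt is missing, not a string, empty or too long."""
    prompt = payload.get("prompt")
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("prompt must be a non-empty string")
    if len(prompt) > MAX_PROMPT_CHARS:
        raise ValueError("prompt exceeds maximum length")
    return prompt.strip()


def handle(payload: dict, bucket: TokenBucket) -> tuple[int, str]:
    """Apply rate limiting first, then validation, before any model call."""
    if not bucket.allow():
        return 429, "rate limit exceeded"
    try:
        prompt = validate_request(payload)
    except ValueError as exc:
        return 400, str(exc)
    return 200, f"accepted {len(prompt)} characters"
```

Checking the rate limit before validation keeps malformed traffic from bypassing the limiter; in a real deployment each caller or API key would typically get its own bucket.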

IV. Operations and Platform (Components 11–12)

This stage manages infrastructure security, access control and system reliability across environments.

11. Monitor: Continuously collects logs, metrics and telemetry for model performance, security auditing and system observability.

12. Operations and platform: Supports secure CI/CD, platform patching, vulnerability management and strict environment separation (development, staging, production).

Key risk mitigation: Maintaining environment isolation, applying patches regularly, enforcing role-based access control and monitoring system behavior help uphold security and compliance standards throughout the AI lifecycle.
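
Role-based access control combined with environment separation can be expressed as a deny-by-default lookup. The sketch below is illustrative only; the role names, actions and permission table are hypothetical and do not reflect Databricks privileges.

```python
from enum import Enum


class Env(Enum):
    DEV = "development"
    STAGING = "staging"
    PROD = "production"


# Hypothetical policy: each role lists the actions it may take per environment.
ROLE_PERMISSIONS = {
    "data_scientist": {
        Env.DEV: {"read", "write", "deploy"},
        Env.STAGING: {"read"},
    },
    "ml_engineer": {
        Env.DEV: {"read", "write", "deploy"},
        Env.STAGING: {"read", "deploy"},
        Env.PROD: {"read"},
    },
    "release_pipeline": {
        Env.PROD: {"deploy"},
    },
}


def is_allowed(role: str, env: Env, action: str) -> bool:
    """Deny by default: unknown roles, environments or actions are rejected."""
    return action in ROLE_PERMISSIONS.get(role, {}).get(env, set())
```

In this example policy only the automated release pipeline can deploy to production, which mirrors the idea of strict separation between development, staging and production.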

Conclusion

This architecture provides a comprehensive view of secure, governed and production-ready AI systems. Each component has been evaluated for risk, and controls are embedded natively into the Databricks Data Intelligence Platform. Organizations can use this as a reference to accelerate the security assessment and implementation of their own AI systems.