Unit Testing Lakeflow Spark Declarative Pipelines for Production-Grade Workflows

Demo Type

Product Tutorial

Duration

Self-paced


What you’ll learn

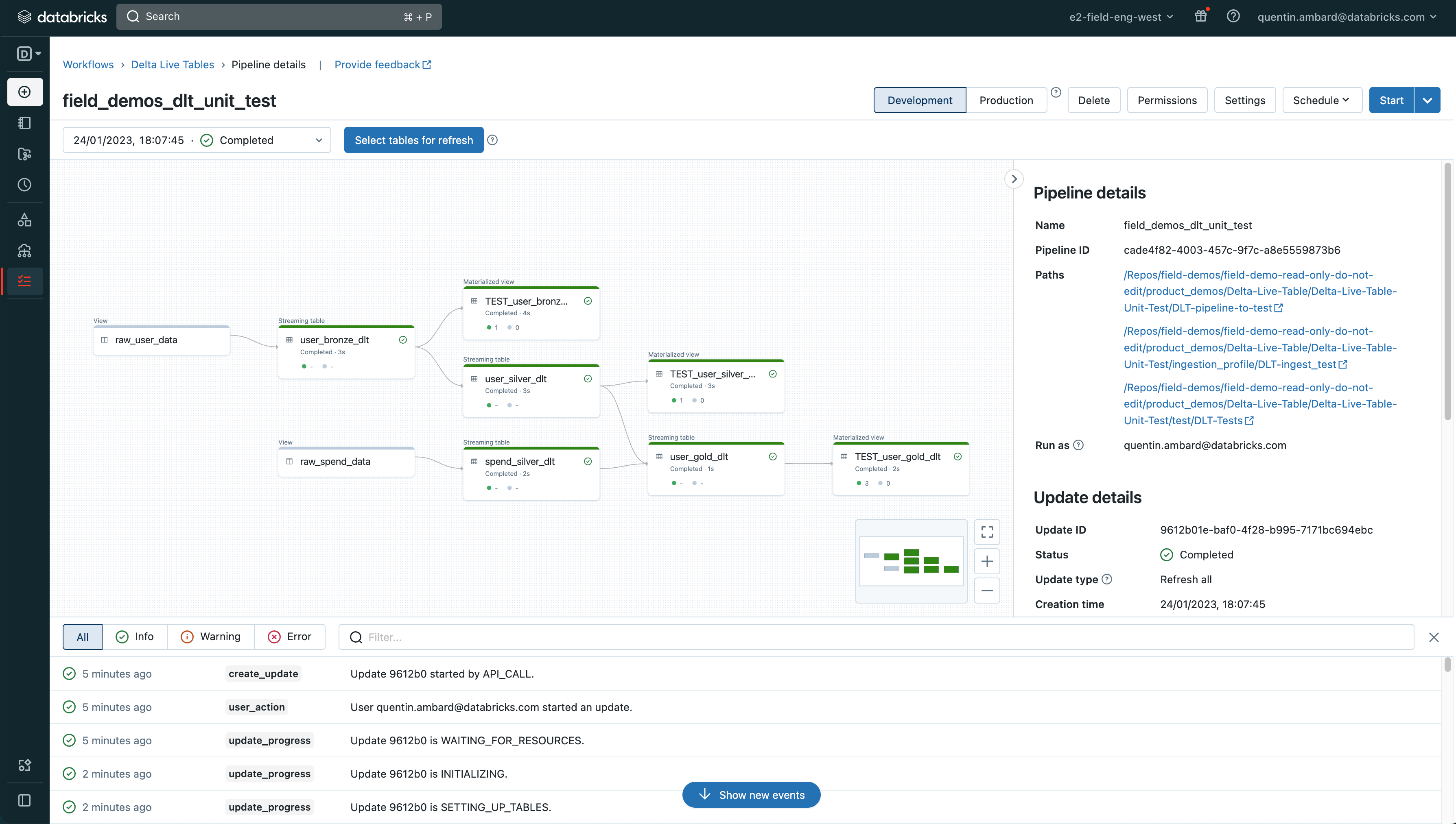

Production-grade pipelines need unit tests to guarantee their robustness. Declarative Pipelines let you track the quality of your pipeline data by defining expectations on your tables.

You can also use these expectations to write integration tests and make your pipelines more robust.
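To show how an expectation can double as a test assertion, here is a minimal plain-Python sketch. It is not the Declarative Pipelines API. It models an expectation as a named row-level predicate, counts violations much as the pipeline reports expectation metrics, and fails loudly when any expectation is violated, similar in spirit to an `expect_or_fail` expectation. The `Expectation` class, the helper functions, and the `users` columns are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

Row = dict


@dataclass(frozen=True)
class Expectation:
    """A named row-level constraint, loosely modeled on a pipeline expectation."""
    name: str
    check: Callable[[Row], bool]


def evaluate(rows: list[Row], expectations: list[Expectation]) -> dict[str, int]:
    """Return the number of rows that violate each expectation."""
    return {e.name: sum(1 for r in rows if not e.check(r)) for e in expectations}


def assert_expectations(rows: list[Row], expectations: list[Expectation]) -> None:
    """Integration-test helper: raise if any expectation has at least one violation."""
    failures = {n: c for n, c in evaluate(rows, expectations).items() if c > 0}
    if failures:
        raise AssertionError(f"expectations violated: {failures}")


# Hypothetical expectations for a hypothetical `users` table.
USER_EXPECTATIONS = [
    Expectation("valid_id", lambda r: r.get("id") is not None),
    Expectation("valid_email", lambda r: "@" in (r.get("email") or "")),
]
```

For example, `evaluate([{"id": None, "email": "nope"}], USER_EXPECTATIONS)` reports one violation for each expectation. In an actual pipeline you would declare the same constraints on the table itself and let the pipeline enforce them; the sketch only illustrates the idea of reusing data-quality rules as test assertions.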

In this demo, we’ll show you how to test your Declarative Pipeline and make it composable, so you can easily swap its input data for your test data.
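The composability idea can be sketched in plain Python: keep the transformation logic independent of where its input comes from, and select the input source (production or test) by a profile name. This is an illustration under assumptions, not the demo's actual code. The names `prod_source`, `test_source`, `SOURCES`, `clean_users`, and `run_pipeline` are hypothetical, and in a real pipeline the profile might instead be read from the pipeline configuration.

```python
from __future__ import annotations

from typing import Callable, Iterable

Row = dict
Source = Callable[[], list[Row]]


def prod_source() -> list[Row]:
    """Placeholder for the production ingestion step (e.g. reading raw files)."""
    raise NotImplementedError("reads real data; not available in this sketch")


def test_source() -> list[Row]:
    """Small, hand-written dataset that exercises known edge cases."""
    return [
        {"id": 1, "email": "alice@example.com"},
        {"id": 2, "email": "BOB@EXAMPLE.COM"},
        {"id": None, "email": "broken"},  # invalid row that should be dropped
    ]


# Hypothetical mapping from an ingestion profile name to its source.
SOURCES: dict[str, Source] = {"prod": prod_source, "test": test_source}


def clean_users(raw: Iterable[Row]) -> list[Row]:
    """Logic under test: drop invalid rows and lowercase emails.
    It never knows where its input came from, which keeps it composable."""
    return [
        {**r, "email": r["email"].lower()}
        for r in raw
        if r.get("id") is not None and "@" in (r.get("email") or "")
    ]


def run_pipeline(profile: str) -> list[Row]:
    """Wire the source selected by `profile` into the transformation."""
    return clean_users(SOURCES[profile]())
```

Calling `run_pipeline("test")` runs the same `clean_users` logic that production would use, but on the small test dataset, so the result can be checked with ordinary assertions. Only the source changes between profiles; the transformation stays identical.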

To install the demo, get a free Databricks workspace and run the following two commands in a Python notebook:

Dbdemos is a Python library that installs complete Databricks demos in your workspaces. Dbdemos will load and start notebooks, Declarative Pipelines, clusters, Databricks SQL dashboards, warehouse models… See how to use dbdemos.

Dbdemos is distributed as a GitHub project.

For more details, please view the GitHub README.md file and follow the documentation.

Dbdemos is provided as is. See the License and Notice for more information.

Databricks does not offer official support for dbdemos and the associated assets.

If you run into an issue, please open a ticket and the demo team will look into it on a best-effort basis.

Note - Databricks Lakeflow unifies Data Engineering with Lakeflow Connect, Lakeflow Spark Declarative Pipelines (previously known as DLT), and Lakeflow Jobs (previously known as Workflows).

The following assets will be installed by this Databricks demo: