Introduction to Lakeflow Spark Declarative Pipeline - Bike

Demo Type: Product Tutorial

Duration: Self-paced


What you’ll learn

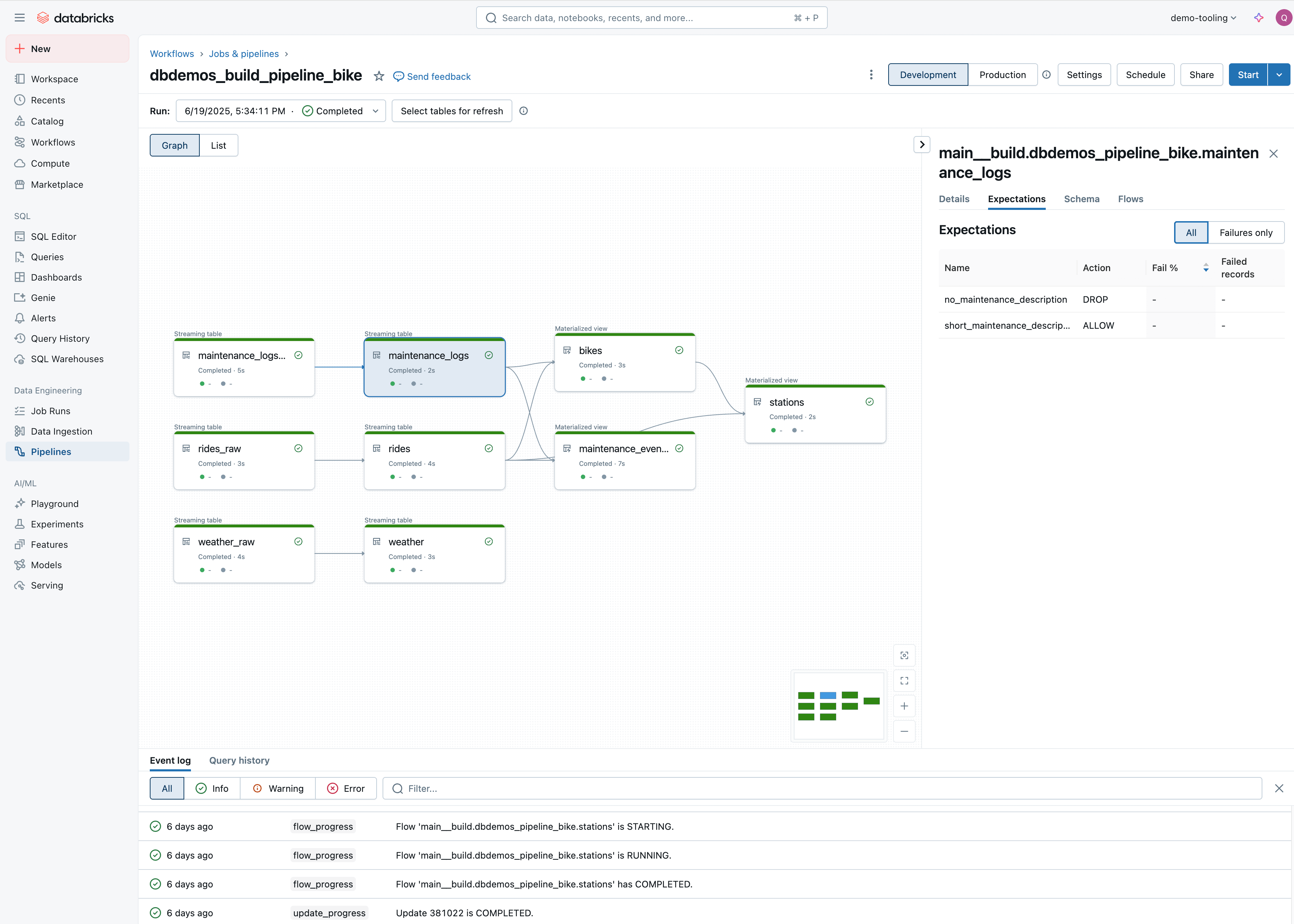

This demo is an introduction to Lakeflow Spark Declarative Pipelines, an ETL framework that makes data engineering accessible to everyone. Simply declare your transformations in SQL or Python, and Spark Declarative Pipelines will handle the data engineering complexity for you:

- Accelerate ETL development: Enable analysts and data engineers to innovate rapidly with simple pipeline development and maintenance

- Remove operational complexity: By automating complex administrative tasks and providing broader visibility into pipeline operations

- Trust your data: With built-in quality controls and quality monitoring to ensure accurate and useful BI, data science and ML

- Empower your data with AI: Perform state-of-the-art transformations with Databricks SQL AI queries

- Simplify batch and streaming: With self-optimizing, autoscaling data pipelines for batch or streaming processing
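Two of the ideas above, declaring transformations instead of orchestrating them and attaching quality controls to tables, can be modeled in a few lines of plain Python. This is a toy sketch, not the Lakeflow API: `table`, `run_pipeline`, the policy strings, and the ride columns are all hypothetical. The runner derives execution order from the declared dependencies, and each quality rule either records a violation (`warn`), removes the offending row (`drop`), or stops the run (`fail`), loosely mirroring the keep/drop/fail choices that pipeline expectations offer.

```python
from graphlib import TopologicalSorter
from typing import Callable

# Hypothetical registry: table name -> (upstream table names, transform, rules).
# Each rule is (name, predicate, policy) with policy "warn", "drop", or "fail".
_REGISTRY: dict[str, tuple] = {}


def table(name, depends_on=(), expectations=()):
    """Declare a table; nothing runs until run_pipeline() is called."""
    def register(fn: Callable[..., list[dict]]):
        _REGISTRY[name] = (tuple(depends_on), fn, tuple(expectations))
        return fn
    return register


def _enforce(name, rows, expectations, metrics):
    """Apply each rule's policy to every row and count violations."""
    kept = []
    for row in rows:
        keep = True
        for rule, check, policy in expectations:
            if check(row):
                continue
            metrics[(name, rule)] = metrics.get((name, rule), 0) + 1
            if policy == "fail":
                raise ValueError(f"{name}: rule {rule!r} failed on {row}")
            if policy == "drop":
                keep = False
            # policy "warn": keep the row, but the violation is still counted.
        if keep:
            kept.append(row)
    return kept


def run_pipeline():
    """Order tables by their dependencies, then build each one and check its rules."""
    graph = {name: set(deps) for name, (deps, _, _) in _REGISTRY.items()}
    results, metrics = {}, {}
    for name in TopologicalSorter(graph).static_order():
        deps, fn, expectations = _REGISTRY[name]
        rows = fn(*(results[d] for d in deps))
        results[name] = _enforce(name, rows, expectations, metrics)
    return results, metrics


# Declared out of order on purpose: the runner, not the author, decides execution order.
@table("ride_stats", depends_on=("rides_clean",))
def ride_stats(rides):
    total = sum(r["duration_min"] for r in rides)
    return [{"rides": len(rides), "avg_duration_min": total / len(rides)}]


@table("rides_clean", depends_on=("rides_raw",), expectations=[
    ("positive_duration", lambda r: r["duration_min"] > 0, "drop"),
    ("has_station", lambda r: r["station_id"] is not None, "warn"),
])
def rides_clean(raw):
    return list(raw)


@table("rides_raw")
def rides_raw():
    # Hypothetical sample rows standing in for the raw bike-rental feed.
    return [
        {"ride_id": 1, "duration_min": 12.0, "station_id": "A"},
        {"ride_id": 2, "duration_min": -3.0, "station_id": "B"},
        {"ride_id": 3, "duration_min": 18.0, "station_id": None},
    ]


tables, metrics = run_pipeline()
print(tables["ride_stats"])  # [{'rides': 2, 'avg_duration_min': 15.0}]
print(metrics)  # {('rides_clean', 'positive_duration'): 1, ('rides_clean', 'has_station'): 1}
```

Because the order comes from the dependency graph, `ride_stats` can be declared before the tables it reads from. The real engine also takes care of incremental processing, infrastructure, and monitoring; this sketch only models dependency ordering and rule enforcement.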

In this demo, we will use a raw dataset containing information on our bike rental system as input. Our goal is to ingest this data in near real-time and build tables for our analyst team while ensuring data quality.
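Near real-time ingestion depends on incremental processing: each pipeline update picks up only the data that arrived since the previous update, with progress tracked in a checkpoint. The toy below models that idea with an in-memory offset over an append-only list. `IncrementalTable`, `add_duration_hours`, and the ride fields are hypothetical; real streaming tables track progress through checkpoints managed by the engine, not a Python integer.

```python
class IncrementalTable:
    """Toy append-only target that processes only source rows it has not seen yet."""

    def __init__(self, transform):
        self.transform = transform
        self.rows: list[dict] = []
        self.offset = 0  # checkpoint: number of source rows already processed

    def update(self, source: list[dict]) -> int:
        """Process rows appended since the last update; return how many were new."""
        new_rows = source[self.offset:]
        self.rows.extend(self.transform(r) for r in new_rows)
        self.offset = len(source)
        return len(new_rows)


def add_duration_hours(ride: dict) -> dict:
    # Hypothetical enrichment step applied once to each incoming ride.
    return {**ride, "duration_h": ride["duration_min"] / 60}


raw_rides = [{"ride_id": 1, "duration_min": 30}, {"ride_id": 2, "duration_min": 90}]
rides = IncrementalTable(add_duration_hours)
print(rides.update(raw_rides))  # 2: the first update processes everything

raw_rides.append({"ride_id": 3, "duration_min": 15})  # new data arrives
print(rides.update(raw_rides))  # 1: only the new ride is processed
print(rides.update(raw_rides))  # 0: nothing new, nothing reprocessed
print([r["duration_h"] for r in rides.rows])  # [0.5, 1.5, 0.25]
```

Processing each record exactly once is what keeps frequent updates cheap: the cost of an update scales with the new data, not with the full history.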

To install the demo, get a free Databricks workspace and run the following two commands in a Python notebook:

Dbdemos is a Python library that installs complete Databricks demos in your workspace. It loads and starts notebooks, DLT pipelines, clusters, Databricks SQL dashboards, warehouse models … See how to use dbdemos.

Dbdemos is distributed as a GitHub project.

For more details, please view the GitHub README.md file and follow the documentation.

Dbdemos is provided as is. See the License and Notice for more information.

Databricks does not offer official support for dbdemos and the associated assets.

For any issue, please open a ticket; the demo team will look into it on a best-effort basis.

Note: Databricks Lakeflow unifies data engineering with Lakeflow Connect, Lakeflow Spark Declarative Pipelines (previously known as DLT), and Lakeflow Jobs (previously known as Workflows).

The following assets will be installed by this Databricks demo: