Webinar

Using SQL to Query Your Data Lake with Delta Lake on Azure

Modern data lakes leverage cloud elasticity to store virtually unlimited amounts of data "as is", without the need to impose a schema or structure. Structured Query Language (SQL) is a powerful tool for exploring your data and discovering valuable insights. Delta Lake is an open-source storage layer that brings reliability to data lakes with ACID transactions, scalable metadata handling, and unified streaming and batch data processing. Delta Lake is fully compatible with your existing data lake.

Join Databricks and Microsoft as we share how you can easily query your data lake using SQL and Delta Lake on Azure. We’ll show how Delta Lake enables you to run SQL queries without moving or copying your data. We will also explain some of the added benefits that Azure Databricks provides when working with Delta Lake. The right combination of services, integrated in the right way, makes all the difference!

In this webinar you will learn how to:

- Integrate Delta Lake with Azure Data Lake Storage (ADLS) and other core Azure data services to help eliminate data silos and leverage Microsoft’s recommended Azure solution architectures

- Explore your data using SQL queries and an ACID-compliant transaction layer directly on your data lake

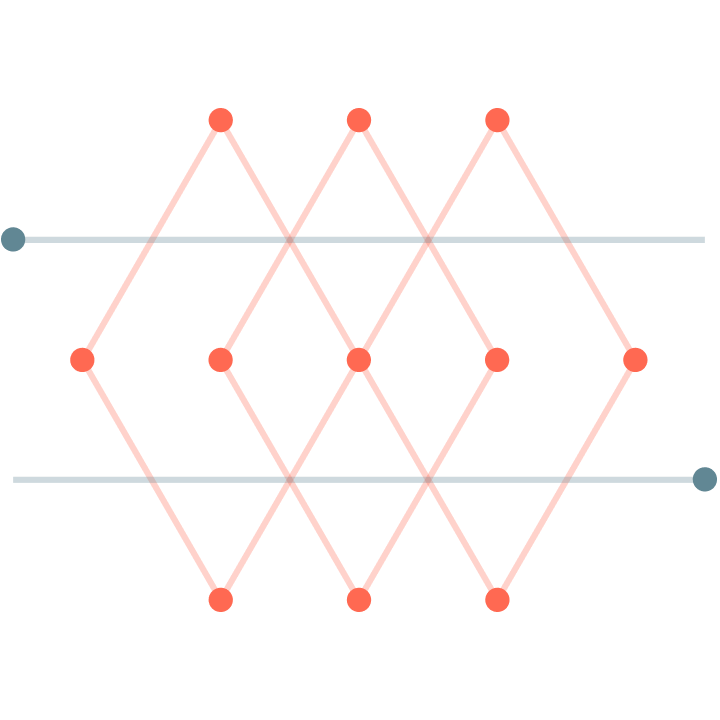

- Leverage bronze, silver, and gold “medallion tables” to consolidate and simplify data enrichment for your data pipelines and analytics workflows

- Audit and troubleshoot your data pipeline using Delta Lake time travel to see how your data changed over time

- See the benefits that Azure Databricks provides with features like Delta cache, file compaction, and data skipping

Featured Speakers

Kyle Weller, Sr. Program Manager, Microsoft

Mike Cornell, Sr. Solutions Architect, Databricks

Hosted by: Clinton Ford, Sr. Partner Marketing Manager, Databricks

Event Sponsor: Databricks (Databricks Privacy Policy)

Event Co-Sponsor: Microsoft Corporation (Microsoft Privacy Statement)