Lakeflow

Ingest, transform, and orchestrate with a unified data engineering solution

The end-to-end solution for delivering high-quality data.

Tooling that makes it easy for every team to build reliable data pipelines for analytics and AI.

85% faster development

50% cost reduction

99% reduction in pipeline latency

Unified tooling for data engineers

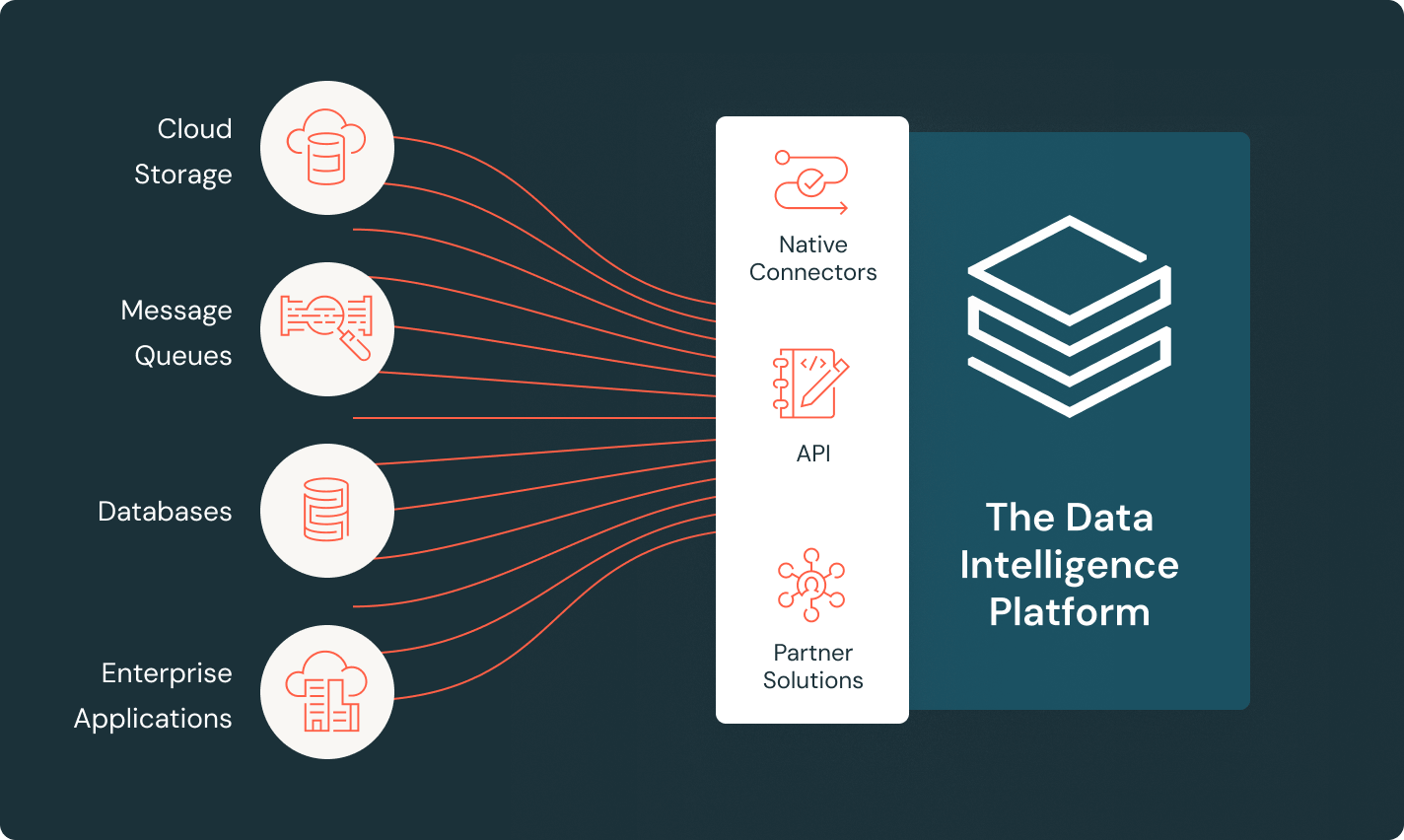

Lakeflow Connect

Efficient data ingestion connectors and native integration with the Data Intelligence Platform unlock easy access to analytics and AI, with unified governance.

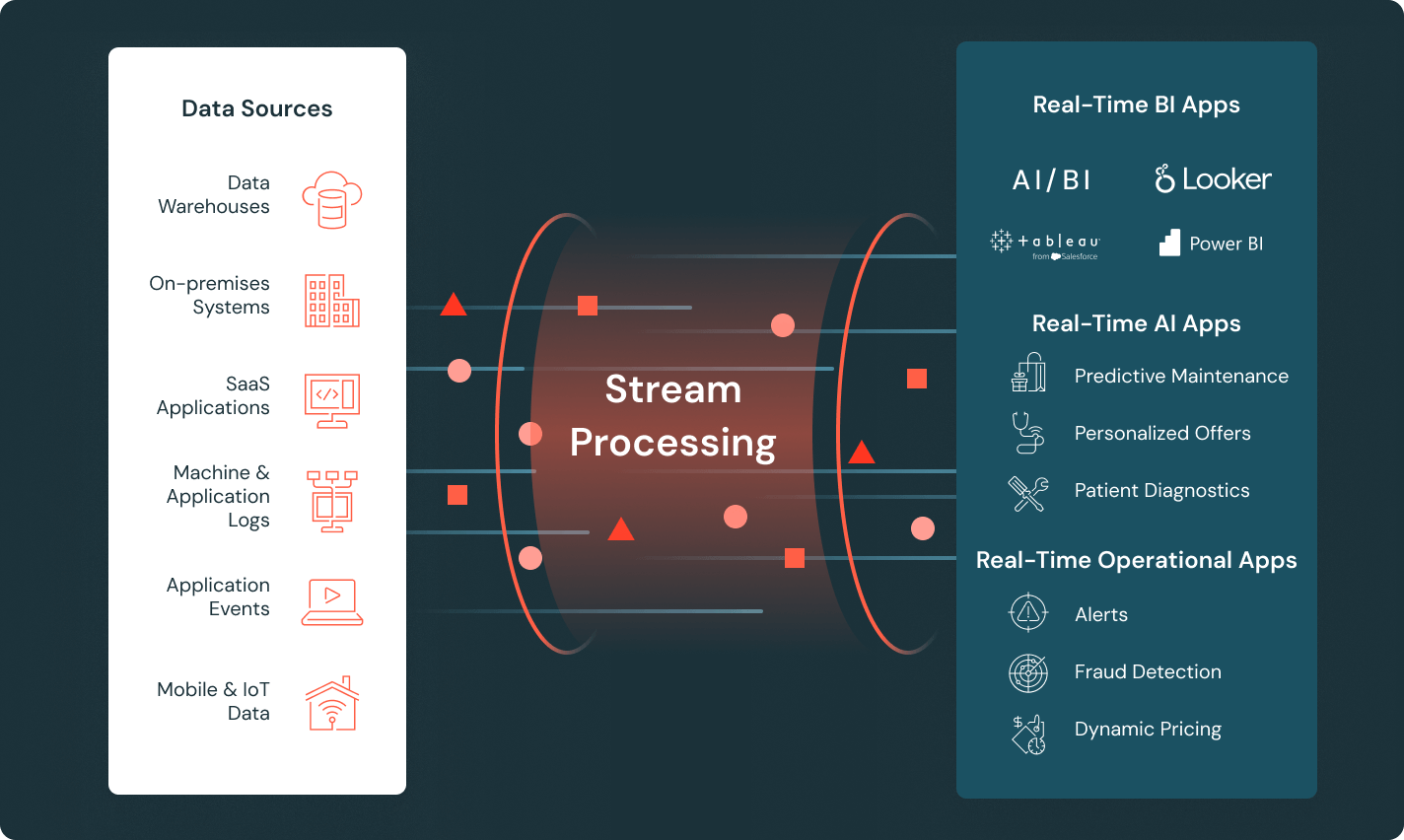

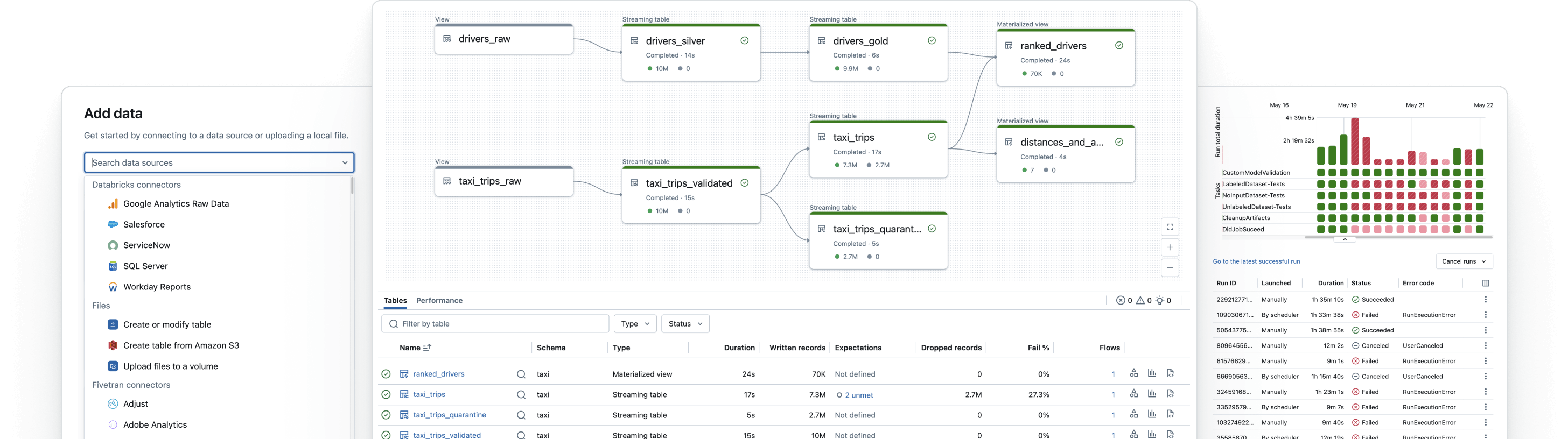

Spark Declarative Pipelines

Simplify batch and streaming ETL with automated data quality, change data capture (CDC), data ingestion, transformation, and unified governance.
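To make the CDC and data quality ideas concrete, here is a minimal plain-Python sketch. It is not the Spark Declarative Pipelines API: the function name `apply_cdc_events`, the event fields (`key`, `seq`, `op`, `data`), and the validity rule are all assumptions made for illustration.

```python
from typing import Any, Callable

Row = dict[str, Any]


def apply_cdc_events(
    target: dict[Any, Row],
    events: list[dict[str, Any]],
    is_valid: Callable[[Row], bool],
) -> dict[Any, Row]:
    """Apply change events to `target`, a table modeled as {key: row}.

    Events are processed in `seq` order so that out-of-order arrivals
    still converge to the latest state for each key.
    """
    for event in sorted(events, key=lambda e: e["seq"]):
        key = event["key"]
        if event["op"] == "delete":
            # Deleting a key that is not present is a no-op.
            target.pop(key, None)
        elif is_valid(event["data"]):
            # Insert or update; rows failing the quality rule are dropped.
            target[key] = event["data"]
    return target


# Hypothetical change feed: one late event, one invalid row, one delete.
events = [
    {"key": 1, "seq": 2, "op": "upsert", "data": {"name": "Ada", "age": 37}},
    {"key": 1, "seq": 1, "op": "upsert", "data": {"name": "Ada", "age": 36}},
    {"key": 2, "seq": 3, "op": "upsert", "data": {"name": "Bob", "age": -5}},
    {"key": 3, "seq": 4, "op": "upsert", "data": {"name": "Cy", "age": 20}},
    {"key": 3, "seq": 5, "op": "delete", "data": None},
]

result = apply_cdc_events({}, events, is_valid=lambda row: row["age"] >= 0)
print(result)
```

In this sketch only key 1 survives, with the row from its highest sequence number; a managed pipeline would handle the same ordering and quality checks declaratively rather than in hand-written loops.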

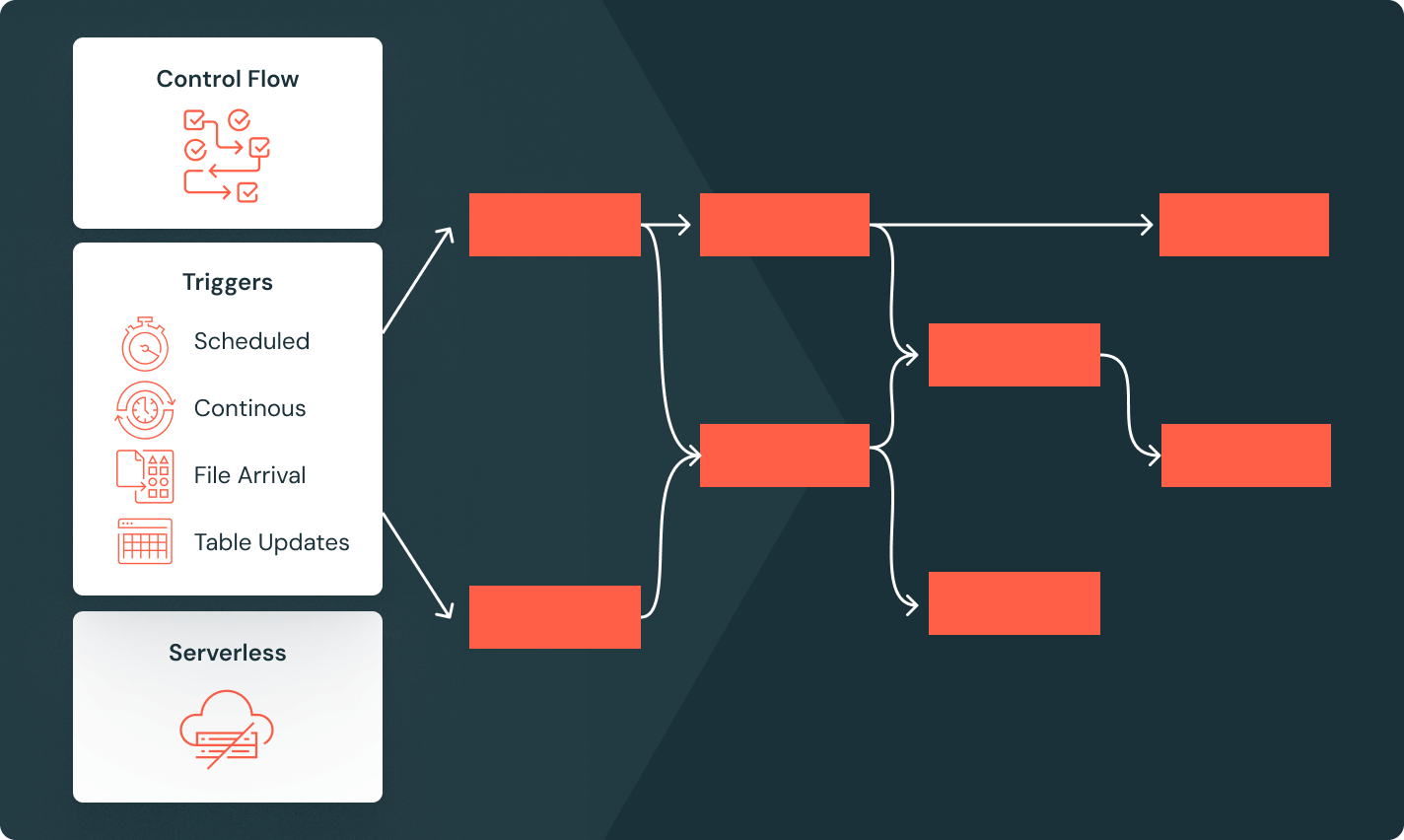

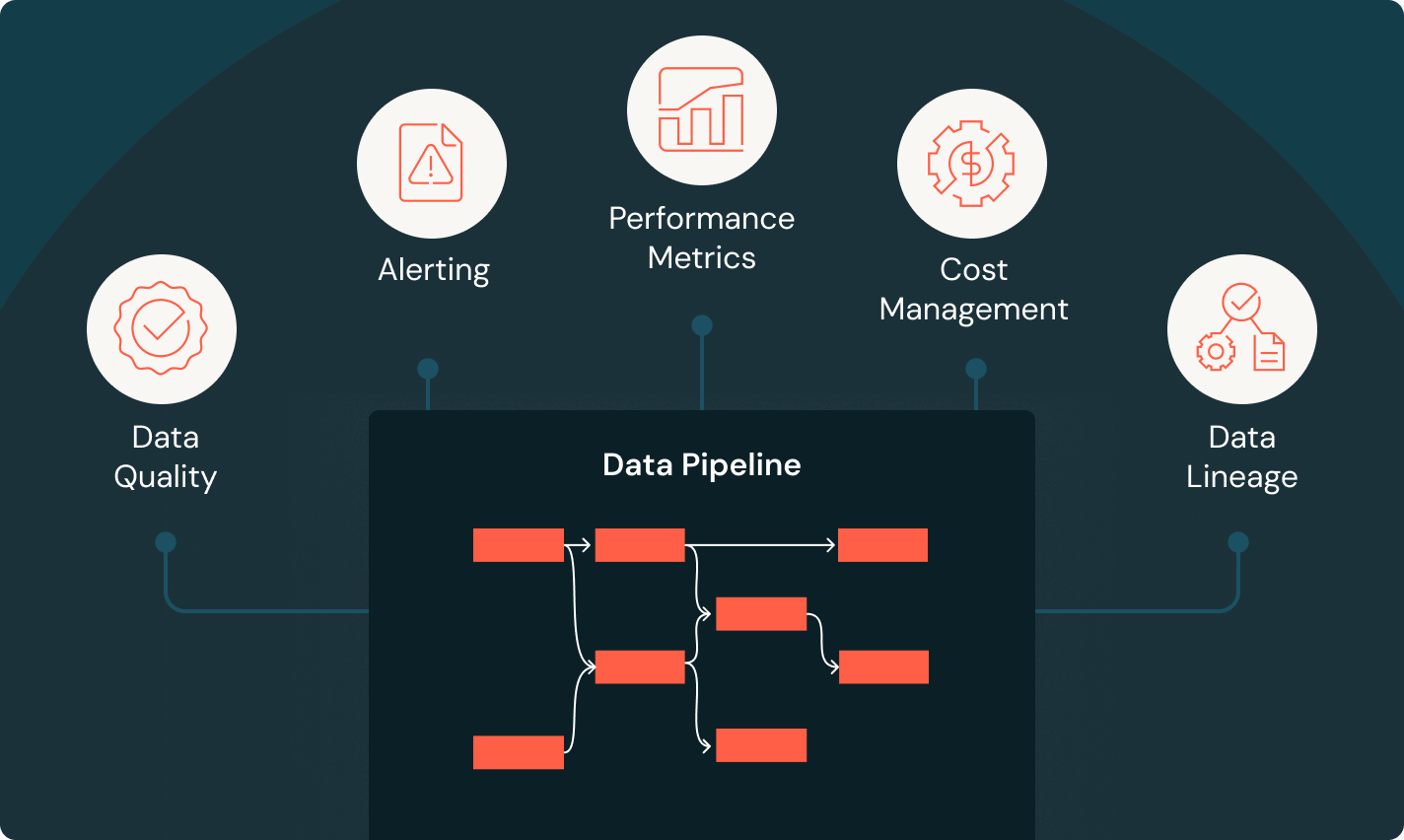

Lakeflow Jobs

Equip teams to better automate and orchestrate any ETL, analytics, and AI workflow with deep observability, high reliability, and broad task type support.

Unity Catalog

Seamlessly govern all your data assets with the industry’s only unified and open governance solution for data and AI, built into the Databricks Data Intelligence Platform.

Lakehouse Storage

Unify the data in your lakehouse, across all formats and types, for all your analytics and AI workloads.

Data Intelligence Platform

Explore the full range of tools available on the Databricks Data Intelligence Platform to seamlessly integrate data and AI across your organization.

Build reliable data pipelines

Transform raw data into high-quality gold tables

Implement ETL pipelines to filter, enrich, clean, and aggregate data so it’s ready for analytics, AI, and BI. Follow the medallion architecture to process data from bronze through silver to gold tables.
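The bronze, silver, and gold stages can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not Lakeflow code: the record fields (`country`, `amount`), the `REGION_BY_COUNTRY` lookup, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Any

# Hypothetical reference data used to enrich silver rows.
REGION_BY_COUNTRY = {"US": "AMER", "DE": "EMEA"}


def to_silver(bronze: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Clean, filter, and enrich raw bronze records."""
    silver = []
    for row in bronze:
        # Clean: parse the amount, skipping rows that cannot be parsed.
        try:
            amount = float(row["amount"])
        except (KeyError, TypeError, ValueError):
            continue
        # Clean: normalize the country code.
        country = str(row.get("country", "")).strip().upper()
        # Filter: keep positive amounts from known countries only.
        if amount <= 0 or country not in REGION_BY_COUNTRY:
            continue
        # Enrich: attach the region from the lookup table.
        silver.append(
            {
                "country": country,
                "region": REGION_BY_COUNTRY[country],
                "amount": amount,
            }
        )
    return silver


def to_gold(silver: list[dict[str, Any]]) -> dict[str, float]:
    """Aggregate silver rows into total amount per region."""
    totals: dict[str, float] = defaultdict(float)
    for row in silver:
        totals[row["region"]] += row["amount"]
    return dict(totals)


# Bronze: raw records as they arrive, including bad ones.
bronze = [
    {"country": " us ", "amount": "10.5"},
    {"country": "DE", "amount": "4"},
    {"country": "US", "amount": "oops"},
    {"country": "FR", "amount": "3"},
    {"country": "de", "amount": "-1"},
]

silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)
```

Keeping each stage as its own function mirrors the medallion layering: bronze stays untouched as the raw record of what arrived, while silver and gold can be recomputed from it if a rule changes.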
