Databricks Extends MLflow Model Registry with Enterprise Features

Published: April 15, 2020

by Mani Parkhe, Sue Ann Hong, Jules Damji and Clemens Mewald

We are excited to announce new enterprise-grade features for the MLflow Model Registry on Databricks. The Model Registry is now enabled by default for all customers using Databricks' Unified Analytics Platform.

In this blog post, we highlight the benefits of the Model Registry as a centralized hub for model management, show how data teams across organizations can share and control access to their models, and touch upon how you can use the Model Registry APIs for integration or inspection.

Central Hub for Collaborative Model Lifecycle Management

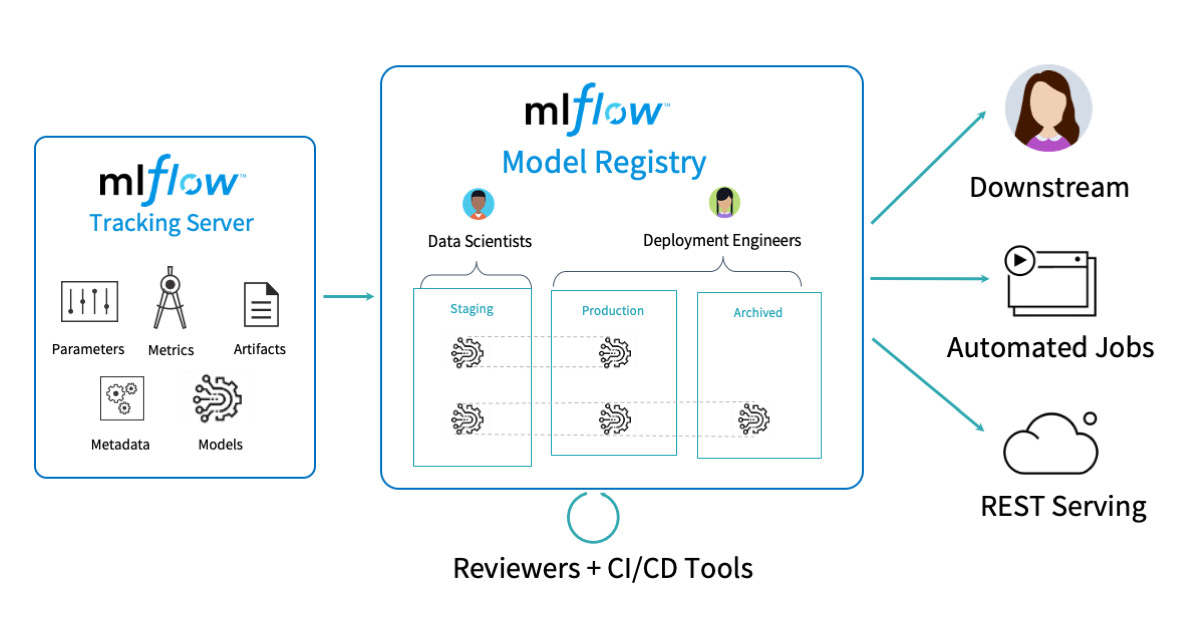

MLflow already has the ability to track metrics, parameters, and artifacts as part of experiments; package models and reproducible ML projects; and deploy models to batch or real-time serving platforms. Built on these existing capabilities, the MLflow Model Registry [AWS] [Azure] provides a central repository to manage the model deployment lifecycle.

Overview of the CI/CD tools, architecture and workflow of the MLflow centralized hub for model management.

One of the primary challenges for data scientists in a large organization is the absence of a central repository in which to collaborate, share code, and manage deployment stage transitions for models, model versions, and their history. A centralized registry for models across an organization affords data teams the ability to:

- discover registered models, their current stage in model development, and the experiment runs and code associated with each registered model

- transition models to deployment stages

- deploy different versions of a registered model in different stages, giving MLOps engineers the ability to deploy and test different model versions

- archive older models for posterity and provenance

- peruse model activities and annotations throughout a model’s lifecycle

- control granular access and permissions for model registrations, transitions, and modifications

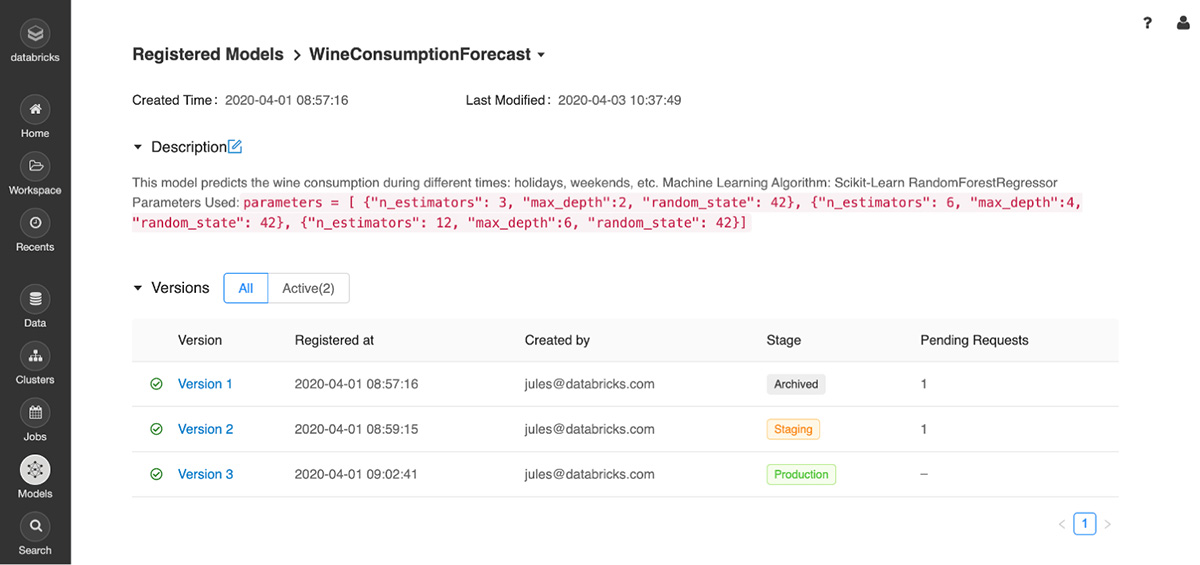

The Model Registry shows different versions in different stages throughout their lifecycle.

Access Control for Model Stage Management

In the current decade of data and machine learning innovation, models have become precious assets and essential to business strategies. Models are used to solve business problems ranging from predicting mechanical failures in machinery to forecasting power consumption or financial performance, and from fraud and anomaly detection to recommending related items for purchase.

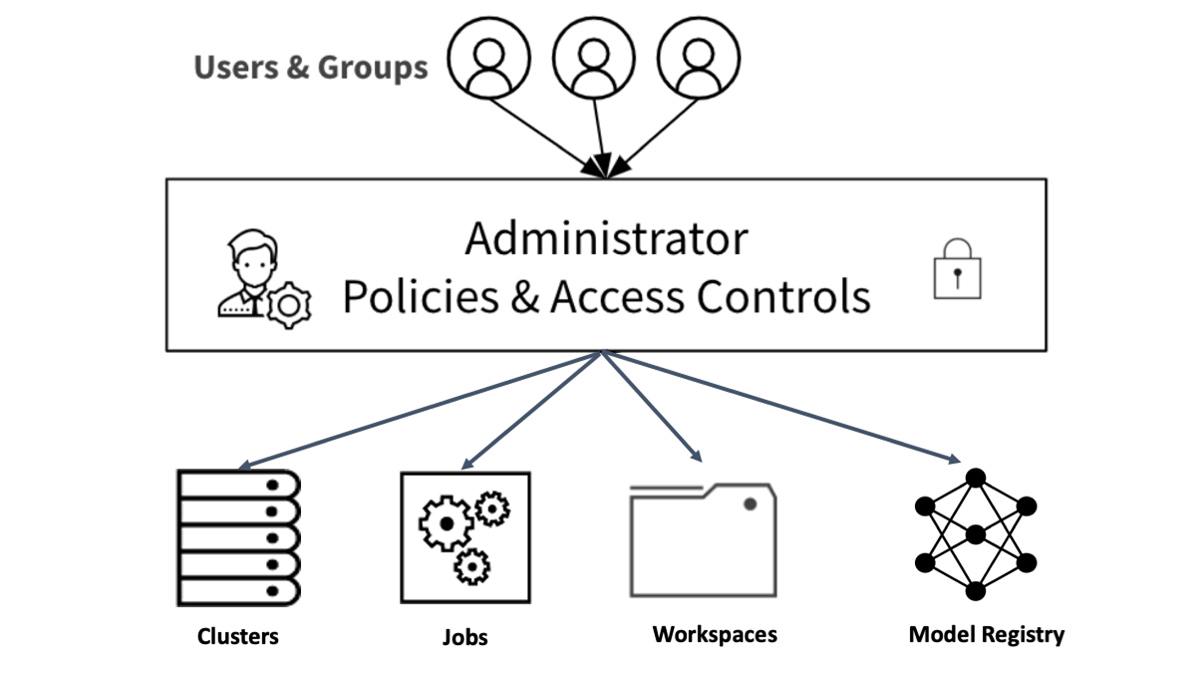

Just as with sensitive data, models that are trained on and score that data require an access control list (ACL) so that only authorized users can access them. Through a set of ACLs, data team administrators can grant granular access to operations on a registered model throughout its lifecycle, preventing inappropriate use of the models or unapproved transitions to production stages.

In the Databricks Unified Analytics Platform, you can now set permissions on individual registered models, following the general Databricks access control and permissions model [AWS] [Azure].

Access Control Policies for Databricks Assets.

From the Registered Models UI in the Databricks workspace, you can grant users and groups appropriate permissions on models in the registry, just as you can for notebooks or clusters.

Set permissions in the Model Registry UI using the ACLs

As shown in the table below, an administrator can assign four permission levels to models registered in the Model Registry: No Permissions, Read, Edit, and Manage. Depending on team members’ requirements to access models, you can grant permissions to individual users or groups for each of the abilities shown below.

| Ability | No Permissions | Read | Edit | Manage |
| --- | --- | --- | --- | --- |
| Create a model | X | X | X | X |
| View model and its model versions in a list | | X | X | X |
| View model's details, its versions and their details, stage transition requests, activities, and artifact download URIs | | X | X | X |
| Request stage transitions for a model version | | X | X | X |
| Add a new version to model | | | X | X |
| Update model and version description | | | X | X |
| Rename model | | | | X |
| Transition model version between stages | | | | X |
| Approve, reject, or cancel a model version stage transition request | | | | X |
| Modify permissions | | | | X |
| Delete model and model versions | | | | X |

Table for Model Registry Access, Abilities and Permissions
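The permission levels in the table are cumulative: each level keeps every ability of the level before it and adds more. The following hypothetical sketch encodes the table as a minimum-level lookup to make that structure explicit. The ability keys, the Permission enum, and the can() helper are illustrative names only, not a Databricks API. Real enforcement happens in the workspace.

```python
from enum import IntEnum


class Permission(IntEnum):
    """Hypothetical ordering of the four permission levels in the table."""
    NO_PERMISSIONS = 0
    READ = 1
    EDIT = 2
    MANAGE = 3


# Minimum level required for each ability, read off the table (illustrative keys).
MIN_LEVEL = {
    "create_model": Permission.NO_PERMISSIONS,
    "list_models": Permission.READ,
    "view_details": Permission.READ,
    "request_transition": Permission.READ,
    "add_version": Permission.EDIT,
    "update_description": Permission.EDIT,
    "rename_model": Permission.MANAGE,
    "transition_stage": Permission.MANAGE,
    "approve_transition": Permission.MANAGE,
    "modify_permissions": Permission.MANAGE,
    "delete_model": Permission.MANAGE,
}


def can(level: Permission, ability: str) -> bool:
    """Return True if a user holding `level` may perform `ability`."""
    return level >= MIN_LEVEL[ability]


def abilities(level: Permission) -> list:
    """List every ability granted at `level` (levels are cumulative)."""
    return [name for name, minimum in MIN_LEVEL.items() if level >= minimum]


print(can(Permission.READ, "request_transition"))  # True
print(can(Permission.EDIT, "transition_stage"))    # False
```

Under this encoding, giving a reviewer Read lets them request a transition. Only Manage lets them perform or approve it.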


How to Use the Model Registry

Typically, data scientists who use MLflow will conduct many experiments, each with a number of runs that track and log metrics and parameters. During the course of this development cycle, they will select the best run within an experiment and register its model with the registry. Thereafter, the registry lets data scientists track multiple versions over the course of model progression as they assign each version a lifecycle stage: Staging, Production, or Archived.

There are two ways to interact with the MLflow Model Registry [AWS] [Azure]. The first is the Model Registry UI integrated with the Databricks workspace, and the second is the MLflow Tracking Client APIs. The APIs give MLOps engineers programmatic access to registered models, so they can integrate with CI/CD tools for testing or inspect a model’s runs and metadata.

Model Registry UI Workflows

The Model Registry UI is accessible from the Databricks workspace. From the Model Registry UI, you can conduct the following activities as part of your workflow:

- Register a model from the Run's page

- Edit a model version description

- Transition a model version

- View model version activities and annotations

- Display and search registered models

- Delete a model version

Model Registry APIs Workflows

An alternative way to interact with the Model Registry is through the MLflow model flavor APIs or the MLflow Tracking Client API. With these APIs, you can perform on registered models the same operations enumerated above for the UI workflows. The APIs are useful for inspecting models or for integrating with external tools that need access to models, for example for nightly testing.

Load Models from the Model Registry

The Model Registry's APIs allow you to integrate with your choice of continuous integration and deployment (CI/CD) tools such as Jenkins to test your models. For example, your unit tests, with proper permissions granted as mentioned above, can load a version of a model for testing.

For example, a test can load two versions of the same model: version 3, which is in Staging, and the latest version in Production.

Now your Jenkins job has access to version 3 of the model, in Staging, for testing. To load the latest Production version instead, change the models:/ URI to reference the Production stage rather than a version number (models:/<name>/Production instead of models:/<name>/3).

Integrate with an Apache Spark Job

As well as integrating with your choice of CI/CD tools, you can load models from the registry and use them in your Spark batch jobs. A common scenario is to load a registered model as a Spark UDF.

Inspect, List or Search Information about Registered Models

At times, you may want to inspect a registered model programmatically to examine its MLflow entity information. For example, you can fetch a list of all registered models in the registry with a single method call and iterate over their version information.


With hundreds of models, it can be cumbersome to peruse or print the results returned from this call. A more efficient approach is to search for a specific model name and list its version details using the search_model_versions() method with a filter string such as "name='sk-learn-random-forest-reg-model'".

To sum up, the MLflow Model Registry is available by default to all Databricks customers. As a central hub for ML models, it lets data teams across large organizations collaborate on and share models, manage stage transitions, and annotate and examine model lineage. For controlled collaboration, administrators set policies with ACLs to grant permissions to access a registered model.

And finally, you can interact with the registry either using a Databricks workspace’s MLflow UI or MLflow APIs as part of your model lifecycle workflow.

Get Started with the Model Registry

Ready to get started or try it out for yourself? You can read more about the MLflow Model Registry and how to use it on AWS or Azure, or try an example notebook [AWS] [Azure].

If you are new to MLflow, read the open source MLflow quickstart with the latest release, MLflow 1.7. For production use cases, read about Managed MLflow on Databricks and get started with the MLflow Model Registry.

And if you’re interested in learning about the latest developments and best practices for managing the full ML lifecycle on Databricks with MLflow, join our interactive MLOps Virtual Event.
