Announcing Google’s Gemma 3 on Databricks

Build AI agents with Google's latest 12B multimodal model

Published: July 14, 2025

by Ying Chen, Megha Agarwal, Asfandyar Qureshi and Qi Zheng

Summary

- Gemma 3 12B, Google’s latest multimodal model, is now available on Databricks across all clouds

- Boost AI agents and inference apps with advanced language and vision capabilities, including document AI and visual Q&A

- Get started instantly via REST API, SQL, or AI Playground, with support for real-time and batch inference

We’re excited to announce that Google's Gemma 3 models are coming to Databricks, starting with the Gemma 3 12B model, which is now natively available across all clouds. Text capabilities are available today, with multimodal support rolling out soon.

Gemma 3 12B is optimized for enterprise workloads, striking the ideal balance between capability and computational efficiency. It excels at core use cases like document processing, content analysis, code generation, and conversational AI, making it a strong fit for production-grade applications.

Databricks has long been the platform where enterprises manage and analyze unstructured data at scale. As enterprises connect that data with large language models to build AI agents, the need for efficient, high-quality models at a reasonable price point has grown rapidly. Gemma 3 12B fills that gap by offering open, high-quality multimodal capabilities that power document AI and visual question answering use cases. Combined with Databricks’ unified platform for unstructured data and model development, teams can build and deploy production-grade AI faster and more affordably.

Enhanced quality and multimodal capabilities

Gemma 3 12B provides an attractive balance of size and quality:

- Broad capabilities: Gemma 3 12B has a large 128K context window that supports long documents as inputs. It also supports over 140 languages, making it ideal for global businesses.

- High quality for complex text-based tasks: Gemma 3 12B excels in language understanding and mathematical problem-solving. This makes it well-suited for enterprise applications that require sophisticated text analysis and logical reasoning.

- Document AI and visual question answering: The additional image modality unlocks use cases that draw on unstructured visual inputs, such as extracting information from tables in documents or from receipt photos.

Get started with Gemma 3 12B on Databricks

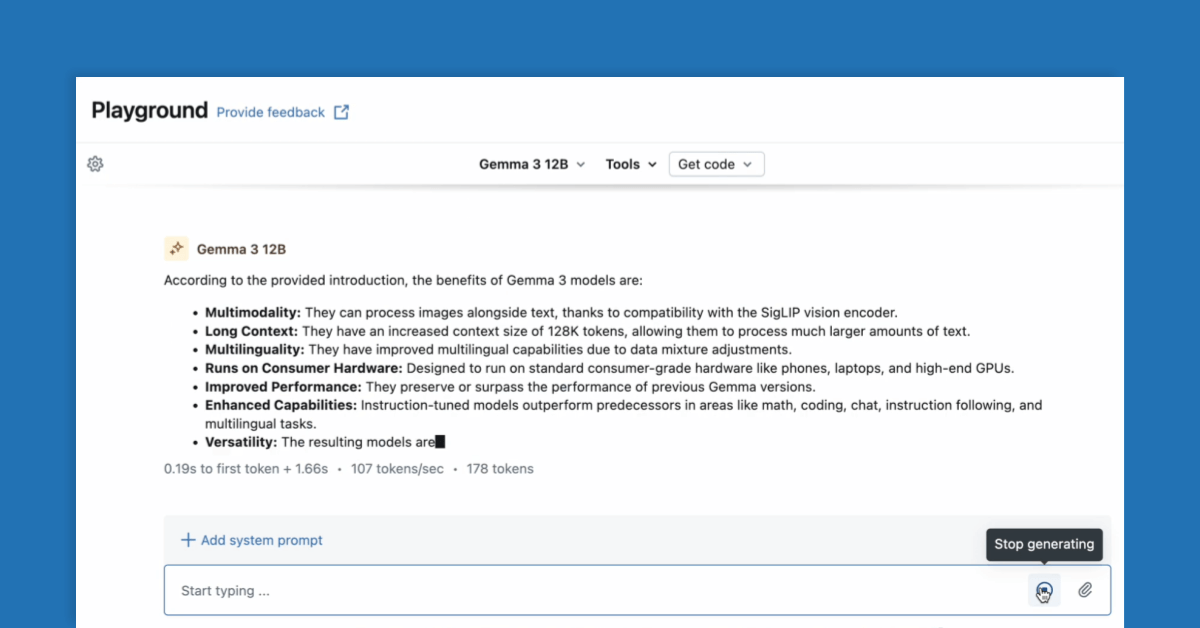

To get a sense of whether Gemma 3 12B would suit your use case, try it out in AI Playground.

You can query the model serving endpoint as well. For both AI Playground and the model serving endpoint, multimodal capabilities are coming soon.
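As a rough illustration of querying the endpoint over REST, here is a minimal Python sketch that uses only the standard library. It assumes the endpoint is named `databricks-gemma-3-12b` (check the Serving page in your workspace for the actual name), that you authenticate with a personal access token, and that the response follows an OpenAI-style chat completion shape. The helper names `build_request` and `ask` are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Assumed endpoint name; confirm the exact name on your workspace's Serving page.
ENDPOINT_NAME = "databricks-gemma-3-12b"


def build_request(host, token, prompt, endpoint=ENDPOINT_NAME, max_tokens=256):
    """Build (but do not send) a chat-style POST request for a serving endpoint."""
    url = f"{host.rstrip('/')}/serving-endpoints/{endpoint}/invocations"
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(host, token, prompt):
    """Send the request and return the text of the first choice."""
    request = build_request(host, token, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    # Assumes an OpenAI-style chat completion response shape.
    return body["choices"][0]["message"]["content"]
```

With a workspace URL and token at hand, a call such as `ask(host, token, "Summarize this contract clause: ...")` would return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect before any network call is made.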

Additionally, the newly released MLflow 3 allows you to evaluate the model more comprehensively across your specific datasets.

You can also run scalable batch inference by sending a SQL query to your table.
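A batch inference query of this kind might be assembled as in the sketch below, which builds a SQL statement around the `ai_query` function. The endpoint name `databricks-gemma-3-12b`, the helper `build_batch_query`, and the table and column names in the usage note are assumptions for illustration, not details from this announcement.

```python
import re

# Assumed endpoint name; confirm the exact name on your workspace's Serving page.
ENDPOINT_NAME = "databricks-gemma-3-12b"

# Accepts plain or dotted identifiers such as catalog.schema.table.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)*$")


def build_batch_query(table, text_column, instruction, endpoint=ENDPOINT_NAME):
    """Return a SQL statement that applies ai_query to every row of a table."""
    for name in (table, text_column):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"unexpected identifier: {name!r}")
    # Reject quotes and backslashes rather than trying to escape them.
    for literal in (instruction, endpoint):
        if "'" in literal or "\\" in literal:
            raise ValueError("string literals must not contain quotes or backslashes")
    return (
        f"SELECT {text_column},\n"
        f"       ai_query('{endpoint}', CONCAT('{instruction} ', {text_column})) AS response\n"
        f"FROM {table}"
    )
```

In a Databricks notebook, where a `spark` session is predefined, the statement could be run with `spark.sql(build_batch_query("main.demo.reviews", "review_text", "Classify the sentiment of this review:"))`. The same SQL text can also be pasted into the SQL editor directly.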

- Try out Gemma 3 12B in AI Playground

- Query the model serving API for real-time response

- Scale with serverless batch inference
