Skip to main content![Asfandyar Qureshi]()

![Training Highly Scalable Deep Recommender Systems on Databricks (Part 1)]()

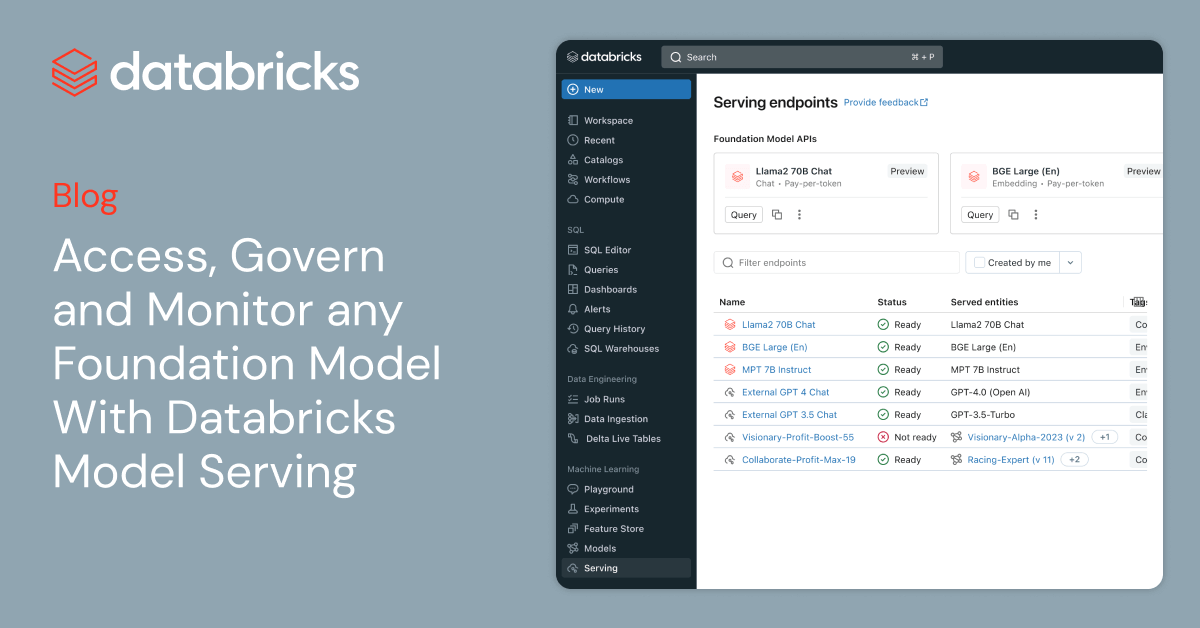

![foundation-model-seo-card]()

Asfandyar Qureshi

Asfandyar Qureshi's posts

Data Engineering

September 4, 2024 · 8 min read

Training Highly Scalable Deep Recommender Systems on Databricks (Part 1)

Engineering

December 11, 2023 · 6 min read