Transforming Supply Chain Management with AI Agents

Agents that Help Your Teams Make Effective Decisions in the Face of Complexity and Uncertainty

Summary

- Global supply chains need intelligent automation beyond traditional manual approaches.

- Combining AI with optimization reduces hallucination while providing transparent recommendations.

- Databricks offers comprehensive agent development, evaluation, and governance in one platform.

Efficiently managing supply chains has long been a top priority for many industries. Optimized supply chains let organizations deliver the right goods to the right place at the right time cost-effectively, meeting consumer demand while keeping margins high.

But uncertainty is always a factor, and in today’s global economy, frequent disruptions and delays, along with shifting trade ties, require supply chain organizations to plan for a range of contingencies and respond to challenges on short notice. To keep up, supply chain managers are increasingly seeking to augment their staff with agentic capabilities that can examine a wide range of scenarios and possible outcomes. Armed with these tools, organizations can help decision makers of varying degrees of expertise rapidly examine a situation and steer them toward the best possible solutions given the information at hand.

Enabling Expert Decision Making

This topic was recently addressed by David Simchi-Levi, a well-known MIT professor in supply chain and inventory management (whose groundbreaking 2015 work was the basis of our previous publication). In their paper, Professor Simchi-Levi and his co-authors proposed the use of large reasoning models to bridge the gap between business users and complex mathematical optimization tools. The authors suggest that an agentic system with access to appropriate data can be engaged in much the same way as managers interact with technical modeling experts. This approach democratizes supply chain technologies and reduces the need for widespread expertise to drive adoption.

From an AI engineering perspective, combining large reasoning models with operations research adds strong grounding and transparency. Although progress has been rapid, large language models are probabilistic in nature and still prone to hallucination. Mathematical optimization, on the other hand, is a more deterministic, fully transparent and explainable technique that can generate concrete and actionable plans. Allowing a large reasoning model to call the more deterministic, proven technique and interpret its outcomes reduces the risk of hallucination, which is critical for building trust with business stakeholders and providing more repeatable recommendations.

Today, we are in the early stages of a generational transformation in supply chain management driven by disruptive technology. As agents begin to collaborate across the business value chain (for example, a supply chain agent working with demand or production planning agents), with humans in the loop at critical junctions, we will see end-to-end decision flows that adapt in real time and align with shared objectives. That is when tremendous gains in service, cost, productivity, and resilience will become evident.

The remainder of this blog post provides an overview of how to build, evaluate, and deploy an agentic system for supply chain management on Databricks. We have verified that our code is fully portable—including both AI agents and their deterministic optimization tools—across all three major cloud providers (Azure, AWS and GCP). Two AI Engineers were able to create the first working prototype of this agent in eight hours, demonstrating the ease of building such systems on Databricks. The supporting notebooks and scripts are open-sourced and available here.

Demonstrating the Potential through Supply Chain Risk Analysis

To demonstrate the potential for agentic AI within supply chain scenarios, we will revisit our analysis of an extended supply chain, published in a previous blog post. While we focus on supplier risk in this particular scenario, the agentic capabilities demonstrated here apply broadly to supply chain scenarios where users can benefit from an agentic tool that helps them deal with complex information.

Let’s imagine a scenario where we are working for a global consumer packaged goods company and are responsible for keeping the supply chain running smoothly, adapting rapidly to various unforeseen changes. This means ensuring that products are manufactured on time, suppliers deliver materials reliably, inventory levels are managed effectively, and goods reach distribution centers and retail shelves without delay. Of course, saying this is much easier than doing it. On a daily basis, supply chain managers must handle unexpected demand fluctuations, production bottlenecks, supplier delays, transportation disruptions, and the constant pressure to reduce costs while maintaining service levels.

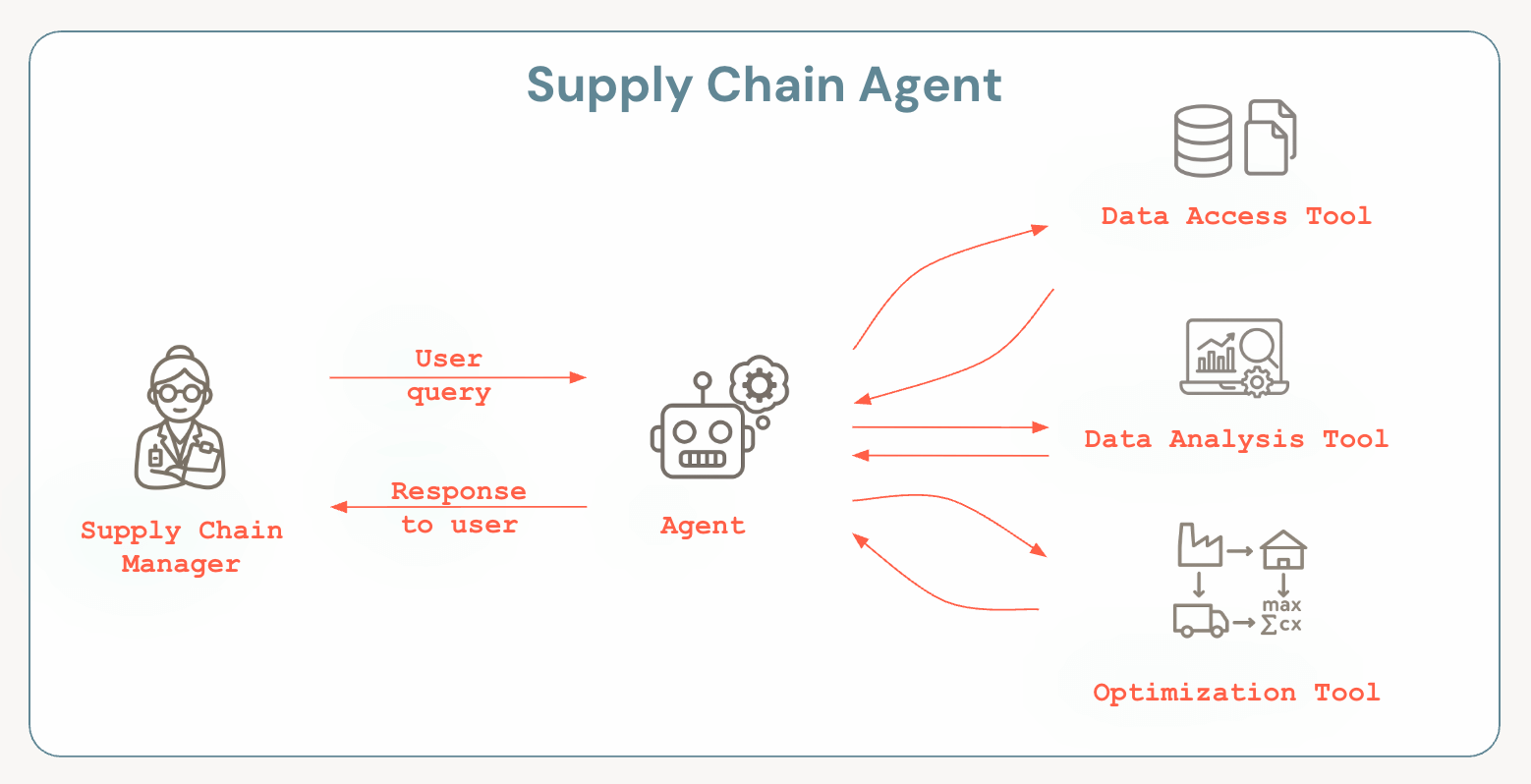

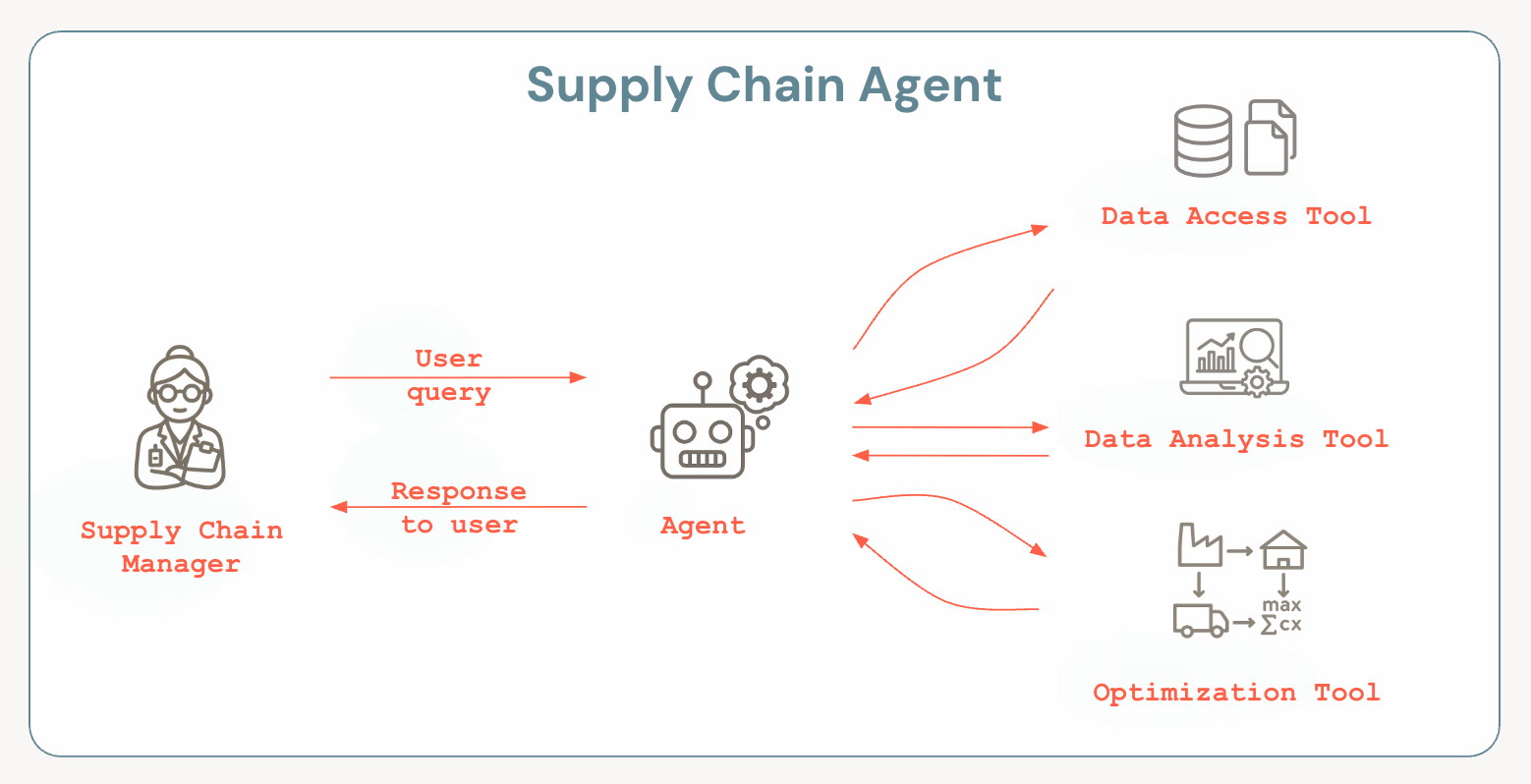

When faced with such challenges, more and more supply chain managers are turning to mathematical optimization tools to support their decision-making. Using the latest operational data, these tools run algorithms that recommend the best possible next steps to optimize key business metrics such as profit or revenue. The challenge, however, is that these tools are often complex and difficult to use without proper training. To address this, we develop a supply chain agent that assists managers by interpreting their intent expressed in plain language, accessing and analyzing data, applying optimization techniques, and ultimately delivering clear insights and actionable recommendations.

Build

Before writing any code, we first need to understand what kinds of questions the agent will be asked and what tools it will require to answer them. Below are some example questions we expect the agent to handle:

- What is the demand for finished product X?

- What materials are needed to produce finished product Y, and what is the current inventory status of these materials?

- Which finished products consume material Z?

- What happens if Supplier A is disrupted and takes four weeks to recover? What actions should I take to mitigate the impact?

- If Supplier B goes down, how long can we continue without losing demand, and what are the recommended actions?

To answer these questions, the agent must be able to access the latest operational data stored in a database. For the first three questions, it needs to interpret the intent and generate the correct database queries. These capabilities are represented as the Data Access Tool and Data Analysis Tool in the diagram above. Although there are many ways to implement such tools, Databricks Genie stands out as a compelling option, providing high-quality output, strong governance, and a low cost of implementation (i.e., no additional licenses).
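To make the first three questions concrete, here is a minimal sketch of the kind of queries a data access tool would need to generate. It uses an in-memory SQLite database rather than Genie, and the `bom` and `inventory` tables, their columns, and the sample rows are all hypothetical illustrations, not the schema used in the accompanying notebooks.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a tiny in-memory database with a hypothetical schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE bom (product TEXT, material TEXT, qty_per_unit REAL);
        CREATE TABLE inventory (material TEXT PRIMARY KEY, on_hand REAL);
        INSERT INTO bom VALUES
            ('shampoo', 'surfactant', 0.2),
            ('shampoo', 'bottle', 1.0),
            ('conditioner', 'bottle', 1.0),
            ('conditioner', 'silicone', 0.1);
        INSERT INTO inventory VALUES
            ('surfactant', 500), ('bottle', 12000), ('silicone', 80);
        """
    )
    return conn


def products_consuming(conn: sqlite3.Connection, material: str) -> list[str]:
    """Answer: which finished products consume this material?"""
    rows = conn.execute(
        "SELECT DISTINCT product FROM bom WHERE material = ? ORDER BY product",
        (material,),
    ).fetchall()
    return [row[0] for row in rows]


def materials_with_inventory(conn: sqlite3.Connection, product: str) -> list[tuple]:
    """Answer: which materials does this product need, and what is on hand?"""
    return conn.execute(
        """
        SELECT b.material, b.qty_per_unit, i.on_hand
        FROM bom AS b
        JOIN inventory AS i ON i.material = b.material
        WHERE b.product = ?
        ORDER BY b.material
        """,
        (product,),
    ).fetchall()


if __name__ == "__main__":
    db = build_demo_db()
    print(products_consuming(db, "bottle"))
    print(materials_with_inventory(db, "shampoo"))
```

In a real deployment the agent would translate the manager's plain-language question into queries like these against governed tables; the parameterized form shown here is one way to keep user-supplied values out of the SQL text.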

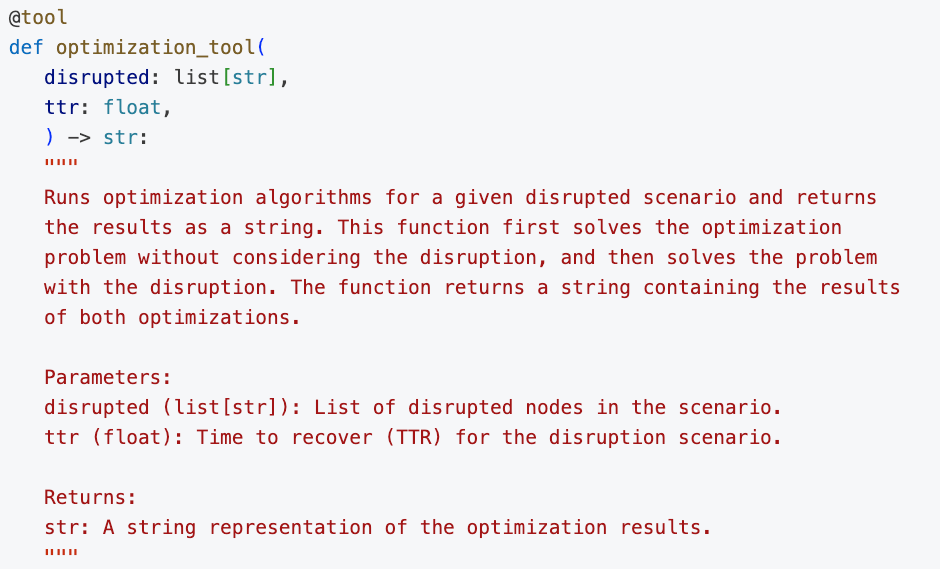

For the last two questions, the agent requires a tool that performs mathematical optimization, shown as the Optimization Tool above. The objectives and constraints of the formulation must reflect real business conditions; for this blog post, we adopt the flexible formulation proposed by Professor David Simchi-Levi in his research. When defining this tool, it is important to provide a clear description of when and how it should be used, enabling the agent to determine the right situations in which to apply it. These tools can then be defined and registered in Unity Catalog and exposed through an MCP Server to ensure better discoverability and governance.
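The sketch below illustrates the shape of such a tool, with the "when and how to use it" guidance written into the docstring. It is deliberately a simplified stand-in: instead of the optimization formulation from the research, it only computes how many weeks of inventory cover remain when one supplier stops delivering. The `MaterialPosition` type, the function names, and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaterialPosition:
    """One material's supply position (hypothetical structure)."""
    material: str
    supplier: str
    on_hand: float       # units currently in inventory
    weekly_usage: float  # units consumed per week at full production


def time_to_survive(positions: list[MaterialPosition], supplier: str) -> float:
    """Weeks production can continue at full rate if `supplier` stops delivering.

    Use this tool when the user asks how long operations can continue after a
    specific supplier is disrupted. Do not use it for plain data lookups such
    as current demand or inventory levels.
    """
    covers = [
        p.on_hand / p.weekly_usage
        for p in positions
        if p.supplier == supplier and p.weekly_usage > 0
    ]
    # The scarcest material sets the limit; no exposure means no limit.
    return min(covers) if covers else float("inf")


def weeks_of_lost_production(
    positions: list[MaterialPosition], supplier: str, time_to_recover_weeks: float
) -> float:
    """Weeks of production lost if the supplier needs this long to recover."""
    return max(0.0, time_to_recover_weeks - time_to_survive(positions, supplier))


if __name__ == "__main__":
    positions = [
        MaterialPosition("surfactant", "Supplier A", on_hand=500, weekly_usage=100),
        MaterialPosition("bottle", "Supplier A", on_hand=12000, weekly_usage=4000),
        MaterialPosition("silicone", "Supplier B", on_hand=80, weekly_usage=10),
    ]
    print(time_to_survive(positions, "Supplier A"))
    print(weeks_of_lost_production(positions, "Supplier A", time_to_recover_weeks=4))
```

A full optimization tool would also decide how to reallocate scarce materials across products; this sketch only shows the deterministic, inspectable nature of the calculation that the agent interprets.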

Finally, we need a large language model with strong reasoning capabilities. The model must be able to understand the supply chain manager’s questions, plan how to obtain the answer, execute the plan, and then interpret and present the results clearly. On Databricks, we can easily experiment with a range of state-of-the-art reasoning models through the Foundation Model APIs (FMAPI) and choose the one that best fits our needs. Users can switch between the latest Claude series and the Llama families with a single line of code. Mosaic AI Gateway, built on top of Mosaic AI Model Serving, provides a unified, secure, and governed interface for accessing and managing these models. Check out the notebook to see how these components can be assembled to build a powerful agent.

Alternatively, users can leverage Agent Bricks to build an entire system with a no-code or low-code approach. Agent Bricks automatically optimizes many parameters, including the prompts in the agentic system that shape the final quality of the response. Once the agent is deployed in production, users can also provide live feedback to continuously improve response quality and adapt the agent to evolving requirements.

Evaluate

Quality is non-negotiable when deploying an agentic system in a real-world environment. We must be able to evaluate and clearly understand how well the agent performs. The process starts with preparing an evaluation dataset containing questions similar to those users will ask in production. Each question is paired with expected outcomes, such as an expected response. In an agentic system, however, it is equally (if not more) important to assess whether the agent is using the right tools appropriately. On Databricks, we can use Mosaic AI Agent Evaluation to examine every detail of the agent’s execution. We see eval-driven development as a fundamental step on the road to achieving AgentOps and ensuring our agents behave in accordance with their programming; a similar maturity arc was seen with test-driven development (TDD) and behaviour-driven development (BDD), which enabled the DevOps movement in software development over 20 years ago. Take a look at this notebook to see how we validate both correct tool usage and parameter settings.
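As an illustration of what checking tool usage and parameter settings can look like, here is a small framework-free sketch. It does not use the Mosaic AI Agent Evaluation API; the `ToolCall` type, the dataset layout, and the `run_agent` callable (which returns the tool calls the agent made for a question) are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolCall:
    """A single tool invocation: tool name plus its arguments (hypothetical)."""
    name: str
    arguments: dict = field(default_factory=dict)


def score_tool_usage(expected: list[ToolCall], actual: list[ToolCall]) -> dict:
    """Check one example: same tools in the same order, then same arguments."""
    right_tools = [c.name for c in expected] == [c.name for c in actual]
    right_params = right_tools and all(
        e.arguments == a.arguments for e, a in zip(expected, actual)
    )
    return {"right_tools": right_tools, "right_params": right_params}


def evaluate(dataset: list[dict], run_agent: Callable[[str], list[ToolCall]]) -> dict:
    """Aggregate tool and parameter accuracy over an evaluation dataset."""
    if not dataset:
        raise ValueError("evaluation dataset is empty")
    results = [
        score_tool_usage(example["expected_calls"], run_agent(example["question"]))
        for example in dataset
    ]
    total = len(results)
    return {
        "tool_accuracy": sum(r["right_tools"] for r in results) / total,
        "param_accuracy": sum(r["right_params"] for r in results) / total,
    }


if __name__ == "__main__":
    dataset = [
        {
            "question": "What happens if Supplier A takes four weeks to recover?",
            "expected_calls": [
                ToolCall("optimization", {"supplier": "A", "recovery_weeks": 4})
            ],
        },
        {
            "question": "Which finished products consume material Z?",
            "expected_calls": [ToolCall("data_access", {"material": "Z"})],
        },
    ]

    def fake_agent(question: str) -> list[ToolCall]:
        # Stand-in for a real agent: picks the right tool, wrong week count.
        if "Supplier A" in question:
            return [ToolCall("optimization", {"supplier": "A", "recovery_weeks": 2})]
        return [ToolCall("data_access", {"material": "Z"})]

    print(evaluate(dataset, fake_agent))
```

Separating the tool-selection score from the parameter score helps distinguish an agent that misunderstands the question from one that understands it but passes the wrong values.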

Deploy

Once we are satisfied with our agent’s quality, we can deploy it on Databricks Model Serving and use its endpoint to send requests and receive responses. In our code repository, we show how to integrate this endpoint in a frontend application built using Databricks Apps and Lakebase for history and session management.
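For orientation, a request to a served agent might be constructed roughly as follows. This is a sketch under assumptions: the workspace URL, endpoint name, and token are placeholders, and the chat-style `messages` payload is one common shape that may differ from what a particular agent interface expects. The code only builds the request; the line that sends it is left commented out.

```python
import json
import urllib.request


def build_invocation_request(
    workspace_url: str, endpoint_name: str, token: str, question: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request to a model serving endpoint."""
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # Assumed payload shape: a chat-style list of messages.
    payload = {"messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_invocation_request(
        "https://example.cloud.databricks.com",  # placeholder workspace URL
        "supply-chain-agent",                    # hypothetical endpoint name
        "<personal-access-token>",               # placeholder credential
        "If Supplier B goes down, how long can we continue without losing demand?",
    )
    print(request.full_url)
    # To actually call the endpoint, uncomment the following lines:
    # with urllib.request.urlopen(request) as response:
    #     print(json.load(response))
```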

With MLflow Tracing integrated directly into the UI, inputs, outputs, and user feedback are automatically captured in context, making it easier to collate feedback and continuously improve performance.

The main benefits of building and hosting an end-to-end application on Databricks are easy-to-set-up user authorization and the ability to use Unity Catalog to secure and govern all assets in one place. There is no need to stitch together separate services, which often creates security and governance bottlenecks. Databricks Apps is flexible and supports multiple languages and frameworks, so you can build the front end you want. See the code for a working front end for your supply chain agent.

Discussion

A snapshot of a sample conversation between the supply chain manager and the agent is shown below. In about 15 seconds, the manager receives detailed recommendations on how to mitigate risk and protect the bottom line for a specific scenario. The speed and accuracy of the agent’s response are remarkable. Every number in the response is derived directly from the outputs of the optimization algorithm, as the agent has access to the decision variables, and the recommendations are based on interpreting these results. If humans were presented with the same volume of information, providing timely responses would be extremely difficult. For large language models, however, this is effortless.

The transparent and explainable responses from the agent are only possible because the tools it relies on are themselves transparent and explainable. These are essential requirements for business users to adopt the technology. We believe that the combination of probabilistic LLMs with deterministic physical models will become the standard architecture for mission-critical applications such as supply chain management.

Why Databricks?

Databricks provides a unique platform for building agentic systems that meet the highest quality standards. With Mosaic AI Agent Evaluation, teams can rigorously test and benchmark their agents using production-like evaluation datasets, ensuring not only accurate responses but also proper tool usage and parameter handling. This comprehensive evaluation capability gives business stakeholders confidence that the agent’s recommendations are trustworthy, actionable, and aligned with real-world requirements.

Equally important, Databricks ensures enterprise-grade security and governance through Unity Catalog. All tools, datasets, and models can be registered, versioned, and managed in a unified governance layer, making it straightforward to track usage and maintain compliance.

Beyond governance, Databricks offers a complete and flexible environment for agent development. Users can collect the operational data needed to build an agent using Lakeflow Connect, via Delta Sharing, or through Databricks’ rich partner ecosystem (for example, Everstream Analytics). They can also easily experiment with state-of-the-art language models, integrate optimization engines, and connect external tools through open interfaces.

This combination of quality, security, and completeness makes Databricks the ideal platform for building and deploying agentic solutions that are both powerful and ready for production.

Moving Forward with the Agentic Supply Chain

The growing complexity and unpredictability of global supply chains demand intelligent and practical solutions. Traditional approaches based on manual decision-making or expert-driven modeling are not sufficient. By combining probabilistic language models with deterministic mathematical optimization and real-time data access, organizations can empower supply chain managers to make faster, more accurate, and more scalable decisions. This reduces the traditional dependency on experts and the delays that come with it, which in many cases cost organizations millions of dollars per minute.

Databricks provides the foundation to bring this vision to life. From building and testing AI agents, to ensuring trust through Mosaic AI Agent Evaluation, to governing data and models with Unity Catalog, Databricks offers a secure and comprehensive environment for deploying agentic systems. Its flexibility to integrate external tools and optimization engines makes it possible to adapt solutions to each company’s unique needs, and the resulting solutions are portable across cloud providers.

The result is an agentic system that not only responds to questions in plain language but also grounds its recommendations in transparent and explainable logic. This combination reduces the risks of hallucination, builds trust with business stakeholders, and accelerates the adoption of AI in mission-critical domains like supply chain management. With Databricks, companies can move beyond experiments and deploy agentic systems that deliver real business value today.

Download the code to explore how to build, evaluate, and deploy your supply chain management agent on Databricks.
