What’s New: Lakeflow Jobs Provides More Efficient Data Orchestration

Lakeflow Jobs now comes with a new set of capabilities and design updates built to improve workflow orchestration and pipeline efficiency.

Summary

- Discover the newest UI/UX enhancements for Lakeflow Jobs, which give users a cleaner and more intuitive interface.

- Learn about the latest added capabilities that provide additional flexibility and control over workflows.

- Find out more about Lakeflow Connect in Jobs, a new and more centralized way to ingest data into your pipeline.

Over the past few months, we’ve introduced exciting updates to Lakeflow Jobs (formerly known as Databricks Workflows) to improve data orchestration and optimize workflow performance.

For newcomers, Lakeflow Jobs is the built-in orchestrator for Lakeflow, a unified and intelligent solution for data engineering with streamlined ETL development and operations built on the Data Intelligence Platform. Lakeflow Jobs is the most trusted orchestrator for the Lakehouse and production-grade workloads, with over 14,600 customers, 187,000 weekly users, and 100 million jobs run every week.

From UI improvements to more advanced workflow control, check out the latest in Databricks’ native data orchestration solution and discover how data engineers can streamline their end-to-end data pipeline experience.

Refreshed UI for a more focused user experience

We’ve redesigned our interface to give Lakeflow Jobs a fresh and modern look. The new compact layout allows for a more intuitive orchestration journey. The task palette now offers shortcuts and a search button to help users find and access their tasks more easily, whether they are Lakeflow Pipelines, AI/BI dashboards, notebooks, SQL, or other task types.

For monitoring, customers can now find their jobs’ execution times in the right panel under Job and Task run details, making it easier to track processing times and quickly identify data pipeline issues.

We’ve also improved the sidebar by letting users choose which sections (Job details, Schedules & Triggers, Job parameters, etc.) to hide or keep open, making their orchestration interface cleaner and more relevant.

Overall, Lakeflow Jobs users can expect a more streamlined, focused, and simplified orchestration workflow. The new layout is currently available to users who have opted into the preview and enabled the toggle on the Jobs page.

More controlled and efficient data flows

Our orchestrator is constantly being enhanced with new features. The latest update introduces advanced controls for data pipeline orchestration, giving users greater command over their workflows for more efficiency and optimized performance.

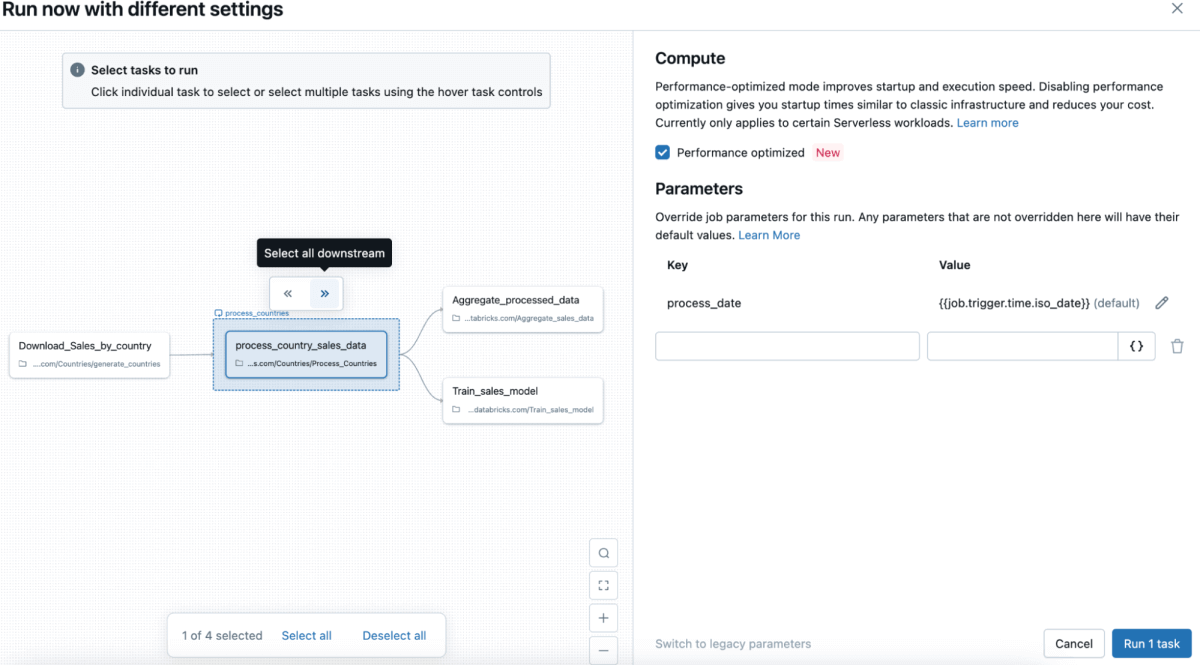

Partial runs allow users to select which tasks to execute without running the rest of the job. Previously, testing individual tasks required running the entire job, which could be slow, computationally intensive, and costly. Now, on the Jobs & Pipelines page, users can select "Run now with different settings" and choose only the tasks they want to execute. Similarly, Partial repairs enable faster debugging by allowing users to rerun individual failed tasks without rerunning the entire job.

With more control over their run and repair flows, customers can speed up development cycles, improve job uptime, and reduce compute costs. Both Partial runs and repairs are generally available in the UI and the Jobs API.
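For readers who prefer the API to the UI, here is a minimal sketch of what the request bodies for a partial run and a partial repair might look like. It only builds the JSON payloads and does not call any endpoint. It assumes the run-now request accepts an `only` list of task keys and the repair request accepts a `rerun_tasks` list; the job ID, run ID, and task keys are hypothetical, so check the field names against the current Jobs API reference before relying on them.

```python
from __future__ import annotations

import json
from typing import Any


def partial_run_payload(job_id: int, task_keys: list[str]) -> dict[str, Any]:
    """Build a run-now request body that executes only the listed tasks."""
    if not task_keys:
        raise ValueError("select at least one task for a partial run")
    # Assumption: `only` restricts the run to the given task keys.
    return {"job_id": job_id, "only": list(task_keys)}


def partial_repair_payload(run_id: int, failed_task_keys: list[str]) -> dict[str, Any]:
    """Build a repair request body that reruns only the listed failed tasks."""
    if not failed_task_keys:
        raise ValueError("select at least one task to repair")
    # Assumption: `rerun_tasks` names the tasks to rerun within an existing run.
    return {"run_id": run_id, "rerun_tasks": list(failed_task_keys)}


# Hypothetical IDs and task keys, for illustration only.
run_body = partial_run_payload(1234, ["transform_orders"])
repair_body = partial_repair_payload(5678, ["load_customers", "publish_report"])

print(json.dumps(run_body, indent=2))
print(json.dumps(repair_body, indent=2))
```

Keeping payload construction separate from the HTTP call makes it easy to review exactly which tasks will run before any compute is started.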

To all SQL fans out there, we have some excellent news for you! With this latest round of updates, customers can use the outputs of SQL queries as parameters in Lakeflow Jobs to orchestrate their data. This makes it easier for SQL developers to pass parameters between tasks and share context within a job, resulting in more cohesive data pipeline orchestration. This feature is also now generally available.
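To make the idea concrete, the sketch below builds a two-task job fragment in which a downstream notebook task receives a value from an upstream SQL task. It assumes SQL task output can be referenced with a dynamic value of the form `{{tasks.<task_key>.output.first_row.<column>}}`; the task keys, column name, warehouse ID, query ID, and notebook path are all hypothetical, and the exact reference syntax should be confirmed in the documentation.

```python
from __future__ import annotations

from typing import Any


def sql_output_ref(task_key: str, column: str) -> str:
    """Reference a column in the first row of an upstream SQL task's output."""
    # Assumption: SQL outputs are exposed as tasks.<task_key>.output.first_row.<column>.
    return "{{tasks." + task_key + ".output.first_row." + column + "}}"


def build_tasks(warehouse_id: str, query_id: str, notebook_path: str) -> list[dict[str, Any]]:
    """Return a SQL task and a notebook task that consumes the SQL task's output."""
    upstream = {
        "task_key": "get_latest_date",
        "sql_task": {"warehouse_id": warehouse_id, "query": {"query_id": query_id}},
    }
    downstream = {
        "task_key": "load_partition",
        "depends_on": [{"task_key": "get_latest_date"}],
        "notebook_task": {
            "notebook_path": notebook_path,
            # The reference is resolved by the orchestrator at run time.
            "base_parameters": {"load_date": sql_output_ref("get_latest_date", "max_date")},
        },
    }
    return [upstream, downstream]


# Hypothetical identifiers, for illustration only.
tasks = build_tasks("wh-example", "query-example", "/Workspace/example/load_partition")
print(tasks[1]["notebook_task"]["base_parameters"]["load_date"])
```

The downstream task declares a dependency on the SQL task so that the referenced output exists by the time the parameter is resolved.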

Quick-start with Lakeflow Connect in Jobs

In addition to these enhancements, we’re also making it fast and easy to ingest data from within Lakeflow Jobs by more tightly integrating Jobs with Lakeflow Connect, Lakeflow’s managed, reliable data ingestion solution with built-in connectors.

Customers can already orchestrate ingestion pipelines that originate from Lakeflow Connect, using any of the fully managed connectors (e.g., Salesforce, Workday) or directly from notebooks. Now, with Lakeflow Connect in Jobs, customers can create an ingestion pipeline directly from two entry points in their Jobs interface, all within a point-and-click environment. Since ingestion is often the first step in ETL, this integration with Lakeflow Connect enables customers to consolidate and streamline their data engineering experience from end to end.
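The point-and-click flow produces a job in which ingestion runs first and transformation follows. As a rough sketch of that shape, the snippet below assembles a job definition with an ingestion pipeline task and a dependent notebook task, then derives a run order from the dependencies. It assumes the pipeline is attached through a `pipeline_task` entry with a `pipeline_id`; the job name, pipeline ID, notebook path, and the `run_order` helper are hypothetical and only illustrate the dependency structure.

```python
from __future__ import annotations

from typing import Any


def ingestion_then_transform_job(name: str, pipeline_id: str, notebook_path: str) -> dict[str, Any]:
    """Build a job definition: an ingestion pipeline followed by a transform notebook."""
    ingest = {"task_key": "ingest", "pipeline_task": {"pipeline_id": pipeline_id}}
    transform = {
        "task_key": "transform",
        "depends_on": [{"task_key": "ingest"}],
        "notebook_task": {"notebook_path": notebook_path},
    }
    return {"name": name, "tasks": [ingest, transform]}


def run_order(job: dict[str, Any]) -> list[str]:
    """Return task keys in an order that respects every depends_on entry."""
    remaining = {
        t["task_key"]: {d["task_key"] for d in t.get("depends_on", [])}
        for t in job["tasks"]
    }
    order: list[str] = []
    while remaining:
        # A task is ready once all of its dependencies have been scheduled.
        ready = sorted(k for k, deps in remaining.items() if deps <= set(order))
        if not ready:
            raise ValueError("cyclic or unknown dependency")
        for key in ready:
            order.append(key)
            del remaining[key]
    return order


# Hypothetical identifiers, for illustration only.
job = ingestion_then_transform_job("daily_sales", "pipeline-example", "/Workspace/example/transform")
print(run_order(job))
```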

Lakeflow Connect in Jobs is now generally available for customers. Learn more about this and other recent Lakeflow Connect releases.

A single orchestration for all your workloads

We are consistently innovating on Lakeflow Jobs to offer our customers a modern and centralized orchestration experience for all their data needs across the organization. More features are coming to Jobs: we’ll soon unveil a way for users to trigger jobs based on table updates, add support for system tables, and expand our observability capabilities, so stay tuned!

For those who want to keep learning about Lakeflow Jobs, check out our on-demand sessions from our Data+AI Summit and explore Lakeflow in a variety of use cases, demos, and more!

- Learn more about Lakeflow Jobs

- Create your first job with our Quickstart Guide

- Enable the new UI using the toggle on the Jobs & Pipelines page (preview customers only)

- Couldn’t make it to the Data+AI Summit? Watch Lakeflow Jobs sessions on demand
