What’s New: Zerobus and Other Announcements Improve Data Ingestion for Lakeflow Connect

Lakeflow Connect expands its coverage of data sources, and Zerobus introduces a high-throughput direct write API with low latency

Summary

- Lakeflow Connect expands the breadth of data ingestion sources, including new query-based connectors for databases.

- Zerobus is a direct write API that simplifies ingestion for IoT, clickstream, telemetry and other similar use cases.

- Lakeflow Connect in Jobs provides a seamless, intuitive integration between Lakeflow Connect and Lakeflow Jobs, helping users save time with a unified end-to-end experience.

Everything starts with good data, so ingestion is often your first step to unlocking insights. However, ingestion presents challenges, like ramping up on the complexities of each data source, keeping tabs on those sources as they change, and governing all of this along the way.

Lakeflow Connect makes efficient data ingestion easy, with a point-and-click UI, a simple API, and deep integrations with the Data Intelligence Platform. Last year, more than 2,000 customers used Lakeflow Connect to unlock value from their data.

In this blog, we’ll review the basics of Lakeflow Connect and recap the latest announcements from the 2025 Data + AI Summit.

Ingest all your data in one place with Lakeflow Connect

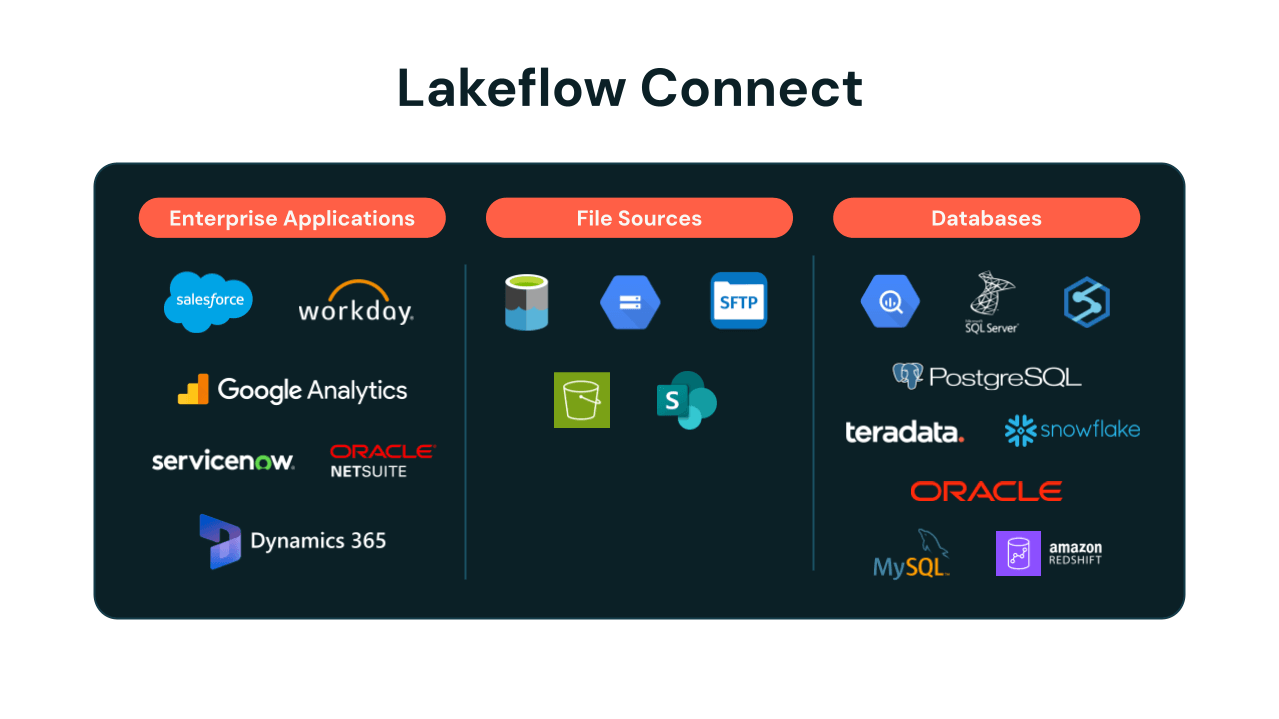

Lakeflow Connect offers simple ingestion connectors for applications, databases, cloud storage, message buses, and more. Under the hood, ingestion is efficient, with incremental updates and optimized API usage. As your managed pipelines run, we take care of schema evolution, seamless third-party API upgrades, and comprehensive observability with built-in alerts.

Data + AI Summit 2025 Announcements

At this year’s Data + AI Summit, Databricks announced the General Availability of Lakeflow, the unified approach to data engineering across ingestion, transformation, and orchestration. As part of this, Lakeflow Connect introduced Zerobus, a direct write API that simplifies ingestion for IoT, clickstream, telemetry and other similar use cases. We also expanded the breadth of supported data sources with more built-in connectors for enterprise applications, file sources, databases, and data warehouses, along with improvements for ingesting data from cloud object storage.

Zerobus: a new way to push event data directly to your lakehouse

We announced Zerobus, a new approach for pushing event data directly to your lakehouse that brings ingestion closer to the data source. By eliminating intermediate data hops and reducing operational burden, Zerobus provides high-throughput direct writes with low latency, delivering near real-time performance at scale.

Previously, some organizations used message buses like Kafka as a transport layer to the lakehouse. Kafka offers a durable, low-latency way for data producers to send data, and it’s a popular choice when writing to multiple sinks. However, it also adds complexity and cost, as well as the burden of managing another copy of the data, so it’s inefficient when your sole destination is the lakehouse. Zerobus provides a simpler solution for these cases.

Joby Aviation is already using Zerobus to directly push telemetry data into Databricks.

“Joby is able to use our manufacturing agents with Zerobus to push gigabytes a minute of telemetry data directly to our lakehouse, accelerating the time to insights, all with Databricks Lakeflow and the Data Intelligence Platform.” – Dominik Müller, Factory Systems Lead, Joby Aviation, Inc.

As part of Lakeflow Connect, Zerobus is also unified with the Databricks Platform, so you can leverage broader analytics and AI capabilities right away. Zerobus is currently in Private Preview; reach out to your account team for early access.

🎥 Watch and learn more about Zerobus: Breakout session at the Data + AI Summit, featuring Joby Aviation, “Lakeflow Connect: eliminating hops in your streaming architecture”


Lakeflow Connect expands ingestion capabilities and data sources

New fully managed connectors continue to roll out across various release stages (see the full list below). These include Google Analytics and ServiceNow, as well as SQL Server, our first database connector. All three are currently in Public Preview, with General Availability coming soon.

We have also continued to invest in Auto Loader, our existing ingestion solution for customers who want more customization options. Auto Loader incrementally and efficiently processes new data files as they arrive in cloud storage. We’ve released major cost and performance improvements for Auto Loader, including 3X faster directory listings and automatic cleanup of processed source files with “CleanSource,” both now generally available, along with smarter, more cost-effective file discovery using file events. We also announced native support for ingesting Excel files and data from SFTP servers, both in Private Preview and available on request for early access.
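Auto Loader takes care of this bookkeeping for you. To make the idea of incremental file discovery concrete, here is a minimal sketch in plain Python. It assumes a local directory stands in for cloud storage and a JSON file stands in for the stream checkpoint; `discover_new_files` and the checkpoint format are hypothetical illustrations, not Auto Loader's API or its actual implementation.

```python
import json
import os
from pathlib import Path


def discover_new_files(landing_dir: str, checkpoint_path: str) -> list[str]:
    """Hypothetical helper: return files not seen by earlier runs, then record them.

    Assumptions for this sketch: a local directory stands in for cloud storage,
    and a JSON list of file names stands in for the stream checkpoint.
    """
    checkpoint = Path(checkpoint_path)
    # Load the names of files already processed by earlier runs (empty on first run).
    seen = set(json.loads(checkpoint.read_text())) if checkpoint.exists() else set()

    # List the landing directory and keep only regular files we have not seen yet.
    listing = sorted(
        name
        for name in os.listdir(landing_dir)
        if os.path.isfile(os.path.join(landing_dir, name))
    )
    new_files = [name for name in listing if name not in seen]

    # Persist the updated checkpoint so the next run skips these files.
    checkpoint.write_text(json.dumps(sorted(seen | set(new_files))))
    return new_files
```

Re-listing the whole directory on every run is the simplest approach, but its cost grows with the number of files, which is the cost that faster directory listing and file-event-based discovery aim to reduce.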

Supported data sources:

- Applications: Salesforce, Workday, ServiceNow, Google Analytics, Microsoft Dynamics 365, Oracle NetSuite

- File sources: S3, ADLS, GCS, SFTP, SharePoint

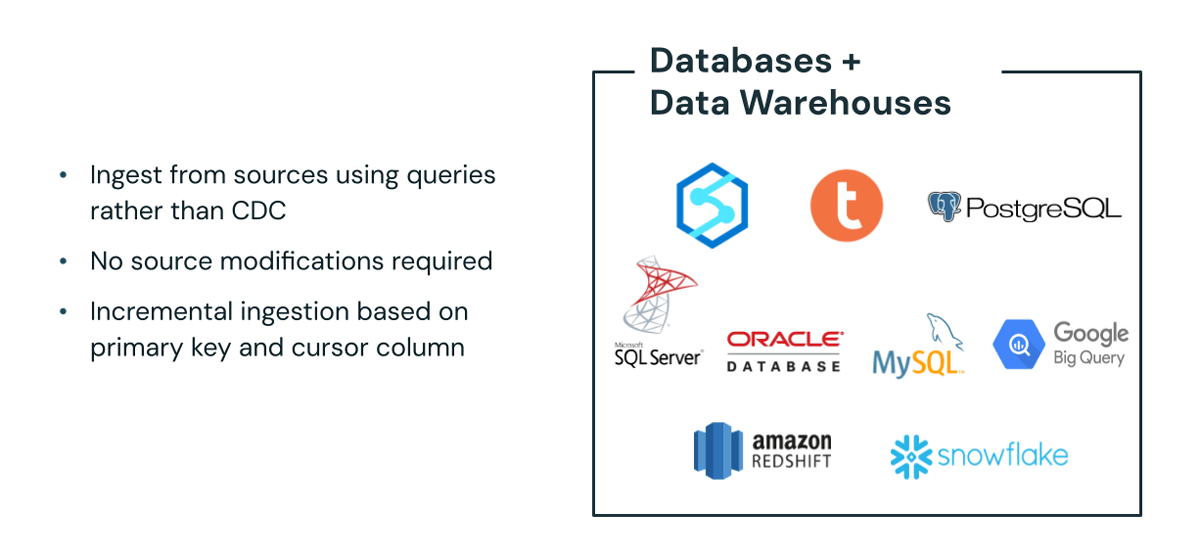

- Databases: SQL Server, Oracle Database, MySQL, PostgreSQL

- Data warehouses: Snowflake, Amazon Redshift, Google BigQuery

Within the expanded connector offering, we're also introducing query-based connectors that simplify data ingestion. These connectors pull data directly from your source systems without requiring database modifications, and they work with read replicas where change data capture (CDC) logs aren't available. Query-based connectors are currently in Private Preview; reach out to your account team for early access.
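Without CDC logs, a common way to pull changes incrementally is to query on a monotonically increasing cursor column, such as an `updated_at` timestamp, and remember the highest value seen so far. The following is a minimal sketch of that general technique, using Python's built-in `sqlite3` as a stand-in for a source database or read replica. `pull_incremental` and the example `orders` table are hypothetical, and this is not a description of how Databricks' query-based connectors are implemented.

```python
import sqlite3
from typing import Any, Optional


def pull_incremental(
    conn: sqlite3.Connection,
    table: str,
    cursor_col: str,
    last_value: Optional[Any],
) -> tuple[list[tuple], Optional[Any]]:
    """Hypothetical helper: fetch rows past the last high-water mark.

    `table` and `cursor_col` are interpolated into SQL, so in this sketch they
    must come from trusted configuration, never from user input.
    """
    if last_value is None:
        # First run: no watermark yet, so take a full snapshot.
        cur = conn.execute(f"SELECT * FROM {table} ORDER BY {cursor_col}")
    else:
        # Later runs: only rows whose cursor value moved past the watermark.
        cur = conn.execute(
            f"SELECT * FROM {table} WHERE {cursor_col} > ? ORDER BY {cursor_col}",
            (last_value,),
        )
    rows = cur.fetchall()

    if not rows:
        # Nothing new: keep the previous watermark.
        return rows, last_value

    # Rows are sorted by the cursor column, so the last row holds the new maximum.
    columns = [d[0] for d in cur.description]
    new_mark = rows[-1][columns.index(cursor_col)]
    return rows, new_mark
```

The trade-off versus CDC is that a cursor query cannot see hard deletes, and it can miss rows whose cursor value is not strictly increasing, for example rows that commit late with a value at or below the stored high-water mark.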

🎥 Watch and learn more about Lakeflow Connect: Breakout session at the Data + AI Summit, “Getting Started with Lakeflow Connect”

🎥 Watch and learn more about ingesting from enterprise SaaS applications: Breakout session at the Data + AI Summit featuring Databricks customer Porsche Holding, "Lakeflow Connect: Seamless Data Ingestion From Enterprise Apps"

🎥 Watch and learn more about database connectors: Breakout session at the Data + AI Summit, "Lakeflow Connect: Easy, Efficient Ingestion From Databases"

Lakeflow Connect in Jobs, now generally available

We continue to build capabilities that make it easier to use our ingestion connectors while building data pipelines, as part of Lakeflow’s unified data engineering experience. Databricks recently announced Lakeflow Connect in Jobs, which lets you create ingestion pipelines within Lakeflow Jobs. If jobs are at the center of your ETL process, this integration gives you a more intuitive, unified way to manage ingestion.

Customers can define and manage their end-to-end workloads—from ingestion to transformation—all in one place. Lakeflow Connect in Jobs is now generally available.

🎥 Watch and learn more about Lakeflow Jobs: Breakout session at the Data + AI Summit, "Orchestration with Lakeflow Jobs"

Lakeflow Connect: more to come in 2025 and beyond

Databricks understands the needs of data engineers and organizations who drive innovation with their data using analytics and AI tools. To that end, Lakeflow Connect continues to build out robust, efficient ingestion capabilities, ranging from fully managed connectors to more customizable features and APIs.

We’re just getting started with Lakeflow Connect. Stay tuned for more announcements later this year, or contact your Databricks account team to join a preview for early access.

To try Lakeflow Connect, review the documentation or check out the Demo Center.
