Product descriptions:

Access to high-quality, labeled data significantly improves model predictions and enhances context, boosting the performance of data analysis, ML, and AI applications. This produces more relevant results on search engine platforms and improved product recommendations on e-commerce sites. However, data labeling requires considerable resources and time. Manual labeling is costly and prone to human error. Human-in-the-loop methods speed up the process and improve accuracy only marginally. Large language models (LLMs) can improve this workflow substantially.

Faster feedback, more accurate annotations

These challenges inspired the startup Refuel.AI to build an LLM-powered platform for clean, labeled data. Machine learning teams, product teams, and operations teams all need clean, labeled, diverse data sets to power their workloads, be it model training, observability, or product analytics. In the past, companies have relied on human annotation or human-in-the-loop processes for data cleaning and labeling. Now, imagine the same workflow, but with LLMs as data annotators. Feedback cycles can be up to 100 times faster.

Refuel LLM is a purpose-built model for data labeling and enrichment tasks. Launched in October 2023, the model was instruction-tuned on more than 5 billion tokens (comprising more than 2,500 unique tasks) on top of a Llama-v2-13b base model. It outperforms trained human annotators (80.4%), GPT-3.5-turbo (81.3%), PaLM-2 (82.3%), and Claude (79.3%) across a benchmark of 15 text labeling data sets. (See Figure 1.)
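
To make scores like these concrete, here is a minimal sketch of how label quality could be computed and averaged across data sets. It assumes label quality means the fraction of labels that match ground truth and that the benchmark score is a simple average over data sets; the source does not state how the reported figures were aggregated. The function names and the toy data are hypothetical.

```python
def label_quality(predictions, gold):
    """Fraction of predicted labels that match the ground-truth labels."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot score an empty data set")
    matches = sum(p == g for p, g in zip(predictions, gold))
    return matches / len(gold)


def benchmark_average(results):
    """Unweighted mean of per-data-set label quality (an assumed aggregation)."""
    scores = [label_quality(preds, gold) for preds, gold in results.values()]
    return sum(scores) / len(scores)


# Hypothetical toy data: data set name -> (annotator labels, ground-truth labels).
toy_results = {
    "sentiment": (["pos", "neg", "pos", "neg"], ["pos", "neg", "neg", "neg"]),
    "topic": (["sports", "tech"], ["sports", "tech"]),
}

for name, (preds, gold) in toy_results.items():
    print(f"{name}: {label_quality(preds, gold):.1%}")
print(f"benchmark average: {benchmark_average(toy_results):.1%}")
```

The same scoring function applies whether the annotator is a human or an LLM, which is what makes a side-by-side comparison possible.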

The Refuel.AI team started working with Databricks Mosaic AI in early 2023 when they began seriously thinking about building Refuel LLM. Over the course of almost three months, the team trained close to 50 models as part of various experiments, and the Mosaic AI team was able to provide on-demand service and compute access.

First, the team instruction-tuned the Llama-v2-13b base model on the corpus of more than 5 billion tokens (comprising more than 2,500 unique tasks). The team conducted multiple training runs, each about three days long, on Mosaic AI Training infrastructure. The end result was a 78% increase in label quality.

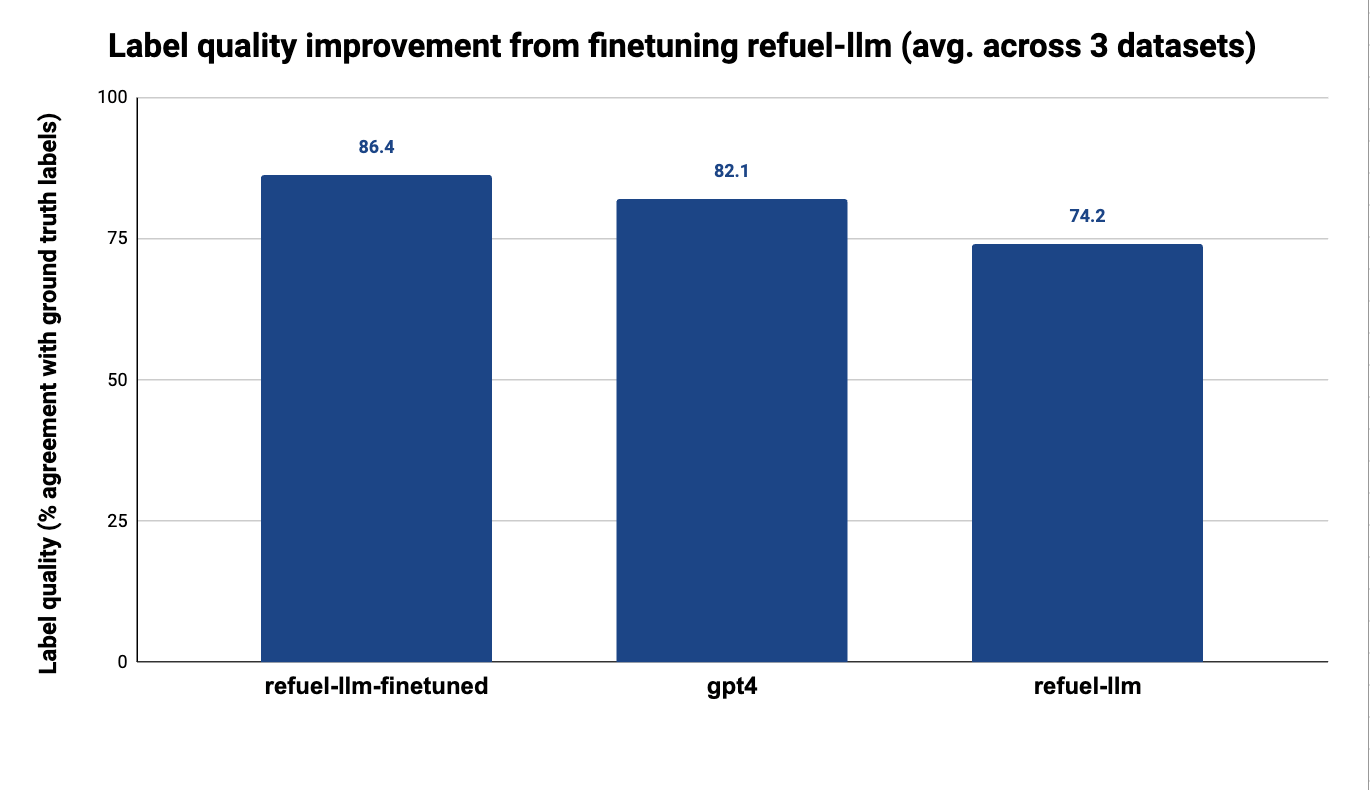

The next step was fine-tuning on a target domain. Thanks to Databricks infrastructure, this was fast and easy, and it further improved performance and total cost of ownership (TCO) by reducing prompt lengths. Refuel.AI was able to fine-tune the model on a cluster of 8x H100 GPUs within the Mosaic AI Training environment, for an additional 16% performance gain. (See Figure 2.)

Training a custom LLM in an iterative process

One of the main benefits of using Mosaic AI Training was the flexibility to experiment. With an average initial training run length of three days, Refuel.AI could afford to run experiments and train its LLM iteratively. One factor that made experimentation easier was the auto-scheduling of training runs. The team was able to queue up runs in advance and avoid hassles with GPU availability or node failures. The platform offers “set it and forget it” capabilities like graceful resumption, data streaming, and dynamic memory usage.
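
As a rough illustration of what graceful resumption means in practice, the sketch below saves training progress at intervals and restarts from the last saved step after a failure. It is a generic checkpointing pattern, not Mosaic AI's implementation; the function names, the JSON checkpoint format, and the simulated failure are all assumptions made for the example.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the checkpoint atomically so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0}
    with open(path) as f:
        return json.load(f)


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Run (or resume) a training loop; fail_at simulates a node failure."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated node failure")
        # A real run would perform one optimizer step here.
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state["step"]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt = os.path.join(workdir, "checkpoint.json")
        try:
            train(ckpt, total_steps=10, checkpoint_every=5, fail_at=7)
        except RuntimeError as err:
            print(f"run interrupted: {err}")
        print("resuming from step", load_checkpoint(ckpt)["step"])
        print("finished at step", train(ckpt, total_steps=10, checkpoint_every=5))
```

In this sketch, a run that fails between checkpoints loses only the steps since the last save, which is why a queued multi-day run can recover from a node failure without manual intervention.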

The Refuel.AI team was able to leverage the optimized infrastructure and comprehensive documentation from Databricks Mosaic AI to easily set up training runs. The initial release has already been wildly popular, with over ten thousand users accessing the Refuel LLM cloud or experimenting with the Refuel LLM playground. Thanks to the power of Databricks, the team has recently completed the next generation of Refuel LLM.