Your data. Your AI. Your future.

Own them all on the new data intelligence platform

The Databricks Data Intelligence Platform

Databricks brings AI to your data to help you bring AI to the world.

Unify all your data + AI

Industry leaders are data + AI companies

More than meets the AI

In the spotlight

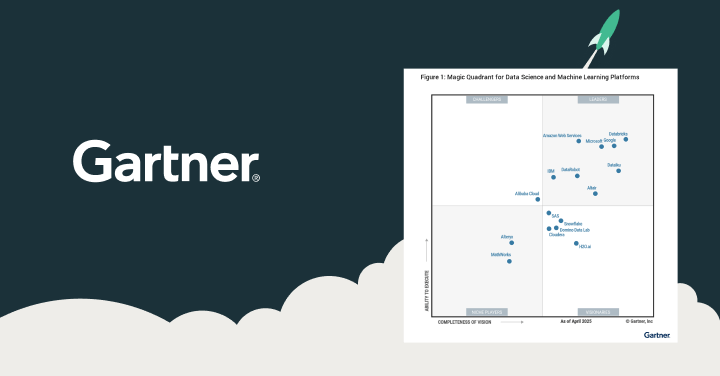

Report

Databricks is a Leader in the 2025 Gartner Magic Quadrant for Data Science and Machine Learning Platforms

Virtual Event

Business Intelligence in the Era of AI. Join a live broadcast on May 6, 7 or 9.

Data Intelligence for All

Data + AI Summit is over, but you can still watch the keynotes and hundreds of sessions on demand.

eBook

Business Intelligence Meets AI

Ready to become a data + AI company?

Take the first steps in your transformation