Tutorials

Discover the power of the Lakehouse. Install demos in your workspace to quickly access best practices for data ingestion, governance, security, data science, and data warehousing.

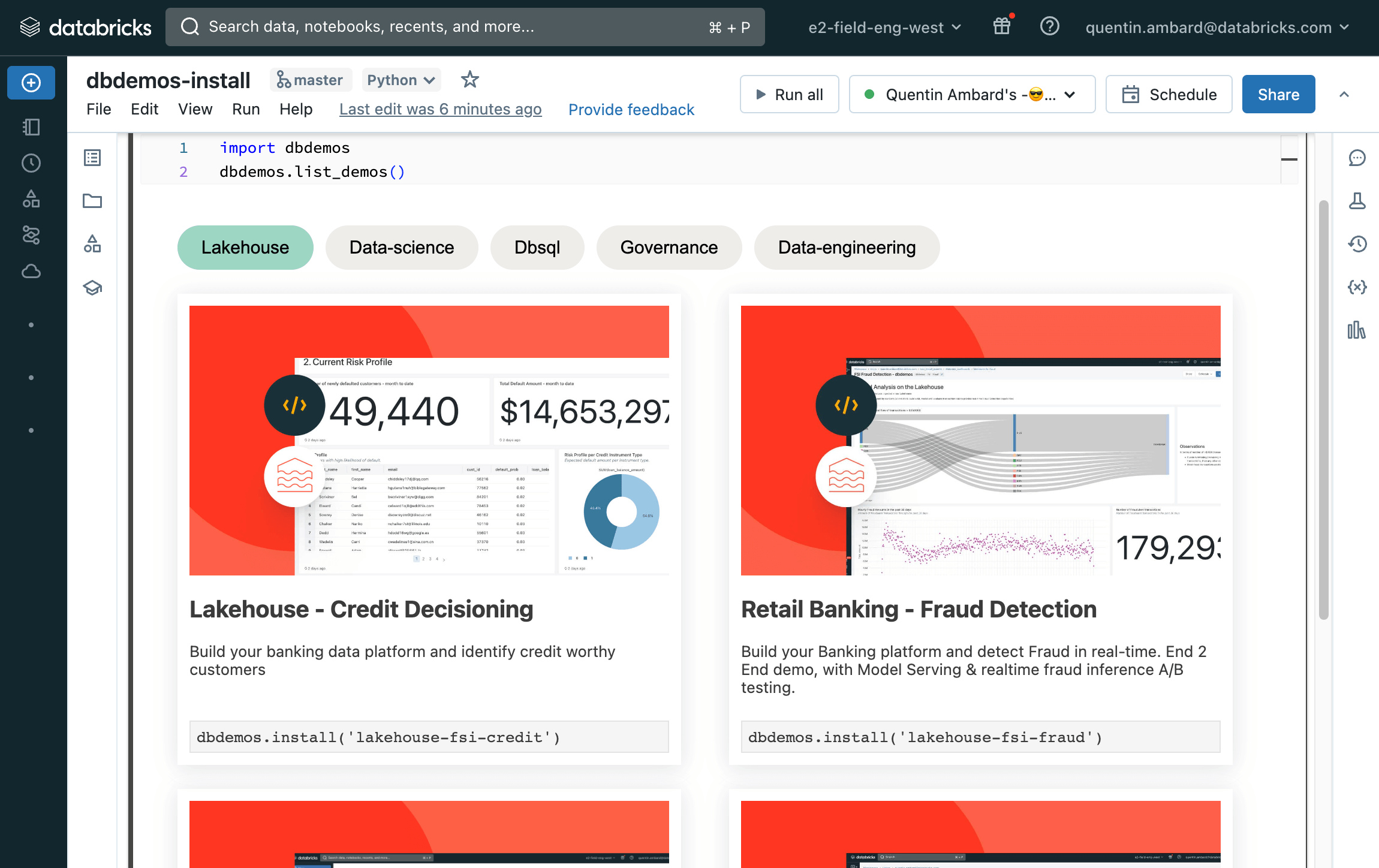

Tutorials quickstart

Install demos directly from your Databricks notebooks

Load the dbdemos package in a cell

List and install any demo
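The two quickstart steps above can be sketched in Python as follows. This is a minimal sketch, assuming it runs in a Databricks notebook where `%pip install dbdemos` has already been executed in an earlier cell. `dbdemos.list_demos()` and `dbdemos.install()` are the package's listing and installation calls. The helpers `dbdemos_available` and `list_and_install` are hypothetical wrappers added only for illustration. The demo name `lakehouse-retail-c360` is also an assumption, so check it against the `list_demos()` output.

```python
import importlib.util


def dbdemos_available() -> bool:
    """Return True if the dbdemos package can be imported in this environment."""
    return importlib.util.find_spec("dbdemos") is not None


def list_and_install(demo_name: str) -> bool:
    """Hypothetical helper: list the available demos, then install `demo_name`.

    Returns False and does nothing when dbdemos is not installed, for example
    when the code runs outside a Databricks notebook.
    """
    if not dbdemos_available():
        # Step 1 of the quickstart has not been done yet.
        print("dbdemos is not installed. In a notebook cell, run: %pip install dbdemos")
        return False
    import dbdemos

    dbdemos.list_demos()        # print the demos that can be installed
    dbdemos.install(demo_name)  # install the selected demo into the current workspace
    return True
```

In a notebook, you would then call `list_and_install("lakehouse-retail-c360")`, or call `dbdemos.install(...)` directly once you have picked a name from the list. The availability check exists only so the sketch fails gracefully outside Databricks.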

Explore all tutorials

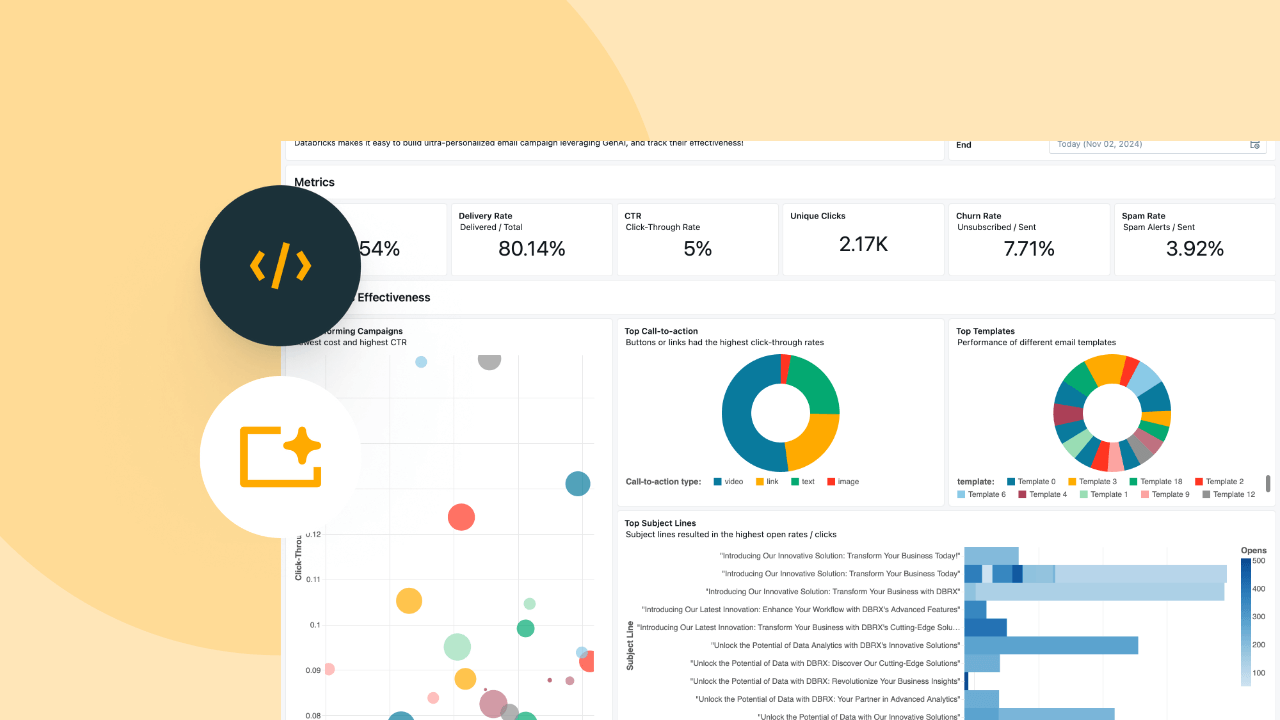

Databricks Intelligence Platform for C360: Reducing Customer Churn

Centralize customer data and reduce churn: Ingestion (Spark Declarative Pipelines), BI, Churn Prediction (ML), Governance (Unity Catalog), Orchestration.

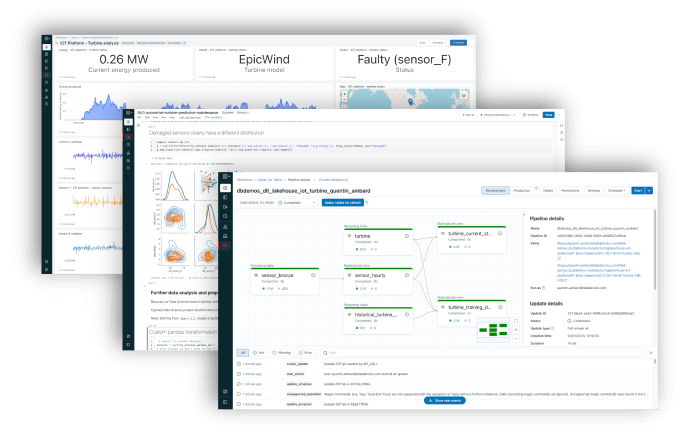

Databricks Intelligence Platform for IoT: Predictive Maintenance

Detect faulty wind turbines: Ingestion (Spark Declarative Pipelines), BI, Predictive Maintenance (ML), Governance (Unity Catalog), Orchestration.

Databricks Intelligence Platform for Retail Banking: Fraud Detection

Build your banking platform and detect fraud in real time. End-to-end demo with Model Serving and A/B testing of real-time fraud inference.

Databricks Intelligence Platform for FSI: Credit Decisioning

Build your banking data platform and identify creditworthy customers.

Databricks Intelligence Platform for HLS: Patient Readmission

Build your data platform and personalize health care to reduce readmission risk.

Data Intelligence Platform for FSI: Smart Claims

Accelerate and automate your insurance claims processing with the Data Intelligence Platform.

dbdemos is distributed as a GitHub project.

For more details, see the README.md file in the GitHub repository and follow its documentation.

dbdemos is provided as is. See the License for more information. Databricks does not offer official support for dbdemos and the associated assets.

If you run into a problem, please open a GitHub issue; the demo team will look into it on a best-effort basis. See the Notice for more information.