Deloitte’s Guide to Declarative Data Pipelines With Delta Live Tables

This post was written in collaboration with Deloitte. We thank Mani Kandasamy, Strategy and Analytics, AI and Data Engineering at Deloitte Consulting, for his contributions.

Today, we are excited to share a new whitepaper for Delta Live Tables (DLT) based on the collaborative work between Deloitte and Databricks. This whitepaper shares our point of view on DLT and the importance of a modern data analytics platform built on the lakehouse. With DLT, data analysts and data engineers are able to spend less time on operational tooling and more time on getting value from their data.

By combining Deloitte's global experience in data modernization and advanced analytics with the Databricks Lakehouse Platform, we've been able to help businesses of all sizes stand up a target-state data and AI platform to strategically meet their immediate and long-term business goals. From the design phase through operation, we apply automation at every turn with tailored approaches for major initiatives like Lakehouse enablement, AI/ML and migrations.

Through these initiatives, we've observed that as organizations adopt lakehouse architectures, data engineers need an efficient way to capture and track continually arriving data. They often face the difficult task of cleansing complex data and transforming it into a format fit for analysis, reporting and machine learning. Even with the right tools, implementing streaming use cases is not easy, and the complexity of the data management phase limits how much downstream analysis they can support. This is where DLT comes in.

Data pipelines built and maintained more easily

DLT makes it easier to build and manage reliable data pipelines and deliver higher quality data on Delta Lake. It accomplishes this by allowing data engineering teams to build declarative data pipelines, improve data reliability through defined data quality rules and monitoring, and scale operations through deep visibility into data pipeline stats and lineage via an event log.
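To make the idea of defined data quality rules with monitoring concrete, here is a minimal plain-Python sketch. It is not the DLT API. The `QualityRule` class, the `apply_rules` helper, the rule names and the sample rows are all hypothetical, and dropping failing rows is just one possible policy. It only illustrates the pattern of declaring rules once and getting back both clean rows and per-rule failure counts.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict


@dataclass
class QualityRule:
    """A named, declarative check (hypothetical; not a DLT class)."""
    name: str
    check: Callable[[Row], bool]  # returns True when the row passes


def apply_rules(rows, rules):
    """Keep rows that pass every rule and count failures per rule."""
    failures = {rule.name: 0 for rule in rules}
    kept = []
    for row in rows:
        passed = True
        for rule in rules:
            if not rule.check(row):
                failures[rule.name] += 1
                passed = False
        if passed:
            kept.append(row)
    return kept, failures


# Hypothetical rules and sample data, for illustration only.
rules = [
    QualityRule("valid_id", lambda r: r.get("id") is not None),
    QualityRule("positive_amount", lambda r: (r.get("amount") or 0) > 0),
]
rows = [
    {"id": 1, "amount": 10.0},
    {"id": None, "amount": 5.0},
    {"id": 3, "amount": -2.0},
]

clean, failures = apply_rules(rows, rules)
print(clean)
print(failures)
```

The failure counts play the role that quality metrics play in a monitored pipeline: they show which rule rejected data and how often, without anyone inspecting individual rows.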

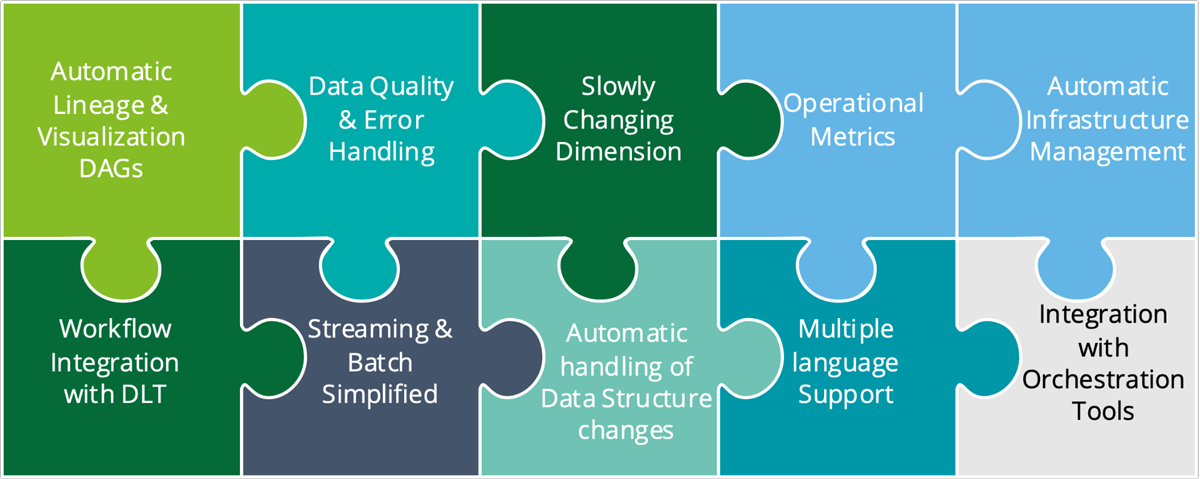

Now data teams can utilize the immense processing power of the Databricks Lakehouse Platform while simultaneously maintaining the ease of use of the modern data stack. In our whitepaper, we go into detail about each DLT benefit.

With DLT, data teams can build and leverage their own data pipelines to deliver a new generation of data, analytics, and AI. Of the benefits shown above, we want to highlight three specific ways in which DLT reduces the load on data engineers and helps teams get to insights faster:

- Automatic infrastructure management: Removes overhead by automating complex and time-consuming activities like task orchestration, error handling, and performance optimization.

- Streaming and batch simplified: Combines streaming and batch without needing to develop separate pipelines, integrates with Auto Loader to handle data that arrives out of order, and makes data available as soon as it arrives.

- Automatic handling of data structure changes: Combines change records and partial updates into a single, complete and up-to-date row.
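The last bullet, combining change records and partial updates into a single up-to-date row, can be illustrated with a small plain-Python sketch. This is not how DLT implements it and it does not use the DLT API. The `merge_changes` function, the column names and the sample records are hypothetical, and treating `None` as "field not provided" is an assumption made for the example.

```python
def merge_changes(changes, key, sequence_by):
    """Fold change records into one current row per key.

    Records are applied in sequence order, so an event that arrives
    late does not overwrite a newer value. A field set to None is
    treated as "not provided" (a partial update) and leaves the
    previously known value in place.
    """
    current = {}
    for change in sorted(changes, key=lambda c: c[sequence_by]):
        row = current.setdefault(change[key], {})
        for column, value in change.items():
            if value is not None:
                row[column] = value
    return current


# Hypothetical change feed; the newer record (seq 2) arrives first.
changes = [
    {"user_id": 7, "seq": 2, "email": "new@example.com", "city": None},
    {"user_id": 7, "seq": 1, "email": "old@example.com", "city": "Austin"},
    {"user_id": 9, "seq": 1, "email": "x@example.com", "city": "Oslo"},
]

latest = merge_changes(changes, key="user_id", sequence_by="seq")
print(latest[7])
```

For user 7, the merged row keeps the newer email from the second record and the city from the first, which is the "single, complete and up-to-date row" the bullet describes.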

These DLT benefits and more make it easy to define end-to-end data pipelines by specifying the data source, the transformation logic and the destination state of the data, instead of manually stitching together siloed data processing jobs. Data teams can then maintain all data dependencies across the pipeline and reuse ETL pipelines with environment-independent data management.
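As a rough illustration of what "declarative" means here, the sketch below declares each table's inputs and transformation and lets a small runner work out the execution order from those declarations. It is plain Python using the standard library's `graphlib` (Python 3.9+), not the DLT API. The table names, the `pipeline` dictionary layout and the `run` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical declarations, deliberately listed out of execution order:
# each table names its inputs and the transformation that produces it.
pipeline = {
    "order_total": {
        "inputs": ["valid_orders"],
        "transform": lambda rows: [{"total": sum(r["amount"] for r in rows)}],
    },
    "valid_orders": {
        "inputs": ["raw_orders"],
        "transform": lambda raw: [r for r in raw if r["amount"] > 0],
    },
    "raw_orders": {
        "inputs": [],
        "transform": lambda: [{"id": 1, "amount": 20}, {"id": 2, "amount": 0}],
    },
}


def run(pipeline):
    """Derive the execution order from declared inputs, then run it."""
    graph = {name: set(spec["inputs"]) for name, spec in pipeline.items()}
    order = list(TopologicalSorter(graph).static_order())
    results = {}
    for name in order:
        spec = pipeline[name]
        args = [results[dep] for dep in spec["inputs"]]
        results[name] = spec["transform"](*args)
    return order, results


order, results = run(pipeline)
print(order)
print(results["order_total"])
```

Nothing in the declarations says when each step runs. The order falls out of the dependencies, which is the difference from hand-wiring separate jobs together.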

As an example, at a large investment bank, Deloitte and Databricks implemented a metadata-driven framework to ingest multiple real-time and batch datasets in parallel. From this, the client realized a 30-50X performance improvement in their data pipelines and was able to achieve significant gains in developer productivity.

Read the Delta Live Tables Whitepaper

Download the "Delta Live Tables: Value Proposition and Benefits" whitepaper to learn more about Deloitte and Databricks' point of view on how to best utilize DLT to make faster and more reliable data-driven decisions. In it, you will also find specific DLT use cases and learn our best practices that will help you focus more on core transformation logic and less on operational complexity.

We are excited to be on the cutting edge of data engineering with DLT. As Databricks continues to innovate and release new DLT features, we look forward to helping the developer community and our customers reap more benefits from the modern lakehouse platform.

Additional Resources

- Learn more about the Databricks and Deloitte strategic alliance.

- Watch the Delta Live Tables demos.

- Learn how to get started with Delta Live Tables.
