Finding a Data Platform that Can Do More, With Less

In today's economy, the key phrase is "do more with less." Doing more with less is not just about reducing infrastructure costs; it is also about reducing the time spent administering and maintaining multiple systems. With Azure Databricks, you can reduce cost and complexity while delivering more impactful insights from your data, right when your business needs them. We have helped many customers do this: take a look at how we helped Optum Health avoid millions in potential revenue loss while processing 1 million insurance claims per minute. Let's not do less with less; let's do more with less.

If you are frustrated with the complexity of real-time analytics, slow query performance, or the manual effort that tasks such as tuning, maintenance, and workload management require on your current data platform, this blog is for you.

More Real-Time Decision Making With Less Complexity

The volume of streaming data has grown exponentially over the last several years. From online orders to app telemetry and IoT data, there are more ways than ever to understand your customers and your business. Wouldn't it be great to combine these new data sources with years of historical data to make real-time business decisions?

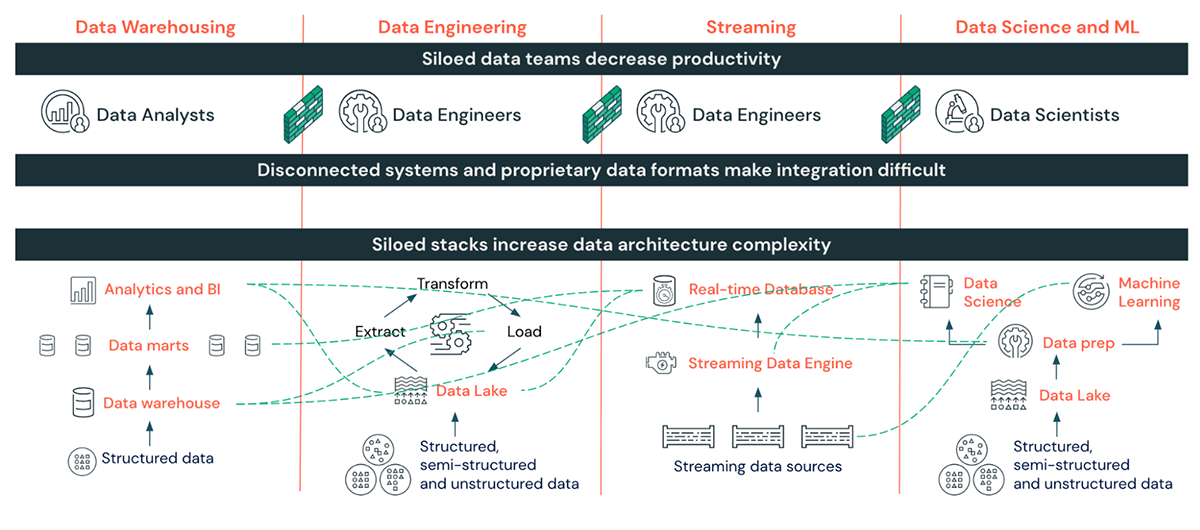

Pulling this off has typically involved setting up and managing multiple tools for ingesting and processing streaming data, joining it with historical data, and serving it to BI applications.

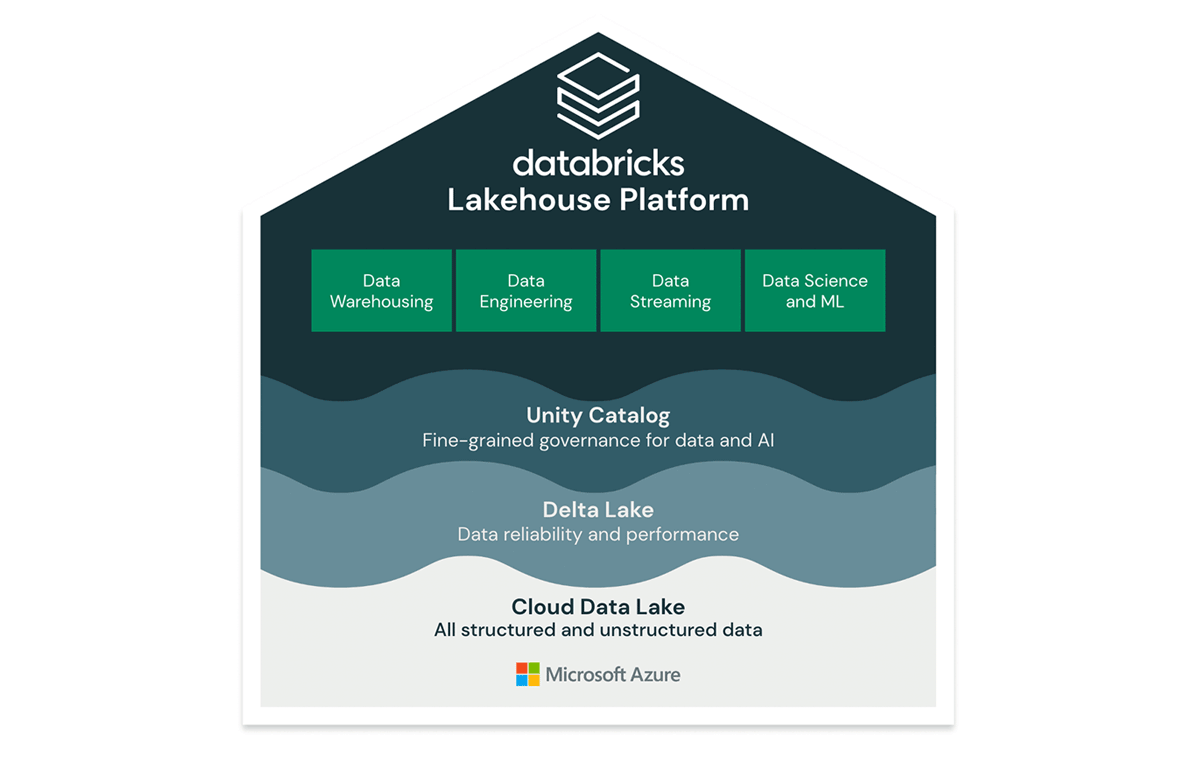

With the Azure Databricks Lakehouse Platform, you get a single tool to ingest, process, join, and serve streaming and historical data, all in familiar ANSI SQL. With Delta Live Tables, you can build robust pipelines and move from batch to stream processing with the same code, keeping your tables up to date within seconds. Then, with Azure Databricks SQL, your business teams can query this live data to make real-time decisions, or connect BI tools such as Power BI directly for real-time dashboards. Learn how Columbia Sportswear uses Azure Databricks to make real-time decisions about its operations, reducing data processing time from 4 hours to 5 minutes with streaming.
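The idea of moving from batch to streaming "with the same code" is easiest to see in a small example. The following plain-Python sketch is not Delta Live Tables code and does not use any Databricks API; the `update_totals` function and the order records are hypothetical. It only illustrates the underlying principle: an incremental aggregation written once gives the same answer whether it runs over all the data in one batch or over micro-batches as they arrive.

```python
from collections import defaultdict

# Hypothetical record shape: (product_id, amount_in_cents).
Order = tuple[str, int]


def update_totals(totals: defaultdict, orders: list[Order]) -> defaultdict:
    """Fold a batch of orders into running per-product revenue totals.

    The same function handles both modes: call it once with every order
    (batch), or call it repeatedly with each new micro-batch (streaming).
    """
    for product_id, amount in orders:
        totals[product_id] += amount
    return totals


# Hypothetical data: historical orders plus two micro-batches arriving live.
history = [("shoes", 5000), ("jacket", 12000), ("shoes", 3000)]
micro_batches = [[("jacket", 8000)], [("shoes", 2000), ("hat", 1500)]]

# Batch mode: process history and all new orders in a single pass.
all_orders = history + [order for batch in micro_batches for order in batch]
batch_totals = update_totals(defaultdict(int), all_orders)

# Streaming mode: start from history, then apply each micro-batch on arrival.
stream_totals = update_totals(defaultdict(int), history)
for batch in micro_batches:
    update_totals(stream_totals, batch)

print(dict(stream_totals))  # {'shoes': 10000, 'jacket': 20000, 'hat': 1500}
```

Because `update_totals` only folds new records into existing state, each micro-batch costs work proportional to its own size rather than to the full history, which is what lets results stay current as data streams in.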

More Performance With Less Cost

While most data warehouses can scale up to meet the most demanding workloads, not all of them can do so without a big cost to your business. A data warehouse that cannot scale efficiently can leave data analysts waiting hours for queries to finish and render BI reports unusable.

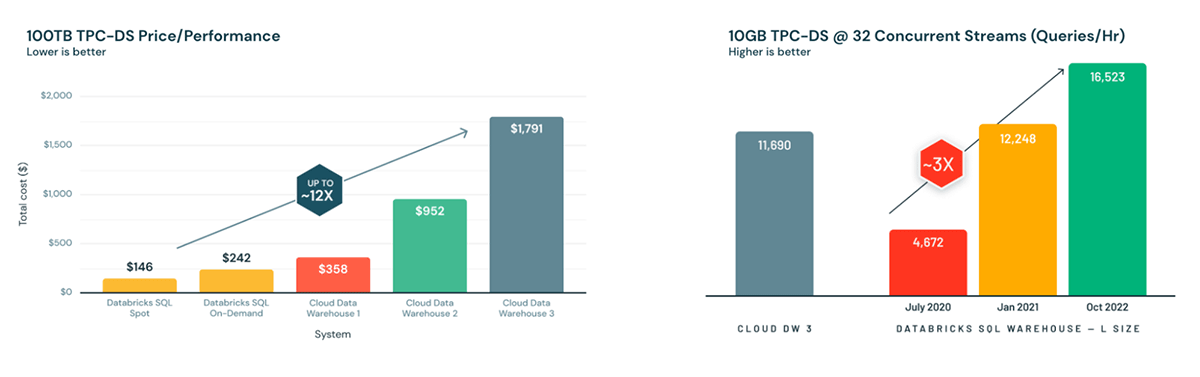


Azure Databricks SQL was built for performance first: your queries complete faster, your BI reports run smoothly, and you can handle even the most demanding workloads without getting a call from your CFO. Thanks to the Photon engine and features such as caching and data skipping, Databricks SQL set a new world record on TPC-DS 100 TB, an industry-standard data warehousing benchmark. Azure Databricks SQL is also built to handle high-concurrency, low-latency BI workloads, outperforming leading cloud data warehouse platforms, as shown above.

Making multiple copies of your data can get expensive. In legacy data warehouse platforms, compute and storage are tightly coupled, your data is locked in a proprietary storage format, and you pay extra to load data from your data lake into your data warehouse. With Azure Databricks, you can keep all of your data in your data lake in the open source Delta Lake format and get warehouse-level performance without locking up your data or paying to copy it.

You also get a multi-cluster architecture with true separation of compute and storage. This means you can run ETL and BI workloads on separate compute to eliminate resource contention, a common bottleneck, and size each one independently so you pay only for the compute you need. No more overprovisioned warehouses running 24/7.

More Speed To Market With Less Overhead

A good data platform should allow your team to focus on building new data products rather than spending too much time on administration and DevOps.

However, most data platforms require you to take the system offline to start up, shut down, or scale, and even lock tables for operations as simple as renaming a table. They often require capacity planning, constant performance monitoring and tuning, and manual workload management.

With Azure Databricks SQL, you get a fully serverless warehouse without compromising on performance. That means instant access to compute instead of waiting 10 to 15 minutes for a cluster to spin up. You also get instant autoscaling, which scales out horizontally to meet virtually unlimited concurrency needs, and auto-stop, which shuts down the warehouse when it is not in use.

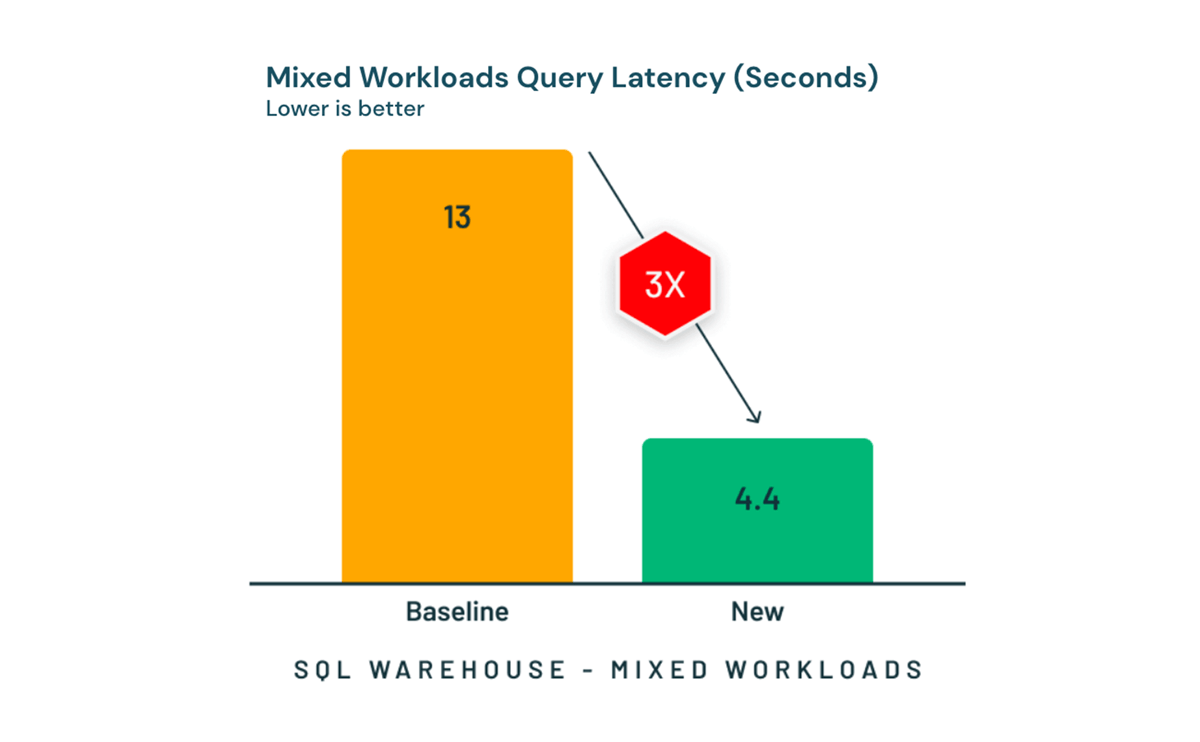

Azure Databricks SQL Serverless is now available in public preview and comes with Intelligent Workload Management, which uses AI to learn from the history of your workloads. When a new query arrives, it uses this history to decide whether to prioritize the query to run immediately or to scale up and run it without disrupting queries already in progress. The result is an average of 3x lower latency for mixed query workloads. Instant compute access, automatic scaling and workload management, and fast performance mean your data analysts can be more agile and deliver insights faster. At the Canadian Broadcasting Corporation, moving to Databricks SQL has cut time to insight by 50%.
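The prioritize-or-scale-up decision described above can be illustrated with a toy policy. This is a hypothetical sketch, not how Intelligent Workload Management is actually implemented: a plain median of past runtimes stands in for the AI model, and `decide`, `SHORT_QUERY_THRESHOLD_S`, and the query signatures are invented for the example.

```python
from statistics import median
from typing import Mapping, Sequence

# Hypothetical cutoff: queries predicted to finish within this many seconds
# are treated as "short" and prioritized on existing capacity.
SHORT_QUERY_THRESHOLD_S = 5.0


def decide(history: Mapping[str, Sequence[float]], signature: str, free_slots: int) -> str:
    """Return "run_now" or "scale_up" for a newly arrived query.

    history maps a (hypothetical) query signature to the runtimes in
    seconds observed for its past executions; free_slots is the spare
    capacity on the warehouse right now.
    """
    if free_slots > 0:
        return "run_now"  # spare capacity: no contention, start immediately
    past_runs = history.get(signature)
    if past_runs:
        predicted_s = median(past_runs)  # simple history-based runtime estimate
        if predicted_s <= SHORT_QUERY_THRESHOLD_S:
            return "run_now"  # short query: prioritize it, little risk of disruption
    # Long or never-seen query with no spare capacity: add compute rather
    # than slowing down the queries already running.
    return "scale_up"


history = {
    "dashboard_kpi": [1.2, 0.8, 1.0],
    "yearly_report": [240.0, 310.0],
}
print(decide(history, "dashboard_kpi", free_slots=0))  # run_now
print(decide(history, "yearly_report", free_slots=0))  # scale_up
print(decide(history, "ad_hoc_query", free_slots=0))   # scale_up
```

The median is used here so that one unusually slow run does not push a normally fast dashboard query into the "long" category; a real system would likely draw on much richer signals than runtime history alone.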

Doing more with less doesn't have to mean turning off systems or shutting down projects. On the contrary: with Azure Databricks, you can deliver real-time insights on your data at a lower cost and let your data teams focus on delivering results to your customers faster.

Check out this walkthrough to get started on building performant streaming pipelines and real-time dashboards with Azure Databricks.