Navigating the SQL Server to Databricks Migration: Tips for a Seamless Transition

Strategies, Tools, and Best Practices for Transitioning to the Lakehouse Architecture

Summary

- Discover how to convert complex T-SQL logic to distributed Spark SQL with 90% automation.

- Implement zero-downtime migration using CDC replication and parallel validation frameworks.

- Optimize legacy SSIS packages for petabyte-scale processing in Databricks Workflows.

The imperative for modernization

Traditional database solutions like SQL Server have struggled to keep up with the demands of modern data workloads because of limited support for AI/ML and streaming, and because of high costs. Enterprises increasingly adopt cloud-native solutions like Databricks to gain flexibility, scalability, and cost efficiency while enabling advanced analytics use cases.

Key benefits of Databricks over SQL Server

- Unified platform: Combines structured and unstructured data processing with AI/ML capabilities. Further, Unity Catalog provides comprehensive data governance for all data assets.

- Scalability: Databricks, through its cloud-native infrastructure, can scale resources elastically according to workload demands. This architecture enables it to handle large, complex workloads with improved query performance and reduced latency.

- Cost efficiency: Pay-as-you-go cloud pricing models reduce infrastructure hardware costs. Lower administrative costs and improved resource utilization also significantly reduce the overall TCO.

- Advanced analytics: Databricks provides built-in features for advanced analytics use cases such as AI/ML, GenAI, and real-time streaming. Further, with Databricks SQL, users can integrate their BI tools of choice, thus empowering them to perform complex analyses more efficiently.

Architectural deep dive

Migrating from SQL Server to Databricks involves rethinking your data architecture to leverage the Lakehouse model’s strengths. Understanding the key differences between the two platforms is critical for designing an effective migration strategy. Key differences between SQL Server and Databricks:

| Feature | SQL Server | Databricks |
|---|---|---|
| Architecture | Monolithic RDBMS | Open Lakehouse |
| Scalability | Vertical scaling | Horizontal scaling via clusters |
| AI/ML support | Minimal | Built-in support for AI/ML |
| Real-time streaming | Limited | Fully supported |

Enterprise data migration

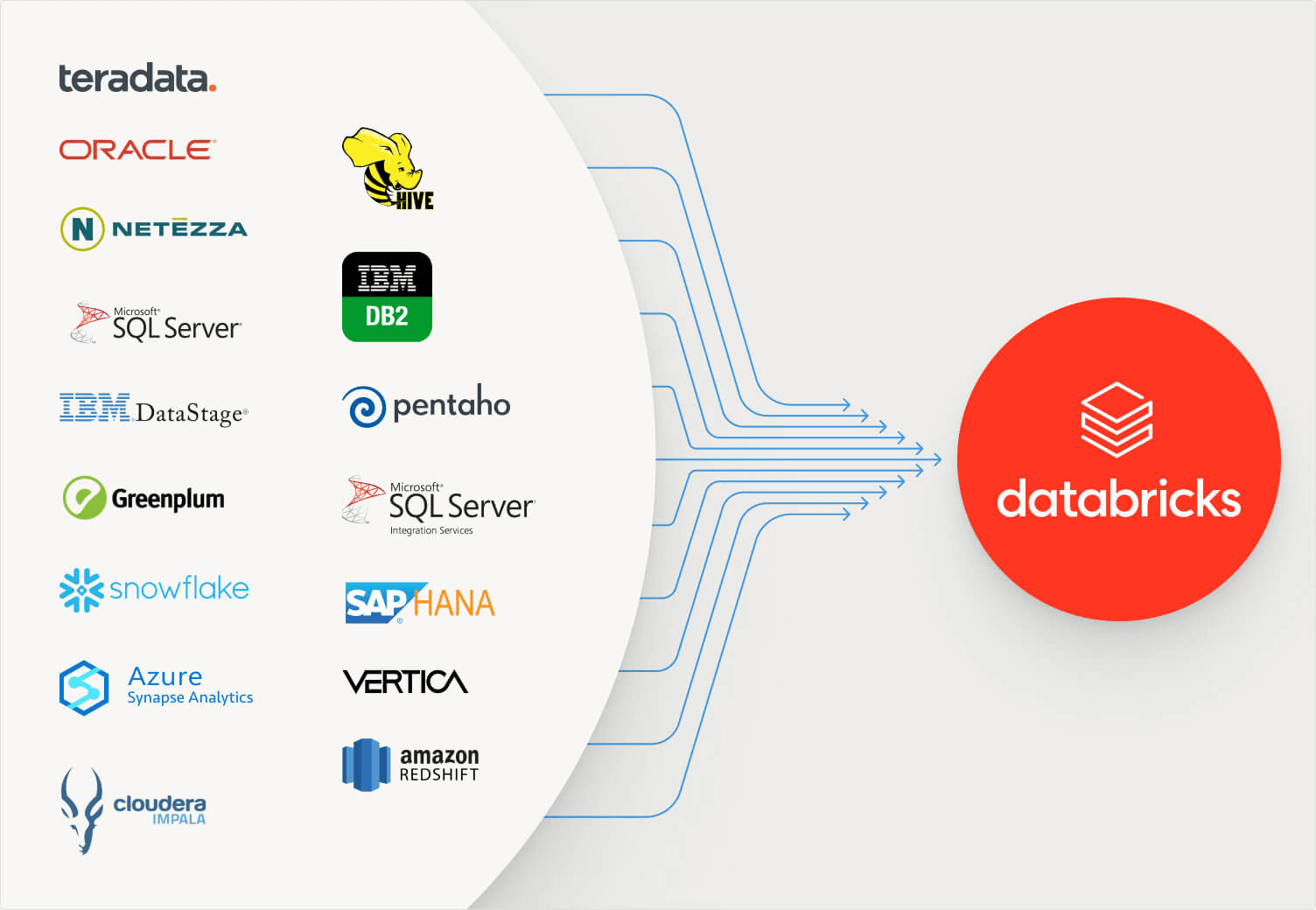

Migrating data from on-premises SQL Server to cloud-based Databricks requires selecting the right tools and strategies based on workload size and complexity.

Recommended approaches for data migration:

- Databricks Lakeflow Connect: Lakeflow Connect offers a fully managed SQL Server connector for ingesting data from SQL Server into the Databricks lakehouse. For more information, refer to Ingest data from SQL Server.

- Leveraging Databricks Lakehouse Federation: Databricks Lakehouse Federation allows for federated queries across different data sources, including SQL Server.

- ISV Partners: Databricks ISV Partners, such as Qlik and Fivetran, can replicate data from SQL Server to Delta tables in Databricks.

Code migration

Migrating from T-SQL to Databricks SQL requires refactoring SQL scripts, stored procedures, and ETL workflows into Databricks-compatible formats while optimizing performance. Databricks has mature code converters and migration tooling to make this process smoother and highly automated.

Databricks Code Converter (acquired from BladeBridge) can automatically convert the logic into either Databricks SQL or PySpark notebooks. The BladeBridge conversion tool supports schema conversion (tables and views) and SQL queries (select statements, expressions, functions, user-defined functions, etc.). Further, stored procedures can be converted to modular Databricks workflows, SQL Scripting, or DLT pipelines.
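To give a feel for the kind of dialect differences a converter has to handle, here is a minimal sketch that rewrites a few common T-SQL idioms into Spark SQL equivalents. It is not how the Databricks Code Converter works; the function name `tsql_to_spark_sql` and the rule list are hypothetical, and the regex approach is an assumption chosen for brevity (a production tool parses the SQL, and this sketch would also rewrite matches inside string literals).

```python
import re

# Hypothetical, illustrative rewrite rules. Real converters parse the SQL
# instead of pattern-matching it; these regexes ignore string literals.
_RULES = [
    # GETDATE() is commonly rewritten to current_timestamp()
    (re.compile(r"\bGETDATE\s*\(\s*\)", re.IGNORECASE), "current_timestamp()"),
    # Two-argument ISNULL(a, b) maps to coalesce(a, b)
    (re.compile(r"\bISNULL\s*\(", re.IGNORECASE), "coalesce("),
    # [bracketed] identifiers become `backtick` identifiers
    (re.compile(r"\[([^\]]+)\]"), r"`\1`"),
]

# SELECT TOP n / SELECT TOP (n) at the start of a statement
_TOP = re.compile(r"^\s*SELECT\s+TOP\s+\(?(\d+)\)?\s+", re.IGNORECASE)


def tsql_to_spark_sql(query: str) -> str:
    """Rewrite a single simple T-SQL SELECT into Spark SQL syntax."""
    query = query.strip().rstrip(";")

    # TOP n has no direct equivalent; move it to a trailing LIMIT n.
    limit = None
    match = _TOP.match(query)
    if match:
        limit = match.group(1)
        query = "SELECT " + query[match.end():]

    for pattern, replacement in _RULES:
        query = pattern.sub(replacement, query)

    if limit is not None:
        query += f" LIMIT {limit}"
    return query


converted = tsql_to_spark_sql(
    "SELECT TOP 10 [name], ISNULL(city, 'n/a') "
    "FROM [dbo].[customers] WHERE created < GETDATE();"
)
print(converted)
```

Even this toy version shows why automated conversion still needs review: rules that look mechanical (such as moving `TOP` to `LIMIT`) only hold for simple statements, and anything involving procedural logic requires restructuring rather than substitution.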

ETL Workflow modernization

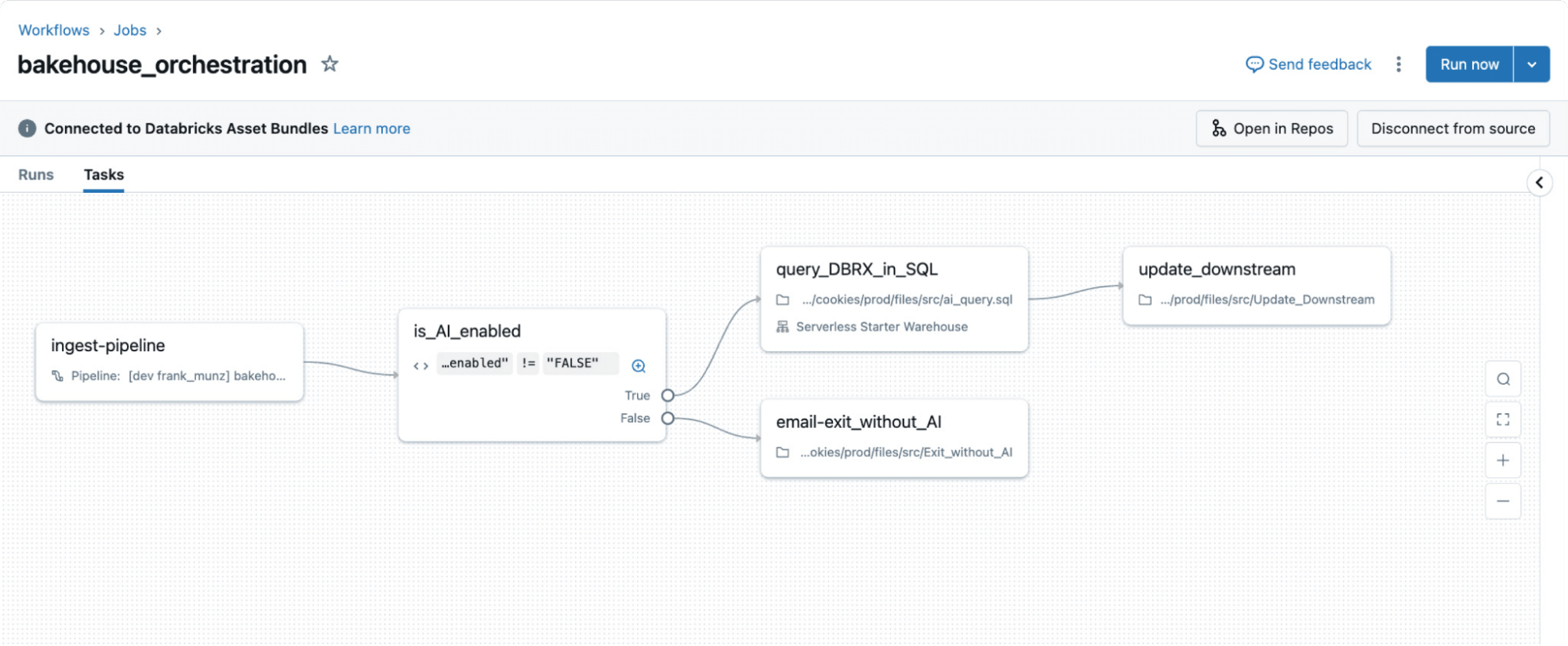

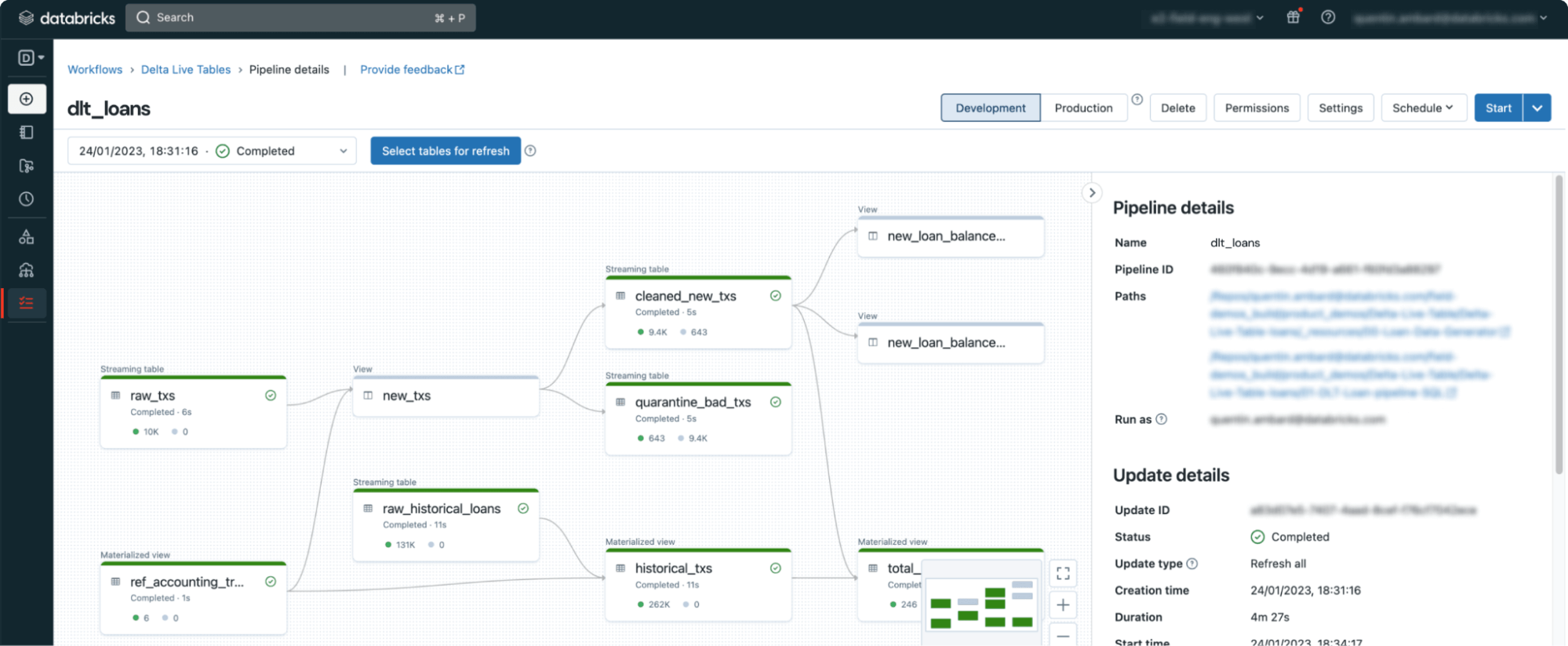

Databricks offers multiple options for modernizing ETL pipelines, simplifying complex workflows traditionally managed by SSIS or SQL Agent.

Options for ETL orchestration on Databricks:

- Databricks Workflows: Native orchestration tool supporting Python scripts, Notebooks, dbt transformations, etc.

- Delta Live Tables (DLT): Declarative pipelines with built-in data quality checks.
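As a rough illustration of how SSIS-style precedence constraints map onto a Databricks Workflows job, the sketch below builds a job definition in the shape used by the Jobs API (`tasks`, `task_key`, `depends_on`, `notebook_task`). The helper name `build_job_payload`, the notebook paths, and the step names are hypothetical, and compute settings are deliberately omitted, so treat it as a starting point rather than a complete job specification.

```python
from typing import Dict, List


def build_job_payload(
    job_name: str,
    steps: Dict[str, str],
    precedence: Dict[str, List[str]],
) -> dict:
    """Build a Jobs-API-style job definition from a step graph.

    steps:      task key -> notebook path (hypothetical paths)
    precedence: task key -> list of upstream task keys, similar to
                SSIS precedence constraints
    """
    # Every referenced step must be defined.
    referenced = set(precedence) | {u for ups in precedence.values() for u in ups}
    unknown = referenced - set(steps)
    if unknown:
        raise ValueError(f"Unknown steps in precedence: {sorted(unknown)}")

    tasks = []
    for key, notebook_path in steps.items():
        task = {
            "task_key": key,
            "notebook_task": {"notebook_path": notebook_path},
        }
        upstream = precedence.get(key, [])
        if upstream:
            task["depends_on"] = [{"task_key": u} for u in upstream]
        tasks.append(task)

    # Compute configuration is omitted in this sketch.
    return {"name": job_name, "tasks": tasks}


payload = build_job_payload(
    "nightly_sales",
    steps={
        "extract": "/Repos/etl/extract",
        "transform": "/Repos/etl/transform",
        "load": "/Repos/etl/load",
    },
    precedence={"transform": ["extract"], "load": ["transform"]},
)
print(payload)
```

Expressing the dependency graph as data makes it easier to generate job definitions from an inventory of existing packages instead of recreating each one by hand.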


BI and analytics tools integration

Databricks SQL enables organizations to meet data warehousing needs and support downstream applications and BI Dashboards. Repointing BI tools like Power BI or Tableau is critical after migrating data pipelines to ensure business continuity.

Microsoft Power BI, a commonly seen downstream application in various customer environments, typically operates on top of SQL Server's serving layer.

Power BI integration best practices

- Use DirectQuery mode for real-time analytics on Delta tables. DirectQuery is 2-5x faster with Databricks vs. SQL Server.

- Leverage materialized views in Databricks SQL Warehouse for faster dashboards via aggregations.

- Use a serverless SQL warehouse for the best performance on high-concurrency, low-latency workloads.

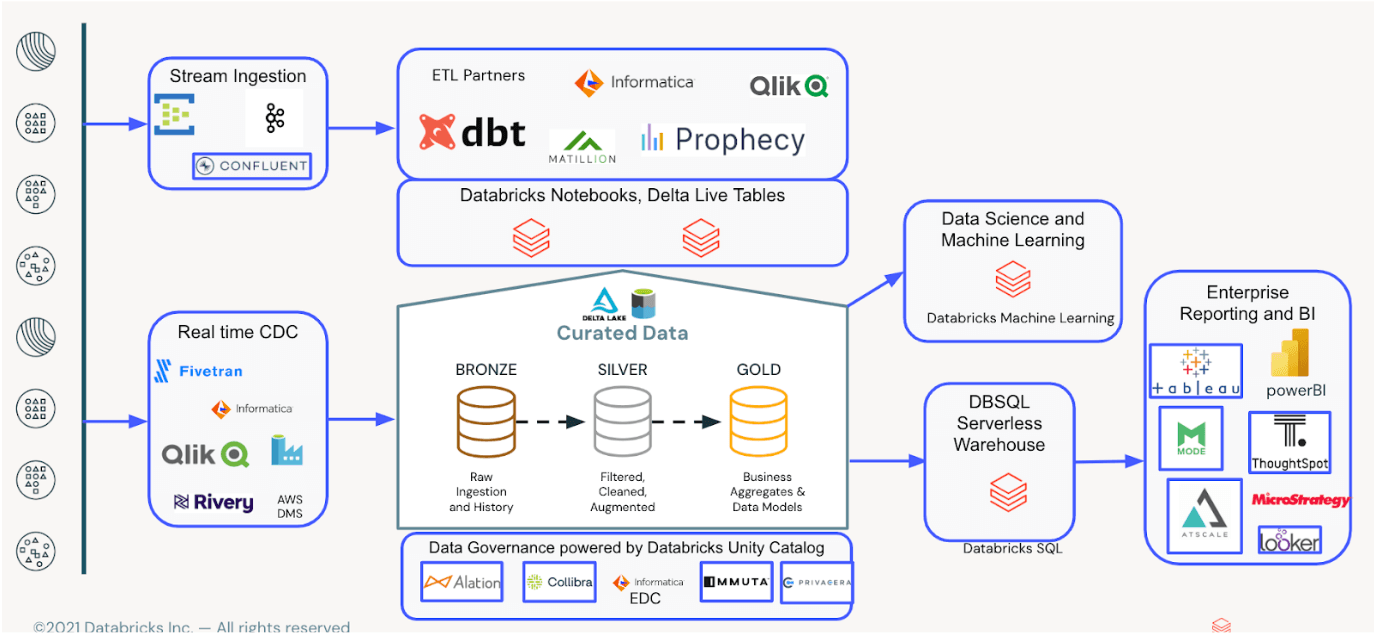

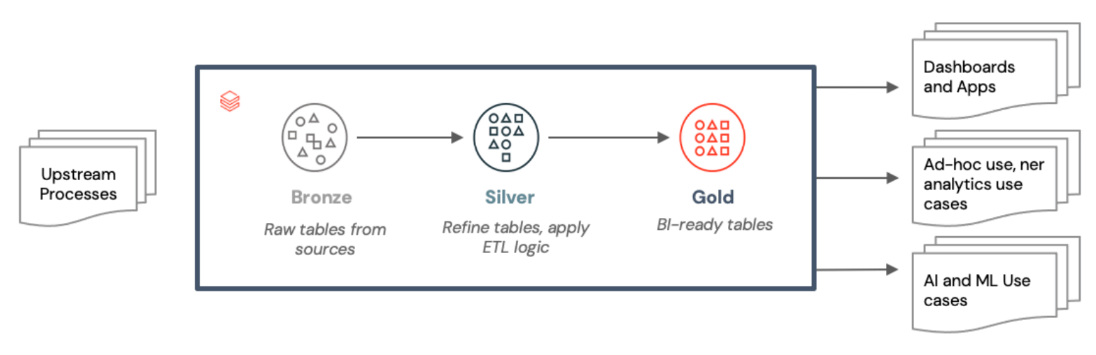

The future-state architecture illustrated in this section works well for optimizing BI models and semantic layers to align with business needs. It includes bronze, silver, and gold layers that feed dashboards, applications, AI, and ML use cases.

Validation framework

Validation ensures that migrated datasets maintain accuracy and consistency across platforms. Recommended validation steps:

- Perform schema checks between the source (SQL Server) and the target (Databricks).

- Compare row counts and aggregate values using automated tools like Remorph Reconcile or DataCompy.

- Run parallel pipelines during a transitional phase to verify query results.

Knowledge transfer and organizational readiness

Upskilling teams on Databricks concepts, Delta Lake architecture, Databricks SQL, and performance optimization is critical for long-term success. Training recommendations:

- Train analysts on Databricks SQL Warehouse features.

- Provide hands-on labs for engineers transitioning from SSIS to DLT pipelines.

- Document migration patterns and troubleshooting playbooks.

Predictable, low-risk migrations

Migrating from SQL Server to Databricks represents a significant shift not just in technology but in approach to data management and analytics. By planning thoroughly, addressing the key differences between platforms, and leveraging Databricks’ unique capabilities, organizations can achieve a successful migration that delivers improved performance, scalability, and cost-effectiveness.

The migration journey is an opportunity to modernize where your data lives and how you work with it. By following these tips and avoiding common pitfalls, your organization can smoothly transition to the Databricks Platform and unlock new possibilities for data-driven decision-making.

Remember that while the technical aspects of migration are important, equal attention should be paid to organizational readiness, knowledge transfer, and adoption strategies to ensure long-term success.

Practical tips from Capgemini

Capgemini shared some practical tips for migrating from SQL Server to Databricks in this webinar. Check it out to hear about how the migration worked at a life sciences company! Highlights include:

- Start small with phased migrations.

- Automate coding and testing phases.

- Onboard users early in the migration process.

- Focus optimization on features like data skipping, Z-order, and VACUUM.

- Go high with Lakehouse by leveraging the entire platform.
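The optimization tip above refers to Delta Lake maintenance commands. As a small sketch, the helper below renders the `OPTIMIZE ... ZORDER BY` and `VACUUM ... RETAIN` statements for a table; the function name `maintenance_statements` and the table and column names are hypothetical, and the 168-hour value mirrors Delta Lake's default seven-day retention.

```python
from typing import List


def maintenance_statements(
    table: str,
    zorder_columns: List[str],
    retain_hours: int = 168,
) -> List[str]:
    """Render Delta Lake maintenance SQL for one table.

    OPTIMIZE compacts small files; ZORDER BY co-locates rows on the given
    columns so data skipping can prune more files. VACUUM removes files no
    longer referenced by the table that are older than the retention window.
    """
    if zorder_columns:
        optimize = f"OPTIMIZE {table} ZORDER BY ({', '.join(zorder_columns)})"
    else:
        optimize = f"OPTIMIZE {table}"
    vacuum = f"VACUUM {table} RETAIN {retain_hours} HOURS"
    return [optimize, vacuum]


statements = maintenance_statements("sales.orders", ["customer_id", "order_date"])
for statement in statements:
    print(statement)
```

In a Databricks notebook, each statement could be passed to `spark.sql(...)`, or the pair could be scheduled as a recurring job; choose Z-order columns from the filters that queries actually use.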

What to do next

Migration can be challenging. There will always be tradeoffs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the migration's people, process, and technology aspects. We recommend trusting the experts at Databricks Professional Services and our certified migration partners, who have extensive experience delivering high-quality migration solutions promptly. Reach out to get your migration assessment started.

You should also check out the Modernizing Your Data Estate by Migrating to Azure Databricks eBook.

We also have a complete SQL Server to Databricks Migration Guide. Get your free copy here.