Navigating Your Netezza to Databricks Migration: Tips for a Seamless Transition

Strategies, Tools, and Best Practices for Transitioning to the Lakehouse Architecture

Summary

- Understand Netezza’s limitations and how the Databricks Lakehouse architecture addresses them through scalability and unified analytics capabilities.

- Learn about schema translation strategies, automated code conversion tools like BladeBridge, and efficient data migration techniques tailored for Netezza workloads.

- Explore best practices for ETL modernization, performance optimization, validation methods, and organizational readiness during migration efforts.

Why migrate from Netezza to Databricks?

The limitations of traditional enterprise data warehouse (EDW) appliances like Netezza are becoming increasingly apparent. These systems tightly couple storage, compute, and memory, which limits scalability. Expanding capacity often requires costly hardware upgrades, and even cloud-hosted deployments remain architecturally rigid and expensive. For organizations looking to modernize their Netezza EDW platform, migrating to the Databricks Lakehouse offers a scalable, cloud-native way to overcome these challenges. It provides not only a price-performant cloud data warehouse but also an advanced analytics and Data Intelligence Platform, along with streaming and unified governance capabilities, which together help future-proof the data architecture.

Key benefits of migrating to Databricks

Migrating from Netezza to Databricks isn’t just a lift-and-shift exercise—it’s an opportunity to modernize your data architecture and unlock broader capabilities. By moving to a lakehouse architecture, organizations can break free from the limitations of tightly coupled, hardware-dependent systems and adopt a more scalable, flexible, and future-ready platform. Below are some of the key benefits that make Databricks a compelling target for Netezza migrations.

- Unified platform: Combines structured and unstructured data processing with AI/ML capabilities. Databricks maintains a single copy of data in cloud storage and provides various processing engines for data warehousing, machine learning, and Generative AI applications, simplifying management and increasing productivity.

- Scalability: Unlike appliance-based Netezza, Databricks scales elastically on cloud-native infrastructure. Resources grow and shrink with workload demand, which can significantly reduce infrastructure and licensing costs while sustaining performance under intense query loads.

- Cost efficiency: Reduces infrastructure costs with pay-as-you-go cloud pricing models.

- Advanced analytics: Databricks delivers advanced analytics features unavailable on traditional warehouse appliances, such as built-in AI, ML, and GenAI functionalities. The platform integrates seamlessly with BI tools (Tableau, Power BI, ThoughtSpot) and supports stored procedure-like SQL scripting, empowering users to perform complex analyses more efficiently.

- Simplified data governance: With Unity Catalog, Databricks simplifies data governance, offering centralized security, comprehensive auditing, end-to-end data lineage, and fine-grained access control across all data assets.

- AI: Securely connect your data with any AI model to create accurate, domain-specific applications. Databricks has infused AI throughout the Data Intelligence Platform to optimize performance and to build intelligent experiences.

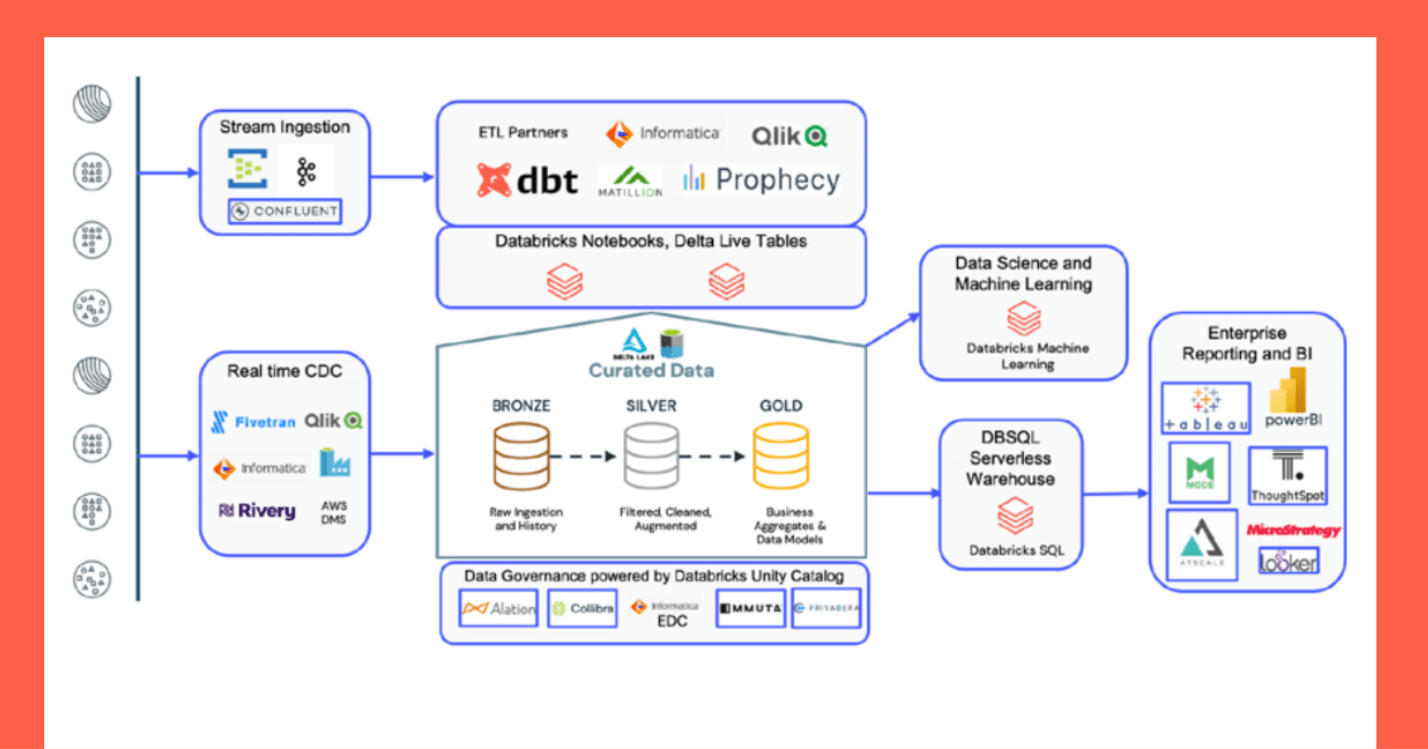

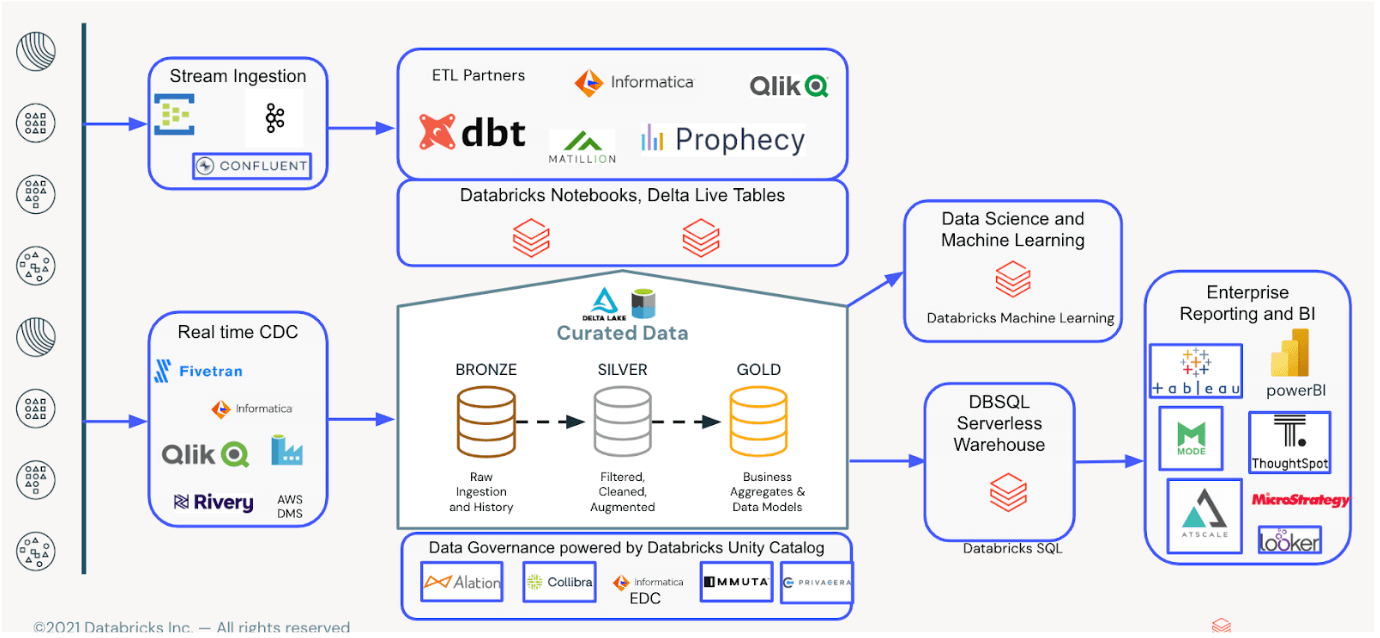

Redesigning for the Lakehouse architecture

Migrating from Netezza to Databricks is an opportunity to simplify and modernize your data architecture. The lakehouse architecture replaces rigid, appliance-based systems with a scalable, cloud-native approach that supports both analytics and AI on a unified platform.

A common approach is to organize the lakehouse into layered zones:

- Bronze Layer: Ingests raw, unfiltered data from various sources into a centralized landing zone. This layer preserves data fidelity for auditing and replay purposes.

- Silver Layer: Hosts cleaned, standardized, and domain-modeled data. This is typically where most transformations and business logic are applied.

- Gold Layer: Provides business-ready datasets—star schemas, marts, sandboxes, and data science zones—tailored for consumption by analysts, data scientists, and applications.

This layered structure promotes clarity, reusability, and consistency. It also breaks down data silos, making it easier to govern and collaborate across teams while maintaining data quality and access controls.
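The three layers can be sketched in PySpark as follows. This is a minimal illustration rather than a prescribed design: the table names (`main.bronze.orders_raw`, `main.silver.orders`, `main.gold.daily_revenue`) and the columns (`order_id`, `order_ts`, `amount`) are hypothetical, and the function expects an existing `SparkSession` to be passed in.

```python
def build_silver_and_gold(
    spark,
    bronze_table="main.bronze.orders_raw",   # hypothetical table names
    silver_table="main.silver.orders",
    gold_table="main.gold.daily_revenue",
):
    """Derive a cleaned silver table and an aggregated gold table from bronze."""
    # Bronze: raw rows exactly as they landed from the source system.
    bronze = spark.table(bronze_table)

    # Silver: drop rows without a key, enforce types, remove duplicate keys.
    silver = (
        bronze.filter("order_id IS NOT NULL")
        .selectExpr(
            "CAST(order_id AS BIGINT) AS order_id",
            "CAST(order_ts AS TIMESTAMP) AS order_ts",
            "CAST(amount AS DECIMAL(18,2)) AS amount",
        )
        .dropDuplicates(["order_id"])
    )
    silver.write.mode("overwrite").saveAsTable(silver_table)

    # Gold: a business-ready aggregate for dashboards and reports.
    gold = (
        spark.table(silver_table)
        .selectExpr("CAST(order_ts AS DATE) AS order_date", "amount")
        .groupBy("order_date")
        .agg({"amount": "sum"})
        .withColumnRenamed("sum(amount)", "revenue")
    )
    gold.write.mode("overwrite").saveAsTable(gold_table)
```

In a notebook, this would be called as `build_silver_and_gold(spark)`. Full overwrites keep the sketch short; incremental merges are usually a better fit for large tables.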

Data migration strategies

Migrating data from Netezza requires careful planning to ensure accuracy, performance, and minimal disruption. The best approach depends on the size and complexity of your workloads and on your existing infrastructure. Proven options for moving data from Netezza into Databricks efficiently include:

- NZUNLOAD + Auto Loader: Export from Netezza to cloud storage, then ingest with Databricks Auto Loader.

- Ingestion Partners: Use partner tools with change data capture (CDC) support.

- Cloud Tools: AWS DMS, Azure Data Factory, or GCP DMS for streamlined migration.

- JDBC/ODBC Drivers: Direct access via Databricks connectors.
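As an illustration of the first option, the sketch below ingests files already exported from Netezza to cloud storage using Auto Loader (the `cloudFiles` source, which is available only on Databricks). The pipe delimiter, the absence of a header row, and all paths and table names are assumptions about how the export was produced, so adjust them to match your unload settings.

```python
def ingest_netezza_export(spark, source_path, target_table, checkpoint_path):
    """Incrementally load delimited export files into a bronze Delta table."""
    stream = (
        spark.readStream.format("cloudFiles")
        .option("cloudFiles.format", "csv")
        # Auto Loader tracks the inferred schema at this location.
        .option("cloudFiles.schemaLocation", checkpoint_path)
        .option("delimiter", "|")    # assumed export delimiter
        .option("header", "false")   # assumed: export files have no header row
        .load(source_path)
    )
    return (
        stream.writeStream
        .option("checkpointLocation", checkpoint_path)
        # Process every file currently available, then stop.
        .trigger(availableNow=True)
        .toTable(target_table)
    )
```

A call might look like `ingest_netezza_export(spark, "<export path>", "main.bronze.orders_raw", "<checkpoint path>")`. Because the checkpoint records which files have been processed, rerunning the function after a new export picks up only the new files.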

Code and logic migration

Netezza SQL scripts, stored procedures, and ETL pipelines running on Netezza must be translated into Databricks-compatible formats while optimizing performance.

Automated code conversion with BladeBridge

BladeBridge, Databricks’ migration tooling, can automatically convert the Netezza SQL dialect into Databricks SQL scripts.

BladeBridge can automate roughly 80-90% of the conversion from NZSQL to Databricks SQL, including converting stored procedures to modular Databricks Workflows, SQL Scripting, or DLT pipelines.

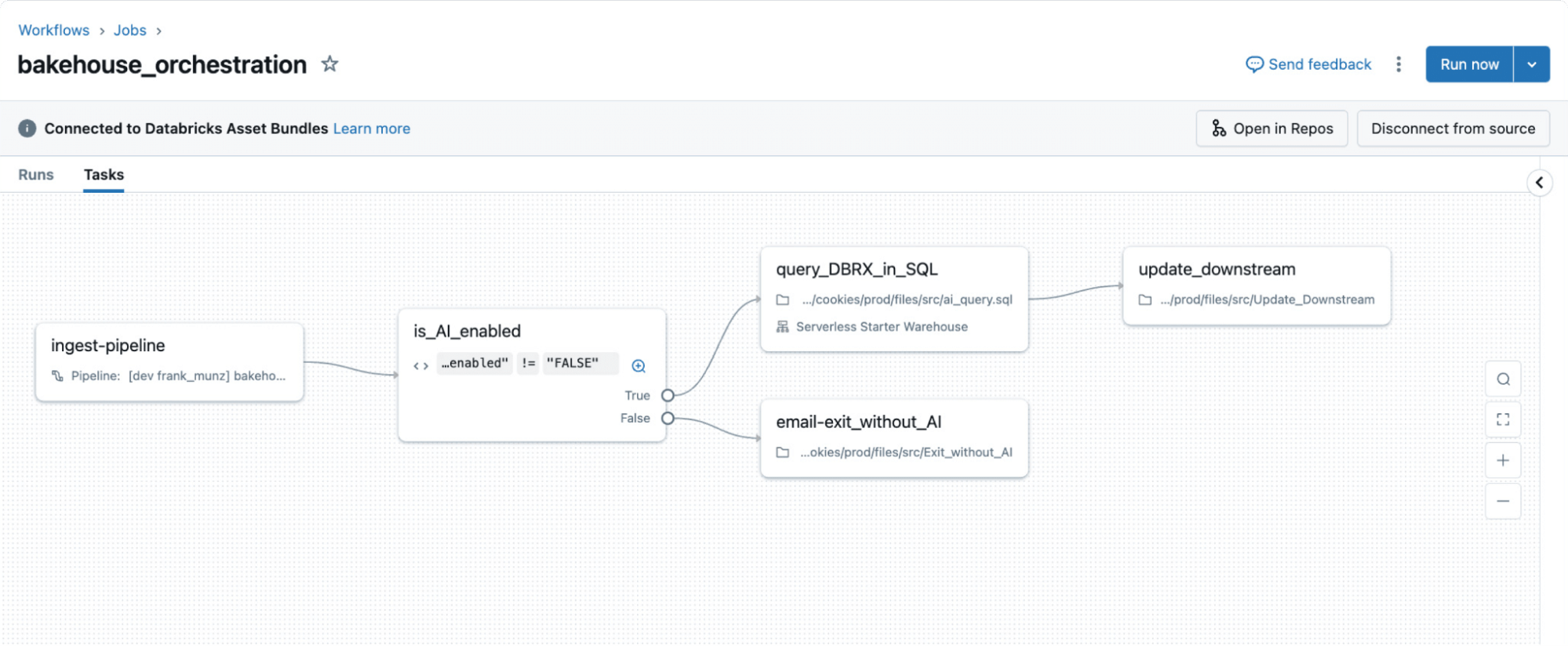

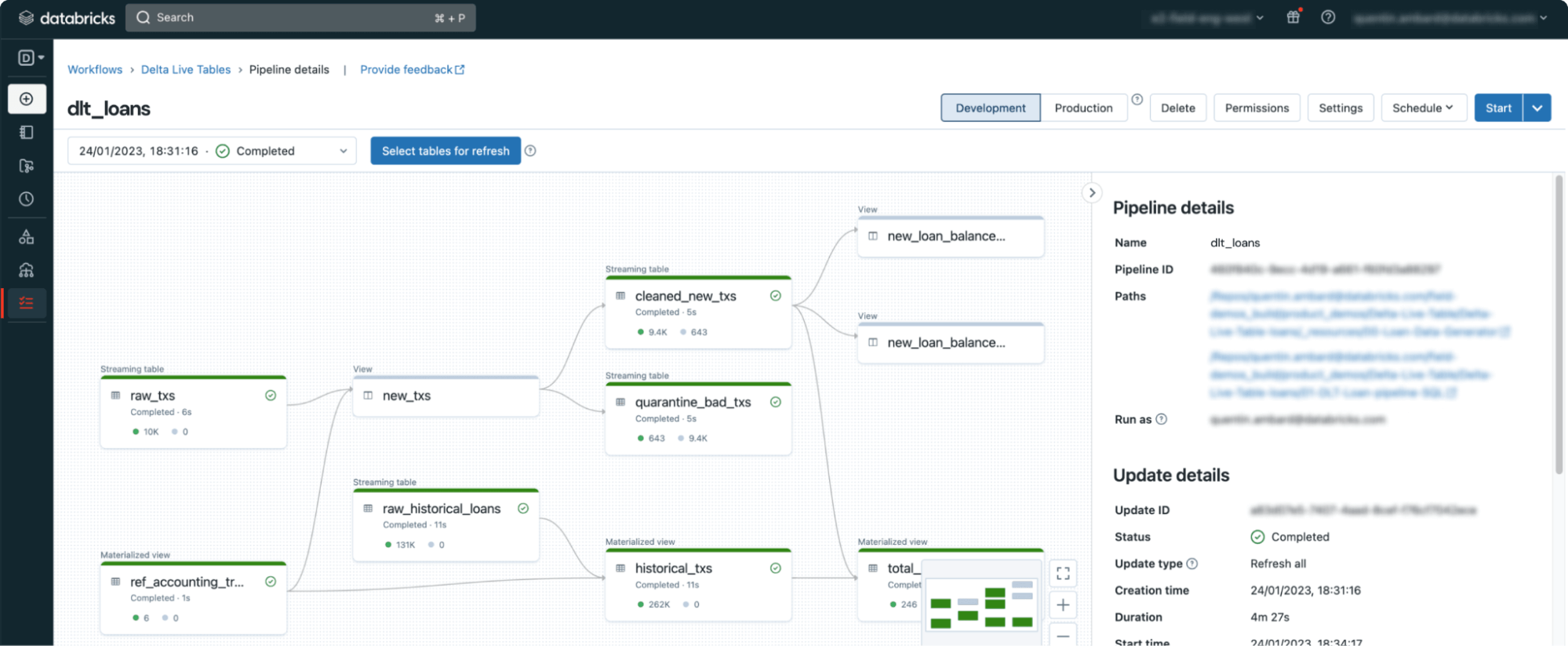
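To give a feel for the kind of dialect differences a converter has to handle, here is a small hand-written sketch. It is not how BladeBridge works and it covers only a few cases: it strips Netezza's `DISTRIBUTE ON` and `ORGANIZE ON` clauses and rewrites a handful of type names. The type mapping is an illustrative assumption, not an exhaustive or official list.

```python
import re

# Netezza physical-layout clauses that are simply dropped in this sketch.
_LAYOUT_CLAUSES = [
    r"\s*DISTRIBUTE\s+ON\s+(?:RANDOM|(?:HASH\s*)?\([^)]*\))",
    r"\s*ORGANIZE\s+ON\s+(?:NONE|\([^)]*\))",
]

# Illustrative type substitutions (an assumption, not a complete mapping).
_TYPE_RULES = [
    (r"\bN(?:VAR)?CHAR\s*\(\s*\d+\s*\)", "STRING"),
    (r"\bBYTEINT\b", "TINYINT"),
    (r"\bINTEGER\b", "INT"),
    (r"\bDOUBLE\s+PRECISION\b", "DOUBLE"),
]


def translate_ddl(nz_ddl: str) -> str:
    """Apply a few regex rewrites to a Netezza CREATE TABLE statement."""
    sql = nz_ddl
    for pattern in _LAYOUT_CLAUSES:
        sql = re.sub(pattern, "", sql, flags=re.IGNORECASE)
    for pattern, replacement in _TYPE_RULES:
        sql = re.sub(pattern, replacement, sql, flags=re.IGNORECASE)
    return sql


nz_ddl = (
    "CREATE TABLE sales (id INTEGER, flag BYTEINT, name NVARCHAR(50), "
    "amt DOUBLE PRECISION) DISTRIBUTE ON (id) ORGANIZE ON (id);"
)
print(translate_ddl(nz_ddl))
# CREATE TABLE sales (id INT, flag TINYINT, name STRING, amt DOUBLE);
```

Regex rewrites like these break down quickly on stored procedures and nested expressions, which is why a parser-based conversion tool is the practical choice for real workloads.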

Modernizing your ETL Pipelines

Databricks offers multiple options for modernizing ETL pipelines, simplifying complex workflows traditionally managed by other tools. Options for ETL orchestration on Databricks:

- Databricks Workflows: Native orchestration tool supporting Python scripts, Notebooks, dbt transformations, etc.

- DLT Pipelines: Declarative pipelines with built-in data quality checks.

- External Tools: Integrate Apache Airflow or Azure Data Factory via REST APIs.
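For the orchestration options above, the sketch below builds a multi-task job definition that chains notebooks one after another. The field names (`name`, `tasks`, `task_key`, `depends_on`, `notebook_task`, `notebook_path`) follow the shape of a Databricks Jobs API create request as best understood here; compute settings are left out for brevity, and the notebook paths and job name are hypothetical. Check the payload against the current API reference before submitting it.

```python
import json


def build_job_payload(job_name, notebook_paths):
    """Chain notebooks into a linear multi-task job definition."""
    tasks = []
    previous_key = None
    for path in notebook_paths:
        # Use the notebook's file name as the task key.
        task_key = path.rstrip("/").split("/")[-1]
        task = {"task_key": task_key, "notebook_task": {"notebook_path": path}}
        if previous_key is not None:
            # Run only after the preceding task has succeeded.
            task["depends_on"] = [{"task_key": previous_key}]
        tasks.append(task)
        previous_key = task_key
    return {"name": job_name, "tasks": tasks}


payload = build_job_payload(
    "netezza_migrated_orders",            # hypothetical job name
    [
        "/Workspace/migration/bronze_ingest",   # hypothetical notebook paths
        "/Workspace/migration/silver_clean",
        "/Workspace/migration/gold_aggregate",
    ],
)
print(json.dumps(payload, indent=2))
```

The same dependency structure can be declared in the Workflows UI. Generating it in code is mainly useful when many legacy job chains need to be migrated in bulk.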

BI and analytics integration

After data is migrated and pipelines are modernized, the next step is enabling access for analytics and reporting. Databricks provides built-in tools and seamless integrations with popular BI platforms, making it easy for analysts and business users to query data, build dashboards, and explore insights—without needing to move data out of the lakehouse.

Databricks offers a serverless SQL warehouse with many features that make BI easier, such as:

- AI/BI Genie: Our AI models continuously learn and adapt to your data and evolving business concepts and provide accurate answers within the context of your organization using natural language Q&A. With AI/BI Genie, you can get answers to questions not addressed in your BI Dashboards.

- AI-driven Dashboards: Simply describe the visual you want in natural language and let Databricks Assistant generate the chart. Then, modify the chart using point and click.

- Easy BI tool integration: Databricks SQL connects BI tools (Power BI, Tableau, and more) to your lakehouse with fast performance, low latency, and high user concurrency.

Post-migration validation

Validation ensures that migrated datasets maintain accuracy and consistency across platforms. Recommended validation steps:

- Perform schema checks between the source (Netezza) and the target (Databricks).

- Compare row counts and aggregate values using automated tools like Remorph Reconcile or DataCompy.

- Run parallel pipelines during a transitional phase to verify query results.
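The row-count and aggregate comparison can be sketched in a few lines of plain Python. This is a hand-rolled illustration, not the Remorph Reconcile or DataCompy API: `build_profile_query` and `compare_profiles` are hypothetical helpers, and running the generated query against Netezza and Databricks is left to whichever client you already use.

```python
import math


def build_profile_query(table, numeric_columns):
    """Return one SQL statement with the row count and a SUM per numeric column."""
    parts = ["COUNT(*) AS row_count"]
    parts += [f"SUM({col}) AS sum_{col}" for col in numeric_columns]
    return f"SELECT {', '.join(parts)} FROM {table}"


def compare_profiles(source, target, rel_tol=1e-9):
    """Return {metric: (source_value, target_value)} for every metric that differs."""
    mismatches = {}
    for metric in sorted(set(source) | set(target)):
        s, t = source.get(metric), target.get(metric)
        # A metric missing on either side counts as a mismatch.
        if s is None or t is None or not math.isclose(float(s), float(t), rel_tol=rel_tol):
            mismatches[metric] = (s, t)
    return mismatches


query = build_profile_query("sales.orders", ["amount"])
# Suppose the query returned these rows on each platform (made-up values).
netezza_profile = {"row_count": 100, "sum_amount": 2500.0}
databricks_profile = {"row_count": 99, "sum_amount": 2475.0}
print(compare_profiles(netezza_profile, databricks_profile))
```

An empty result means the two platforms agree on every metric. The relative tolerance exists because floating-point sums can differ slightly between engines even when the underlying rows are identical.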

Knowledge transfer and organizational readiness

Upskilling teams on Databricks concepts such as Delta Lake architecture, Spark SQL, and guidelines on performance optimization is critical for long-term success. Training recommendations:

- Train analysts on Databricks SQL Warehouse features.

- Provide hands-on labs for engineers transitioning from NZSQL to DLT pipelines.

- Document migration patterns and troubleshooting playbooks.

Predictable, low-risk migrations

Migrating from Netezza to Databricks represents a significant shift not just in technology but in approach to data management and analytics. By planning thoroughly, addressing the key differences between platforms, and leveraging Databricks’ unique capabilities, organizations can achieve a successful migration that delivers improved performance, scalability, and cost-effectiveness.

The migration journey is an opportunity to modernize where your data lives and how you work with it. By following these tips and avoiding common pitfalls, your organization can smoothly transition to the Databricks Platform and unlock new possibilities for data-driven decision-making.

Remember that while the technical aspects of migration are important, equal attention should be paid to organizational readiness, knowledge transfer, and adoption strategies to ensure long-term success.

What to do next

Migration can be challenging. There will always be tradeoffs to balance and unexpected issues and delays to manage. You need proven partners and solutions for the people, process, and technology aspects of the migration. We recommend trusting the experts at Databricks Professional Services and our certified migration partners, who have extensive experience delivering high-quality migration solutions promptly. Reach out to get your migration assessment started.

We also have a complete Netezza to Databricks Migration Guide. Get your free copy here.