A Unified Approach to Data Exfiltration Protection on Databricks

Comprehensive framework and recommendations for mitigating unauthorized data movement on Databricks.

Summary

- Introduces a unified three-requirement framework to contextualize data exfiltration for data platforms.

- Provides 19 prioritized security controls organized by implementation urgency from identity management to workspace restrictions.

- Applies a unified set of controls across Databricks on AWS, Azure Databricks, and Databricks on GCP.

Overview:

Data exfiltration is one of the most serious security risks organizations face today. It can expose sensitive customer or business information, leading to reputational damage and regulatory penalties under laws such as GDPR. The problem is that exfiltration can happen in many ways, through external attackers, insider mistakes, or malicious insiders, and it is often hard to detect until the damage is done.

Security and cloud teams must protect against these risks while enabling employees to use SaaS tools and cloud services to do their work. With hundreds of services in play, analyzing every possible exfiltration path can feel overwhelming.

In this blog, we introduce a unified approach to protecting against data exfiltration on Databricks across AWS, Azure, and GCP. We start with three core security requirements that form a framework for assessing risk. We then map these requirements to nineteen practical controls, organized by priority, that you can apply whether you are building your first Databricks security strategy or strengthening an existing one.

A Framework for Categorizing Data Exfiltration Protection Controls:

We’ll start by defining the three core enterprise requirements that will form a comprehensive framework for mapping relevant data exfiltration protection controls:

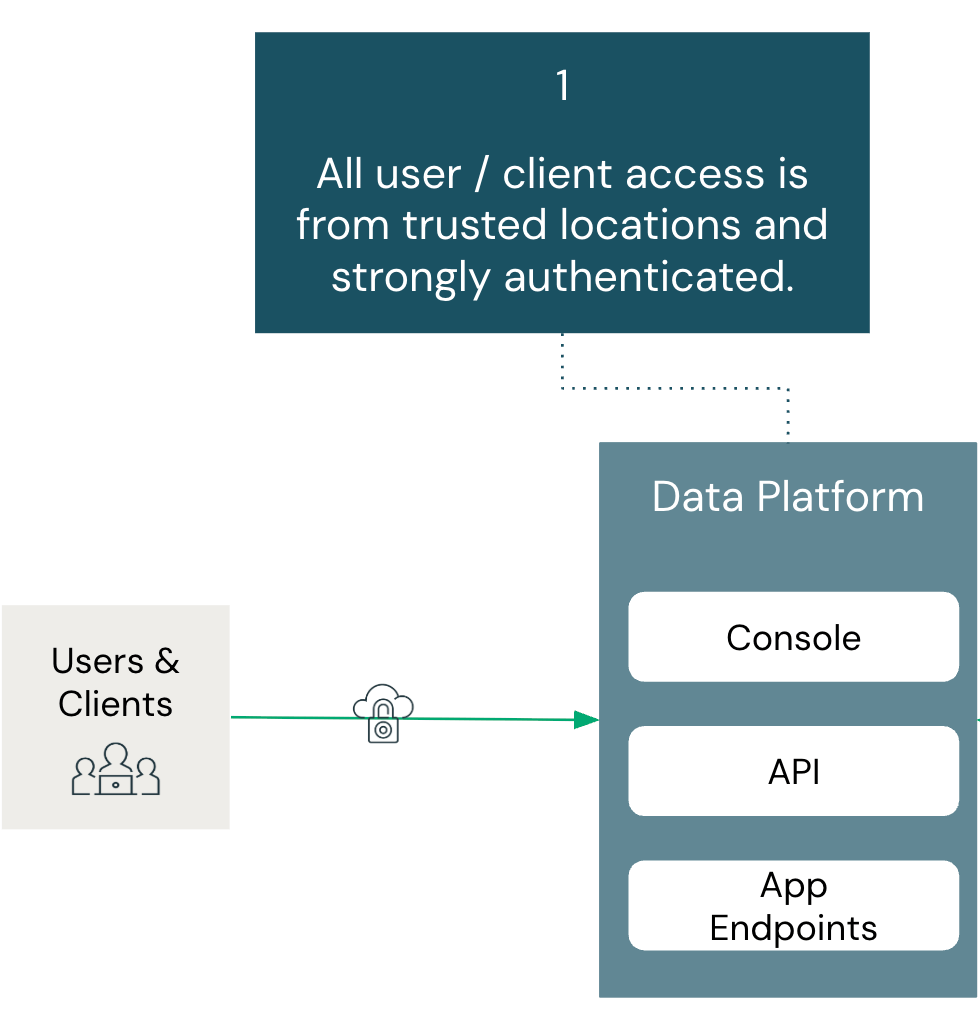

- All user/client access is from trusted locations and strongly authenticated:

  - All access must be authenticated and originate from trusted locations, ensuring users and clients can only reach systems from approved networks through verified identity controls.

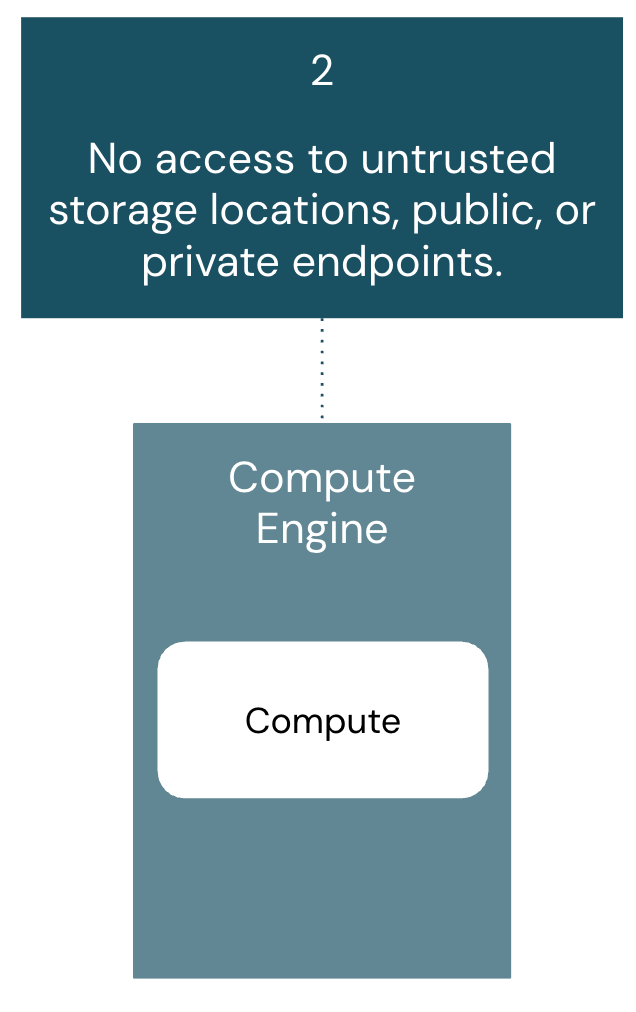

- No access to untrusted storage locations, public, or private endpoints:

  - Compute engines must only access administrator-approved storage and endpoints, preventing data exfiltration to unauthorized destinations while protecting against malicious services.

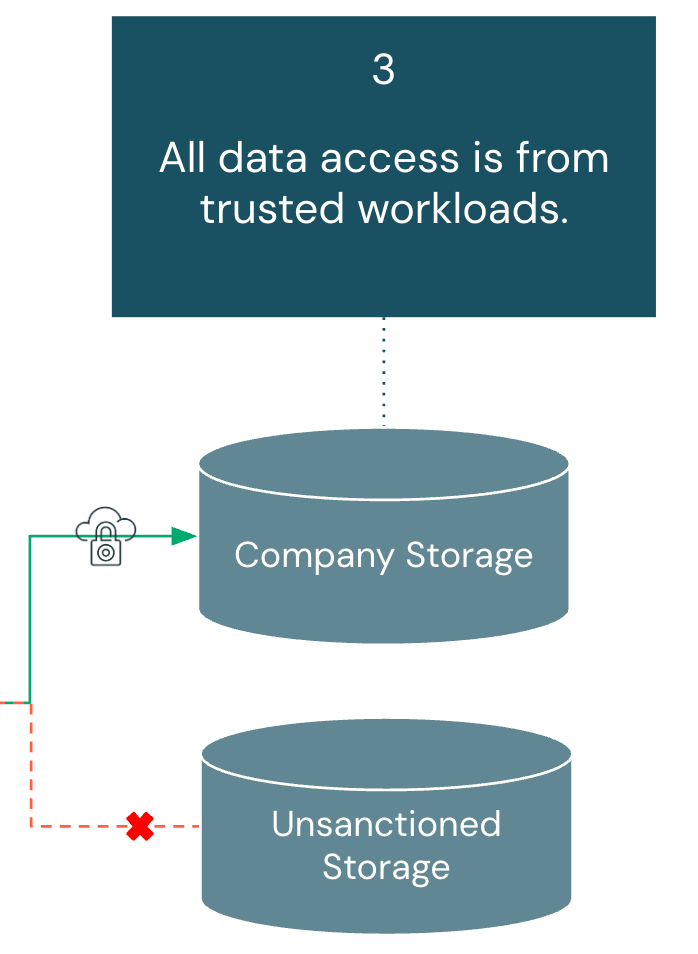

- All data access is from trusted workloads:

  - Storage systems must only accept access from approved compute resources, creating a final verification layer even if credentials are compromised on untrusted systems.

Together, these three requirements address user behaviors that could facilitate unauthorized data movement outside the organization's security perimeter. It is critical, however, to treat them as a single whole: a gap in the controls for any one requirement weakens the security posture of the entire architecture.

In the following sections, we'll examine specific controls mapped to each individual requirement.

Data Exfiltration Protection Strategies for Databricks:

For readability and simplicity, each control under the relevant requirement is described by architecture component, risk scenario, corresponding mitigation, implementation priority, and cloud-specific documentation.

The implementation priority levels are defined as follows:

- HIGH - Implement immediately. These controls are essential for all Databricks deployments regardless of environment or use case.

- MEDIUM - Assess based on your organization's risk tolerance and specific Databricks usage patterns.

- LOW - Evaluate based on workspace environment (development, QA, production) and organizational security requirements.

NOTE: Before implementing controls, ensure you're on the correct platform tier for that feature. Required tiers are noted in the relevant documentation links.
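
One way to put the priority legend to work is to keep the controls in a small, sortable registry and roll them out HIGH first. The Python sketch below is purely illustrative: the `Control` and `Priority` types, the helper functions, and the short requirement labels ("Trusted access" and so on) are hypothetical names, not part of any Databricks API, and only a few abbreviated rows from the tables in this post are transcribed.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    # Lower value = implement sooner, so an ascending sort puts HIGH first.
    HIGH = 1
    MEDIUM = 2
    LOW = 3


@dataclass(frozen=True)
class Control:
    requirement: str  # hypothetical short label for the requirement it maps to
    component: str    # architecture component, e.g. "Workspace"
    control: str      # abbreviated description of the mitigation
    priority: Priority


def rollout_order(controls):
    """Sort controls by priority; sorted() is stable, so ties keep table order."""
    return sorted(controls, key=lambda c: c.priority)


def pending_high(controls, implemented):
    """HIGH-priority controls whose description is not in `implemented`."""
    return [c for c in controls
            if c.priority == Priority.HIGH and c.control not in implemented]


# A few abbreviated rows from the tables in this post.
CONTROLS = [
    Control("Trusted workloads", "Workspace Settings", "Disable notebook results download", Priority.LOW),
    Control("Trusted access", "Identity Provider", "SCIM or Automatic Identity Management", Priority.HIGH),
    Control("Trusted destinations", "Unity Catalog", "Admin-only Lakehouse Federation connections", Priority.MEDIUM),
    Control("Trusted access", "Account Console", "Account console IP ACLs", Priority.HIGH),
]

if __name__ == "__main__":
    for c in rollout_order(CONTROLS):
        print(f"{c.priority.name:<6} {c.component}: {c.control}")
```

Because the sort is stable, controls with the same priority stay in the order they appear in the tables, which keeps the rollout plan easy to cross-check against this post.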

All User and Client Access is From Trusted Locations and Strongly Authenticated:

Summary:

Users must authenticate through approved methods and access Databricks only from authorized networks. This establishes the foundation for mitigating unauthorized access.

Architecture components covered in this section include: Identity Provider, Account Console, and Workspace.

Why Is This Requirement Important?

Ensuring that all users and clients connect from trusted locations and are strongly authenticated is the first line of defense for mitigating data exfiltration. If a data platform cannot confirm that access requests originate from approved networks or that users are validated through multiple layers of authentication (such as MFA), then every subsequent control is weakened, leaving the environment vulnerable.

| Architecture Component: | Risk: | Control: | Priority to Implement: | Documentation: |
|---|---|---|---|---|
| Identity Provider and Account Console | Users may attempt to bypass corporate identity controls by using personal accounts or non-SSO login methods to access Databricks workspaces. | Implement Unified Login to apply single sign-on (SSO) protection across all, or selected, workspaces in the Databricks account. NOTE: We recommend enabling multi-factor authentication (MFA) within your identity provider. If you cannot use SSO, you may configure MFA directly in Databricks. | HIGH | AWS, Azure, GCP |
| Identity Provider | Former users may attempt to log in to the workspace after leaving the company. | Implement SCIM or Automatic Identity Management to de-provision users automatically. | HIGH | AWS, Azure, GCP |
| Account Console | Users may attempt to access the account console from unauthorized networks. | Implement account console IP access control lists (ACLs). | HIGH | AWS, Azure, GCP |
| Workspace | Users may attempt to access the workspace from unauthorized networks. | Implement network access controls using either Private Connectivity or IP ACLs. | HIGH | Private Connectivity: AWS, Azure, GCP; IP ACLs: AWS, Azure, GCP |
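
Databricks evaluates IP access lists on the server side, and you configure them in the account console, the workspace admin settings, or the REST APIs rather than in your own code. The allow/block decision is still easy to misread, so the Python sketch below models it conceptually. It assumes that a matching block entry denies access even when the address is also allowed, and that once any allow entry exists, unlisted addresses are rejected; confirm the exact semantics in the IP access list documentation for your cloud. The function name and the CIDR ranges (RFC 5737 documentation addresses) are hypothetical.

```python
import ipaddress


def is_allowed(source_ip, allow, block):
    """Conceptual IP access list decision for one client address.

    Assumed semantics (illustrative, not Databricks' implementation):
    - matching any block entry denies access, even if an allow entry matches;
    - if any allow entries exist, the address must match one of them;
    - with no allow entries, every address that is not blocked is permitted.
    """
    ip = ipaddress.ip_address(source_ip)
    # strict=False accepts CIDR strings with host bits set, e.g. "10.0.0.5/24".
    blocked = [ipaddress.ip_network(cidr, strict=False) for cidr in block]
    allowed = [ipaddress.ip_network(cidr, strict=False) for cidr in allow]
    # Membership is simply False across IPv4/IPv6 versions, so mixed lists are safe.
    if any(ip in net for net in blocked):
        return False
    if allowed:
        return any(ip in net for net in allowed)
    return True


if __name__ == "__main__":
    # Hypothetical ranges: an office range with a guest slice carved out.
    ALLOW = ["203.0.113.0/24", "198.51.100.0/24"]
    BLOCK = ["203.0.113.128/25"]
    print(is_allowed("203.0.113.10", ALLOW, BLOCK))   # True
    print(is_allowed("203.0.113.200", ALLOW, BLOCK))  # False: in the block list
    print(is_allowed("192.0.2.7", ALLOW, BLOCK))      # False: not on any allow list
```

Carving a block range out of a broader allow range, as above, is a common way to exclude a known-risky segment (such as guest Wi-Fi) without rewriting the allow list.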

No Access to Untrusted Storage Locations, Public, or Private Endpoints:

Summary:

Compute resources must only access pre-approved storage locations and endpoints. This mitigates data exfiltration to unauthorized destinations and protects against malicious external services.

Architecture components covered in this section include: Classic Compute, Serverless Compute, and Unity Catalog.

Why Is This Requirement Important?

The requirement for compute to access only trusted storage locations and endpoints is foundational to preserving an organization’s security perimeter. Traditionally, firewalls served as the primary safeguard against data exfiltration, but as cloud services and SaaS integration points expand, organizations must account for all potential vectors that could be exploited to move data to untrusted destinations.

| Architecture Component: | Risk: | Control: | Priority to Implement: | Documentation: |
|---|---|---|---|---|
| Classic Compute | Users may execute code that interacts with malicious or unapproved public endpoints. | Implement an egress firewall in your cloud provider network to filter outbound traffic to only approved domains and IP addresses. Alternatively, where your cloud provider supports it, remove all outbound internet access. | HIGH | AWS, Azure, GCP |
| Classic Compute | Users may execute code that exfiltrates data to unmonitored cloud resources by using private network connectivity to reach storage accounts or services outside their intended scope. | Implement policy-driven access (e.g., VPC endpoint policies, service endpoint policies) and network segmentation to restrict cluster access to only pre-approved cloud resources and storage accounts. | HIGH | AWS, Azure, GCP |
| Serverless Compute | Users may execute code that exfiltrates data to unauthorized external services or malicious endpoints over the public internet. | Implement serverless egress controls to restrict outbound traffic to only pre-approved storage accounts and verified public endpoints. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted storage accounts to exfiltrate data outside the organization's approved data perimeter. | Only allow admins to create storage credentials and external locations. Grant users permissions on approved Unity Catalog securables only. Apply the principle of least privilege to the cloud access policies (e.g., IAM) behind storage credentials. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted databases to read and write unauthorized data. | Only allow admins to create database connections using Lakehouse Federation. Grant users permissions on approved connections only. | MEDIUM | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to access untrusted non-storage cloud resources (e.g., managed streaming services) using unauthorized credentials. | Only allow admins to create service credentials for external cloud services. Grant users permissions on approved service credentials only. Apply the principle of least privilege to the cloud access policies (e.g., IAM) behind service credentials. | MEDIUM | AWS, Azure, GCP |
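
The egress controls above all reduce to one decision: is this destination on the approved list? The Python sketch below models that check for a URL against an FQDN allowlist, assuming a common firewall convention in which an exact entry matches only that host and a `*.`-prefixed entry matches any subdomain but not the bare domain. It is not how you configure a cloud firewall or Databricks serverless egress controls; the function names and the example allowlist entries are hypothetical. Matching on whole DNS labels matters, because a naive substring test would let a lookalike host such as `pypi.org.attacker.example` through.

```python
from urllib.parse import urlsplit


def host_of(url):
    """Lowercased hostname of a URL without a trailing dot ('' if none)."""
    # urlsplit().hostname is already lowercased; it is None when there is no host.
    return (urlsplit(url).hostname or "").rstrip(".")


def egress_permitted(url, allowlist):
    """True if the URL's host matches an allowlist entry.

    "pypi.org" matches that host exactly; "*.pythonhosted.org" matches any
    subdomain such as "files.pythonhosted.org" but not the bare domain.
    """
    host = host_of(url)
    if not host:
        return False
    for entry in allowlist:
        entry = entry.lower().rstrip(".")
        if entry.startswith("*."):
            # Compare against ".pythonhosted.org" so whole labels must match.
            if host.endswith(entry[1:]):
                return True
        elif host == entry:
            return True
    return False


if __name__ == "__main__":
    ALLOWLIST = {"pypi.org", "*.pythonhosted.org"}  # hypothetical approved domains
    for url in [
        "https://pypi.org/simple/requests/",
        "https://files.pythonhosted.org/packages/pkg.whl",
        "https://pypi.org.attacker.example/upload",
    ]:
        print(url, egress_permitted(url, ALLOWLIST))
```

Anything that fails to parse to a hostname is denied by default, mirroring the deny-unless-approved posture the egress controls aim for.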

All Data Access is From Trusted Workloads:

Summary:

Data storage must only accept access from approved Databricks workloads and trusted compute sources. This mitigates unauthorized access to both customer data and workspace artifacts like notebooks and query results.

Architecture components covered in this section include: Storage Account, Serverless Compute, Unity Catalog, and Workspace Settings.

Why Is This Requirement Important?

As organizations adopt more SaaS tools, data requests increasingly originate outside traditional cloud networks. These requests may involve cloud object stores, databases, or streaming platforms, each creating potential avenues for exfiltration. To reduce this risk, access must be consistently enforced through approved governance layers and restricted to sanctioned data tooling, ensuring data is used within controlled environments.

| Architecture Component: | Risk: | Control: | Priority to Implement: | Documentation: |
|---|---|---|---|---|
| Storage Account | Users may attempt to access cloud provider storage accounts through compute not governed by Unity Catalog. | Implement firewalls or bucket policies on storage accounts to accept traffic only from approved sources. | HIGH | AWS, Azure, GCP |
| Unity Catalog | Users may attempt to read and write data across environments (e.g., a development workspace reading production data). | Implement workspace bindings for catalogs. | HIGH | AWS, Azure, GCP |
| Serverless Compute | Users may need serverless compute to reach cloud resources, forcing administrators to expose internal services to broader network access than intended. | Implement private endpoint rules in the Network Connectivity Configuration object. | MEDIUM | AWS, Azure; GCP: not currently available |
| Workspace Settings | Users may attempt to download notebook results to their local machine. | Disable Notebook results download in the workspace admin security settings. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download volume files to their local machine. | Disable Volume Files Download in the workspace admin security settings. | LOW | Documentation not available. The toggle is in the workspace admin security settings under egress and ingress. |
| Workspace Settings | Users may attempt to export notebooks or files from the workspace to their local machine. | Disable Notebook and File exporting in the workspace admin security settings. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download SQL results to their local machine. | Disable SQL results download in the workspace admin security settings. | LOW | AWS, Azure, GCP |
| Workspace Settings | Users may attempt to download MLflow run artifacts to their local machine. | Disable MLflow run artifact download in the workspace admin security settings. | LOW | Documentation not available. The toggle is in the workspace admin security settings under egress and ingress. |
| Workspace Settings | Users may attempt to copy tabular data to their clipboard through the UI. | Disable the Results table clipboard feature in the workspace admin security settings. | LOW | AWS, Azure, GCP |
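
As an AWS-flavored illustration of the Storage Account control, the Python sketch below builds an S3 bucket policy that denies all S3 actions on a bucket unless the request arrives through an approved VPC endpoint, using the `aws:SourceVpce` global condition key. The function name, bucket name, and endpoint ID are hypothetical. Treat it as a starting point only: a deny this broad also blocks administrators and any other trusted path that does not use the listed endpoints, so a production policy needs carve-outs that follow the Databricks documentation for your deployment. Azure and GCP rely on different mechanisms, such as storage account firewalls and VPC Service Controls.

```python
import json


def restrict_bucket_to_vpce(bucket, vpce_ids):
    """Build an S3 bucket policy denying access outside approved VPC endpoints.

    Illustrative only: real policies usually need extra allowances for
    administrators and other trusted sources.
    """
    if not vpce_ids:
        raise ValueError("at least one approved VPC endpoint ID is required")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAccessOutsideApprovedVpce",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # bucket-level actions (e.g. list)
                    f"arn:aws:s3:::{bucket}/*",  # object-level actions
                ],
                "Condition": {
                    # StringNotEquals with a list is true when the request's
                    # endpoint matches none of the IDs (or there is no endpoint),
                    # so the Deny applies to every other access path.
                    "StringNotEquals": {"aws:SourceVpce": list(vpce_ids)}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and VPC endpoint ID.
    policy = restrict_bucket_to_vpce("example-uc-bucket", ["vpce-0123456789abcdef0"])
    print(json.dumps(policy, indent=2))
```

Using an explicit Deny rather than an Allow-only policy means the restriction still holds even if an IAM policy elsewhere grants broader S3 access.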

Proactive Data Exfiltration Monitoring:

While the three core enterprise requirements let us establish the preventive controls necessary to secure your Databricks Data Intelligence Platform, monitoring provides the detection capabilities needed to validate that these controls are functioning as intended. Even with robust authentication, restricted compute access, and secured storage, you'll need visibility into user behaviors that could indicate attempts to circumvent your established controls.

Databricks offers comprehensive system tables for access control monitoring [AWS, Azure, GCP]. Using these system tables, customers can set up alerts based on potentially suspicious activities to augment existing controls on the workspace.

For out-of-the-box queries that can drive actionable insights, visit this blog post: Improve Lakehouse Security Monitoring using System Tables in Databricks Unity Catalog. Cloud-specific logs [AWS, Azure, GCP] can be ingested and analyzed to augment the data from Databricks system tables.
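
Detection rules built on system tables often follow a simple pattern: count sensitive events per user over a recent window and alert above a threshold. The Python sketch below shows that pattern over in-memory records. The record shape (`user`, `action`, `time`), the action names, the function name, and the default threshold are hypothetical placeholders; in practice you would express the same logic as a query over the audit log system table (`system.access.audit`) and schedule it as an alert, mapping the placeholders to the action names that appear in your own audit data.

```python
from collections import Counter
from datetime import datetime, timedelta

# Placeholder action names standing in for download/export events. Replace them
# with the action names that appear in your own audit data.
WATCHED_ACTIONS = {"download_results", "export_notebook"}


def flag_heavy_exporters(events, now, window=timedelta(hours=1), threshold=20):
    """Return (user, count) pairs for users whose watched-action count in the
    window ending at `now` exceeds `threshold`, highest count first."""
    start = now - window
    counts = Counter(
        e["user"]
        for e in events
        if e["action"] in WATCHED_ACTIONS and start <= e["time"] <= now
    )
    return [(user, n) for user, n in counts.most_common() if n > threshold]


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0)
    events = [
        {"user": "alice@example.com", "action": "download_results",
         "time": now - timedelta(minutes=m)}
        for m in range(25)
    ] + [
        {"user": "bob@example.com", "action": "export_notebook",
         "time": now - timedelta(minutes=5)},
    ]
    print(flag_heavy_exporters(events, now))  # [('alice@example.com', 25)]
```

A fixed threshold is the simplest starting point; comparing each user's count against their own historical baseline usually produces fewer false positives once enough audit history is available.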

Conclusion:

With the risks and controls for each of the framework's three security requirements covered, you now have a unified approach to mitigating data exfiltration in your Databricks deployment.

Preventing unauthorized data movement is an ongoing job, but this framework gives your users a foundation to develop and innovate while protecting one of your company's most important assets: your data.

To continue the journey of securing your Data Intelligence Platform, we highly recommend visiting the Security and Trust Center for a holistic view of Security Best Practices on Databricks.

- The Best Practice guides provide a detailed overview of the main security controls we recommend for typical and highly secure environments.

- The Security Reference Architecture - Terraform Templates make it easy to automatically create Databricks environments that follow the best practices outlined in this blog.

- The Security Analysis Tool continuously monitors the security posture of your Databricks Data Intelligence Platform in accordance with best practices.